<!DOCTYPE html>

<html lang='ja'>

<head>

<title>Recording to WebSocket</title>

<meta http-equiv='Content-Type' content='text/html; charset=utf-8' />

<meta http-equiv="Pragma" content="no-cache">

<meta http-equiv="Cache-Control" content="no-cache">

</head>

<body>

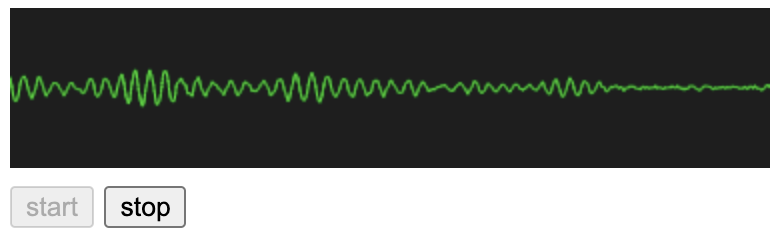

<canvas id="canvas" width="380" height="80"></canvas>

<div>

<button class='start'>start</button>

<button class='stop' disabled>stop</button>

</div>

<script>

const canvas = document.getElementById('canvas');

const _CanvasRenderingContext2D = canvas.getContext('2d');

const bufferSize = 0 // 0 lets the browser choose; otherwise one of 256, 512, 1024, 2048, 4096, 8192, 16384

const numberOfInputChannels = 1

const numberOfOutputChannels = 1

const startButton = document.querySelector(".start")

const stopButton = document.querySelector(".stop")
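
// Sketch (hypothetical helpers, not called by this page): createScriptProcessor()
// only accepts a bufferSize of 0 (let the browser choose) or a power of two
// from 256 to 16384, and throws an IndexSizeError for anything else.
// The buffer size also sets how often onaudioprocess, and therefore
// visualize(), runs: once every bufferSize / sampleRate seconds.

function isValidScriptProcessorBufferSize(size) {

if (size === 0) return true

// A power of two has exactly one bit set, so size & (size - 1) is 0

return Number.isInteger(size) && size >= 256 && size <= 16384 && (size & (size - 1)) === 0

}

function audioProcessIntervalMs(size, sampleRate) {

// With bufferSize 0, pass the size the browser picked (_ScriptProcessorNode.bufferSize)

return (size / sampleRate) * 1000

}

// e.g. audioProcessIntervalMs(4096, 48000) is about 85.3 ms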

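// navigator.getUserMedia and its webkit/moz-prefixed variants are deprecated and never called here; the page uses navigator.mediaDevices.getUserMedia, so check for that instead

if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia)

startButton.disabled = true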

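var _MediaStream

var _AudioContext

var _MediaStreamAudioSourceNode

var _ScriptProcessorNode

var _AnalyserNode

// ---------------------------------------------

// Start Button

// ---------------------------------------------

startButton.onclick = () => {

startButton.disabled = true

// The stop button stays disabled until the microphone stream is available

stopButton.disabled = true

// Create the AudioContext inside the click handler (a user gesture); it is closed again when recording stops

_AudioContext = new (window.AudioContext || window.webkitAudioContext)();

// ##############################################

// # Recording

// ##############################################

navigator.mediaDevices.getUserMedia({ audio: true, video: false }).then((stream) => {

console.log('start recording')

// getUserMedia() resolves with a MediaStream

_MediaStream = stream

_MediaStreamAudioSourceNode = _AudioContext.createMediaStreamSource(stream)

// Create the analyser before the processor so it already exists when onaudioprocess first fires

_AnalyserNode = _AudioContext.createAnalyser();

//_AnalyserNode.fftSize = 2048; // 2048 is the default

_MediaStreamAudioSourceNode.connect(_AnalyserNode);

_CanvasRenderingContext2D.fillStyle = "rgb(30, 30, 30)";

_CanvasRenderingContext2D.lineWidth = 1;

_CanvasRenderingContext2D.strokeStyle = "rgb(0, 250, 30)";

// Reuse one buffer for every frame instead of allocating a new one per callback

const dataArray = new Uint8Array(_AnalyserNode.fftSize);

// ScriptProcessorNode is deprecated in favour of AudioWorkletNode; here it only serves as a periodic trigger for redrawing

_ScriptProcessorNode = _AudioContext.createScriptProcessor(bufferSize, numberOfInputChannels, numberOfOutputChannels)

_ScriptProcessorNode.onaudioprocess = (_AudioProcessingEvent) => {

_AnalyserNode.getByteTimeDomainData(dataArray);

visualize(dataArray, _AnalyserNode.fftSize);

}

_MediaStreamAudioSourceNode.connect(_ScriptProcessorNode)

// Some browsers (notably Chrome) only fire onaudioprocess while the node is connected to a destination; the handler never writes to outputBuffer

_ScriptProcessorNode.connect(_AudioContext.destination)

stopButton.disabled = false

}).catch((err) => {

// e.g. permission denied or no microphone: release the context and allow another attempt

console.error(err);

_AudioContext.close()

startButton.disabled = false

})

}

// ---------------------------------------------

// Stop Button

// ---------------------------------------------

stopButton.onclick = () => {

if (!startButton.disabled)

return

startButton.disabled = false

stopButton.disabled = !startButton.disabled

// Release the microphone

_MediaStream.getTracks().forEach((_MediaStreamTrack) => {

_MediaStreamTrack.stop()

})

// Detach the audio graph and close the context so repeated start/stop cycles do not leak AudioContexts

_ScriptProcessorNode.onaudioprocess = null

_ScriptProcessorNode.disconnect()

_MediaStreamAudioSourceNode.disconnect()

_AudioContext.close()

console.log('stop recording')

}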

// ---------------------------------------------

// Audio Visualizer

// ---------------------------------------------

function visualize(dataArray, bufferLength) {

_CanvasRenderingContext2D.fillRect(0, 0, canvas.width, canvas.height);

_CanvasRenderingContext2D.beginPath();

var sliceWidth = (canvas.width * 1.0) / bufferLength;

var x = 0;

for (var i = 0; i < bufferLength; i++) {

var v = dataArray[i] / 128.0;

var y = (v * canvas.height) / 2;

i === 0 ? _CanvasRenderingContext2D.moveTo(x, y) : _CanvasRenderingContext2D.lineTo(x, y);

x += sliceWidth;

}

_CanvasRenderingContext2D.lineTo(canvas.width, canvas.height / 2);

_CanvasRenderingContext2D.stroke();

}
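
// Sketch (hypothetical helper, not called by this page): the same
// sample-to-pixel mapping visualize() uses, as a pure function that can be
// checked without a canvas. getByteTimeDomainData() writes unsigned bytes
// (0..255) where 128 means silence, so v = sample / 128 is 1.0 at silence
// and y = v * height / 2 falls on the canvas's horizontal centre line.

function waveformPoints(dataArray, width, height) {

const sliceWidth = width / dataArray.length

const points = []

for (let i = 0; i < dataArray.length; i++) {

const v = dataArray[i] / 128.0

points.push({ x: i * sliceWidth, y: (v * height) / 2 })

}

return points

}

// e.g. waveformPoints(Uint8Array.of(128, 0, 255, 128), 380, 80)[0] is { x: 0, y: 40 }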

</script>

</body>

</html>

More than 5 years have passed since last update.
