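Partly as a personal memo, here are scripts for deep learning with Keras on the MNIST handwritten digits.

They were run on Google Colaboratory. The MNIST loader `tensorflow.examples.tutorials.mnist` used below is part of TensorFlow 1.x and is not included in TensorFlow 2.x.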

Data preparation

from tensorflow.examples.tutorials.mnist import input_data
from sklearn.model_selection import train_test_split
import numpy as np

# Download MNIST; each image is a flat vector of 784 floats scaled to [0, 1]
mnist = input_data.read_data_sets("MNIST_data/")
X_train = mnist.train.images
Y_train = mnist.train.labels  # integer labels 0-9
X_test = mnist.test.images
Y_test = mnist.test.labels

# Hold out 4,000 of the training images as a validation set
X_train, X_validation, Y_train, Y_validation = \
    train_test_split(X_train, Y_train, test_size=4000)

print(X_train.shape)
print(Y_train.shape)
print(X_test.shape)
print(Y_test.shape)
print(X_validation.shape)
print(Y_validation.shape)

(51000, 784)
(51000,)
(10000, 784)
(10000,)
(4000, 784)
(4000,)
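The shapes follow from the 55,000-image training split of `input_data` minus the 4,000 images held out for validation. `train_test_split` shuffles before splitting by default; the sketch below reproduces that idea with a random permutation. `holdout_split` is a hypothetical helper, not scikit-learn's implementation, and the data is a stand-in.

```python
import numpy as np

def holdout_split(X, y, n_val, seed=0):
    """Shuffle sample indices, then carve off n_val samples for validation.

    Hypothetical helper sketching the idea behind
    train_test_split(X, y, test_size=n_val); not scikit-learn's code.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return X[train_idx], X[val_idx], y[train_idx], y[val_idx]

# Stand-in data: each "image" is just its own index, to keep memory small
X = np.arange(55000).reshape(-1, 1)
y = np.arange(55000) % 10
X_tr, X_val, y_tr, y_val = holdout_split(X, y, n_val=4000)
print(X_tr.shape, X_val.shape)  # (51000, 1) (4000, 1)
```

Because the same shuffled indices are applied to `X` and `y`, every label stays attached to its image.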

%matplotlib inline
import matplotlib.pyplot as plt

# Show the first training image: reshape the flat 784-vector back to 28x28
plt.imshow(np.array(X_train[0], dtype=np.float32).reshape(28, 28), cmap='gray')
print('The label of this image is {:d}.'.format(Y_train[0]))

The label of this image is 3.

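Converting to 1-of-K (one-hot) representation

# Row k of the 10x10 identity matrix is the one-hot vector for label k
Y_train = np.eye(10)[Y_train.astype(int)]
Y_test = np.eye(10)[Y_test.astype(int)]
Y_validation = np.eye(10)[Y_validation.astype(int)]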
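The `np.eye` trick selects rows of the identity matrix, and the later prediction cells use `np.argmax` to go back to integer labels. A small sketch with made-up labels:

```python
import numpy as np

labels = np.array([3, 0, 9, 3], dtype=np.uint8)  # example integer labels

# Indexing the rows of the 10x10 identity matrix by label yields one-hot rows
one_hot = np.eye(10)[labels.astype(int)]
print(one_hot[0])  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

# argmax along each row recovers the integer label
recovered = np.argmax(one_hot, axis=1)
print(recovered)  # [3 0 9 3]
```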

Deep learning with a neural network

from keras.models import Sequential
from keras.layers import Dense, Activation
from keras.optimizers import SGD, Adam
from keras.callbacks import EarlyStopping
from keras import backend

Model definition

n_in = len(X_train[0])   # 784 input pixels
n_hidden = 200
n_out = len(Y_train[0])  # 10 classes

# Two fully connected hidden layers with ReLU, followed by a softmax output layer
model = Sequential()
model.add(Dense(n_hidden, input_dim=n_in))
model.add(Activation('relu'))
model.add(Dense(n_hidden))
model.add(Activation('relu'))
model.add(Dense(n_out))
model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer=SGD(lr=0.01),
              metrics=['accuracy'])
model.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 200)               157000
_________________________________________________________________
activation_1 (Activation)    (None, 200)               0
_________________________________________________________________
dense_2 (Dense)              (None, 200)               40200
_________________________________________________________________
activation_2 (Activation)    (None, 200)               0
_________________________________________________________________
dense_3 (Dense)              (None, 10)                2010
_________________________________________________________________
activation_3 (Activation)    (None, 10)                0
=================================================================
Total params: 199,210
Trainable params: 199,210
Non-trainable params: 0
_________________________________________________________________
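Each `Dense` layer stores an `n_inputs x n_units` weight matrix plus one bias per unit, and `Activation` layers have no parameters, which accounts for every number in this summary. A quick check in plain Python (`dense_params` is a hypothetical helper):

```python
def dense_params(n_inputs, n_units):
    # Weight matrix (n_inputs x n_units) plus one bias per unit
    return n_inputs * n_units + n_units

# (inputs, units) for dense_1, dense_2 and dense_3 in the summary
layers = [(784, 200), (200, 200), (200, 10)]
counts = [dense_params(i, o) for i, o in layers]
print(counts)       # [157000, 40200, 2010]
print(sum(counts))  # 199210
```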

Model training

epochs = 300
batch_size = 100

# Stop once val_loss has not improved for 10 consecutive epochs
early_stopping = EarlyStopping(monitor='val_loss', patience=10, verbose=1)

hist = model.fit(X_train, Y_train, epochs=epochs,
                 batch_size=batch_size,
                 validation_data=(X_validation, Y_validation),
                 callbacks=[early_stopping])

Train on 51000 samples, validate on 4000 samples
Epoch 1/300
51000/51000 [==============================] - 4s 69us/step - loss: 1.2016 - acc: 0.7145 - val_loss: 0.5900 - val_acc: 0.8497
Epoch 2/300
51000/51000 [==============================] - 3s 51us/step - loss: 0.4635 - acc: 0.8798 - val_loss: 0.4182 - val_acc: 0.8802
Epoch 3/300
51000/51000 [==============================] - 3s 50us/step - loss: 0.3651 - acc: 0.8995 - val_loss: 0.3602 - val_acc: 0.8980
...
Epoch 125/300
51000/51000 [==============================] - 3s 50us/step - loss: 0.0190 - acc: 0.9969 - val_loss: 0.0953 - val_acc: 0.9745
Epoch 126/300
51000/51000 [==============================] - 3s 49us/step - loss: 0.0187 - acc: 0.9971 - val_loss: 0.0946 - val_acc: 0.9740
Epoch 127/300
51000/51000 [==============================] - 3s 50us/step - loss: 0.0185 - acc: 0.9971 - val_loss: 0.0949 - val_acc: 0.9745
Epoch 00127: early stopping
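`EarlyStopping(monitor='val_loss', patience=10)` ended this run at epoch 127. The rule can be sketched in plain Python; `early_stopping_epoch` is a hypothetical helper and a simplification of the Keras callback (it ignores options such as `mode` and `baseline`). Unless `restore_best_weights=True` is passed (available in newer Keras versions), the model keeps the weights of the last epoch, not the best one.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None.

    Hypothetical helper: a simplified version of the rule behind
    EarlyStopping(monitor='val_loss', patience=10), not Keras's own code.
    """
    best = float('inf')
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Toy curve: improves for 5 epochs, then plateaus slightly above the best value
losses = [0.5, 0.4, 0.3, 0.25, 0.2] + [0.21] * 12
print(early_stopping_epoch(losses))  # 15
```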

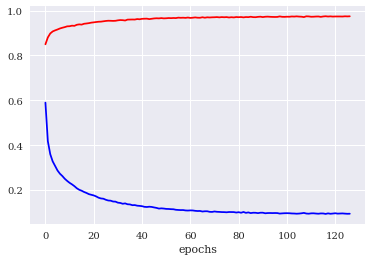

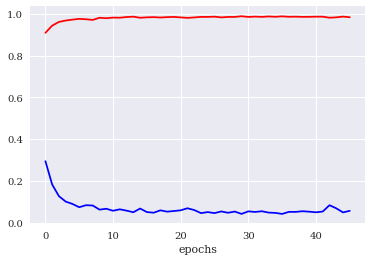

Learning curve

# Validation loss and validation accuracy per epoch
acc = hist.history['val_acc']
loss = hist.history['val_loss']

plt.rc('font', family='serif')
fig = plt.figure()
plt.plot(range(len(loss)), loss, label='val_loss', color='blue')
plt.plot(range(len(acc)), acc, label='val_acc', color='red')
plt.xlabel('epochs')
plt.legend()  # display the labels passed to plt.plot
plt.show()
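This cell reads `hist.history['val_acc']`, matching the `acc`/`val_acc` names in the training log above. In newer versions (tf.keras in TensorFlow 2.x) the same metric is recorded as `'val_accuracy'`, so that lookup raises a `KeyError` there. A hypothetical helper that accepts either spelling, shown on toy dictionaries:

```python
def get_metric(history, name):
    """Return history[name], also trying the other accuracy spelling.

    Hypothetical helper: older standalone Keras records 'acc'/'val_acc',
    while tf.keras 2.x records 'accuracy'/'val_accuracy'.
    """
    if name in history:
        return history[name]
    swapped = {'acc': 'accuracy', 'val_acc': 'val_accuracy',
               'accuracy': 'acc', 'val_accuracy': 'val_acc'}.get(name)
    if swapped in history:
        return history[swapped]
    raise KeyError(name)

old_style = {'val_loss': [0.5, 0.4], 'val_acc': [0.8, 0.9]}       # toy values
new_style = {'val_loss': [0.5, 0.4], 'val_accuracy': [0.8, 0.9]}  # toy values
print(get_metric(old_style, 'val_acc'))  # [0.8, 0.9]
print(get_metric(new_style, 'val_acc'))  # [0.8, 0.9]
```

With it, the first line of the cell would become `acc = get_metric(hist.history, 'val_acc')`.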

Evaluating prediction accuracy

# Returns [loss, accuracy] on the 10,000 test images
loss_and_metrics = model.evaluate(X_test, Y_test)
print(loss_and_metrics)

10000/10000 [==============================] - 1s 66us/step
[0.07168153105271048, 0.9778]
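`evaluate` returns the loss followed by each metric, so `[0.0717, 0.9778]` is a test loss of about 0.072 and a test accuracy of 97.78%. Both quantities can be computed from predicted probabilities; the numpy sketch below does so on a toy batch. It clips predictions with an epsilon of 1e-7, similar to the Keras default `K.epsilon()`, but it is not Keras's own implementation.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over samples of -sum_k y_true[k] * log(y_pred[k]); clipping avoids log(0)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

def categorical_accuracy(y_true, y_pred):
    # Fraction of samples whose most probable class equals the one-hot label
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))

y_true = np.eye(3)[[0, 1, 2, 1]]        # toy one-hot labels, 3 classes
y_pred = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.7, 0.1],
                   [0.3, 0.4, 0.3],     # true class 2, predicted class 1
                   [0.1, 0.8, 0.1]])
print(categorical_accuracy(y_true, y_pred))  # 0.75
print(categorical_crossentropy(y_true, y_pred))
```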

plt.imshow(np.array(X_train[0], dtype=np.float32).reshape(28, 28), cmap='gray')
# Y_train is now one-hot, so argmax recovers the integer label
print('The label of this image is {:d}.'.format(np.argmax(Y_train[0])))

# Class probabilities predicted for the first training image
predict = model.predict(X_train[0:1], batch_size=1)
np.set_printoptions(precision=20, floatmode='fixed', suppress=True)
predict

The label of this image is 3.
array([[0.00000000788268739171, 0.00000000027212274101,
        0.00000182624910394225, 0.99998569488525390625,
        0.00000000000230626889, 0.00000038970770788183,
        0.00000000000011150467, 0.00000000041174075260,
        0.00000760536704547121, 0.00000458146223536460]], dtype=float32)
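The output layer's softmax turns ten scores into probabilities that sum to 1, which is why `predict` puts almost all of the mass (0.99998...) on class 3. A numpy sketch of softmax and of reading off the predicted class with `argmax`; the scores are invented for illustration.

```python
import numpy as np

def softmax(z):
    # Subtracting the row maximum first avoids overflow in np.exp
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)

# Made-up pre-softmax scores for the 10 digit classes of one image
logits = np.array([[1.0, 2.0, 0.5, 6.0, 0.0, 1.5, -1.0, 0.2, 2.5, 2.0]])
probs = softmax(logits)
print(probs.shape)               # (1, 10)
print(np.argmax(probs, axis=1))  # [3]
```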

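Convolutional neural network

# Conv2D (default channels_last format) expects (samples, height, width, channels);
# MNIST has a single grayscale channel
X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)
X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)
X_validation = X_validation.reshape(X_validation.shape[0], 28, 28, 1)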
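The reshape only adds structure; no pixel moves. Each flat vector stores the image row by row, and the default `channels_last` layout puts the single grayscale channel last. A small numpy check on fake data:

```python
import numpy as np

flat = np.arange(2 * 784, dtype=np.float32).reshape(2, 784)  # two fake flattened images
images = flat.reshape(flat.shape[0], 28, 28, 1)              # (samples, rows, cols, channels)
print(images.shape)  # (2, 28, 28, 1)

# Row-major order: pixel (r, c) of image i comes from flat index r * 28 + c
i, r, c = 1, 5, 17
print(images[i, r, c, 0] == flat[i, r * 28 + c])  # True
```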

Model definition

from keras.layers import Conv2D, MaxPooling2D, Flatten

# Three 3x3 convolution layers (the first two followed by 2x2 max pooling),
# then a small fully connected classifier
model = Sequential()
model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))
model.add(MaxPooling2D((2, 2)))
model.add(Conv2D(64, (3, 3), activation='relu'))
model.add(MaxPooling2D((2, 2)))
model.add(Conv2D(64, (3, 3), activation='relu'))
model.add(Flatten())
model.add(Dense(64, activation='relu'))
model.add(Dense(10, activation='softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer=SGD(lr=0.01),
              metrics=['accuracy'])
model.summary()

Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 26, 26, 32)        320
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 13, 13, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 11, 11, 64)        18496
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 5, 5, 64)          0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 3, 3, 64)          36928
_________________________________________________________________
flatten_1 (Flatten)          (None, 576)               0
_________________________________________________________________
dense_4 (Dense)              (None, 64)                36928
_________________________________________________________________
dense_5 (Dense)              (None, 10)                650
=================================================================
Total params: 93,322
Trainable params: 93,322
Non-trainable params: 0
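The shapes and parameter counts in this summary can be reproduced by hand: a 3x3 convolution with the default `'valid'` padding shrinks each side by 2, 2x2 max pooling halves it (rounding down), and a Conv2D layer has `3*3*in_channels*filters + filters` parameters. The helper names below are hypothetical.

```python
def conv_out(size, kernel):
    # 'valid' padding, stride 1: the kernel must fit entirely inside the input
    return size - kernel + 1

def pool_out(size, pool):
    # Pooling with strides equal to the pool size and 'valid' padding floors the division
    return size // pool

def conv_params(kernel, in_ch, filters):
    # One kernel x kernel x in_ch filter per output channel, plus one bias each
    return kernel * kernel * in_ch * filters + filters

s = conv_out(28, 3)   # 26
s = pool_out(s, 2)    # 13
s = conv_out(s, 3)    # 11
s = pool_out(s, 2)    # 5
s = conv_out(s, 3)    # 3
n_flat = s * s * 64   # 576 values after Flatten

params = [conv_params(3, 1, 32), conv_params(3, 32, 64), conv_params(3, 64, 64),
          n_flat * 64 + 64,   # dense_4
          64 * 10 + 10]       # dense_5
print(params)       # [320, 18496, 36928, 36928, 650]
print(sum(params))  # 93322
```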

Model training

epochs = 300
batch_size = 100

# Stop once val_loss has not improved for 10 consecutive epochs
early_stopping = EarlyStopping(monitor='val_loss', patience=10, verbose=1)

hist = model.fit(X_train, Y_train, epochs=epochs,
                 batch_size=batch_size,
                 validation_data=(X_validation, Y_validation),
                 callbacks=[early_stopping])

Train on 51000 samples, validate on 4000 samples
Epoch 1/300
51000/51000 [==============================] - 7s 139us/step - loss: 1.0894 - acc: 0.6954 - val_loss: 0.4037 - val_acc: 0.8715
Epoch 2/300
51000/51000 [==============================] - 6s 123us/step - loss: 0.3256 - acc: 0.9009 - val_loss: 0.2859 - val_acc: 0.9105
Epoch 3/300
51000/51000 [==============================] - 6s 124us/step - loss: 0.2253 - acc: 0.9322 - val_loss: 0.2033 - val_acc: 0.9378
...
Epoch 52/300
51000/51000 [==============================] - 6s 123us/step - loss: 0.0170 - acc: 0.9950 - val_loss: 0.0423 - val_acc: 0.9865
Epoch 53/300
51000/51000 [==============================] - 6s 122us/step - loss: 0.0178 - acc: 0.9943 - val_loss: 0.0444 - val_acc: 0.9853
Epoch 54/300
51000/51000 [==============================] - 6s 122us/step - loss: 0.0161 - acc: 0.9953 - val_loss: 0.0383 - val_acc: 0.9885
Epoch 00054: early stopping

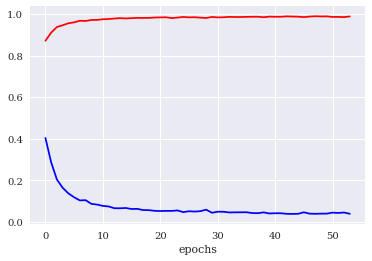

Learning curve

# Validation loss and validation accuracy per epoch
acc = hist.history['val_acc']
loss = hist.history['val_loss']

plt.rc('font', family='serif')
fig = plt.figure()
plt.plot(range(len(loss)), loss, label='val_loss', color='blue')
plt.plot(range(len(acc)), acc, label='val_acc', color='red')
plt.xlabel('epochs')
plt.legend()  # display the labels passed to plt.plot
plt.show()

Evaluating prediction accuracy

# Returns [loss, accuracy] on the 10,000 test images
loss_and_metrics = model.evaluate(X_test, Y_test)
print(loss_and_metrics)

10000/10000 [==============================] - 1s 107us/step
[0.037091853324585825, 0.9879]

plt.imshow(np.array(X_train[0], dtype=np.float32).reshape(28, 28), cmap='gray')
# Y_train is one-hot, so argmax recovers the integer label
print('The label of this image is {:d}.'.format(np.argmax(Y_train[0])))

# Class probabilities predicted for the first training image
predict = model.predict(X_train[0:1], batch_size=1)
np.set_printoptions(precision=20, floatmode='fixed', suppress=True)
predict

The label of this image is 3.
array([[0.00000000000000142575, 0.00000000000000055516,
        0.00003081574323005043, 0.99996912479400634766,
        0.00000000000000000163, 0.00000000003253877723,
        0.00000000000000000003, 0.00000000000156541327,
        0.00000002436952506457, 0.00000000206656181057]], dtype=float32)

Recurrent neural network

Data preparation

# Reload the flat images and treat each one as a sequence of 28 rows (time steps)
# of 28 pixels (features)
X_train = mnist.train.images
Y_train = mnist.train.labels
X_test = mnist.test.images
Y_test = mnist.test.labels
X_train = X_train.reshape(X_train.shape[0], 28, 28)
X_test = X_test.reshape(X_test.shape[0], 28, 28)

X_train, X_validation, Y_train, Y_validation = \
    train_test_split(X_train, Y_train, test_size=4000)
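Reshaping to `(samples, 28, 28)` turns each image into a sequence: with `input_shape=(n_time, n_in)` the recurrent layer reads 28 time steps of 28 features, one image row per step, top to bottom. A numpy sketch of that view on a fake image:

```python
import numpy as np

n_time, n_in = 28, 28
image = np.arange(n_time * n_in, dtype=np.float32)  # fake flattened image
sequence = image.reshape(1, n_time, n_in)           # (batch, time steps, features)

# The recurrent layer sees one 28-pixel row per time step
steps = [sequence[:, t, :] for t in range(n_time)]
print(len(steps), steps[0].shape)  # 28 (1, 28)

# Time step t holds pixels t*28 ... t*28 + 27 of the flat vector
print(np.array_equal(steps[5][0], image[5 * n_in:6 * n_in]))  # True
```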

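Converting to 1-of-K (one-hot) representation

# Row k of the 10x10 identity matrix is the one-hot vector for label k
Y_train = np.eye(10)[Y_train.astype(int)]
Y_test = np.eye(10)[Y_test.astype(int)]
Y_validation = np.eye(10)[Y_validation.astype(int)]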

Model definition

from keras.layers import Bidirectional, LSTM

n_in = 28      # features per time step (pixels in one row)
n_time = 28    # time steps (rows per image)
n_hidden = 128
n_out = 10

def weight_variable(shape, dtype=None):
    # Custom kernel initializer: zero-mean Gaussian with standard deviation 0.01.
    # Keras passes the weight shape (and, in some versions, a dtype keyword).
    return np.random.normal(scale=.01, size=shape)

model = Sequential()
model.add(Bidirectional(LSTM(n_hidden), input_shape=(n_time, n_in)))
model.add(Dense(n_out, kernel_initializer=weight_variable))
model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer=Adam(lr=0.001, beta_1=0.9, beta_2=0.999),
              metrics=['accuracy'])
model.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
bidirectional_1 (Bidirection (None, 256)               160768
_________________________________________________________________
dense_6 (Dense)              (None, 10)                2570
_________________________________________________________________
activation_4 (Activation)    (None, 10)                0
=================================================================
Total params: 163,338
Trainable params: 163,338
Non-trainable params: 0
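Each direction of the `Bidirectional` wrapper holds its own LSTM, and an LSTM has four gates, each with an input kernel, a recurrent kernel and a bias. With the default `merge_mode='concat'` the two 128-unit outputs are joined into the 256-dimensional vector shown in the summary. A sketch that reproduces the counts (`lstm_params` is a hypothetical helper):

```python
def lstm_params(n_in, n_hidden):
    # 4 gates (input, forget, cell candidate, output), each with an input kernel
    # (n_in x n_hidden), a recurrent kernel (n_hidden x n_hidden) and a bias (n_hidden)
    return 4 * (n_in * n_hidden + n_hidden * n_hidden + n_hidden)

n_in, n_hidden, n_out = 28, 128, 10
bi_params = 2 * lstm_params(n_in, n_hidden)   # forward and backward LSTMs
dense_params = 2 * n_hidden * n_out + n_out   # concatenated 256-dim input -> 10 units
print(bi_params, dense_params, bi_params + dense_params)  # 160768 2570 163338
```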

Model training

epochs = 300
batch_size = 250

# Stop once val_loss has not improved for 10 consecutive epochs
early_stopping = EarlyStopping(monitor='val_loss', patience=10, verbose=1)

hist = model.fit(X_train, Y_train,
                 batch_size=batch_size,
                 epochs=epochs,
                 validation_data=(X_validation, Y_validation),
                 callbacks=[early_stopping])

Train on 51000 samples, validate on 4000 samples
Epoch 1/300
51000/51000 [==============================] - 27s 538us/step - loss: 0.8864 - acc: 0.6984 - val_loss: 0.2940 - val_acc: 0.9095
Epoch 2/300
51000/51000 [==============================] - 26s 516us/step - loss: 0.2314 - acc: 0.9294 - val_loss: 0.1822 - val_acc: 0.9440
Epoch 3/300
51000/51000 [==============================] - 26s 517us/step - loss: 0.1437 - acc: 0.9565 - val_loss: 0.1262 - val_acc: 0.9617
...
Epoch 44/300
51000/51000 [==============================] - 26s 516us/step - loss: 0.0116 - acc: 0.9964 - val_loss: 0.0681 - val_acc: 0.9837
Epoch 45/300
51000/51000 [==============================] - 26s 515us/step - loss: 0.0089 - acc: 0.9971 - val_loss: 0.0486 - val_acc: 0.9872
Epoch 46/300
51000/51000 [==============================] - 26s 511us/step - loss: 0.0062 - acc: 0.9983 - val_loss: 0.0561 - val_acc: 0.9842
Epoch 00046: early stopping

Learning curve

# Validation loss and validation accuracy per epoch
acc = hist.history['val_acc']
loss = hist.history['val_loss']

plt.rc('font', family='serif')
fig = plt.figure()
plt.plot(range(len(loss)), loss, label='val_loss', color='blue')
plt.plot(range(len(acc)), acc, label='val_acc', color='red')
plt.xlabel('epochs')
plt.legend()  # display the labels passed to plt.plot
plt.show()

Evaluating prediction accuracy

# Returns [loss, accuracy] on the 10,000 test images
loss_and_metrics = model.evaluate(X_test, Y_test)
print(loss_and_metrics)

10000/10000 [==============================] - 11s 1ms/step
[0.07001748875972698, 0.9822]

plt.imshow(np.array(X_train[0], dtype=np.float32).reshape(28, 28), cmap='gray')
# Y_train is one-hot, so argmax recovers the integer label
print('The label of this image is {:d}.'.format(np.argmax(Y_train[0])))

# Class probabilities predicted for the first training sequence
predict = model.predict(X_train[0:1], batch_size=1)
np.set_printoptions(precision=20, floatmode='fixed', suppress=True)
predict

The label of this image is 3.
array([[0.00002549441342125647, 0.00008204823825508356,
        0.14743362367153167725, 0.85239017009735107422,
        0.00000651806931273313, 0.00003918525180779397,
        0.00000030165386988301, 0.00000143035322253127,
        0.00000095124897825372, 0.00002027293339779135]], dtype=float32)
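The RNN is noticeably less certain on this image than the other two models: about 0.852 for class 3 and 0.147 for class 2. Listing the top candidates makes this easier to see; a sketch using the probabilities printed above, rounded to four decimals (`top_k` is a hypothetical helper):

```python
import numpy as np

# RNN output printed above, rounded to 4 decimals
probs = np.array([0.0000, 0.0001, 0.1474, 0.8524, 0.0000,
                  0.0000, 0.0000, 0.0000, 0.0000, 0.0000])

def top_k(p, k=2):
    # argsort is ascending, so take the last k indices and reverse them
    idx = np.argsort(p)[-k:][::-1]
    return [(int(i), float(p[i])) for i in idx]

print(top_k(probs))  # [(3, 0.8524), (2, 0.1474)]
```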

Cleanup

# Destroy the current TensorFlow graph so old models and layers no longer take up memory
backend.clear_session()