Introduction

I tried out AutoGluon, an AutoML tool.

This post covers what I found when running the tabular dataset and sample code that AutoGluon provides.

What is AutoGluon

Overview

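AutoGluon is an open-source AutoML tool. If you prepare your data in tabular form, it automatically handles basic feature engineering, model selection, hyperparameter tuning, and ensembling.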

As of May 2, 2020, the latest release is version 0.0.6.

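Besides classification and regression on tabular (structured) data, it appears to support image classification, object detection, and text classification.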

Accuracy on tabular data (paper)

AutoGluon's accuracy is evaluated in this paper.

The paper compares AutoGluon, TPOT, H2O, AutoWEKA, auto-sklearn, and Google AutoML Tables on 50 datasets and reports that AutoGluon achieved the highest predictive accuracy.

The paper highlights multi-layer stack ensembling as a key feature of AutoGluon. See the paper for details.

Environment

I ran everything on Google Colaboratory.

Walkthrough

Setup

!pip install --upgrade mxnet

!pip install autogluon

That completes the installation.

At this stage I got errors and warnings about things like the pandas version, but in the end everything worked.

import autogluon as ag

from autogluon import TabularPrediction as task

This raised an error, but after restarting the runtime it ran without errors.

I use the same data as the tutorial.

train_data = task.Dataset(file_path='https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv')

train_data = train_data.head(10000)

print(train_data.head())

To shorten the run time, I limited the data to 10,000 rows.

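As shown below, the data has 15 columns containing a mix of numeric and string values. (The load message reports all 39,073 rows because it is printed before head(10000) is applied.)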

Loaded data from: https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv | Columns = 15 / 15 | Rows = 39073 -> 39073

age workclass fnlwgt ... hours-per-week native-country class

0 25 Private 178478 ... 40 United-States <=50K

1 23 State-gov 61743 ... 35 United-States <=50K

2 46 Private 376789 ... 15 United-States <=50K

3 55 ? 200235 ... 50 United-States >50K

4 36 Private 224541 ... 40 El-Salvador <=50K

[5 rows x 15 columns]

As in the tutorial, the target variable is class.

label_column = 'class'

print("Summary of class variable: \n", train_data[label_column].describe())

Summary of class variable:

count 10000

unique 2

top <=50K

freq 7568

Name: class, dtype: object
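The summary shows that 7568 of the 10000 rows are `<=50K`, so the classes are imbalanced. As a quick sanity check, a classifier that always predicts the majority class would already reach the accuracy computed below; that is the floor any model should beat. A minimal sketch using only the counts printed above:

```python
# Counts taken from the describe() output above
n_rows = 10000       # count
n_majority = 7568    # freq of the top class ' <=50K'

# Accuracy of a trivial model that always predicts the majority class
majority_baseline = n_majority / n_rows
print(f"majority-class baseline accuracy: {majority_baseline:.4f}")  # 0.7568
```

Against this baseline, the validation accuracy of about 0.86 in the runs below is a real improvement, while the KNN models (about 0.75 to 0.77) barely beat it.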

Training with default settings

Now let's actually build a model. All it takes is calling fit on the data above, so it is very easy.

First, I ran fit with almost all arguments left at their defaults.

%%time

dir = 'agModels-predictClass' # specifies folder where to store trained models

predictor = task.fit(train_data=train_data, label=label_column, output_directory=dir)

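AutoGluon appears to classify each of the 14 explanatory variables as numeric (int) or object and engineer features accordingly, but the log does not say what exactly it did.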

By default it uses accuracy both as the evaluation metric and for early stopping.

It trained 10 models plus a weighted ensemble in about 2 minutes, which I found very fast.

However, as stated in the API reference ( https://autogluon.mxnet.io/api/autogluon.task.html#autogluon.task.TabularPrediction.fit ), hyperparameter tuning is not performed by default.

Beginning AutoGluon training ...

AutoGluon will save models to agModels-predictClass/

Train Data Rows: 10000

Train Data Columns: 15

Preprocessing data ...

Here are the first 10 unique label values in your data: [' <=50K' ' >50K']

AutoGluon infers your prediction problem is: binary (because only two unique label-values observed)

If this is wrong, please specify `problem_type` argument in fit() instead (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Selected class <--> label mapping: class 1 = >50K, class 0 = <=50K

Feature Generator processed 10000 data points with 14 features

Original Features:

int features: 6

object features: 8

Generated Features:

int features: 0

All Features:

int features: 6

object features: 8

Data preprocessing and feature engineering runtime = 0.15s ...

AutoGluon will gauge predictive performance using evaluation metric: accuracy

To change this, specify the eval_metric argument of fit()

AutoGluon will early stop models using evaluation metric: accuracy

Fitting model: RandomForestClassifierGini ...

0.842 = Validation accuracy score

2.44s = Training runtime

0.22s = Validation runtime

Fitting model: RandomForestClassifierEntr ...

0.846 = Validation accuracy score

3.04s = Training runtime

0.22s = Validation runtime

Fitting model: ExtraTreesClassifierGini ...

0.83 = Validation accuracy score

1.93s = Training runtime

0.22s = Validation runtime

Fitting model: ExtraTreesClassifierEntr ...

0.836 = Validation accuracy score

2.25s = Training runtime

0.22s = Validation runtime

Fitting model: KNeighborsClassifierUnif ...

0.772 = Validation accuracy score

0.07s = Training runtime

0.11s = Validation runtime

Fitting model: KNeighborsClassifierDist ...

0.748 = Validation accuracy score

0.04s = Training runtime

0.11s = Validation runtime

Fitting model: LightGBMClassifier ...

0.854 = Validation accuracy score

1.21s = Training runtime

0.02s = Validation runtime

Fitting model: CatboostClassifier ...

0.864 = Validation accuracy score

8.85s = Training runtime

0.02s = Validation runtime

Fitting model: NeuralNetClassifier ...

0.854 = Validation accuracy score

93.04s = Training runtime

0.26s = Validation runtime

Fitting model: LightGBMClassifierCustom ...

0.852 = Validation accuracy score

2.2s = Training runtime

0.03s = Validation runtime

Fitting model: weighted_ensemble_k0_l1 ...

0.864 = Validation accuracy score

0.82s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 121.37s ...

CPU times: user 1min 27s, sys: 6.04 s, total: 1min 33s

Wall time: 2min 1s
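The log says "AutoGluon will early stop models using evaluation metric: accuracy". The exact stopping rule differs per model type and is not shown in the log, so the following is only a generic sketch of patience-based early stopping on a validation score, not AutoGluon's implementation. The helper `early_stop_index`, its `patience` parameter, and the score sequence are hypothetical.

```python
from typing import Sequence


def early_stop_index(val_scores: Sequence[float], patience: int) -> int:
    """Return the index of the best iteration, stopping once the validation
    score (higher is better, e.g. accuracy) has not improved for `patience`
    consecutive iterations. Hypothetical helper for illustration only."""
    best_idx = 0
    best_score = float("-inf")
    for i, score in enumerate(val_scores):
        if score > best_score:
            best_score, best_idx = score, i
        elif i - best_idx >= patience:
            break  # no improvement for `patience` iterations: stop training
    return best_idx


# Made-up validation accuracy per boosting round / epoch
scores = [0.80, 0.83, 0.85, 0.86, 0.858, 0.859, 0.857, 0.861]
print(early_stop_index(scores, patience=3))  # 3: training stops before 0.861 at index 7
```

The trade-off is visible in the toy data: a small `patience` saves time but can miss a later improvement, while a large one trains longer.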

fit_summary shows a summary of the trained models.

This time the ensemble model had the highest validation accuracy, 0.864 (tied with CatboostClassifier).

results = predictor.fit_summary()

*** Summary of fit() ***

Estimated performance of each model:

model score_val fit_time pred_time_val stack_level

10 weighted_ensemble_k0_l1 0.864 0.822482 0.002695 1

7 CatboostClassifier 0.864 8.849893 0.016495 0

8 NeuralNetClassifier 0.854 93.040479 0.263205 0

6 LightGBMClassifier 0.854 1.208874 0.018122 0

9 LightGBMClassifierCustom 0.852 2.201691 0.032273 0

1 RandomForestClassifierEntr 0.846 3.039360 0.218410 0

0 RandomForestClassifierGini 0.842 2.443804 0.224741 0

3 ExtraTreesClassifierEntr 0.836 2.247025 0.215692 0

2 ExtraTreesClassifierGini 0.830 1.930307 0.217770 0

4 KNeighborsClassifierUnif 0.772 0.072491 0.113025 0

5 KNeighborsClassifierDist 0.748 0.035974 0.112589 0

Number of models trained: 11

Types of models trained:

{'CatboostModel', 'LGBModel', 'RFModel', 'KNNModel', 'WeightedEnsembleModel', 'TabularNeuralNetModel'}

Bagging used: False

Stack-ensembling used: False

Hyperparameter-tuning used: False

User-specified hyperparameters:

{'NN': {'num_epochs': 500}, 'GBM': {'num_boost_round': 10000}, 'CAT': {'iterations': 10000}, 'RF': {'n_estimators': 300}, 'XT': {'n_estimators': 300}, 'KNN': {}, 'custom': ['GBM']}

Plot summary of models saved to file: agModels-predictClass/SummaryOfModels.html

*** End of fit() summary ***
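The top entry, `weighted_ensemble_k0_l1`, combines the base models' validation predictions with weights. As far as I understand, AutoGluon fits these weights with greedy ensemble selection (models are added one at a time, with replacement, whenever they improve the validation score), but treat that as an assumption and check the source code. The sketch below implements that technique on toy probabilities; the function names and data are hypothetical.

```python
from typing import Dict, List


def accuracy(probs: List[float], labels: List[int]) -> float:
    """Accuracy of positive-class probabilities thresholded at 0.5."""
    return sum((p >= 0.5) == bool(y) for p, y in zip(probs, labels)) / len(labels)


def greedy_ensemble(val_probs: Dict[str, List[float]], labels: List[int],
                    n_rounds: int = 10) -> Dict[str, float]:
    """Greedy ensemble selection with replacement: each round adds the model whose
    inclusion gives the best validation accuracy of the averaged probabilities.
    Returns weights = selection count / n_rounds."""
    counts = {name: 0 for name in val_probs}
    ensemble_sum = [0.0] * len(labels)  # running sum of selected models' probabilities
    for r in range(1, n_rounds + 1):
        best_name, best_score = None, -1.0
        for name, probs in val_probs.items():
            candidate = [(s + p) / r for s, p in zip(ensemble_sum, probs)]
            score = accuracy(candidate, labels)
            if score > best_score:  # ties keep the earlier model
                best_name, best_score = name, score
        counts[best_name] += 1
        ensemble_sum = [s + p for s, p in zip(ensemble_sum, val_probs[best_name])]
    return {name: c / n_rounds for name, c in counts.items()}


labels = [1, 0, 1, 0]
val_probs = {  # toy validation probabilities of the positive class
    "model_a": [0.9, 0.2, 0.7, 0.6],   # wrong on the last row
    "model_b": [0.45, 0.3, 0.8, 0.1],  # wrong on the first row
    "model_c": [0.2, 0.8, 0.3, 0.9],   # wrong everywhere
}
weights = greedy_ensemble(val_probs, labels, n_rounds=4)
blended = [sum(w * val_probs[m][i] for m, w in weights.items())
           for i in range(len(labels))]
print(weights)
print(accuracy(blended, labels))
```

Here two models that each score 0.75 combine into a blend that scores 1.0, while the useless model gets weight 0. When one model dominates, the weights can also collapse onto it, in which case the ensemble simply matches that model's score.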

Next, let's predict on the test data and compute the accuracy.

The test data is prepared the same way as in the tutorial.

test_data = task.Dataset(file_path='https://autogluon.s3.amazonaws.com/datasets/Inc/test.csv')

y_test = test_data[label_column] # values to predict

test_data_nolab = test_data.drop(labels=[label_column],axis=1) # delete label column to prove we're not cheating

print(test_data_nolab.head())

Loaded data from: https://autogluon.s3.amazonaws.com/datasets/Inc/test.csv | Columns = 15 / 15 | Rows = 9769 -> 9769

age workclass fnlwgt ... capital-loss hours-per-week native-country

0 31 Private 169085 ... 0 20 United-States

1 17 Self-emp-not-inc 226203 ... 0 45 United-States

2 47 Private 54260 ... 1887 60 United-States

3 21 Private 176262 ... 0 30 United-States

4 17 Private 241185 ... 0 20 United-States

[5 rows x 14 columns]

If training and prediction run in separate scripts, load the saved model with load.

AutoGluon also provides an API for computing evaluation metrics, so I use that.

predictor = task.load(dir) # unnecessary, just demonstrates how to load previously-trained predictor from file

y_pred = predictor.predict(test_data_nolab)

print("Predictions: ", y_pred)

perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)

Evaluation: accuracy on test data: 0.8722489507626164

Evaluations on test data:

{

"accuracy": 0.8722489507626164,

"accuracy_score": 0.8722489507626164,

"balanced_accuracy_score": 0.796036984340255,

"matthews_corrcoef": 0.6303973015503467,

"f1_score": 0.8722489507626164

}

Predictions: [' <=50K' ' <=50K' ' >50K' ... ' <=50K' ' <=50K' ' <=50K']

Detailed (per-class) classification report:

{

" <=50K": {

"precision": 0.8965605421301623,

"recall": 0.9410817339954368,

"f1-score": 0.9182818229439498,

"support": 7451

},

" >50K": {

"precision": 0.7746406570841889,

"recall": 0.6509922346850734,

"f1-score": 0.7074542897327709,

"support": 2318

},

"accuracy": 0.8722489507626164,

"macro avg": {

"precision": 0.8356005996071756,

"recall": 0.796036984340255,

"f1-score": 0.8128680563383603,

"support": 9769

},

"weighted avg": {

"precision": 0.8676312460367478,

"recall": 0.8722489507626164,

"f1-score": 0.8682564137942402,

"support": 9769

}

}

Test accuracy was 0.872. The output also lists F1 score and other metrics in one place, which I found convenient.
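The per-class report is enough to rebuild the confusion matrix: recall x support gives the correctly classified rows of each class (0.9410817... x 7451 = 7012 and 0.6509922... x 2318 = 1509). The sketch below recomputes the summary metrics from those four counts. Two observations fall out of it: `balanced_accuracy_score` is the mean of the two per-class recalls, and the reported `f1_score` equals accuracy, which is what a micro-averaged F1 gives for single-label classification. The micro-averaging is my inference from the numbers; the log does not state it.

```python
import math

# Confusion-matrix counts reconstructed from the default run's per-class report.
# Positive class = ' >50K' (support 2318), negative = ' <=50K' (support 7451).
tp = 1509          # >50K  predicted >50K   (recall 0.65099... x 2318)
fn = 2318 - tp     # >50K  predicted <=50K
tn = 7012          # <=50K predicted <=50K  (recall 0.94108... x 7451)
fp = 7451 - tn     # <=50K predicted >50K

n = tp + fn + tn + fp
accuracy = (tp + tn) / n
recall_pos = tp / (tp + fn)
recall_neg = tn / (tn + fp)
balanced_accuracy = (recall_pos + recall_neg) / 2   # mean of per-class recalls
precision_pos = tp / (tp + fp)
f1_pos = 2 * precision_pos * recall_pos / (precision_pos + recall_pos)
# Micro-averaged F1 pools TP/FP/FN over both classes; with one label per row,
# each error counts once as an FP and once as an FN, so it reduces to accuracy.
micro_f1 = 2 * (tp + tn) / (2 * (tp + tn) + (fp + fn) + (fn + fp))
mcc = (tp * tn - fp * fn) / math.sqrt(
    (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))

for name, value in [("accuracy", accuracy), ("balanced_accuracy", balanced_accuracy),
                    ("f1 (>50K)", f1_pos), ("micro f1", micro_f1), ("mcc", mcc)]:
    print(f"{name:18s} {value:.4f}")
```

The recomputed values match the log, and the per-class view makes the weak spot explicit: only about 65% of the `>50K` rows are recovered.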

With hyperparameter tuning enabled

Next, let's see how much the accuracy changes when hyperparameter tuning is enabled.

The code is based on this example.

%%time

nn_options = { # specifies non-default hyperparameter values for neural network models

'num_epochs': 10, # number of training epochs (controls training time of NN models)

'learning_rate': ag.space.Real(1e-4, 1e-2, default=5e-4, log=True), # learning rate used in training (real-valued hyperparameter searched on log-scale)

'activation': ag.space.Categorical('relu', 'softrelu', 'tanh'), # activation function used in NN (categorical hyperparameter, default = first entry)

'layers': ag.space.Categorical([100],[1000],[200,100],[300,200,100]),

# Each choice for categorical hyperparameter 'layers' corresponds to list of sizes for each NN layer to use

'dropout_prob': ag.space.Real(0.0, 0.5, default=0.1), # dropout probability (real-valued hyperparameter)

}

gbm_options = { # specifies non-default hyperparameter values for lightGBM gradient boosted trees

'num_boost_round': 100, # number of boosting rounds (controls training time of GBM models)

'num_leaves': ag.space.Int(lower=26, upper=66, default=36), # number of leaves in trees (integer hyperparameter)

}

hyperparameters = {'NN': nn_options, 'GBM': gbm_options} # hyperparameters of each model type

# If one of these keys is missing from hyperparameters dict, then no models of that type are trained.

time_limits = 2*60 # train various models for ~2 min

num_trials = 5 # try at most 5 different hyperparameter configurations for each type of model

search_strategy = 'skopt' # to tune hyperparameters using SKopt Bayesian optimization routine

dir = 'agModels-predictClass-tuned' # specifies folder where to store trained models

predictor = task.fit(

train_data = train_data, # training data

label = label_column, # target variable

tuning_data = None, # validation data used for hyperparameter tuning

output_directory = dir, # folder for models and intermediate files

time_limits=time_limits, # time limit (seconds)

num_trials=num_trials, # maximum number of hyperparameter configurations to try

hyperparameter_tune=True, # whether to tune hyperparameters (default: False)

hyperparameters=hyperparameters, # hyperparameter search spaces per model type

search_strategy=search_strategy # hyperparameter search strategy

)

Only a few hyperparameters are tuned by default for each model, so in practice you need to specify which hyperparameters to tune for each model.

See the API reference (https://autogluon.mxnet.io/api/autogluon.task.html) for details.

Following the sample, I specify parameters for the neural network and LightGBM here.
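The search spaces in the code above (`ag.space.Real(..., log=True)`, `ag.space.Real`, `ag.space.Categorical`, `ag.space.Int`) describe ranges rather than fixed values. This run used `skopt` (Bayesian optimization) as the searcher; the sketch below uses plain random search instead, only to illustrate what drawing one configuration from each kind of space means. The dictionary layout and the `sample_config` helper are hypothetical and are not AutoGluon's API.

```python
import math
import random

# Hypothetical stand-ins for the spaces in nn_options / gbm_options
# (merged into one dict for brevity; in AutoGluon they belong to different models).
search_space = {
    "learning_rate": ("real_log", 1e-4, 1e-2),
    "dropout_prob": ("real", 0.0, 0.5),
    "activation": ("categorical", ["relu", "softrelu", "tanh"]),
    "layers": ("categorical", [[100], [1000], [200, 100], [300, 200, 100]]),
    "num_leaves": ("int", 26, 66),
}


def sample_config(space, rng):
    """Draw one configuration by random search (not skopt's Bayesian optimization)."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "real_log":
            # uniform in log space: 1e-4..1e-3 is as likely as 1e-3..1e-2
            lo, hi = math.log(spec[1]), math.log(spec[2])
            config[name] = math.exp(rng.uniform(lo, hi))
        elif kind == "real":
            config[name] = rng.uniform(spec[1], spec[2])
        elif kind == "int":
            config[name] = rng.randint(spec[1], spec[2])  # randint includes both ends
        elif kind == "categorical":
            config[name] = rng.choice(spec[1])
        else:
            raise ValueError(f"unknown space type: {kind}")
    return config


rng = random.Random(0)
num_trials = 5  # same budget as in the fit() call above
for trial in range(num_trials):
    print(sample_config(search_space, rng))
```

Random search treats every trial independently; a Bayesian searcher such as skopt instead uses the scores of finished trials to choose the next configuration, which matters when the trial budget is as small as 5.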

Warning: `hyperparameter_tune=True` is currently experimental and may cause the process to hang. Setting `auto_stack=True` instead is recommended to achieve maximum quality models.

Beginning AutoGluon training ... Time limit = 120s

AutoGluon will save models to agModels-predictClass-tuned/

Train Data Rows: 10000

Train Data Columns: 15

Preprocessing data ...

Here are the first 10 unique label values in your data: [' <=50K' ' >50K']

AutoGluon infers your prediction problem is: binary (because only two unique label-values observed)

If this is wrong, please specify `problem_type` argument in fit() instead (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Selected class <--> label mapping: class 1 = >50K, class 0 = <=50K

Feature Generator processed 10000 data points with 14 features

Original Features:

int features: 6

object features: 8

Generated Features:

int features: 0

All Features:

int features: 6

object features: 8

Data preprocessing and feature engineering runtime = 0.12s ...

AutoGluon will gauge predictive performance using evaluation metric: accuracy

To change this, specify the eval_metric argument of fit()

AutoGluon will early stop models using evaluation metric: accuracy

Starting Experiments

Num of Finished Tasks is 0

Num of Pending Tasks is 5

100%

5/5 [01:06<00:00, 13.34s/it]

Finished Task with config: {'feature_fraction': 1.0, 'learning_rate': 0.1, 'min_data_in_leaf': 20, 'num_leaves': 36} and reward: 0.859

Finished Task with config: {'feature_fraction': 0.7568829632019213, 'learning_rate': 0.12424356064985256, 'min_data_in_leaf': 3, 'num_leaves': 55} and reward: 0.858

Finished Task with config: {'feature_fraction': 0.7564494924988235, 'learning_rate': 0.03775715772264416, 'min_data_in_leaf': 8, 'num_leaves': 27} and reward: 0.859

Finished Task with config: {'feature_fraction': 0.9870314769908329, 'learning_rate': 0.1442122206611739, 'min_data_in_leaf': 4, 'num_leaves': 35} and reward: 0.857

Finished Task with config: {'feature_fraction': 0.9339535543959474, 'learning_rate': 0.0050486520525173835, 'min_data_in_leaf': 10, 'num_leaves': 56} and reward: 0.815

0.8645 = Validation accuracy score

1.44s = Training runtime

0.04s = Validation runtime

0.86 = Validation accuracy score

1.54s = Training runtime

0.03s = Validation runtime

0.862 = Validation accuracy score

1.4s = Training runtime

0.04s = Validation runtime

0.8605 = Validation accuracy score

1.33s = Training runtime

0.03s = Validation runtime

0.8155 = Validation accuracy score

1.61s = Training runtime

0.04s = Validation runtime

Starting Experiments

Num of Finished Tasks is 0

Num of Pending Tasks is 5

80%

4/5 [00:57<00:13, 13.95s/it]

Finished Task with config: {'activation.choice': 0, 'dropout_prob': 0.1, 'embedding_size_factor': 1.0, 'layers.choice': 0, 'learning_rate': 0.0005, 'network_type.choice': 0, 'use_batchnorm.choice': 0, 'weight_decay': 1e-06} and reward: 0.8465

Finished Task with config: {'activation.choice': 0, 'dropout_prob': 0.4983419677765833, 'embedding_size_factor': 1.1583623866868233, 'layers.choice': 0, 'learning_rate': 0.0068602771070335935, 'network_type.choice': 0, 'use_batchnorm.choice': 0, 'weight_decay': 0.0004137874852187468} and reward: 0.847

Ran out of time, stopping training early.

Finished Task with config: {'activation.choice': 2, 'dropout_prob': 0.23434709621043653, 'embedding_size_factor': 1.157370849535098, 'layers.choice': 1, 'learning_rate': 0.0024035089234857193, 'network_type.choice': 0, 'use_batchnorm.choice': 0, 'weight_decay': 3.3978628271485844e-09} and reward: 0.806

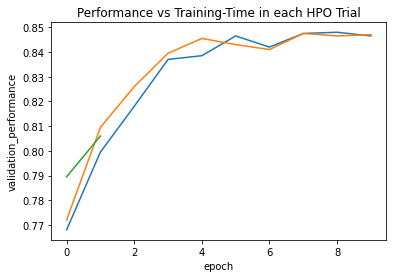

Please either provide filename or allow plot in get_training_curves

0.848 = Validation accuracy score

23.43s = Training runtime

0.57s = Validation runtime

0.8475 = Validation accuracy score

21.99s = Training runtime

0.31s = Validation runtime

0.806 = Validation accuracy score

9.18s = Training runtime

0.65s = Validation runtime

Fitting model: weighted_ensemble_k0_l1 ... Training model for up to 119.88s of the 51.12s of remaining time.

0.8645 = Validation accuracy score

0.8s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 69.75s ...

CPU times: user 53.8 s, sys: 6.07 s, total: 59.9 s

Wall time: 1min 9s

The fit summary was as follows.

results = predictor.fit_summary()

*** Summary of fit() ***

Estimated performance of each model:

model score_val fit_time pred_time_val stack_level

8 weighted_ensemble_k0_l1 0.8645 0.804953 0.001838 1

0 LightGBMClassifier/trial_15 0.8645 1.438680 0.037270 0

2 LightGBMClassifier/trial_17 0.8620 1.400933 0.039980 0

3 LightGBMClassifier/trial_18 0.8605 1.325390 0.030934 0

1 LightGBMClassifier/trial_16 0.8600 1.536028 0.026618 0

5 NeuralNetClassifier/trial_20 0.8480 23.431652 0.566203 0

6 NeuralNetClassifier/trial_21 0.8475 21.988767 0.310336 0

4 LightGBMClassifier/trial_19 0.8155 1.610676 0.038171 0

7 NeuralNetClassifier/trial_22 0.8060 9.177221 0.650193 0

Number of models trained: 9

Types of models trained:

{'WeightedEnsembleModel', 'LGBModel', 'TabularNeuralNetModel'}

Bagging used: False

Stack-ensembling used: False

Hyperparameter-tuning used: True

User-specified hyperparameters:

{'NN': {'num_epochs': 10, 'learning_rate': Real: lower=0.0001, upper=0.01, 'activation': Categorical['relu', 'softrelu', 'tanh'], 'layers': Categorical[[100], [1000], [200, 100], [300, 200, 100]], 'dropout_prob': Real: lower=0.0, upper=0.5}, 'GBM': {'num_boost_round': 100, 'num_leaves': Int: lower=26, upper=66}}

Plot summary of models saved to file: agModels-predictClass-tuned/SummaryOfModels.html

Plot summary of models saved to file: agModels-predictClass-tuned/LightGBMClassifier_HPOmodelsummary.html

Plot summary of models saved to file: LightGBMClassifier_HPOmodelsummary.html

Plot of HPO performance saved to file: agModels-predictClass-tuned/LightGBMClassifier_HPOperformanceVStrials.png

Compute the accuracy on the test data.

y_pred = predictor.predict(test_data_nolab)

print("Predictions: ", y_pred)

perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)

Evaluation: accuracy on test data: 0.8688709182106664

Evaluations on test data:

{

"accuracy": 0.8688709182106664,

"accuracy_score": 0.8688709182106664,

"balanced_accuracy_score": 0.7895131714141826,

"matthews_corrcoef": 0.6195102922288485,

"f1_score": 0.8688709182106664

}

Predictions: [' <=50K' ' <=50K' ' >50K' ... ' <=50K' ' <=50K' ' <=50K']

Detailed (per-class) classification report:

{

" <=50K": {

"precision": 0.8931939841957686,

"recall": 0.9405448933029124,

"f1-score": 0.9162580898215336,

"support": 7451

},

" >50K": {

"precision": 0.7696307852314093,

"recall": 0.6384814495254529,

"f1-score": 0.6979485970290026,

"support": 2318

},

"accuracy": 0.8688709182106664,

"macro avg": {

"precision": 0.8314123847135889,

"recall": 0.7895131714141826,

"f1-score": 0.8071033434252681,

"support": 9769

},

"weighted avg": {

"precision": 0.8638747606110224,

"recall": 0.8688709182106664,

"f1-score": 0.8644573523567893,

"support": 9769

}

}

With auto_stack enabled

Enabling the auto_stack option runs multi-layer stack ensembling.

The auto_stack sample code lists some caveats at the very bottom.

The one that caught my attention most is that the hyperparameter tuning option should not be enabled together with it.

I would have thought that in practice you want to tune each individual model while also ensembling them, but that does not seem to be recommended at present.
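In my summary of the paper's description (see the paper for the exact procedure), multi-layer stacking trains each layer's models with k-fold bagging so that every training row gets an out-of-fold (OOF) prediction, then feeds those OOF predictions, concatenated with the original features, to the next layer. That corresponds to the `_STACKER_l0` and `_STACKER_l1` models and the "k-fold bagging repeats" messages in the log below. The sketch shows only this data flow: the two toy "models", the simple `i % k` fold split, and all helper names are hypothetical stand-ins.

```python
from typing import Callable, List, Sequence

Row = List[float]
# A "model" here is any function (train_X, train_y, test_X) -> predictions for test_X
FitPredict = Callable[[Sequence[Row], Sequence[float], Sequence[Row]], List[float]]


def out_of_fold(X: Sequence[Row], y: Sequence[float], fit_predict: FitPredict,
                k: int) -> List[float]:
    """k-fold bagging: each row is predicted by a model that never saw it.
    Folds are assigned by i % k for simplicity (real code would shuffle/stratify)."""
    n = len(X)
    oof = [0.0] * n
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        preds = fit_predict([X[i] for i in train_idx], [y[i] for i in train_idx],
                            [X[i] for i in test_idx])
        for i, p in zip(test_idx, preds):
            oof[i] = p
    return oof


def next_layer_features(X: Sequence[Row], oof_per_model: List[List[float]]) -> List[Row]:
    """Original features plus one OOF prediction column per previous-layer model."""
    return [list(row) + [oof[i] for oof in oof_per_model] for i, row in enumerate(X)]


def mean_model(train_X, train_y, test_X):
    """Toy stand-in for a real model: predicts the mean training label."""
    m = sum(train_y) / len(train_y)
    return [m] * len(test_X)


def first_feature_threshold(train_X, train_y, test_X):
    """Toy stand-in: predicts 1.0 if the first feature exceeds its training mean."""
    t = sum(r[0] for r in train_X) / len(train_X)
    return [1.0 if r[0] > t else 0.0 for r in test_X]


X = [[25.0], [46.0], [55.0], [36.0], [31.0], [47.0]]   # toy data
y = [0.0, 0.0, 1.0, 0.0, 0.0, 1.0]
layer0 = [out_of_fold(X, y, m, k=3) for m in (mean_model, first_feature_threshold)]
X_l1 = next_layer_features(X, layer0)   # input for the next-layer models
for row in X_l1:
    print(row)
```

Using OOF predictions rather than in-sample ones is the key design choice: the next layer learns how much to trust each model from predictions made on data that model did not train on.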

%%time

long_time = 30*60 # for quick demonstration only, you should set this to longest time you are willing to wait

dir = 'agModels-predictClass-autostack' # specifies folder where to store trained models

predictor = task.fit(

train_data=train_data,

label=label_column,

auto_stack=True,

output_directory = dir,

time_limits=long_time)

Beginning AutoGluon training ... Time limit = 1800s

AutoGluon will save models to agModels-predictClass-autostack/

Train Data Rows: 10000

Train Data Columns: 15

Preprocessing data ...

Here are the first 10 unique label values in your data: [' <=50K' ' >50K']

AutoGluon infers your prediction problem is: binary (because only two unique label-values observed)

If this is wrong, please specify `problem_type` argument in fit() instead (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Selected class <--> label mapping: class 1 = >50K, class 0 = <=50K

Feature Generator processed 10000 data points with 14 features

Original Features:

int features: 6

object features: 8

Generated Features:

int features: 0

All Features:

int features: 6

object features: 8

Data preprocessing and feature engineering runtime = 0.1s ...

AutoGluon will gauge predictive performance using evaluation metric: accuracy

To change this, specify the eval_metric argument of fit()

AutoGluon will early stop models using evaluation metric: accuracy

Fitting model: RandomForestClassifierGini_STACKER_l0 ... Training model for up to 899.95s of the 1799.89s of remaining time.

0.8571 = Validation accuracy score

20.36s = Training runtime

1.13s = Validation runtime

Fitting model: RandomForestClassifierEntr_STACKER_l0 ... Training model for up to 876.77s of the 1776.72s of remaining time.

0.859 = Validation accuracy score

25.58s = Training runtime

1.34s = Validation runtime

Fitting model: ExtraTreesClassifierGini_STACKER_l0 ... Training model for up to 847.93s of the 1747.87s of remaining time.

0.8486 = Validation accuracy score

16.65s = Training runtime

2.13s = Validation runtime

Fitting model: ExtraTreesClassifierEntr_STACKER_l0 ... Training model for up to 824.45s of the 1724.4s of remaining time.

0.8478 = Validation accuracy score

18.99s = Training runtime

2.14s = Validation runtime

Fitting model: KNeighborsClassifierUnif_STACKER_l0 ... Training model for up to 799.25s of the 1699.2s of remaining time.

0.7694 = Validation accuracy score

0.33s = Training runtime

1.11s = Validation runtime

Fitting model: KNeighborsClassifierDist_STACKER_l0 ... Training model for up to 797.76s of the 1697.7s of remaining time.

0.751 = Validation accuracy score

0.3s = Training runtime

1.11s = Validation runtime

Fitting model: LightGBMClassifier_STACKER_l0 ... Training model for up to 796.29s of the 1696.24s of remaining time.

0.874 = Validation accuracy score

11.52s = Training runtime

0.17s = Validation runtime

Fitting model: CatboostClassifier_STACKER_l0 ... Training model for up to 784.49s of the 1684.44s of remaining time.

0.8735 = Validation accuracy score

54.41s = Training runtime

0.13s = Validation runtime

Fitting model: NeuralNetClassifier_STACKER_l0 ... Training model for up to 729.9s of the 1629.85s of remaining time.

Ran out of time, stopping training early.

Ran out of time, stopping training early.

Ran out of time, stopping training early.

Ran out of time, stopping training early.

0.8591 = Validation accuracy score

641.59s = Training runtime

3.02s = Validation runtime

Fitting model: LightGBMClassifierCustom_STACKER_l0 ... Training model for up to 85.08s of the 985.02s of remaining time.

0.8673 = Validation accuracy score

23.17s = Training runtime

0.28s = Validation runtime

Completed 1/20 k-fold bagging repeats ...

Fitting model: weighted_ensemble_k0_l1 ... Training model for up to 360.0s of the 960.22s of remaining time.

0.874 = Validation accuracy score

3.57s = Training runtime

0.0s = Validation runtime

Fitting model: RandomForestClassifierGini_STACKER_l1 ... Training model for up to 956.64s of the 956.63s of remaining time.

0.873 = Validation accuracy score

32.32s = Training runtime

1.76s = Validation runtime

Fitting model: RandomForestClassifierEntr_STACKER_l1 ... Training model for up to 921.46s of the 921.45s of remaining time.

0.8745 = Validation accuracy score

45.88s = Training runtime

1.36s = Validation runtime

Fitting model: ExtraTreesClassifierGini_STACKER_l1 ... Training model for up to 873.32s of the 873.31s of remaining time.

0.871 = Validation accuracy score

17.69s = Training runtime

2.16s = Validation runtime

Fitting model: ExtraTreesClassifierEntr_STACKER_l1 ... Training model for up to 850.66s of the 850.65s of remaining time.

0.8703 = Validation accuracy score

20.02s = Training runtime

2.15s = Validation runtime

Fitting model: KNeighborsClassifierUnif_STACKER_l1 ... Training model for up to 825.65s of the 825.64s of remaining time.

0.7685 = Validation accuracy score

0.49s = Training runtime

1.13s = Validation runtime

Fitting model: KNeighborsClassifierDist_STACKER_l1 ... Training model for up to 823.94s of the 823.93s of remaining time.

0.7494 = Validation accuracy score

0.41s = Training runtime

1.13s = Validation runtime

Fitting model: LightGBMClassifier_STACKER_l1 ... Training model for up to 822.3s of the 822.3s of remaining time.

0.8783 = Validation accuracy score

12.55s = Training runtime

0.18s = Validation runtime

Fitting model: CatboostClassifier_STACKER_l1 ... Training model for up to 809.49s of the 809.48s of remaining time.

0.878 = Validation accuracy score

46.66s = Training runtime

0.15s = Validation runtime

Fitting model: NeuralNetClassifier_STACKER_l1 ... Training model for up to 762.64s of the 762.63s of remaining time.

Ran out of time, stopping training early.

0.873 = Validation accuracy score

543.08s = Training runtime

3.31s = Validation runtime

Fitting model: LightGBMClassifierCustom_STACKER_l1 ... Training model for up to 216.03s of the 216.03s of remaining time.

0.8741 = Validation accuracy score

25.99s = Training runtime

0.21s = Validation runtime

Completed 1/20 k-fold bagging repeats ...

Fitting model: weighted_ensemble_k0_l2 ... Training model for up to 360.0s of the 189.19s of remaining time.

0.8784 = Validation accuracy score

3.56s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 1614.4s ...

CPU times: user 22min 10s, sys: 1min 8s, total: 23min 19s

Wall time: 26min 54s

Computing the accuracy on the test data in the same way gave about 0.871.

y_pred = predictor.predict(test_data_nolab)

print("Predictions: ", y_pred)

perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)

Evaluation: accuracy on test data: 0.8705087521752483

Evaluations on test data:

{

"accuracy": 0.8705087521752483,

"accuracy_score": 0.8705087521752483,

"balanced_accuracy_score": 0.7893980679524981,

"matthews_corrcoef": 0.6232120872465466,

"f1_score": 0.8705087521752483

}

Predictions: [' <=50K' ' <=50K' ' >50K' ... ' <=50K' ' <=50K' ' <=50K']

Detailed (per-class) classification report:

{

" <=50K": {

"precision": 0.8926123381568926,

"recall": 0.9437659374580594,

"f1-score": 0.9174766781916628,

"support": 7451

},

" >50K": {

"precision": 0.7784241142252777,

"recall": 0.635030198446937,

"f1-score": 0.6994535519125684,

"support": 2318

},

"accuracy": 0.8705087521752483,

"macro avg": {

"precision": 0.8355182261910852,

"recall": 0.7893980679524981,

"f1-score": 0.8084651150521156,

"support": 9769

},

"weighted avg": {

"precision": 0.8655176198568123,

"recall": 0.8705087521752483,

"f1-score": 0.8657438901156119,

"support": 9769

}

}
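The three test accuracies differ only slightly. The sketch below converts them back to counts of correct rows out of 9769 and compares the gaps with the binomial standard error sqrt(p(1-p)/n) of a single accuracy estimate. This is a back-of-the-envelope check, not a proper paired significance test, which would need the per-row predictions of each run.

```python
import math

n_test = 9769
test_accuracy = {  # from the evaluate_predictions() logs above
    "default": 0.8722489507626164,
    "hpo (NN + GBM)": 0.8688709182106664,
    "auto_stack": 0.8705087521752483,
}

base = test_accuracy["default"]
std_err = math.sqrt(base * (1 - base) / n_test)  # binomial standard error
print(f"standard error of one accuracy estimate: {std_err:.4f}")
for name, acc in test_accuracy.items():
    correct = round(acc * n_test)
    gap = acc - base
    print(f"{name:15s} correct={correct:5d}  diff={gap:+.4f} ({gap / std_err:+.1f} SE)")
```

In this single run the gaps amount to a few dozen rows, about one standard error or less, so I would not read much into the ranking of the three settings.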

Changing the evaluation metric

The eval_metric argument of fit lets you choose the metric to optimize.

For binary classification the default is accuracy.

%%time

dir = 'agModels-predictClass-defaultlogloss' # specifies folder where to store trained models

predictor = task.fit(train_data=train_data, label=label_column, eval_metric='log_loss', output_directory=dir)

When I tried log_loss, the following RuntimeWarnings appeared.

Some models seem to train without problems, but I cannot tell whether the run as a whole ultimately worked correctly...

/usr/local/lib/python3.6/dist-packages/sklearn/metrics/_classification.py:2295: RuntimeWarning: divide by zero encountered in log

loss = -(transformed_labels * np.log(y_pred)).sum(axis=1)

/usr/local/lib/python3.6/dist-packages/sklearn/metrics/_classification.py:2295: RuntimeWarning: invalid value encountered in multiply

loss = -(transformed_labels * np.log(y_pred)).sum(axis=1)
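What the warnings say is arithmetic: `np.log` received a probability of exactly 0 somewhere ("divide by zero encountered in log", log(0) = -inf), and that -inf was then multiplied by a 0 entry of the one-hot labels ("invalid value encountered in multiply", 0 x -inf = nan). I have not traced which model produced the zero. A common remedy is to clip probabilities away from 0 and 1 before taking the log, as in this pure-Python sketch; the helper name and the `eps` value are assumptions.

```python
import math
from typing import Sequence


def binary_log_loss(y_true: Sequence[int], p_pos: Sequence[float],
                    eps: float = 1e-15) -> float:
    """Mean binary cross-entropy. Probabilities are clipped to [eps, 1 - eps], so
    log(0) (which is -inf in NumPy and a ValueError in math.log) never occurs."""
    total = 0.0
    for y, p in zip(y_true, p_pos):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# A confidently wrong hard prediction (p = 0.0 for a true positive) stays finite
print(binary_log_loss([1, 0], [0.0, 0.0]))
print(binary_log_loss([1, 0], [0.5, 0.5]))  # log(2) ≈ 0.6931
```

Clipping keeps the loss finite, but a hard 0/1 prediction that is wrong still costs about 34.5 per row with eps = 1e-15, so log loss strongly penalizes overconfident models.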

With feature pruning

fit has a feature_prune argument, so I tried it.

Here is the code.

%%time

dir = 'agModels-predictClass-defaultprune' # specifies folder where to store trained models

predictor = task.fit(train_data=train_data, label=label_column, feature_prune=True, output_directory=dir)

Right after starting the run, the following warning appeared. Feature pruning does not seem to be functional yet.

Warning: feature_prune does not currently work, setting to False.

Summary

I tried the official sample code and several of fit's options.

Some options do not seem to work yet, so I am looking forward to future updates.