Introduction

In this post I try out OCI Service Mesh by following the manual below.

Preparation

Creating the OKE cluster

I created the following cluster beforehand.

$ k get node

NAME STATUS ROLES AGE VERSION

10.0.1.223 Ready node 29h v1.26.2

Note that Service Mesh has the following prerequisites:

- OCI VCN-Native Pod Networking

- Oracle Linux 7

- Kubernetes 1.26 or later
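To check the Kubernetes version prerequisite on a cluster with more than one node, you can parse the output of `k get node` instead of reading it row by row. The sketch below assumes the default `kubectl get node` column layout (NAME STATUS ROLES AGE VERSION, with VERSION last). The function names are my own and are not part of any tool. It also avoids newer syntax, so it runs on the Python 3.6 that the client VM ships with.

```python
import re

# Minimum Kubernetes major.minor version from the prerequisites above.
MIN_VERSION = (1, 26)


def parse_node_versions(get_node_output):
    """Return {node_name: (major, minor, patch)} from `kubectl get node` text output.

    Assumes the default columns NAME STATUS ROLES AGE VERSION, with the
    kubelet version in the last whitespace-separated column.
    """
    versions = {}
    for line in get_node_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if not fields:
            continue
        match = re.match(r"v(\d+)\.(\d+)\.(\d+)", fields[-1])
        if match:
            versions[fields[0]] = tuple(int(part) for part in match.groups())
    return versions


def nodes_below_minimum(get_node_output, minimum=MIN_VERSION):
    """List node names whose major.minor version is older than `minimum`."""
    return sorted(
        name
        for name, version in parse_node_versions(get_node_output).items()
        if version[:2] < minimum
    )


if __name__ == "__main__":
    sample = """NAME STATUS ROLES AGE VERSION
10.0.1.223 Ready node 29h v1.26.2
"""
    print(parse_node_versions(sample))  # {'10.0.1.223': (1, 26, 2)}
    print(nodes_below_minimum(sample))  # []
```

An empty list means every node meets the version requirement. The networking and OS prerequisites still have to be checked in the node pool settings.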

Preparing the client

As the client for running the commands, I created an Oracle Linux virtual machine.

$ cat /etc/oracle-release

Oracle Linux Server release 8.8

$ kubectl version --short

Client Version: v1.27.2

Kustomize Version: v5.0.1

Server Version: v1.26.2

$ docker -v

Docker version 24.0.2, build cb74dfc

$ oci -v

3.23.2

Checking the Python environment and installing virtualenv

Python is already installed.

$ python3 --version

Python 3.6.8

$ pip3 -V

pip 9.0.3 from /usr/lib/python3.6/site-packages (python 3.6)

Install virtualenv and virtualenvwrapper.

$ pip3 install --user virtualenv

Collecting virtualenv

Downloading https://files.pythonhosted.org/packages/18/a2/7931d40ecb02b5236a34ac53770f2f6931e3082b7a7dafe915d892d749d6/virtualenv-20.17.1-py3-none-any.whl (8.8MB)

100% |████████████████████████████████| 8.9MB 199kB/s

Collecting distlib<1,>=0.3.6 (from virtualenv)

Downloading https://files.pythonhosted.org/packages/76/cb/6bbd2b10170ed991cf64e8c8b85e01f2fb38f95d1bc77617569e0b0b26ac/distlib-0.3.6-py2.py3-none-any.whl (468kB)

100% |████████████████████████████████| 471kB 3.6MB/s

Collecting importlib-metadata>=4.8.3; python_version < "3.8" (from virtualenv)

Downloading https://files.pythonhosted.org/packages/a0/a1/b153a0a4caf7a7e3f15c2cd56c7702e2cf3d89b1b359d1f1c5e59d68f4ce/importlib_metadata-4.8.3-py3-none-any.whl

Collecting filelock<4,>=3.4.1 (from virtualenv)

Downloading https://files.pythonhosted.org/packages/84/ce/8916d10ef537f3f3b046843255f9799504aa41862bfa87844b9bdc5361cd/filelock-3.4.1-py3-none-any.whl

Collecting importlib-resources>=5.4; python_version < "3.7" (from virtualenv)

Downloading https://files.pythonhosted.org/packages/24/1b/33e489669a94da3ef4562938cd306e8fa915e13939d7b8277cb5569cb405/importlib_resources-5.4.0-py3-none-any.whl

Collecting platformdirs<3,>=2.4 (from virtualenv)

Downloading https://files.pythonhosted.org/packages/b1/78/dcfd84d3aabd46a9c77260fb47ea5d244806e4daef83aa6fe5d83adb182c/platformdirs-2.4.0-py3-none-any.whl

Requirement already satisfied: typing-extensions>=3.6.4; python_version < "3.8" in /usr/lib/python3.6/site-packages (from importlib-metadata>=4.8.3; python_version < "3.8"->virtualenv)

Collecting zipp>=0.5 (from importlib-metadata>=4.8.3; python_version < "3.8"->virtualenv)

Downloading https://files.pythonhosted.org/packages/bd/df/d4a4974a3e3957fd1c1fa3082366d7fff6e428ddb55f074bf64876f8e8ad/zipp-3.6.0-py3-none-any.whl

Installing collected packages: distlib, zipp, importlib-metadata, filelock, importlib-resources, platformdirs, virtualenv

Successfully installed distlib-0.3.6 filelock-3.4.1 importlib-metadata-4.8.3 importlib-resources-5.4.0 platformdirs-2.4.0 virtualenv-20.17.1 zipp-3.6.0

$ pip3 install --user virtualenvwrapper

Collecting virtualenvwrapper

Downloading https://files.pythonhosted.org/packages/c1/6b/2f05d73b2d2f2410b48b90d3783a0034c26afa534a4a95ad5f1178d61191/virtualenvwrapper-4.8.4.tar.gz (334kB)

100% |████████████████████████████████| 337kB 4.2MB/s

Requirement already satisfied: virtualenv in ./.local/lib/python3.6/site-packages (from virtualenvwrapper)

Collecting virtualenv-clone (from virtualenvwrapper)

Downloading https://files.pythonhosted.org/packages/21/ac/e07058dc5a6c1b97f751d24f20d4b0ec14d735d77f4a1f78c471d6d13a43/virtualenv_clone-0.5.7-py3-none-any.whl

Collecting stevedore (from virtualenvwrapper)

Downloading https://files.pythonhosted.org/packages/6d/8d/8dbd1e502e06e58550ed16c879303f83609d52ac31de0cd6a2403186148a/stevedore-3.5.2-py3-none-any.whl (50kB)

100% |████████████████████████████████| 51kB 11.8MB/s

Requirement already satisfied: platformdirs<3,>=2.4 in ./.local/lib/python3.6/site-packages (from virtualenv->virtualenvwrapper)

Requirement already satisfied: distlib<1,>=0.3.6 in ./.local/lib/python3.6/site-packages (from virtualenv->virtualenvwrapper)

Requirement already satisfied: importlib-resources>=5.4; python_version < "3.7" in ./.local/lib/python3.6/site-packages (from virtualenv->virtualenvwrapper)

Requirement already satisfied: filelock<4,>=3.4.1 in ./.local/lib/python3.6/site-packages (from virtualenv->virtualenvwrapper)

Requirement already satisfied: importlib-metadata>=4.8.3; python_version < "3.8" in ./.local/lib/python3.6/site-packages (from virtualenv->virtualenvwrapper)

Collecting pbr!=2.1.0,>=2.0.0 (from stevedore->virtualenvwrapper)

Downloading https://files.pythonhosted.org/packages/01/06/4ab11bf70db5a60689fc521b636849c8593eb67a2c6bdf73a16c72d16a12/pbr-5.11.1-py2.py3-none-any.whl (112kB)

100% |████████████████████████████████| 122kB 12.2MB/s

Requirement already satisfied: zipp>=3.1.0; python_version < "3.10" in ./.local/lib/python3.6/site-packages (from importlib-resources>=5.4; python_version < "3.7"->virtualenv->virtualenvwrapper)

Requirement already satisfied: typing-extensions>=3.6.4; python_version < "3.8" in /usr/lib/python3.6/site-packages (from importlib-metadata>=4.8.3; python_version < "3.8"->virtualenv->virtualenvwrapper)

Installing collected packages: virtualenv-clone, pbr, stevedore, virtualenvwrapper

Running setup.py install for virtualenvwrapper ... done

Successfully installed pbr-5.11.1 stevedore-3.5.2 virtualenv-clone-0.5.7 virtualenvwrapper-4.8.4

Check the installed script.

$ ls -l .local/bin/virtualenvwrapper.sh

-rwxrwxr-x. 1 opc opc 41703 Feb 9 2019 .local/bin/virtualenvwrapper.sh

Append the following to .bashrc to enable it.

...

# set up Python env

export WORKON_HOME=~/envs

export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

export VIRTUALENVWRAPPER_VIRTUALENV_ARGS=' -p /usr/bin/python3 '

source /home/opc/.local/bin/virtualenvwrapper.sh

$ source ~/.bashrc

virtualenvwrapper.user_scripts creating /home/opc/envs/premkproject

virtualenvwrapper.user_scripts creating /home/opc/envs/postmkproject

virtualenvwrapper.user_scripts creating /home/opc/envs/initialize

virtualenvwrapper.user_scripts creating /home/opc/envs/premkvirtualenv

virtualenvwrapper.user_scripts creating /home/opc/envs/postmkvirtualenv

virtualenvwrapper.user_scripts creating /home/opc/envs/prermvirtualenv

virtualenvwrapper.user_scripts creating /home/opc/envs/postrmvirtualenv

virtualenvwrapper.user_scripts creating /home/opc/envs/predeactivate

virtualenvwrapper.user_scripts creating /home/opc/envs/postdeactivate

virtualenvwrapper.user_scripts creating /home/opc/envs/preactivate

virtualenvwrapper.user_scripts creating /home/opc/envs/postactivate

virtualenvwrapper.user_scripts creating /home/opc/envs/get_env_details

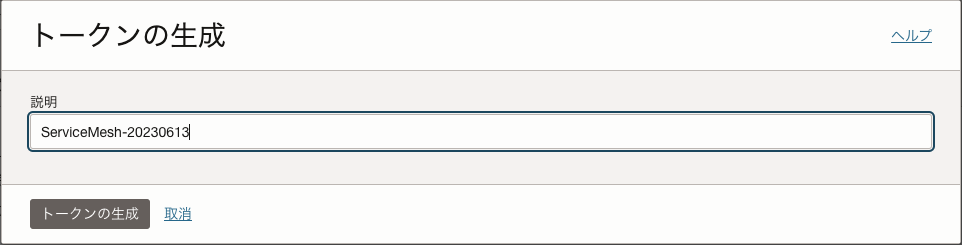

Creating an auth token

Create an auth token from the user details page in the OCI Console.

The token is displayed only once, so copy it and save it somewhere, for example in a text editor.

Collecting the required information

Note down the following values, which are needed in later steps.

- Tenancy name

- Object Storage namespace

- Tenancy OCID

- User name

- User OCID

- Region

- Region key

- Compartment OCID

- Dynamic group OCID
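A pasted OCID is easy to get wrong, for example by copying a compartment OCID into the tenancy field. The sketch below is a quick sanity check that each collected OCID has the expected resource-type segment. It assumes the documented OCID layout `ocid1.<resource type>.<realm>.[region].<unique id>`. The dictionary keys are hypothetical names I chose for the values listed above.

```python
# Expected resource-type segment for each collected OCID (the keys are hypothetical
# names for the values listed above; the types match OCIDs seen in this walkthrough).
EXPECTED_TYPES = {
    "tenancy_ocid": "tenancy",
    "user_ocid": "user",
    "compartment_ocid": "compartment",
    "dynamic_group_ocid": "dynamicgroup",
}


def ocid_resource_type(ocid):
    """Return the resource-type segment of an OCID, or None if it does not look like one."""
    parts = ocid.split(".")
    # ocid1.<resource type>.<realm>.[region].<unique id> -> at least 5 segments,
    # with an empty region segment for global resources such as compartments.
    if len(parts) < 5 or parts[0] != "ocid1":
        return None
    return parts[1]


def check_collected(values):
    """Return a list of human-readable problems found in the collected values."""
    problems = []
    for key, expected in EXPECTED_TYPES.items():
        ocid = values.get(key, "")
        if ocid_resource_type(ocid) != expected:
            problems.append("{}: expected ocid1.{}..., got {!r}".format(key, expected, ocid))
    return problems
```

This checks only the shape of each OCID, not whether the resource exists. The tenancy name, namespace, region and region key still need to be checked by eye.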

Setting up policies

The manual defines policies for several dynamic groups, but my permissions were limited, so I set the following policies for a single dynamic group.

Allow dynamic-group <dynamic-group-name> to use keys in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage objects in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage service-mesh-family in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to read certificate-authority-family in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to use certificate-authority-delegates in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage leaf-certificate-family in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage certificate-authority-associations in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage certificate-associations in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to manage cabundle-associations in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to use metrics in compartment <compartment-name>

Allow dynamic-group <dynamic-group-name> to use log-content in compartment <compartment-name>
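Typing eleven near-identical statements by hand invites typos. The sketch below renders the same statements from a list of (verb, resource-type) pairs copied from the policies above. The dynamic group and compartment names in the example are hypothetical placeholders.

```python
# (verb, resource type) pairs copied from the policy statements above.
POLICY_GRANTS = [
    ("use", "keys"),
    ("manage", "objects"),
    ("manage", "service-mesh-family"),
    ("read", "certificate-authority-family"),
    ("use", "certificate-authority-delegates"),
    ("manage", "leaf-certificate-family"),
    ("manage", "certificate-authority-associations"),
    ("manage", "certificate-associations"),
    ("manage", "cabundle-associations"),
    ("use", "metrics"),
    ("use", "log-content"),
]


def build_statements(dynamic_group, compartment):
    """Render one 'Allow dynamic-group ...' statement per grant."""
    if not dynamic_group or not compartment:
        raise ValueError("dynamic group and compartment names are required")
    return [
        "Allow dynamic-group {} to {} {} in compartment {}".format(
            dynamic_group, verb, resource, compartment)
        for verb, resource in POLICY_GRANTS
    ]


if __name__ == "__main__":
    # "mesh-dg" and "mesh-compartment" are hypothetical names.
    for statement in build_statements("mesh-dg", "mesh-compartment"):
        print(statement)
```

The output can be pasted into the policy editor in the console as-is.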

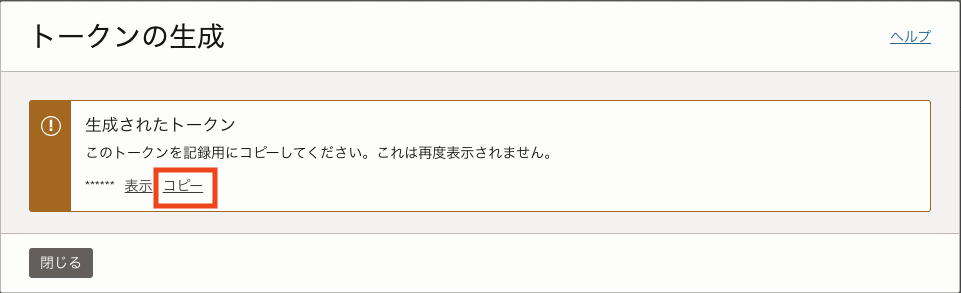

Creating a Vault

Create a Vault and a master encryption key associated with it.

Creating the master encryption key

Because the key is used by the certificate authority created next, it must be an HSM-protected RSA key of 2048 or 4096 bits.

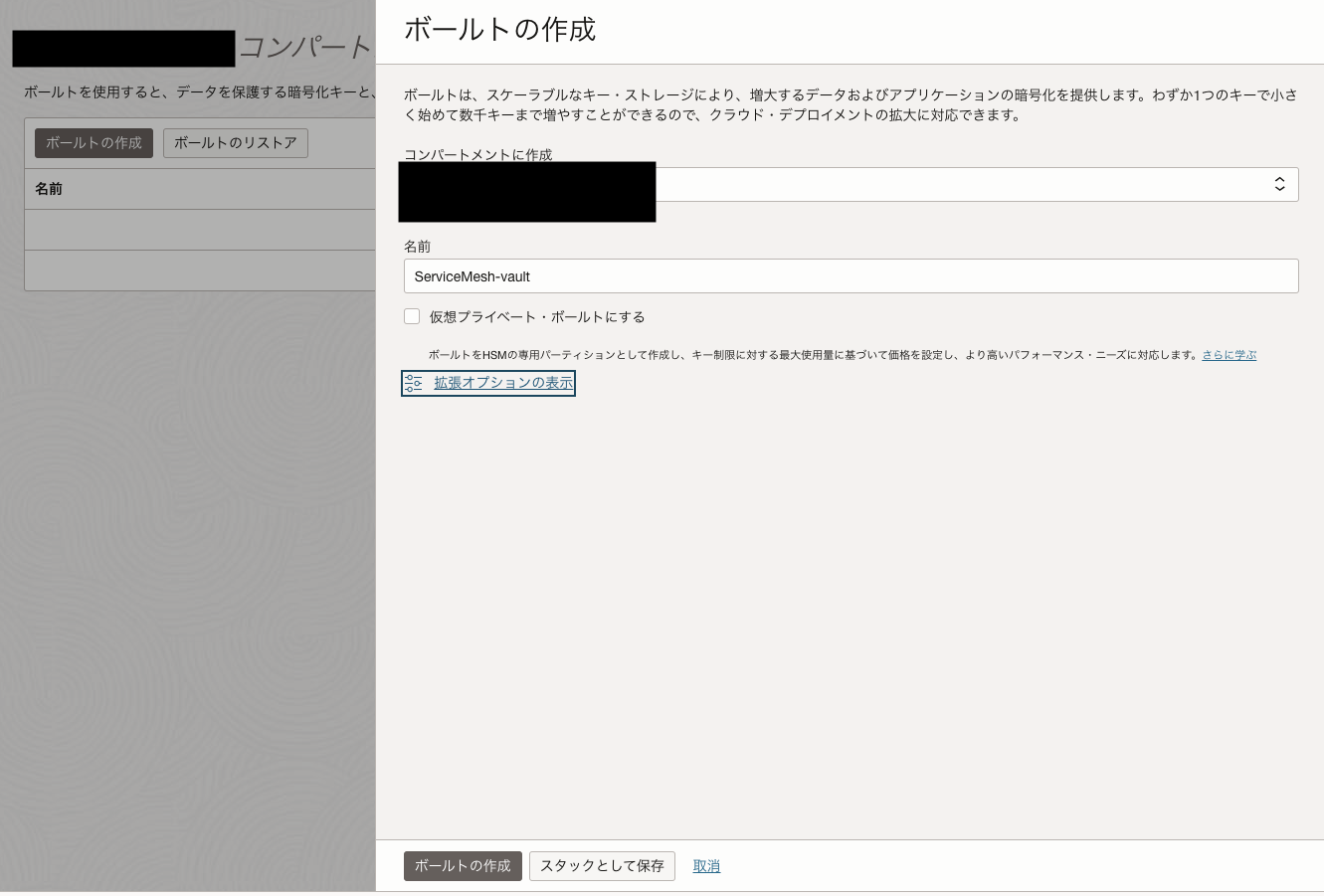

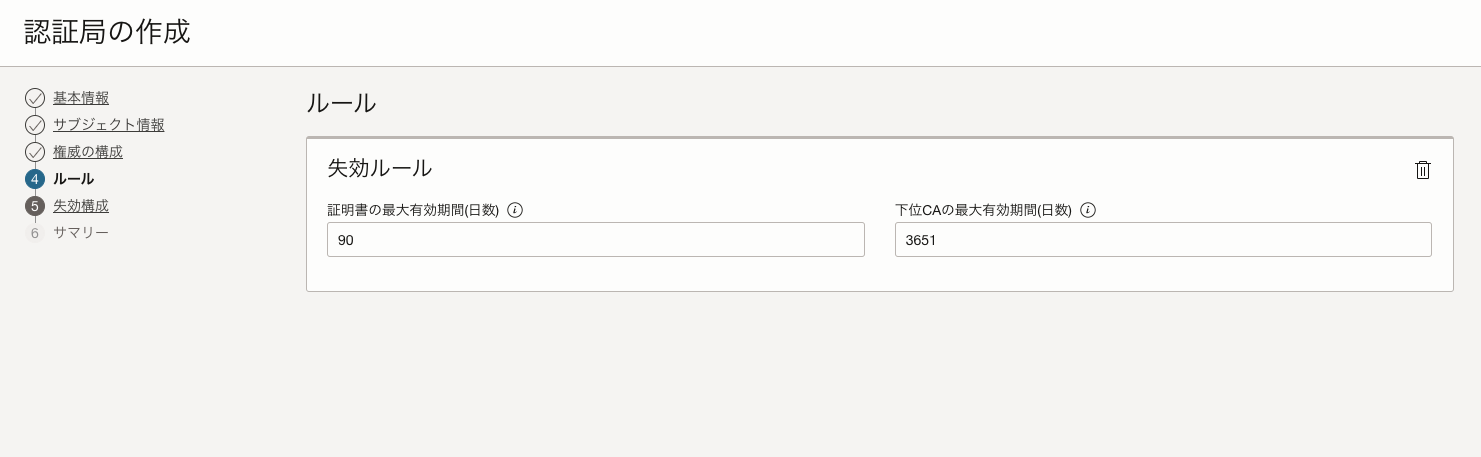

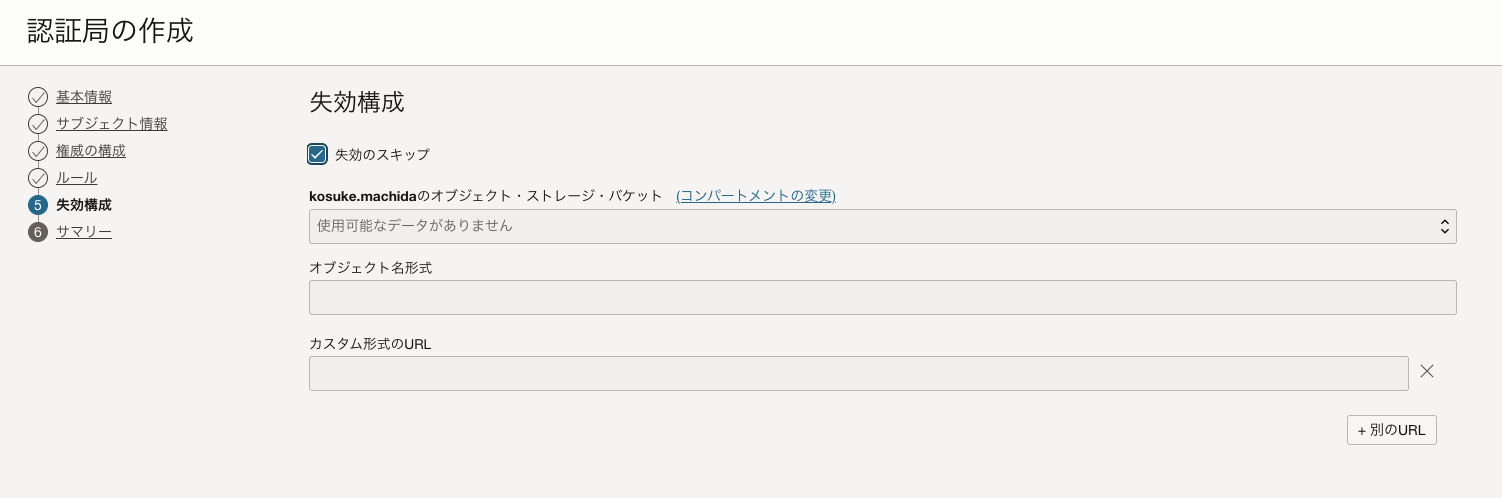
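The key requirements (HSM protection, RSA, 2048 or 4096 bits) are easy to check mechanically. The sketch below does that. One caveat: as far as I understand the OCI Vault key-shape API, RSA key length is expressed in bytes rather than bits (256 for 2048 bits, 512 for 4096 bits). Treat that unit as an assumption and confirm it against the Vault documentation if you create the key from the CLI rather than the console.

```python
# Requirements from the text: HSM protection and an RSA key of 2048 or 4096 bits.
ALLOWED_RSA_BITS = (2048, 4096)


def key_shape_for(bits):
    """Return a key-shape dict for a CA-capable RSA key.

    Assumption: the OCI key shape takes the length in bytes,
    so 2048 bits -> 256 and 4096 bits -> 512.
    """
    if bits not in ALLOWED_RSA_BITS:
        raise ValueError("certificate authorities need RSA 2048 or 4096, got {}".format(bits))
    return {"algorithm": "RSA", "length": bits // 8}


def is_usable_for_ca(protection_mode, algorithm, length_bytes):
    """Check an existing key's attributes (length in bytes) against the requirements."""
    return (
        protection_mode == "HSM"
        and algorithm == "RSA"
        and length_bytes * 8 in ALLOWED_RSA_BITS
    )
```

A key created with software protection or with an AES algorithm fails the check, which matches the requirement stated above.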

Creating the certificate authority

Create a certificate authority with the following settings.

Setting up Service Mesh

Installing the OCI Service Operator

Install OCI Service Operator for Kubernetes, which is required to manage OCI resources from within Kubernetes.

Installing the Operator SDK

Install the Operator SDK by following the steps below.

$ export ARCH=$(case $(uname -m) in x86_64) echo -n amd64 ;; aarch64) echo -n arm64 ;; *) echo -n $(uname -m) ;; esac)

$ export OS=$(uname | awk '{print tolower($0)}')

$ export OPERATOR_SDK_DL_URL=https://github.com/operator-framework/operator-sdk/releases/download/v1.29.0

$ curl -LO ${OPERATOR_SDK_DL_URL}/operator-sdk_${OS}_${ARCH}

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 83.8M 100 83.8M 0 0 19.2M 0 0:00:04 0:00:04 --:--:-- 23.4M

$ ls -l operator-sdk_linux_amd64

-rw-rw-r--. 1 opc opc 87967310 Jun 13 05:44 operator-sdk_linux_amd64
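The `ARCH` and `OS` exports above only choose which release asset to download. The sketch below mirrors that shell `case` logic in Python to show the mapping explicitly: `x86_64` becomes `amd64`, `aarch64` becomes `arm64`, and anything else passes through unchanged. The function names are my own. The version defaults to the v1.29.0 used in this post.

```python
import platform


def sdk_arch(machine=None):
    """Mirror the shell `case`: x86_64 -> amd64, aarch64 -> arm64, anything else unchanged."""
    machine = machine or platform.machine()
    return {"x86_64": "amd64", "aarch64": "arm64"}.get(machine, machine)


def sdk_os(system=None):
    """Mirror `uname | awk '{print tolower($0)}'`: the lower-cased kernel name."""
    return (system or platform.system()).lower()


def sdk_download_url(version="v1.29.0", system=None, machine=None):
    """Build the release-asset URL in the same form as ${OPERATOR_SDK_DL_URL}/operator-sdk_${OS}_${ARCH}."""
    base = "https://github.com/operator-framework/operator-sdk/releases/download/" + version
    return "{}/operator-sdk_{}_{}".format(base, sdk_os(system), sdk_arch(machine))


if __name__ == "__main__":
    # On the x86_64 Oracle Linux client this resolves to .../operator-sdk_linux_amd64.
    print(sdk_download_url())
```

Passing `system` and `machine` explicitly makes it easy to compute the URL for another platform, for example an Arm-based client.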

Verifying the checksum

$ gpg --keyserver keyserver.ubuntu.com --recv-keys 052996E2A20B5C7E

gpg: directory '/home/opc/.gnupg' created

gpg: keybox '/home/opc/.gnupg/pubring.kbx' created

gpg: /home/opc/.gnupg/trustdb.gpg: trustdb created

gpg: key 052996E2A20B5C7E: public key "Operator SDK (release) <cncf-operator-sdk@cncf.io>" imported

gpg: Total number processed: 1

gpg: imported: 1

$ curl -LO ${OPERATOR_SDK_DL_URL}/checksums.txt

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 1680 100 1680 0 0 2181 0 --:--:-- --:--:-- --:--:-- 2181

$ curl -LO ${OPERATOR_SDK_DL_URL}/checksums.txt.asc

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 566 100 566 0 0 756 0 --:--:-- --:--:-- --:--:-- 756

$ gpg -u "Operator SDK (release) <cncf-operator-sdk@cncf.io>" --verify checksums.txt.asc

gpg: assuming signed data in 'checksums.txt'

gpg: Signature made Wed May 31 20:38:19 2023 GMT

gpg: using RSA key 8613DB87A5BA825EF3FD0EBE2A859D08BF9886DB

gpg: Good signature from "Operator SDK (release) <cncf-operator-sdk@cncf.io>" [unknown]

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: 3B2F 1481 D146 2380 80B3 46BB 0529 96E2 A20B 5C7E

Subkey fingerprint: 8613 DB87 A5BA 825E F3FD 0EBE 2A85 9D08 BF98 86DB

$ grep operator-sdk_${OS}_${ARCH} checksums.txt | sha256sum -c -

operator-sdk_linux_amd64: OK
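The `grep ... | sha256sum -c -` step compares the binary's SHA-256 digest with the matching line in the signed `checksums.txt`. The sketch below does the same comparison in Python. It covers only that step. The GPG check that `checksums.txt` itself is authentic still has to be done with `gpg` as above. It assumes the usual `sha256sum` line format `<hex digest>  <file name>`.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so the ~84 MB binary is never fully loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_digest(checksums_text, filename):
    """Find the digest for `filename` in sha256sum-style text (`<digest>  <name>` per line)."""
    for line in checksums_text.splitlines():
        parts = line.split()
        # A leading '*' marks binary mode in sha256sum output; ignore it when comparing names.
        if len(parts) == 2 and parts[1].lstrip("*") == filename:
            return parts[0].lower()
    return None


def verify(binary_path, checksums_path, filename):
    """True only if checksums.txt lists `filename` and its digest matches the binary."""
    with open(checksums_path) as handle:
        expected = expected_digest(handle.read(), filename)
    return expected is not None and expected == sha256_of(binary_path)
```

For example, `verify("operator-sdk_linux_amd64", "checksums.txt", "operator-sdk_linux_amd64")` should return True in the directory where both files were downloaded.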

Move the downloaded binary to a directory on the PATH.

$ chmod +x operator-sdk_${OS}_${ARCH} && sudo mv operator-sdk_${OS}_${ARCH} /usr/local/bin/operator-sdk

$ operator-sdk version

operator-sdk version: "v1.29.0", commit: "78c564319585c0c348d1d7d9bbfeed1098fab006", kubernetes version: "1.26.0", go version: "go1.19.9", GOOS: "linux", GOARCH: "amd64"

Installing Operator Lifecycle Manager (OLM)

$ operator-sdk olm install

INFO[0005] Fetching CRDs for version "latest"

INFO[0005] Fetching resources for resolved version "latest"

INFO[0015] Creating CRDs and resources

INFO[0015] Creating CustomResourceDefinition "catalogsources.operators.coreos.com"

INFO[0015] Creating CustomResourceDefinition "clusterserviceversions.operators.coreos.com"

INFO[0016] Creating CustomResourceDefinition "installplans.operators.coreos.com"

INFO[0016] Creating CustomResourceDefinition "olmconfigs.operators.coreos.com"

INFO[0016] Creating CustomResourceDefinition "operatorconditions.operators.coreos.com"

INFO[0016] Creating CustomResourceDefinition "operatorgroups.operators.coreos.com"

INFO[0017] Creating CustomResourceDefinition "operators.operators.coreos.com"

INFO[0017] Creating CustomResourceDefinition "subscriptions.operators.coreos.com"

INFO[0017] Creating Namespace "olm"

INFO[0017] Creating Namespace "operators"

INFO[0018] Creating ServiceAccount "olm/olm-operator-serviceaccount"

INFO[0018] Creating ClusterRole "system:controller:operator-lifecycle-manager"

INFO[0018] Creating ClusterRoleBinding "olm-operator-binding-olm"

INFO[0018] Creating OLMConfig "cluster"

INFO[0019] Creating Deployment "olm/olm-operator"

INFO[0019] Creating Deployment "olm/catalog-operator"

INFO[0019] Creating ClusterRole "aggregate-olm-edit"

INFO[0019] Creating ClusterRole "aggregate-olm-view"

INFO[0020] Creating OperatorGroup "operators/global-operators"

INFO[0020] Creating OperatorGroup "olm/olm-operators"

INFO[0020] Creating ClusterServiceVersion "olm/packageserver"

INFO[0020] Creating CatalogSource "olm/operatorhubio-catalog"

INFO[0021] Waiting for deployment/olm-operator rollout to complete

INFO[0021] Waiting for Deployment "olm/olm-operator" to rollout: 0 of 1 updated replicas are available

INFO[0031] Deployment "olm/olm-operator" successfully rolled out

INFO[0031] Waiting for deployment/catalog-operator rollout to complete

INFO[0031] Waiting for Deployment "olm/catalog-operator" to rollout: 0 of 1 updated replicas are available

INFO[0032] Deployment "olm/catalog-operator" successfully rolled out

INFO[0032] Waiting for deployment/packageserver rollout to complete

INFO[0032] Waiting for Deployment "olm/packageserver" to rollout: 0 of 2 updated replicas are available

INFO[0039] Deployment "olm/packageserver" successfully rolled out

INFO[0042] Successfully installed OLM version "latest"

NAME NAMESPACE KIND STATUS

catalogsources.operators.coreos.com CustomResourceDefinition Installed

clusterserviceversions.operators.coreos.com CustomResourceDefinition Installed

installplans.operators.coreos.com CustomResourceDefinition Installed

olmconfigs.operators.coreos.com CustomResourceDefinition Installed

operatorconditions.operators.coreos.com CustomResourceDefinition Installed

operatorgroups.operators.coreos.com CustomResourceDefinition Installed

operators.operators.coreos.com CustomResourceDefinition Installed

subscriptions.operators.coreos.com CustomResourceDefinition Installed

olm Namespace Installed

operators Namespace Installed

olm-operator-serviceaccount olm ServiceAccount Installed

system:controller:operator-lifecycle-manager ClusterRole Installed

olm-operator-binding-olm ClusterRoleBinding Installed

cluster OLMConfig Installed

olm-operator olm Deployment Installed

catalog-operator olm Deployment Installed

aggregate-olm-edit ClusterRole Installed

aggregate-olm-view ClusterRole Installed

global-operators operators OperatorGroup Installed

olm-operators olm OperatorGroup Installed

packageserver olm ClusterServiceVersion Installed

operatorhubio-catalog olm CatalogSource Installed

Check the status.

$ operator-sdk olm status

INFO[0012] Fetching CRDs for version "v0.24.0"

INFO[0012] Fetching resources for resolved version "v0.24.0"

INFO[0018] Successfully got OLM status for version "v0.24.0"

NAME NAMESPACE KIND STATUS

olm-operators olm OperatorGroup Installed

operators.operators.coreos.com CustomResourceDefinition Installed

operatorconditions.operators.coreos.com CustomResourceDefinition Installed

catalog-operator olm Deployment Installed

olm-operator-binding-olm ClusterRoleBinding Installed

operatorhubio-catalog olm CatalogSource Installed

subscriptions.operators.coreos.com CustomResourceDefinition Installed

system:controller:operator-lifecycle-manager ClusterRole Installed

installplans.operators.coreos.com CustomResourceDefinition Installed

operatorgroups.operators.coreos.com CustomResourceDefinition Installed

olm Namespace Installed

cluster OLMConfig Installed

packageserver olm ClusterServiceVersion Installed

operators Namespace Installed

catalogsources.operators.coreos.com CustomResourceDefinition Installed

olm-operator olm Deployment Installed

global-operators operators OperatorGroup Installed

aggregate-olm-view ClusterRole Installed

aggregate-olm-edit ClusterRole Installed

olmconfigs.operators.coreos.com CustomResourceDefinition Installed

clusterserviceversions.operators.coreos.com CustomResourceDefinition Installed

olm-operator-serviceaccount olm ServiceAccount Installed

Also check the resources in the olm namespace.

$ k -n olm get all

NAME READY STATUS RESTARTS AGE

pod/catalog-operator-77b8589cd8-xtt7v 1/1 Running 0 4m1s

pod/olm-operator-5ccf676d8b-9gkmw 1/1 Running 0 4m1s

pod/operatorhubio-catalog-nfdxj 1/1 Running 0 3m51s

pod/packageserver-5cd5d8b9fd-md8f5 1/1 Running 0 3m50s

pod/packageserver-5cd5d8b9fd-xm9sl 1/1 Running 0 3m50s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/operatorhubio-catalog ClusterIP 10.96.79.110 <none> 50051/TCP 3m50s

service/packageserver-service ClusterIP 10.96.60.180 <none> 5443/TCP 3m50s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/catalog-operator 1/1 1 1 4m1s

deployment.apps/olm-operator 1/1 1 1 4m1s

deployment.apps/packageserver 2/2 2 2 3m50s

NAME DESIRED CURRENT READY AGE

replicaset.apps/catalog-operator-77b8589cd8 1 1 1 4m1s

replicaset.apps/olm-operator-5ccf676d8b 1 1 1 4m1s

replicaset.apps/packageserver-5cd5d8b9fd 2 2 2 3m50s
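With only five pods you can check the `k -n olm get all` output by eye. The sketch below automates the same check by flagging any pod whose STATUS is not `Running` or whose READY count is incomplete. It assumes the default `pod/` rows with columns NAME READY STATUS RESTARTS AGE. If you only want to block until everything is ready, `kubectl wait --for=condition=Ready pod --all -n olm` is the usual alternative.

```python
def unhealthy_pods(get_all_output):
    """Return names of pods in `kubectl get all` output that are not Running and fully ready.

    Only rows starting with 'pod/' are inspected; their columns are
    NAME READY STATUS RESTARTS AGE.
    """
    problems = []
    for line in get_all_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or not fields[0].startswith("pod/"):
            continue  # skip headers, services, deployments and replica sets
        name, ready, status = fields[0], fields[1], fields[2]
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            problems.append(name[len("pod/"):])
    return problems
```

On the output above the function returns an empty list, because all five pods are `1/1 Running`.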

Create a namespace for the operator.

$ kubectl create ns oci-service-operator-system

namespace/oci-service-operator-system created

$ k get ns

NAME STATUS AGE

default Active 5h12m

kube-node-lease Active 5h12m

kube-public Active 5h12m

kube-system Active 5h12m

oci-service-operator-system Active 10s

olm Active 19m

operators Active 19m

Install OCI Service Operator for Kubernetes into the namespace you just created.

$ operator-sdk run bundle iad.ocir.io/oracle/oci-service-operator-bundle:1.1.8 -n oci-service-operator-system --timeout 5m

INFO[0017] Creating a File-Based Catalog of the bundle "iad.ocir.io/oracle/oci-service-operator-bundle:1.1.8"

INFO[0019] Generated a valid File-Based Catalog

INFO[0025] Created registry pod: iad-ocir-io-oracle-oci-service-operator-bundle-1-1-8

INFO[0026] Created CatalogSource: oci-service-operator-catalog

INFO[0026] OperatorGroup "operator-sdk-og" created

INFO[0026] Created Subscription: oci-service-operator-v1-1-8-sub

INFO[0031] Approved InstallPlan install-zk7s4 for the Subscription: oci-service-operator-v1-1-8-sub

INFO[0031] Waiting for ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" to reach 'Succeeded' phase

INFO[0031] Waiting for ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" to appear

INFO[0049] Found ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" phase: Pending

INFO[0050] Found ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" phase: InstallReady

INFO[0053] Found ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" phase: Installing

INFO[0064] Found ClusterServiceVersion "oci-service-operator-system/oci-service-operator.v1.1.8" phase: Succeeded

INFO[0065] OLM has successfully installed "oci-service-operator.v1.1.8"

Installing Metrics Server

Install Metrics Server and check that it works.

(Note: applying the manifest referenced in the manual failed because its PodDisruptionBudget API version is outdated, so I used the latest manifest instead.)

$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability-1.21+.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

poddisruptionbudget.policy/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

$ k top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

10.0.1.223 66m 3% 1914Mi 12%

Deploying the sample application

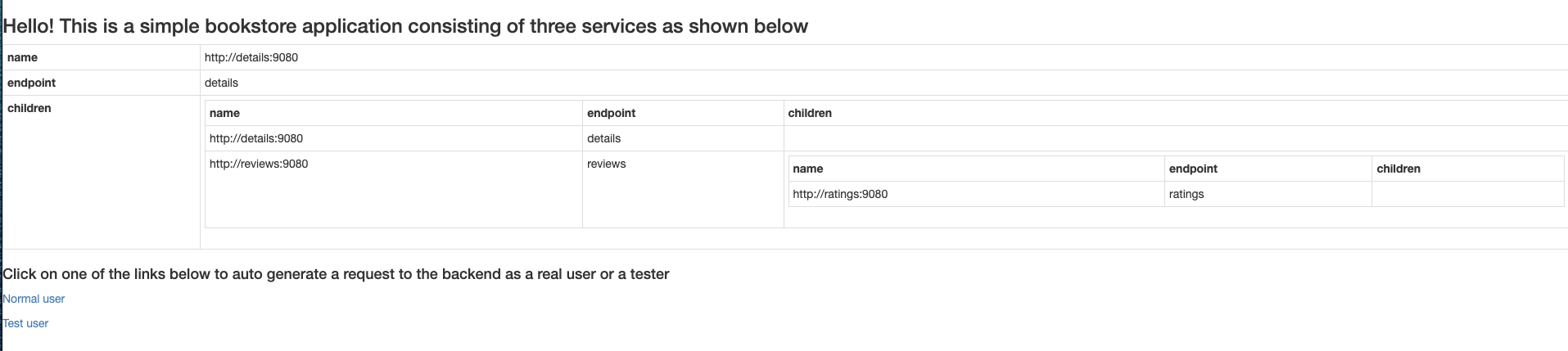

Deploy the Istio sample application (Bookinfo).

I used the manifest from the tutorial, with one change.

I added annotations to the LoadBalancer Service so that a flexible-shape load balancer is used.

(Without the annotations, a load balancer with the deprecated dynamic shape is provisioned.)

apiVersion: v1
kind: Service
metadata:
  name: bookinfo-ingress
  namespace: bookinfo
  labels:
    app: bookinfo
    service: ingress
  ## added
  annotations:
    service.beta.kubernetes.io/oci-load-balancer-shape: "flexible"
    service.beta.kubernetes.io/oci-load-balancer-shape-flex-min: "10"
    service.beta.kubernetes.io/oci-load-balancer-shape-flex-max: "50"
  ## end of addition
spec:
  ports:
  - port: 80
    targetPort: 9080
    name: http
  selector:
    app: productpage
  type: LoadBalancer
---

(rest omitted)
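The three annotations always travel together, so a small helper avoids half-configured Services, for example a shape set to `flexible` with no bandwidth bounds. The sketch below builds the annotation map and checks whether a Service already has it. The annotation keys are copied from the manifest above. The bandwidth unit (Mbps) and the 10 Mbps floor are my assumptions about OCI flexible load balancers.

```python
# Annotation keys copied from the manifest above.
SHAPE = "service.beta.kubernetes.io/oci-load-balancer-shape"
FLEX_MIN = "service.beta.kubernetes.io/oci-load-balancer-shape-flex-min"
FLEX_MAX = "service.beta.kubernetes.io/oci-load-balancer-shape-flex-max"


def flexible_lb_annotations(min_mbps=10, max_mbps=50):
    """Build the annotations that request a flexible-shape OCI load balancer.

    Values are strings because Kubernetes annotation values must be strings.
    Assumption: bandwidth is in Mbps and 10 is the smallest allowed minimum.
    """
    if min_mbps < 10 or min_mbps > max_mbps:
        raise ValueError("need 10 <= min <= max, got {} / {}".format(min_mbps, max_mbps))
    return {SHAPE: "flexible", FLEX_MIN: str(min_mbps), FLEX_MAX: str(max_mbps)}


def uses_flexible_shape(annotations):
    """True if a Service's annotations request the flexible shape with both bounds set."""
    return (
        annotations.get(SHAPE) == "flexible"
        and FLEX_MIN in annotations
        and FLEX_MAX in annotations
    )
```

The defaults reproduce the 10/50 values used in this post. Quoting the numbers in YAML, as the manifest does, keeps them as strings.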

$ k apply -f bookinfo-v1.yaml

service/bookinfo-ingress created

service/details created

serviceaccount/bookinfo-details created

deployment.apps/details-v1 created

service/ratings created

serviceaccount/bookinfo-ratings created

deployment.apps/ratings-v1 created

service/reviews created

service/reviews-v1 created

service/reviews-v2 created

service/reviews-v3 created

serviceaccount/bookinfo-reviews created

deployment.apps/reviews-v1 created

deployment.apps/reviews-v2 created

deployment.apps/reviews-v3 created

poddisruptionbudget.policy/reviews-pdb created

service/productpage created

serviceaccount/bookinfo-productpage created

deployment.apps/productpage-v1 created

$ k -n bookinfo get all

NAME READY STATUS RESTARTS AGE

pod/details-v1-c796f666c-tgccm 1/1 Running 0 2m1s

pod/details-v1-c796f666c-zw5pm 1/1 Running 0 2m1s

pod/productpage-v1-7c76cc46d5-62v5f 1/1 Running 0 113s

pod/productpage-v1-7c76cc46d5-8tgwz 1/1 Running 0 113s

pod/ratings-v1-7d9c5f5487-jxc6k 1/1 Running 0 119s

pod/ratings-v1-7d9c5f5487-lcf6h 1/1 Running 0 119s

pod/reviews-v1-6767c5f5f-8bczn 1/1 Running 0 116s

pod/reviews-v1-6767c5f5f-v72rn 1/1 Running 0 116s

pod/reviews-v2-5bdc85557f-cx9kb 1/1 Running 0 116s

pod/reviews-v2-5bdc85557f-l425g 1/1 Running 0 116s

pod/reviews-v3-69d6dd6c95-rmjjp 1/1 Running 0 115s

pod/reviews-v3-69d6dd6c95-zz6cn 1/1 Running 0 115s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/bookinfo-ingress LoadBalancer 10.96.38.149 192.18.149.xx 80:30492/TCP 2m3s

service/details ClusterIP 10.96.90.93 <none> 9080/TCP 2m2s

service/productpage ClusterIP 10.96.95.173 <none> 9080/TCP 114s

service/ratings ClusterIP 10.96.76.177 <none> 9080/TCP 2m

service/reviews ClusterIP 10.96.176.218 <none> 9080/TCP 119s

service/reviews-v1 ClusterIP 10.96.173.109 <none> 9080/TCP 118s

service/reviews-v2 ClusterIP 10.96.101.234 <none> 9080/TCP 118s

service/reviews-v3 ClusterIP 10.96.138.44 <none> 9080/TCP 117s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/details-v1 2/2 2 2 2m2s

deployment.apps/productpage-v1 2/2 2 2 114s

deployment.apps/ratings-v1 2/2 2 2 2m

deployment.apps/reviews-v1 2/2 2 2 117s

deployment.apps/reviews-v2 2/2 2 2 117s

deployment.apps/reviews-v3 2/2 2 2 116s

NAME DESIRED CURRENT READY AGE

replicaset.apps/details-v1-c796f666c 2 2 2 2m2s

replicaset.apps/productpage-v1-7c76cc46d5 2 2 2 114s

replicaset.apps/ratings-v1-7d9c5f5487 2 2 2 2m

replicaset.apps/reviews-v1-6767c5f5f 2 2 2 117s

replicaset.apps/reviews-v2-5bdc85557f 2 2 2 117s

replicaset.apps/reviews-v3-69d6dd6c95 2 2 2 116s

Open the LoadBalancer's EXTERNAL-IP in a browser to check that the application works.

Configuring the Service Mesh

OCI Service Mesh resources are managed with kubectl. To enable Service Mesh for a deployed application, you need to create the following two sets of resources:

- Service Mesh Control Plane

- Service Mesh binding resource

Creating the Service Mesh control plane

To let Service Mesh manage the traffic between the applications, enable sidecar injection for the namespace where the application is deployed.

$ kubectl label namespace bookinfo servicemesh.oci.oracle.com/sidecar-injection=enabled

namespace/bookinfo labeled

Deploy the control plane using the manifest from the tutorial.

I changed the following three things:

- Compartment OCID

- Certificate authority OCID

- Switched the LoadBalancer used by the IngressGatewayDeployment to the flexible shape (the added section below)

...
apiVersion: servicemesh.oci.oracle.com/v1beta1
kind: IngressGatewayDeployment
metadata:
  name: bookinfo-ingress-gateway-deployment
  namespace: bookinfo
spec:
  ingressGateway:
    ref:
      name: bookinfo-ingress-gateway
  deployment:
    autoscaling:
      minPods: 1
      maxPods: 1
  ports:
    - protocol: TCP
      port: 9080
      serviceport: 80
  service:
    type: LoadBalancer
    ## added
    annotations:
      service.beta.kubernetes.io/oci-load-balancer-shape: "flexible"
      service.beta.kubernetes.io/oci-load-balancer-shape-flex-min: "10"
      service.beta.kubernetes.io/oci-load-balancer-shape-flex-max: "50"
    ## end of addition
...

$ k apply -f meshify-bookinfo-v1.yaml

mesh.servicemesh.oci.oracle.com/bookinfo created

virtualservice.servicemesh.oci.oracle.com/details created

virtualdeployment.servicemesh.oci.oracle.com/details-v1 created

virtualserviceroutetable.servicemesh.oci.oracle.com/details-route-table created

virtualservice.servicemesh.oci.oracle.com/ratings created

virtualdeployment.servicemesh.oci.oracle.com/ratings-v1 created

virtualserviceroutetable.servicemesh.oci.oracle.com/ratings-route-table created

virtualservice.servicemesh.oci.oracle.com/reviews created

virtualdeployment.servicemesh.oci.oracle.com/reviews-v1 created

virtualdeployment.servicemesh.oci.oracle.com/reviews-v2 created

virtualdeployment.servicemesh.oci.oracle.com/reviews-v3 created

virtualserviceroutetable.servicemesh.oci.oracle.com/reviews-route-table created

virtualservice.servicemesh.oci.oracle.com/productpage created

virtualdeployment.servicemesh.oci.oracle.com/productpage-v1 created

virtualserviceroutetable.servicemesh.oci.oracle.com/productpage-route-table created

ingressgateway.servicemesh.oci.oracle.com/bookinfo-ingress-gateway created

ingressgatewaydeployment.servicemesh.oci.oracle.com/bookinfo-ingress-gateway-deployment created

ingressgatewayroutetable.servicemesh.oci.oracle.com/bookinfo-ingress-gateway-route-table created

accesspolicy.servicemesh.oci.oracle.com/bookinfo-policy created

Confirm that ACTIVE is True for each resource.

$ k -n bookinfo get mesh

NAME ACTIVE AGE

bookinfo True 3m39s

$ k -n bookinfo get virtualserviceroutetables,virtualservices,virtualdeployment

NAME ACTIVE AGE

virtualserviceroutetable.servicemesh.oci.oracle.com/details-route-table True 8m5s

virtualserviceroutetable.servicemesh.oci.oracle.com/productpage-route-table True 7m59s

virtualserviceroutetable.servicemesh.oci.oracle.com/ratings-route-table True 8m3s

virtualserviceroutetable.servicemesh.oci.oracle.com/reviews-route-table True 8m

NAME ACTIVE AGE

virtualservice.servicemesh.oci.oracle.com/details True 8m7s

virtualservice.servicemesh.oci.oracle.com/productpage True 8m1s

virtualservice.servicemesh.oci.oracle.com/ratings True 8m5s

virtualservice.servicemesh.oci.oracle.com/reviews True 8m4s

NAME ACTIVE AGE

virtualdeployment.servicemesh.oci.oracle.com/details-v1 True 8m6s

virtualdeployment.servicemesh.oci.oracle.com/productpage-v1 True 8m

virtualdeployment.servicemesh.oci.oracle.com/ratings-v1 True 8m5s

virtualdeployment.servicemesh.oci.oracle.com/reviews-v1 True 8m3s

virtualdeployment.servicemesh.oci.oracle.com/reviews-v2 True 8m3s

virtualdeployment.servicemesh.oci.oracle.com/reviews-v3 True 8m2s

$ k -n bookinfo get ingressgateway,ingressgatewaydeployments,ingressgatewayroutetables

NAME ACTIVE AGE

ingressgateway.servicemesh.oci.oracle.com/bookinfo-ingress-gateway True 9m34s

NAME ACTIVE AGE

ingressgatewaydeployment.servicemesh.oci.oracle.com/bookinfo-ingress-gateway-deployment True 20m

NAME ACTIVE AGE

ingressgatewayroutetable.servicemesh.oci.oracle.com/bookinfo-ingress-gateway-route-table True 9m33s
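When several resource kinds are requested in one `kubectl get`, the output is split into several tables, each with its own `NAME ACTIVE AGE` header. The sketch below scans all of them and lists every mesh resource whose ACTIVE column is not `True`. It assumes ACTIVE is the second column, as in the outputs above.

```python
def inactive_resources(get_output):
    """Return resource names whose ACTIVE column is not 'True'.

    Handles the multi-table output of `kubectl get a,b,c`: every table
    starts with a 'NAME ACTIVE AGE' header row, which is skipped.
    """
    inactive = []
    for line in get_output.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] == "NAME":
            continue
        if fields[1] != "True":
            inactive.append(fields[0])
    return inactive
```

An empty result for the mesh, virtual services, virtual deployments, route tables and ingress gateway resources means the control plane is ready for the binding step.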

You can also check these resources in the console.

Creating the Service Mesh binding resources

Create the Service Mesh binding resources to bind each pod to the Service Mesh control plane.

The manifest used here is bind-bookinfo-v1.yaml from the tutorial.

$ k apply -f bind-bookinfo-v1.yaml

virtualdeploymentbinding.servicemesh.oci.oracle.com/details-v1-binding created

virtualdeploymentbinding.servicemesh.oci.oracle.com/ratings-v1-binding created

virtualdeploymentbinding.servicemesh.oci.oracle.com/reviews-v1-binding created

virtualdeploymentbinding.servicemesh.oci.oracle.com/reviews-v2-binding created

virtualdeploymentbinding.servicemesh.oci.oracle.com/reviews-v3-binding created

virtualdeploymentbinding.servicemesh.oci.oracle.com/productpage-v1-binding created

$ k -n bookinfo get virtualdeploymentbindings

NAME ACTIVE AGE

details-v1-binding True 56s

productpage-v1-binding True 54s

ratings-v1-binding True 56s

reviews-v1-binding True 55s

reviews-v2-binding True 55s

reviews-v3-binding True 54s

Verifying the setup

Check the EXTERNAL-IP of the LoadBalancer used by the IngressGatewayDeployment.

$ kubectl get svc bookinfo-ingress-gateway-deployment-service -n bookinfo

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

bookinfo-ingress-gateway-deployment-service LoadBalancer 10.96.2.185 140.238.129.xxx 80:31821/TCP 102m

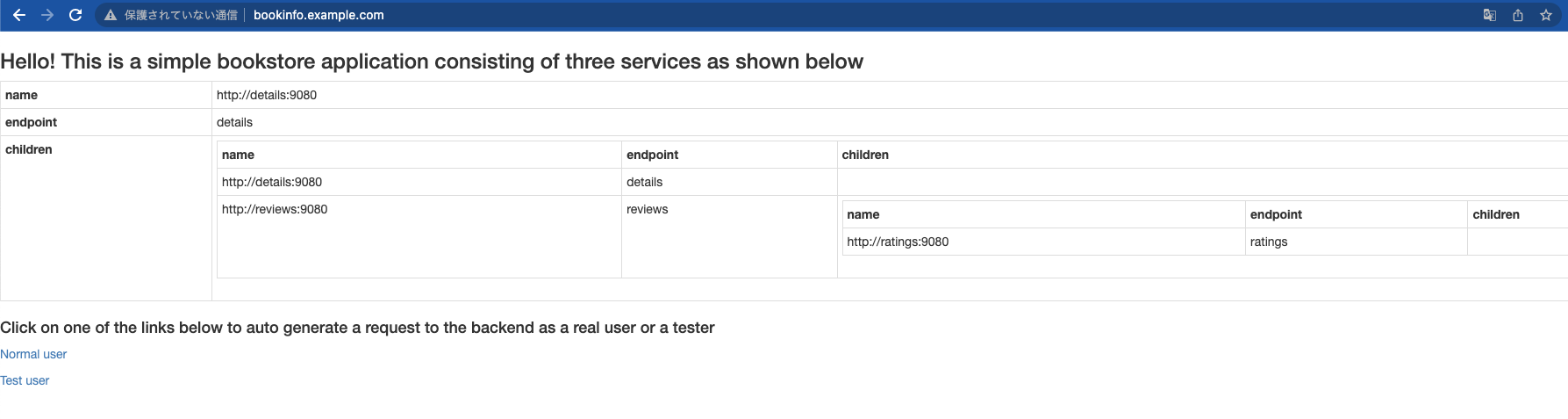
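If you script this step, you need the EXTERNAL-IP value without copying it by hand. The common one-liner is `kubectl get svc <name> -n bookinfo -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`. The sketch below instead parses the table output shown above. It assumes the default columns NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE, and it treats `<pending>` (load balancer still provisioning) as "no IP yet".

```python
def external_ip(get_svc_output, service_name):
    """Return the EXTERNAL-IP of `service_name` from `kubectl get svc` output, or None.

    Columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE. While the load balancer
    is still being provisioned the column shows <pending>, reported here as None.
    """
    for line in get_svc_output.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[0] in (service_name, "service/" + service_name):
            ip = fields[3]
            return None if ip in ("<pending>", "<none>") else ip
    return None
```

It accepts both plain names (`kubectl get svc`) and `service/`-prefixed names (`kubectl get all`). This lets the same helper read the Grafana service later.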

To view the application through Service Mesh, you must access it by host name, so add the following to the /etc/hosts file:

...

140.238.129.xxx bookinfo.example.com

Open the host name in a browser and confirm that the app also works through Service Mesh.

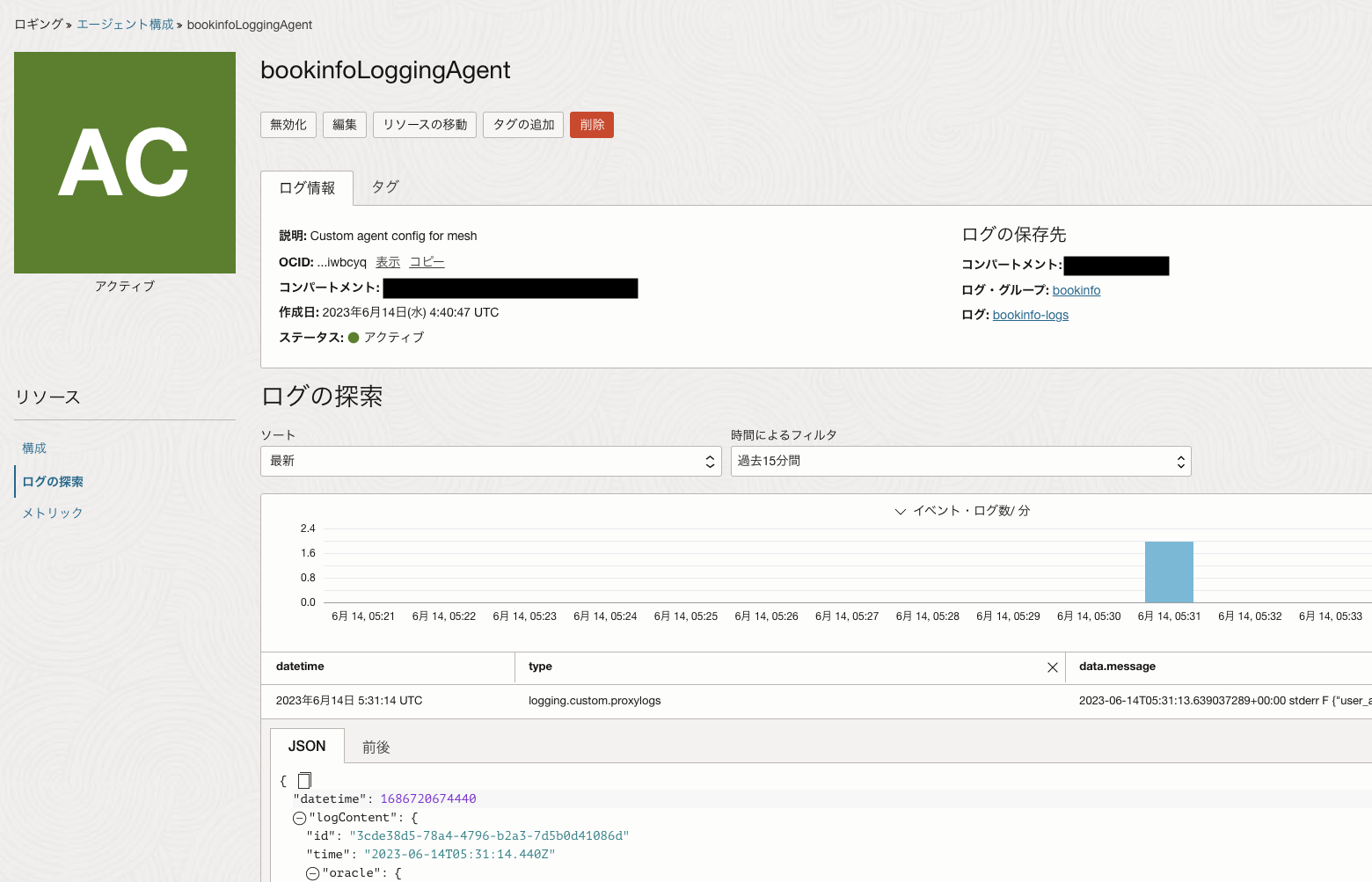
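The /etc/hosts entry is needed only because the gateway routes by host name. As I understand it, a client that sends `Host: bookinfo.example.com` directly to the gateway IP gets the same routing, which is handy for a quick check from a machine where you cannot edit /etc/hosts. The sketch below builds such a request with the standard library. The gateway IP in the usage example is a hypothetical documentation address, and the default path `/` is my assumption.

```python
import urllib.request

MESH_HOST = "bookinfo.example.com"  # host name the ingress gateway routes on, per the text


def build_request(gateway_ip, path="/", host=MESH_HOST):
    """Build a GET request to the gateway IP that carries the mesh host name.

    urllib keeps an explicitly supplied Host header instead of deriving one
    from the URL, which mimics what the /etc/hosts entry achieves.
    """
    return urllib.request.Request(
        "http://{}{}".format(gateway_ip, path), headers={"Host": host})


def fetch_status(gateway_ip, timeout=10):
    """Send the request and return the HTTP status code (200 means the route matched)."""
    with urllib.request.urlopen(build_request(gateway_ip), timeout=timeout) as response:
        return response.status
```

For example, `fetch_status("192.0.2.10")` with your real gateway EXTERNAL-IP should return 200 if the route works. The equivalent with curl would be `curl -H "Host: bookinfo.example.com" http://<EXTERNAL-IP>/`.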

Configuring logging

Configure collection of the Service Mesh logs.

Creating a log group

$ oci logging log-group create --compartment-id ocid1.compartment.oc1..aaaaaaaamxxxxxxxxxxxx --region YYZ --display-name bookinfo

{

"opc-work-request-id": "ocid1.logworkrequest.oc1.ca-toronto-1.aaaaaaaamm6zcvgv7unjdsdjwkw7uxxxxxxxxxxxx"

}

Check the OCID of the log group you just created.

$ oci logging log-group list --compartment-id ocid1.compartment.oc1..aaaaaaaaxxxxxxxxxxxxxx --region YYZ

{
  "data": [
    {
      "compartment-id": "ocid1.compartment.oc1..aaaaaaaamyexxxxxxxxxxxxx",
      "defined-tags": {},
      "description": null,
      "display-name": "bookinfo",
      "freeform-tags": {},
      "id": "ocid1.loggroup.oc1.ca-toronto-1.amaaaaaassl65iqa65ootlxxxxxxxxxxxx",  # this one
      "lifecycle-state": "ACTIVE",
      "time-created": "2023-06-14T04:26:00.133000+00:00",
      "time-last-modified": "2023-06-14T04:26:00.133000+00:00"
    }
  ]
}
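Copying the `id` field out of the list output by hand is error-prone, because the OCIDs are long and differ only near the end. The sketch below picks the OCID of the single ACTIVE item with a given display name from `oci ... list` JSON. It works for both `oci logging log-group list` and `oci logging log list`. It expects the raw CLI output; the `# this one` marker above is my annotation and is not valid JSON. The OCI CLI's own `--query` option (JMESPath) can do the same filtering without Python.

```python
import json


def ocid_by_display_name(cli_json, display_name):
    """Return the `id` of the single ACTIVE item whose display-name matches.

    Expects the raw JSON printed by an `oci ... list` command, i.e. {"data": [...]}.
    Raises LookupError when zero or several items match, instead of guessing.
    """
    matches = [
        item["id"]
        for item in json.loads(cli_json).get("data", [])
        if item.get("display-name") == display_name
        and item.get("lifecycle-state") == "ACTIVE"
    ]
    if len(matches) != 1:
        raise LookupError("expected exactly one ACTIVE item named {!r}, found {}".format(
            display_name, len(matches)))
    return matches[0]
```

Called with "bookinfo" on the log group list, or "bookinfo-logs" on the log list, it returns the OCID needed for the next command.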

Creating a custom log

$ oci logging log create --log-group-id ocid1.loggroup.oc1.ca-toronto-1.amaaaaaassxxxxxxxxxxxxxxx --display-name bookinfo-logs --log-type custom --region YYZ

{

"opc-work-request-id": "ocid1.logworkrequest.oc1.ca-toronto-1.aaaaaaaasm7pzrxxxxxxxxxxxxxxx"

}

Check the OCID of the custom log you just created.

$ oci logging log list --log-group-id ocid1.loggroup.oc1.ca-toronto-1.amaaaaaassxxxxxxxxxxxxxx --region YYZ

{
  "data": [
    {
      "compartment-id": "ocid1.compartment.oc1..aaaaaaaamaxxxxxxxxxxxxxx",
      "configuration": null,
      "defined-tags": {},
      "display-name": "bookinfo-logs",
      "freeform-tags": {},
      "id": "ocid1.log.oc1.ca-toronto-1.amaaaaaassxxxxxxxxxxxxxx",  # this one
      "is-enabled": true,
      "lifecycle-state": "ACTIVE",
      "log-group-id": "ocid1.loggroup.oc1.ca-toronto-1.amaaaaaassl65ixxxxxxxxxxxxxx",
      "log-type": "CUSTOM",
      "retention-duration": 30,
      "time-created": "2023-06-14T04:29:30.510000+00:00",
      "time-last-modified": "2023-06-14T04:29:30.510000+00:00"
    }
  ]
}

Creating the agent configuration

Create the configuration file (logconfig.json) from the sample in the tutorial.

Replace <your-custom-log-ocid> with the custom log OCID and <app-namespace> with the namespace where the app is deployed (bookinfo in this case).

{
  "configurationType": "LOGGING",
  "destination": {
    "logObjectId": "<your-custom-log-ocid>"
  },
  "sources": [
    {
      "name": "proxylogs",
      "parser": {
        "fieldTimeKey": null,
        "isEstimateCurrentEvent": null,
        "isKeepTimeKey": null,
        "isNullEmptyString": null,
        "messageKey": null,
        "nullValuePattern": null,
        "parserType": "NONE",
        "timeoutInMilliseconds": null,
        "types": null
      },
      "paths": [
        "/var/log/containers/*<app-namespace>*oci-sm-proxy*.log"
      ],
      "source-type": "LOG_TAIL"
    }
  ]
}
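Hand-editing the two placeholders is fine once. If you set up logging for several namespaces, generating the file avoids JSON syntax slips. The sketch below reproduces the tutorial's structure above with only the log OCID and namespace filled in. The keys and values are copied verbatim from the sample. The function names and the output path are my own choices.

```python
import json


def build_log_config(custom_log_ocid, app_namespace):
    """Fill in the two placeholders of the tutorial's logging-agent configuration."""
    return {
        "configurationType": "LOGGING",
        "destination": {"logObjectId": custom_log_ocid},
        "sources": [
            {
                "name": "proxylogs",
                "parser": {
                    "fieldTimeKey": None,
                    "isEstimateCurrentEvent": None,
                    "isKeepTimeKey": None,
                    "isNullEmptyString": None,
                    "messageKey": None,
                    "nullValuePattern": None,
                    "parserType": "NONE",
                    "timeoutInMilliseconds": None,
                    "types": None,
                },
                # Sidecar proxy container logs for pods in the given namespace.
                "paths": ["/var/log/containers/*{}*oci-sm-proxy*.log".format(app_namespace)],
                "source-type": "LOG_TAIL",
            }
        ],
    }


def write_log_config(path, custom_log_ocid, app_namespace):
    """Write the configuration as JSON (None becomes null) for --service-configuration file://..."""
    with open(path, "w") as handle:
        json.dump(build_log_config(custom_log_ocid, app_namespace), handle, indent=2)
```

For example, `write_log_config("logconfig.json", "<custom log OCID>", "bookinfo")` produces the file passed to the `oci logging agent-configuration create` command.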

Create the custom agent configuration.

$ oci logging agent-configuration create --compartment-id ocid1.compartment.oc1..aaaaaaaamyxxxxxxxxxxxxxx --is-enabled true --service-configuration file://logconfig.json --display-name bookinfoLoggingAgent --description "Custom agent config for mesh" --group-association '{"groupList": ["ocid1.dynamicgroup.oc1..aaaaaaaajqxxxxxxxxxxxxxxxxx"]}' --region YYZ

{

"opc-work-request-id": "ocid1.logworkrequest.oc1.ca-toronto-1.aaaaaaaaaulxxxxxxxxxxxxxxx"

}

Access the app through Service Mesh, click around a bit, and confirm that logs are being collected.

Configuring monitoring

Deploy Prometheus and Grafana to monitor the state of the Service Mesh.

Prometheus

Create a namespace.

$ kubectl create namespace monitoring

namespace/monitoring created

Deploy Prometheus using the tutorial's prometheus.yaml manifest.

$ k apply -f prometheus.yaml

serviceaccount/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

configmap/prometheus-server-conf created

service/prometheus created

deployment.apps/prometheus-deployment created

The pod is still Pending after a while, so let's investigate.

$ k -n monitoring describe pod

Name: prometheus-deployment-68c76cdd7-8nfdt

Namespace: monitoring

...

Limits:

cpu: 1

memory: 1Gi

Requests:

cpu: 1

memory: 1Gi

Environment: <none>

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 4m16s default-scheduler 0/1 nodes are available: 1 Insufficient cpu. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod..

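The cluster is a single node with 1 OCPU (2 vCPUs), so it runs out of CPU. Because the manifest sets only limits, Kubernetes uses the same values as requests (hence `cpu: 1` under Requests above), and the scheduler cannot find a full free CPU on the node.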
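The scheduling failure comes down to simple arithmetic. When a container sets limits but no requests, Kubernetes uses the limits as the requests. The scheduler then checks whether the node's allocatable CPU minus what is already requested can fit the new request. The sketch below models only that CPU check. The allocatable and already-requested figures in the usage note are hypothetical, because the post does not show them.

```python
def effective_requests(resources):
    """Requests as Kubernetes applies them: any resource with a limit but no request
    gets a request equal to its limit."""
    requests = dict(resources.get("limits", {}))
    requests.update(resources.get("requests", {}))
    return requests


def cpu_millicores(quantity):
    """Convert a CPU quantity such as 1, '1', '500m' or '1.5' to millicores."""
    text = str(quantity)
    if text.endswith("m"):
        return int(text[:-1])
    return int(round(float(text) * 1000))


def fits(pod_resources, allocatable_cpu, already_requested_millicores):
    """Simplified scheduler CPU check (ignores memory, taints, affinity, and so on)."""
    needed = cpu_millicores(effective_requests(pod_resources).get("cpu", 0))
    return already_requested_millicores + needed <= cpu_millicores(allocatable_cpu)
```

With a hypothetical 1900m allocatable and 1000m already requested, `fits({"limits": {"cpu": 1}}, "1900m", 1000)` is False. After the `resources` block is removed, `fits({}, "1900m", 1000)` is True, because the pod then requests no CPU at all.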

Normally you would add capacity to the cluster, but here I work around the problem by commenting out the resource limits.

...
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-deployment
  namespace: monitoring
  labels:
    app: prometheus-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus-server
  template:
    metadata:
      namespace: monitoring
      labels:
        app: prometheus-server
    spec:
      serviceAccountName: prometheus
      containers:
        - name: prometheus
          image: prom/prometheus
          args:
            - "--storage.tsdb.retention.time=30d"
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus/"
            - "--web.enable-lifecycle"
          ports:
            - containerPort: 9090
          # resources:
          #   limits:
          #     cpu: 1
          #     memory: 1Gi
          volumeMounts:
            - name: prometheus-config-volume
              mountPath: /etc/prometheus/
            - name: prometheus-storage-volume
              mountPath: /prometheus/
      volumes:
        - name: prometheus-config-volume
          configMap:
            defaultMode: 420
            name: prometheus-server-conf
        - name: prometheus-storage-volume
          emptyDir: {}

Redeploy and check.

$ k -n monitoring get all

NAME READY STATUS RESTARTS AGE

pod/prometheus-deployment-67fd84d9ff-847m7 1/1 Running 0 25s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus ClusterIP 10.96.165.166 <none> 9090/TCP 26s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus-deployment 1/1 1 1 26s

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-deployment-67fd84d9ff 1 1 1 26s

Grafana

Deploy Grafana using the tutorial's grafana.yaml manifest.

Replace X.Y.Z in the sample with a Grafana version. 10.0.0 appears to be the latest, but I chose the previous release, 9.5.3.

The placeholder appears in several places, so replace all of them with sed.

$ sed -i -e 's/X\.Y\.Z/9.5.3/g' grafana.yaml
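The same substitution in Python makes two details explicit. First, the dots in the pattern are escaped, so only the literal `X.Y.Z` placeholder is replaced. Second, the number of replacements is reported, so you can confirm that every occurrence was changed. The function name is my own. The default version is the 9.5.3 chosen above.

```python
import re

# Dots escaped so that only the literal placeholder X.Y.Z matches.
PLACEHOLDER = re.compile(r"X\.Y\.Z")


def pin_grafana_version(manifest_text, version="9.5.3"):
    """Replace every X.Y.Z placeholder; return (new_text, number_of_replacements)."""
    if not re.fullmatch(r"\d+\.\d+\.\d+", version):
        raise ValueError("version must look like 9.5.3, got {!r}".format(version))
    return PLACEHOLDER.subn(version, manifest_text)
```

A replacement count of zero usually means the manifest was already edited, or that the placeholder is spelled differently in your copy of the tutorial.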

$ k apply -f grafana.yaml

serviceaccount/grafana created

configmap/grafana created

service/grafana created

deployment.apps/grafana created

configmap/mesh-demo-grafana-dashboards created

Check the deployment.

$ k -n monitoring get all

NAME READY STATUS RESTARTS AGE

pod/grafana-77f85bf7bd-clqms 1/1 Running 0 41s

pod/prometheus-deployment-67fd84d9ff-847m7 1/1 Running 0 15m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana LoadBalancer 10.96.167.31 140.238.148.xx 80:32492/TCP 41s

service/prometheus ClusterIP 10.96.165.166 <none> 9090/TCP 15m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana 1/1 1 1 42s

deployment.apps/prometheus-deployment 1/1 1 1 15m

NAME DESIRED CURRENT READY AGE

replicaset.apps/grafana-77f85bf7bd 1 1 1 42s

replicaset.apps/prometheus-deployment-67fd84d9ff 1 1 1 15m

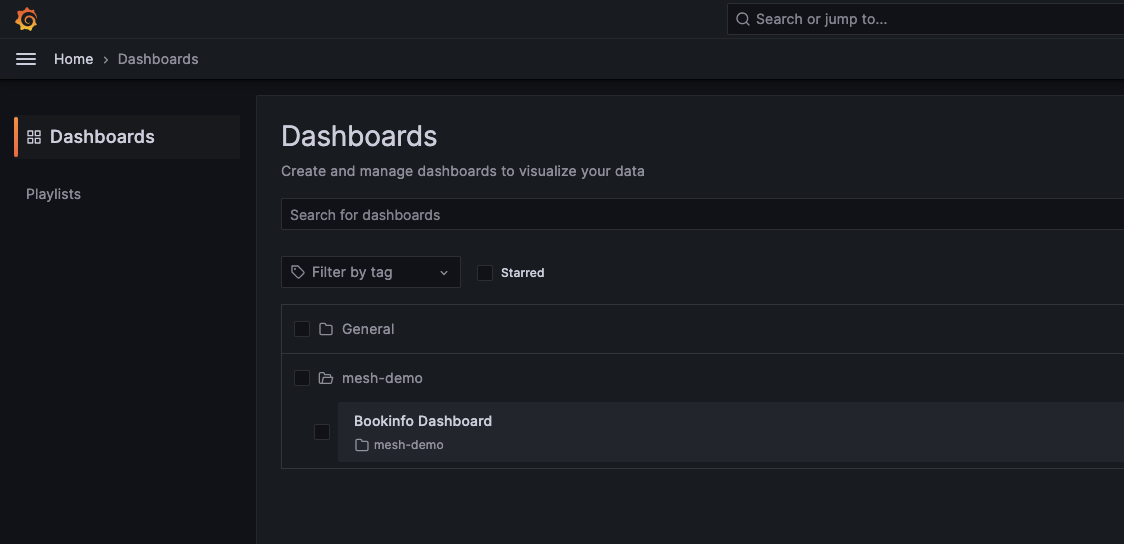

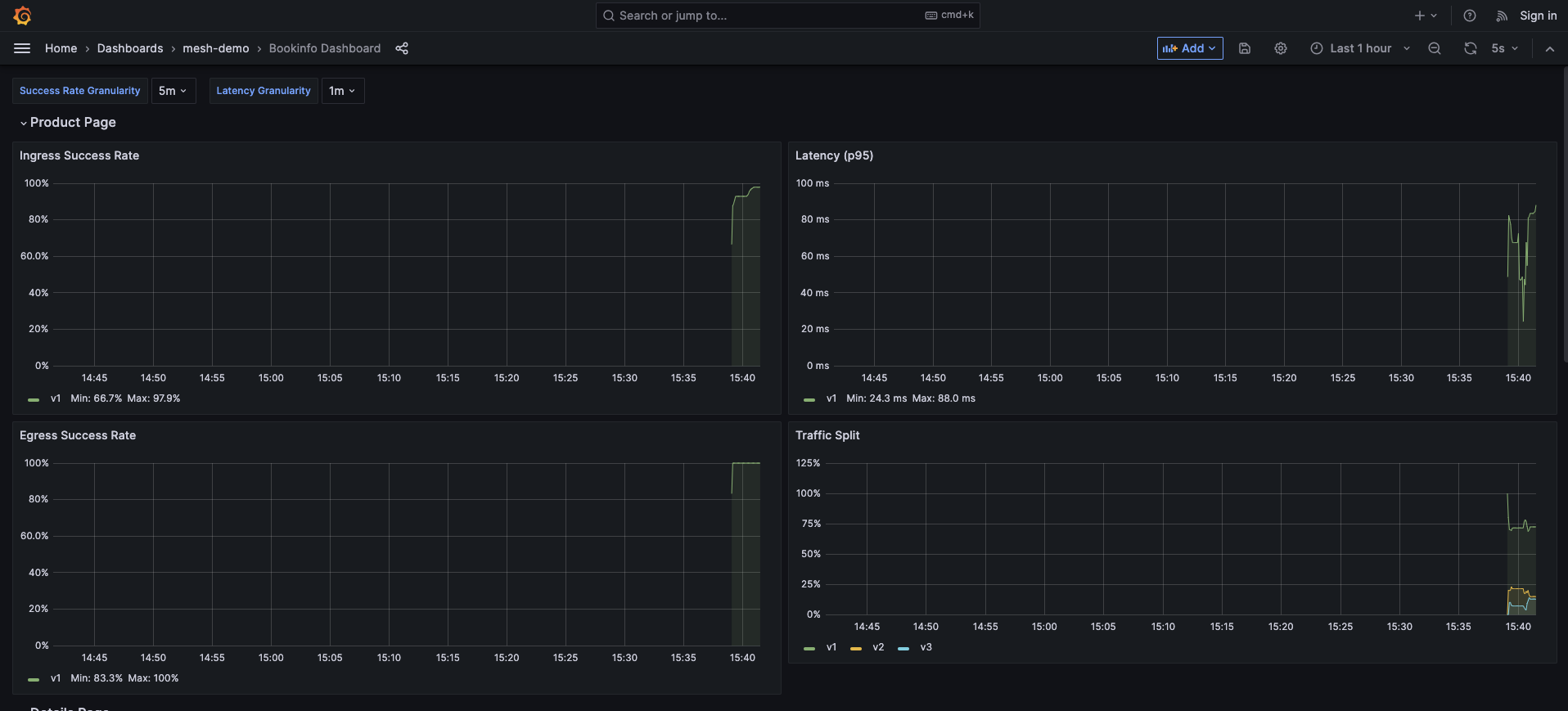

Open the LoadBalancer's EXTERNAL-IP in a browser.

Under Dashboards, open the mesh-demo folder and select Bookinfo Dashboard.

The dashboard looks like this.