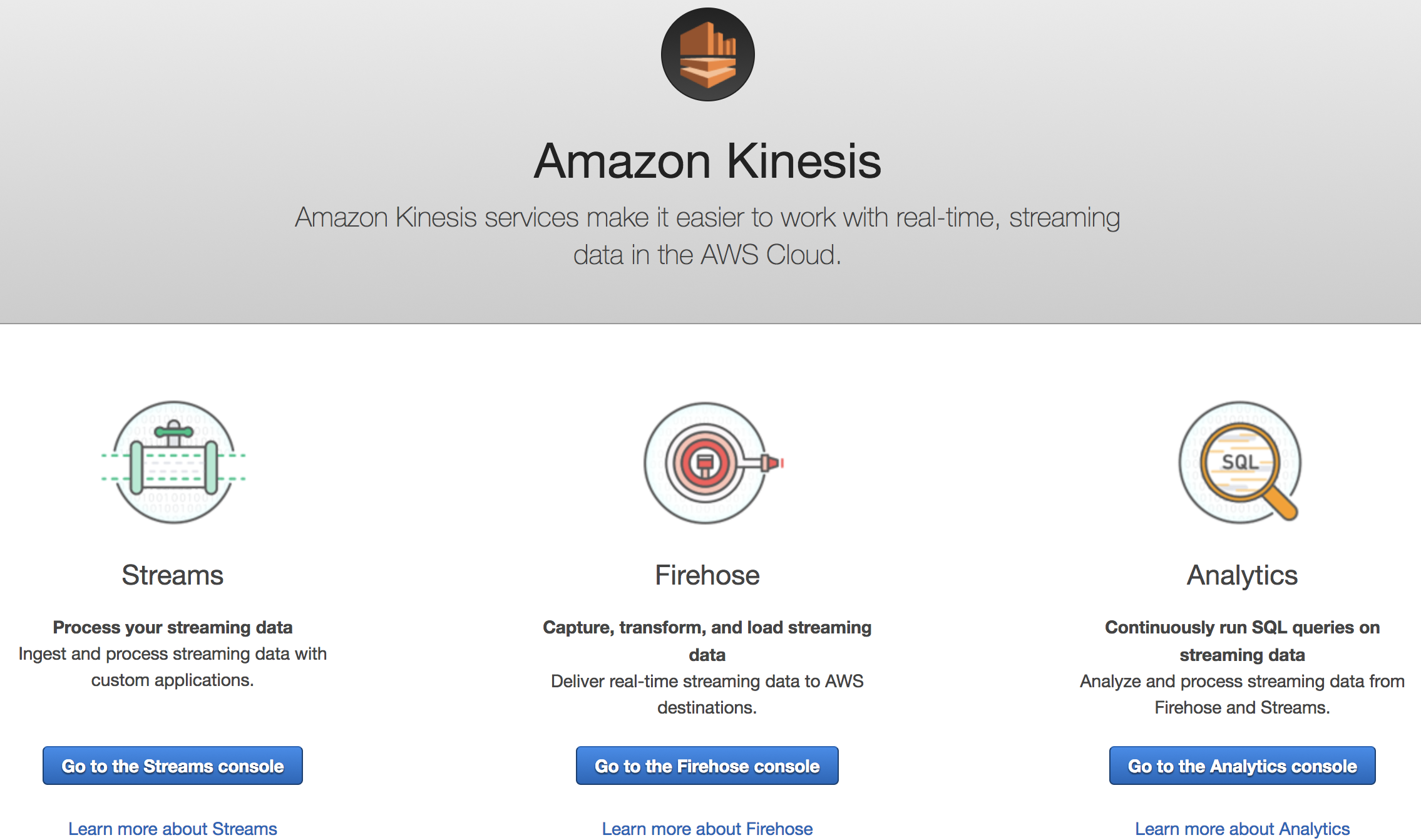

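What is Kinesis Firehose?

- Part of the Kinesis streaming data platform, alongside Streams, Analytics, and so on

- You run kinesis-agent on the server and define its processing in the config

- It runs on Java and pushes arbitrary logs to a data lake such as S3 at whatever timing you choose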

Kinesis-agent

Prerequisites

- Ubuntu, or rather Debian in general, is not on the list...

- Writing to Amazon Kinesis Streams Using Kinesis Agent

```
Your operating system must be either Amazon Linux AMI with version 2015.09 or later, or Red Hat Enterprise Linux version 7 or later.
```

Method

- The official documentation does not say so, but the README states that the agent can be used if you build it from source

```
Building from Source

The installation done by the setup script is only tested on the following OS Distributions:

Red Hat Enterprise Linux version 7 or later
Amazon Linux AMI version 2015.09 or later
Ubuntu Linux version 12.04 or later
Debian Linux version 8.6 or later
```
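As a rough pre-flight check, the README list above can be turned into a small Ruby helper. This is only a sketch: `setup_tested_on?` and `MINIMUM_VERSIONS` are names made up for illustration, and the mapping from os-release `ID` values (`rhel`, `amzn`, `ubuntu`, `debian`) to the README entries is my assumption, not something the README defines.

```ruby
# Minimum versions taken from the README list quoted above.
# The os-release ID strings used as keys are assumptions for illustration.
MINIMUM_VERSIONS = {
  "rhel"   => "7",
  "amzn"   => "2015.09",
  "ubuntu" => "12.04",
  "debian" => "8.6"
}.freeze

# Parse os-release style text ("KEY=value" lines) into a Hash.
def parse_os_release(text)
  text.each_line.with_object({}) do |line, fields|
    key, value = line.strip.split("=", 2)
    next if key.nil? || key.empty? || value.nil?
    fields[key] = value.delete('"')
  end
end

# True when the distribution and version are on the README's tested list.
# Note: Debian usually reports only the major version (e.g. "8"), so this
# check errs on the conservative side for Debian 8.x.
def setup_tested_on?(os_release_text)
  fields = parse_os_release(os_release_text)
  minimum = MINIMUM_VERSIONS[fields["ID"]]
  return false if minimum.nil? || fields["VERSION_ID"].nil?
  Gem::Version.new(fields["VERSION_ID"]) >= Gem::Version.new(minimum)
end
```

On a real host you would call it as `setup_tested_on?(File.read("/etc/os-release"))`.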

Environment

- Ubuntu 15.04 (for various reasons, please don't bring up the fact that it is out of support and not even an LTS release...)

- Terraform v0.10.7

- itamae (1.9.11)

Apply

Firehose delivery streams

terraform

- If you create this in the same region as your other services, there is no need to separate the structure

- In short, the layout is as follows

.
├── kinesis_firehose_delivery_stream.tf
├── main.tf
└── terraform_remote_state.tf

main.tf

// config
provider "aws" {
  region  = "ap-northeast-1"
  profile = "xxxxxx"
}

// backend
terraform {
  backend "s3" {
    bucket  = "xxxxxx"
    key     = "xxxxxx"
    region  = "ap-northeast-1"
    profile = "xxxxxx"
  }
}

terraform_remote_state.tf

data "terraform_remote_state" "xxxxxx" {
  backend = "s3"

  config {
    bucket  = "xxxxxx"
    key     = "xxxxxx"
    region  = "ap-northeast-1"
    profile = "xxxxxx"
  }
}

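kinesis_firehose_delivery_stream.tf

// syslog
resource "aws_kinesis_firehose_delivery_stream" "xxxxxx-syslog" {
  name        = "xxxxxx-syslog"
  destination = "s3"

  s3_configuration {
    // IAM role that Firehose assumes to write to the bucket (defined elsewhere)
    role_arn        = "${aws_iam_role.xxxxxx.arn}"
    // Buffer for up to 300 seconds or 5 MB before delivering to S3
    buffer_interval = "300"
    buffer_size     = "5"
    bucket_arn      = "arn:aws:s3:::xxxxxx"
    prefix          = "kinesis-firehose/rsyslog/syslog/"
  }
}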
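To make the two buffer settings concrete: Firehose delivers to S3 when either the buffer size or the buffer interval is reached, whichever comes first. The Ruby sketch below is only a toy model of that rule using the values from the resource above. `flush_due?` is a hypothetical helper, and treating 1 MB as 1024 * 1024 bytes is my assumption.

```ruby
# Values mirror buffer_size / buffer_interval in the delivery stream above.
BUFFER_SIZE_MB = 5
BUFFER_INTERVAL_SECONDS = 300

# Toy model: a delivery is due once either limit is reached.
# Assumes 1 MB = 1024 * 1024 bytes for illustration.
def flush_due?(buffered_bytes, seconds_since_last_flush)
  buffered_bytes >= BUFFER_SIZE_MB * 1024 * 1024 ||
    seconds_since_last_flush >= BUFFER_INTERVAL_SECONDS
end
```

With a quiet syslog the size limit is rarely hit, so in practice objects would show up in the bucket roughly every 300 seconds.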

itamae

- The layout is as follows

.
├── provisioner
│   └── cookbooks
│       ├── aws-kinesis
│       │   ├── default.rb
│       │   └── file
│       │       └── etc
│       │           └── aws-kinesis
│       │               └── agent.json

default.rb

# Install the packages kinesis-agent depends on
execute "update repository" do
  command "sudo apt-get update"
end

package 'openjdk-8-jdk' do
  action :install
end

# Install kinesis-agent: clone the source, then build and install it
execute "git clone" do
  command "cd /home/ubuntu && git clone https://github.com/awslabs/amazon-kinesis-agent.git"
  not_if 'test -e /home/ubuntu/amazon-kinesis-agent'
end

execute "build" do
  command "cd /home/ubuntu/amazon-kinesis-agent && ./setup --build"
end

execute "install" do
  command "cd /home/ubuntu/amazon-kinesis-agent && ./setup --install"
end

# Deploy agent.json
remote_file "/etc/aws-kinesis/agent.json" do
  source "file/etc/aws-kinesis/agent.json"
  mode "644"
  owner "root"
  group "root"
end

directory "/var/log/nginx" do
  mode "755"
end

# Enable the agent at boot and start it
service 'aws-kinesis-agent' do
  action [:enable, :start]
end

agent.json

{
  "cloudwatch.emitMetrics": true,
  "cloudwatch.endpoint": "https://monitoring.ap-northeast-1.amazonaws.com",
  "firehose.endpoint": "https://firehose.ap-northeast-1.amazonaws.com",
  "flows": [
    {
      "filePattern": "/var/log/syslog*",
      "deliveryStream": "xxxxxx-syslog",
      "initialPosition": "END_OF_FILE",
      "maxBufferAgeMillis": "60000",
      "maxBufferSizeBytes": "1048576",
      "maxBufferSizeRecords": "100",
      "minTimeBetweenFilePollsMillis": "100",
      "partitionKeyOption": "RANDOM",
      "skipHeaderLines": "0",
      "truncatedRecordTerminator": "\n"
    }
  ]
}

Add further flows to the flows array as needed.
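Since JSON cannot hold comments and every extra flow repeats the same settings, one option is to generate agent.json from a small Ruby script instead of writing it by hand. This is a sketch, not how the recipe here actually does it: `build_agent_config`, `agent_json`, and `FLOW_DEFAULTS` are hypothetical names, only a subset of the per-flow settings is carried over, and the second delivery stream in the usage note is a made-up example.

```ruby
require "json"

# A subset of the per-flow settings used by the syslog flow.
FLOW_DEFAULTS = {
  "initialPosition"      => "END_OF_FILE",
  "maxBufferAgeMillis"   => "60000",
  "maxBufferSizeBytes"   => "1048576",
  "maxBufferSizeRecords" => "100"
}.freeze

# flows: Hash of filePattern => deliveryStream name.
def build_agent_config(flows)
  flow_entries = flows.map do |file_pattern, delivery_stream|
    { "filePattern" => file_pattern, "deliveryStream" => delivery_stream }.merge(FLOW_DEFAULTS)
  end

  {
    "cloudwatch.emitMetrics" => true,
    "cloudwatch.endpoint"    => "https://monitoring.ap-northeast-1.amazonaws.com",
    "firehose.endpoint"      => "https://firehose.ap-northeast-1.amazonaws.com",
    "flows"                  => flow_entries
  }
end

# Render the configuration as pretty-printed JSON text.
def agent_json(flows)
  JSON.pretty_generate(build_agent_config(flows))
end
```

For example, `agent_json({ "/var/log/syslog*" => "xxxxxx-syslog", "/var/log/nginx/access.log*" => "xxxxxx-nginx-access" })` would produce a two-flow config, assuming a delivery stream with that second (hypothetical) name exists.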

Check

- Confirm that the process has started normally

- The total heap defaults to 512 MB, so it needs tuning

12037 999       20   0 4053620 244480  19052 S   1.1  1.5   3:47.96 /usr/bin/java -server -Xms32m -Xmx512m -XX:OnOutOfMemoryError="/bin/kill -9 %p" -cp /usr/share/aws-kinesis-agent/lib:/usr/share/aws-kinesis-+
    9 root      20   0       0      0      0 S   0.3  0.0   1:00.50 [rcuos/0]
   18 root      20   0       0      0      0 S   0.3  0.0   0:15.21 [rcuos/1]
    1 root      20   0   35896   6024   3688 S   0.0  0.0   0:02.82 /sbin/init -

- Check that no ERROR or WARN entries appear in /var/log/aws-kinesis-agent/aws-kinesis-agent.log

- Confirm that logs are being stored in the specified bucket
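The log check can be scripted rather than eyeballed. Here is a minimal Ruby sketch, assuming the agent writes the level as a standalone word (`ERROR` or `WARN`) somewhere on each line. `problem_lines` is a hypothetical helper name, and the exact log format is not something this post verifies.

```ruby
# Matches lines that carry an ERROR or WARN level as a whole word.
LEVEL_PATTERN = /\b(ERROR|WARN)\b/

# Return the lines of the given log text that look like errors or warnings.
def problem_lines(log_text)
  log_text.each_line.map(&:chomp).select { |line| line.match?(LEVEL_PATTERN) }
end
```

On the server, `puts problem_lines(File.read("/var/log/aws-kinesis-agent/aws-kinesis-agent.log"))` prints nothing when the log is clean.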

Monitor

- Use CloudWatch to monitor whether kinesis-agent is running normally

.
├── kinesis_firehose_delivery_stream.tf
├── cloudwatch_metric_alarm.tf
├── main.tf
└── terraform_remote_state.tf

- treat_missing_data lets you define how discontinuous data is handled

treat_missing_data - (Optional) Sets how this alarm is to handle missing data points. The following values are supported: missing, ignore, breaching and notBreaching. Defaults to missing.
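To get a feel for what the four values mean, here is a deliberately simplified Ruby model of a single evaluation period (`evaluation_periods = 1`) with a greater-than-or-equal comparison. Real CloudWatch looks at a wider range of datapoints when deciding, so treat `alarm_state` (a hypothetical function) as an approximation for intuition only.

```ruby
# Simplified model of one evaluation period.
# datapoint: the metric value for the period, or nil when no data arrived.
def alarm_state(datapoint, threshold:, treat_missing_data: "missing", previous_state: "OK")
  # With data present, only the threshold comparison matters.
  return datapoint >= threshold ? "ALARM" : "OK" unless datapoint.nil?

  case treat_missing_data
  when "breaching"    then "ALARM"             # missing counts as over the threshold
  when "notBreaching" then "OK"                # missing counts as within the threshold
  when "ignore"       then previous_state      # keep whatever state the alarm was in
  when "missing"      then "INSUFFICIENT_DATA" # default behaviour
  else raise ArgumentError, "unsupported treat_missing_data: #{treat_missing_data}"
  end
end
```

The agent only emits metrics while it has something to report, so `notBreaching` keeps a quiet period from flipping the alarm out of OK.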

```cloudwatch_metric_alarm.tf
resource "aws_cloudwatch_metric_alarm" "xxxxxx-syslog-ServiceErrors" {
  alarm_name          = "[KINESIS-AGENT] xxxxxx-syslog-ServiceErrors"
  alarm_description   = "Number of calls to PutRecords that have become service errors (except throttling errors) within the specified time period"
  comparison_operator = "GreaterThanOrEqualToThreshold"
  evaluation_periods  = "1"
  treat_missing_data  = "notBreaching"
  metric_name         = "ServiceErrors"
  namespace           = "AWSKinesisAgent"
  period              = "300"
  statistic           = "Sum"
  threshold           = "2"
  alarm_actions       = ["arn:aws:sns:ap-northeast-1:012345678910:xxxxxx"]
  ok_actions          = ["arn:aws:sns:ap-northeast-1:012345678910:xxxxxx"]

  dimensions {
    Destination = "DeliveryStream:xxxxxx-syslog"
  }
}
```

Caution

- The agent has a bug where it stops when a monitored log is rotated with copytruncate defined, so use create instead