Introduction

Here is a paper from the University of Cambridge that gives a very good summary of how machine translation had evolved as of 2019.

Our company develops Kiara, a translation chatbot plugin for Slack, in-house, and our lead engineer, Harada, serves as Japan's first Slack Developer Chapter Leader.

https://kiara-app.com/ (free trial available)

Driven by our passion for Slack and for the revolution in how people work, we will keep energizing the developer community.

Abstract

Neural Machine Translation: A Review

(Submitted on 4 Dec 2019)

The field of machine translation (MT), the automatic translation of written text from one natural language into another, has experienced a major paradigm shift in recent years. Statistical MT, which mainly relies on various count-based models and which used to dominate MT research for decades, has largely been superseded by neural machine translation (NMT), which tackles translation with a single neural network. In this work we will trace back the origins of modern NMT architectures to word and sentence embeddings and earlier examples of the encoder-decoder network family. We will conclude with a survey of recent trends in the field.

Conclusion

Neural machine translation (NMT) has become the de facto standard for large-scale machine translation in a very short period of time. This article traced back the origin of NMT to word and sentence embeddings and neural language models. We reviewed the most commonly used building blocks of NMT architectures – recurrence, convolution, and attention – and discussed popular concrete architectures such as RNNsearch, GNMT, ConvS2S, and the Transformer.

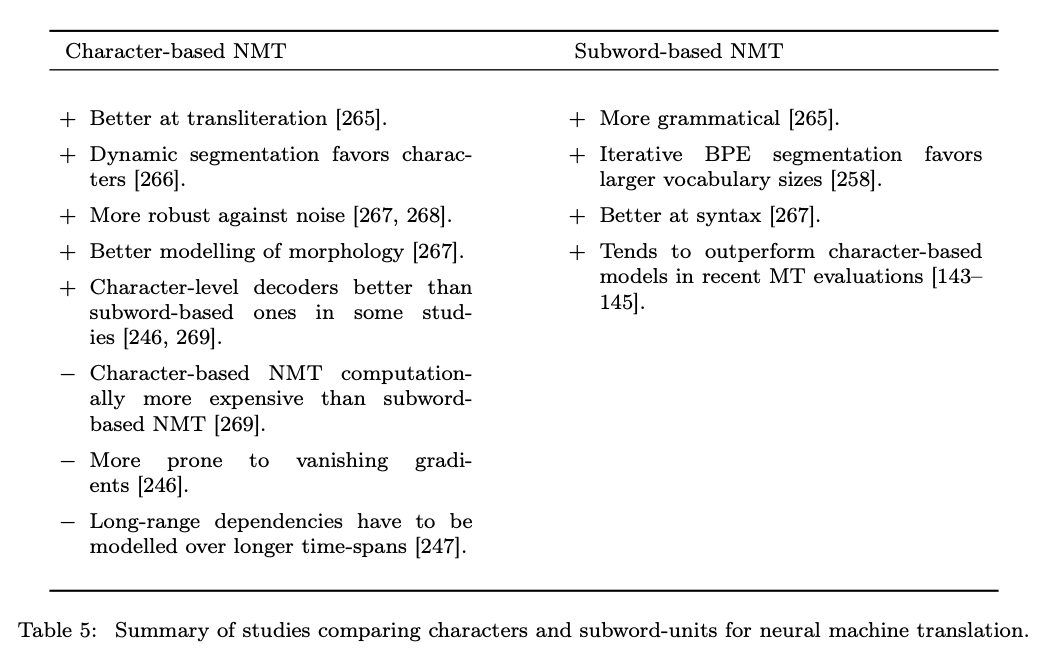

We discussed the advantages and disadvantages of several important design choices that have to be made to design a good NMT system with respect to decoding, training, and segmentation. We then explored advanced topics in NMT research such as explainability and data sparsity.
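Of the three building blocks named in the conclusion, attention is the easiest to show in a few lines. The sketch below is not taken from the paper. It assumes the scaled dot-product form used in the Transformer, handles a single query vector, and uses plain Python lists. The function names `softmax` and `dot_product_attention` and the toy vectors are hypothetical, chosen only for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Assumption: `keys` and `values` have one entry per source position,
    and every key has the same length as `query`.
    """
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Context vector: weighted average of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Toy example (made-up numbers): the query points the same way as the first key.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = dot_product_attention(query, keys, values)
```

In this toy case the first source position receives the largest weight because its key is most similar to the query, which is the intuition behind a decoder "attending" to the relevant source words.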

Main Topics

NMT = Neural Machine Translation

Word Embeddings

Phrase Embeddings

Sentence Embeddings

Encoder-Decoder Networks

Attentional Encoder-Decoder Networks

Recurrent NMT

Convolutional NMT

Self-attention-based NMT

Search problem in NMT

Greedy and beam search

Decoding direction

Generating diverse translations

Simultaneous translation

Open vocabulary NMT

NMT model errors

Reinforcement learning

Adversarial training

Explainable NMT

Multilingual NMT
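
To make the "Greedy and beam search" topic in the list above concrete, here is a minimal sketch of both decoding strategies. It is not code from the paper. `TOY_MODEL` is a made-up bigram table standing in for a real NMT decoder, and `next_log_probs` and `beam_search` are hypothetical names. Greedy search is treated here as beam search with a beam size of 1.

```python
import math

BOS, EOS = "<s>", "</s>"

# Hypothetical toy "model": next-token probabilities that depend only on
# the previous token. A real NMT decoder would condition on the source
# sentence and the full target prefix.
TOY_MODEL = {
    BOS: {"a": 0.6, "b": 0.4},
    "a": {"c": 0.55, "d": 0.45},
    "b": {"c": 0.9, "d": 0.1},
    "c": {EOS: 1.0},
    "d": {EOS: 1.0},
}


def next_log_probs(prefix):
    """Log-probabilities of each possible next token given the prefix."""
    return {tok: math.log(p) for tok, p in TOY_MODEL[prefix[-1]].items()}


def beam_search(beam_size, max_len=10):
    """Return (log_prob, tokens) of the best hypothesis found."""
    beams = [(0.0, [BOS])]
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            if seq[-1] == EOS:
                # Finished hypotheses are carried over unchanged.
                candidates.append((score, seq))
                continue
            for tok, lp in next_log_probs(seq).items():
                candidates.append((score + lp, seq + [tok]))
        # Keep only the `beam_size` highest-scoring hypotheses.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_size]
        if all(seq[-1] == EOS for _, seq in beams):
            break
    return beams[0]


greedy_score, greedy_seq = beam_search(beam_size=1)
beam_score, beam_seq = beam_search(beam_size=2)
```

With this toy table, greedy search commits to "a" at the first step and ends with a sequence of probability 0.6 × 0.55 = 0.33, while a beam of size 2 keeps "b" alive and finds the sequence "b c" with probability 0.4 × 0.9 = 0.36. That gap is the kind of search error the survey's decoding section is concerned with.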