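Creating the Lambda function with Terraform while managing its source code and deployment separately

Background

This started when I was creating an AWS Lambda function with Terraform and wondered, "Should the Lambda source code be managed by Terraform too?"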

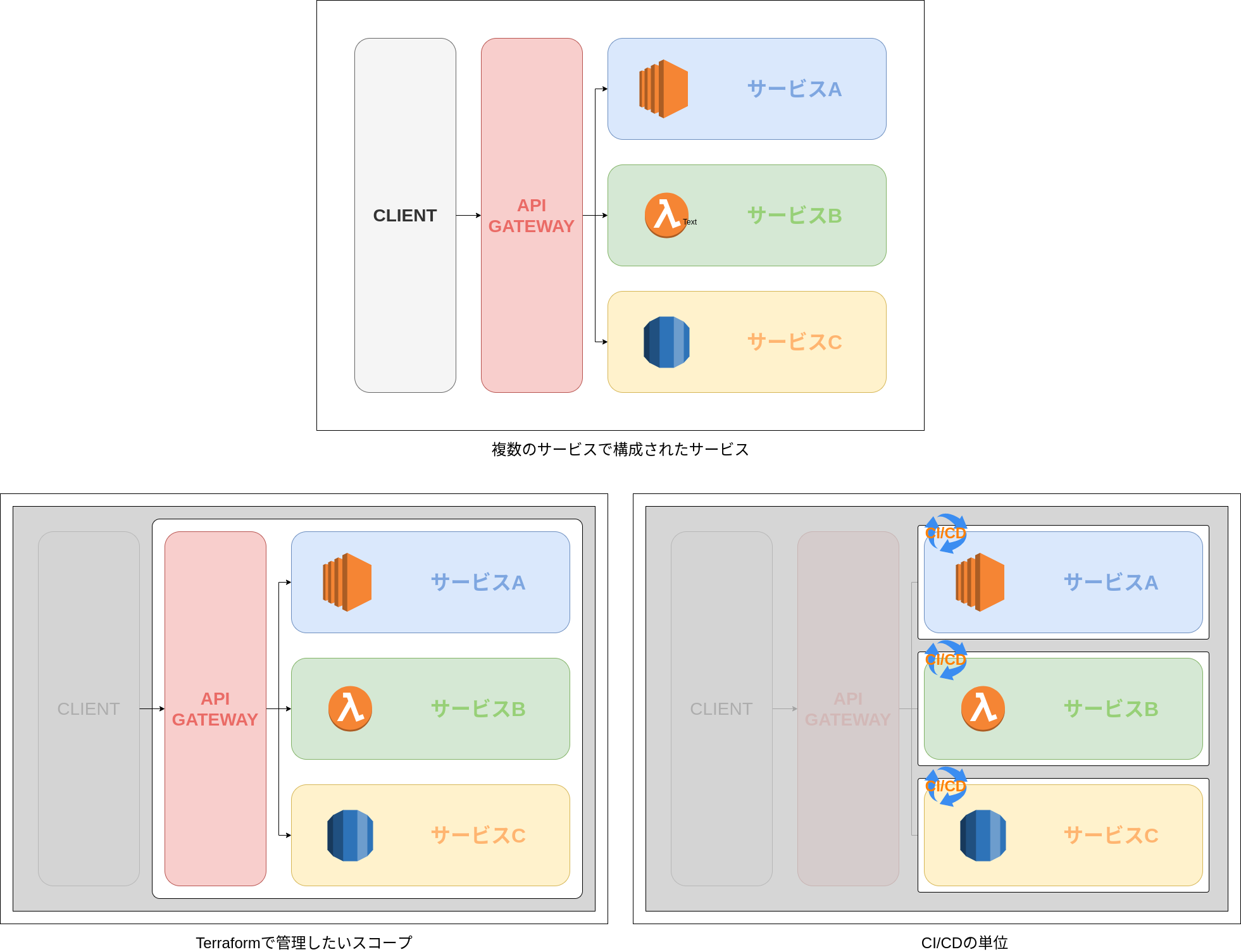

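Terraform is a tool for managing infrastructure configuration as code. Whether application source code belongs in it as well is open to debate. For a small project, managing everything with Terraform may be perfectly fine. For a system made up of several services, like the one in the diagram, the scope you want Terraform to manage and the unit of CI/CD start to diverge.

So in this article, the goal is to declare the Lambda function in Terraform while keeping the service's source code and deployment separate.

Approach

Terraform declares and creates only an empty Lambda function; it does not upload the application source code.

Deployment uses the AWS CLI to upload the source code to S3 and then switch the Lambda function's code reference to the uploaded object.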
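The two deployment steps can be sketched in Python as follows. This is only an illustration of the flow, not the method used in this article, which relies on the AWS CLI. The function name `deploy` and its parameters are hypothetical, and the two client arguments are assumed to behave like boto3's S3 and Lambda clients (`upload_file` and `update_function_code`).

```python
def deploy(s3_client, lambda_client, zip_path, bucket, key, function_name):
    """Upload a built package to S3, then point the Lambda function at it.

    `s3_client` and `lambda_client` are assumed to be boto3-style clients;
    every name in this sketch is illustrative.
    """
    # Step 1: put the new package into the bucket that Terraform created.
    s3_client.upload_file(zip_path, bucket, key)
    # Step 2: switch the function's code to the uploaded object.
    return lambda_client.update_function_code(
        FunctionName=function_name,
        S3Bucket=bucket,
        S3Key=key,
    )
```

Passing the clients in as arguments keeps the sketch free of any AWS dependency. With boto3 installed, the clients would presumably come from `boto3.client("s3")` and `boto3.client("lambda")`.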

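Implementation

Terraform side

Terraform creates the Lambda function, the permissions it needs, the S3 bucket, and so on.

Values that should really be variables are all hard-coded here; please bear with that.

The code is explained in its comments.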

# Provider declaration
provider "aws" {
  region  = "ap-northeast-1"
  profile = "default"
}

# Create the S3 bucket
# This is where the CI/CD side stores the Lambda source code
# Change the bucket name to your own
resource "aws_s3_bucket" "test_bucket" {
  bucket = "okamoto-tf-test-bucket"
  acl    = "private"

  tags = {
    Name = "okamoto-tf-test-bucket"
  }

  versioning {
    enabled = false # set to true for production use
  }
}

# Create the IAM role that the Lambda function assumes
resource "aws_iam_role" "lambda" {
  name = "iam_for_lambda"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Effect": "Allow",
      "Sid": ""
    }
  ]
}
EOF
}

# Generate the empty Lambda package that is used only for the initial creation
data "archive_file" "initial_lambda_package" {
  type        = "zip"
  output_path = "${path.module}/.temp_files/lambda.zip"

  source {
    content  = "# empty"
    filename = "main.py"
  }
}

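# Upload the generated empty Lambda package to S3
resource "aws_s3_bucket_object" "lambda_file" {
  bucket = aws_s3_bucket.test_bucket.id
  key    = "initial.zip"
  # Reference the archive's output path so the dependency on it is explicit
  source = data.archive_file.initial_lambda_package.output_path
}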

# Create the Lambda function
# Its code points at the empty placeholder package in S3
resource "aws_lambda_function" "lambda_test" {
  function_name = "lambda_test"
  role          = aws_iam_role.lambda.arn
  handler       = "main.handler"
  runtime       = "python3.8"
  timeout       = 120
  publish       = true

  s3_bucket = aws_s3_bucket.test_bucket.id
  s3_key    = aws_s3_bucket_object.lambda_file.id
}
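To make the `archive_file` step above more concrete, here is a minimal Python sketch of what the placeholder package contains: a zip with a single `main.py` whose only content is the comment `# empty`. The function name `build_placeholder_zip` is hypothetical, and the bytes it produces are not claimed to be identical to the archive Terraform writes.

```python
import io
import zipfile


def build_placeholder_zip(filename="main.py", content="# empty"):
    """Return the bytes of a zip holding a single placeholder source file."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        # One file at the archive root, mirroring the `source` block.
        archive.writestr(filename, content)
    return buffer.getvalue()
```

Because the placeholder `main.py` defines no `handler` function, the function would be expected to fail if it were invoked before the first real deployment. The placeholder exists only so that the Lambda function can be created.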

Now run terraform plan to check which resources will be created.

Output

$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are

indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_iam_role.lambda will be created

+ resource "aws_iam_role" "lambda" {

+ arn = (known after apply)

+ assume_role_policy = jsonencode(

{

+ Statement = [

+ {

+ Action = "sts:AssumeRole"

+ Effect = "Allow"

+ Principal = {

+ Service = "lambda.amazonaws.com"

}

+ Sid = ""

},

]

+ Version = "2012-10-17"

}

)

+ create_date = (known after apply)

+ force_detach_policies = false

+ id = (known after apply)

+ managed_policy_arns = (known after apply)

+ max_session_duration = 3600

+ name = "iam_for_lambda"

+ path = "/"

+ unique_id = (known after apply)

+ inline_policy {

+ name = (known after apply)

+ policy = (known after apply)

}

}

# aws_lambda_function.lambda_test will be created

+ resource "aws_lambda_function" "lambda_test" {

+ arn = (known after apply)

+ function_name = "lambda_test"

+ handler = "main.handler"

+ id = (known after apply)

+ invoke_arn = (known after apply)

+ last_modified = (known after apply)

+ memory_size = 128

+ package_type = "Zip"

+ publish = true

+ qualified_arn = (known after apply)

+ reserved_concurrent_executions = -1

+ role = (known after apply)

+ runtime = "python3.8"

+ s3_bucket = (known after apply)

+ s3_key = (known after apply)

+ signing_job_arn = (known after apply)

+ signing_profile_version_arn = (known after apply)

+ source_code_hash = (known after apply)

+ source_code_size = (known after apply)

+ timeout = 120

+ version = (known after apply)

+ tracing_config {

+ mode = (known after apply)

}

}

# aws_s3_bucket.test_bucket will be created

+ resource "aws_s3_bucket" "test_bucket" {

+ acceleration_status = (known after apply)

+ acl = "private"

+ arn = (known after apply)

+ bucket = "okamoto-tf-test-bucket"

+ bucket_domain_name = (known after apply)

+ bucket_regional_domain_name = (known after apply)

+ force_destroy = false

+ hosted_zone_id = (known after apply)

+ id = (known after apply)

+ region = (known after apply)

+ request_payer = (known after apply)

+ tags = {

+ "Name" = "okamoto-tf-test-bucket"

}

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

+ versioning {

+ enabled = false

+ mfa_delete = false

}

}

# aws_s3_bucket_object.lambda_file will be created

+ resource "aws_s3_bucket_object" "lambda_file" {

+ acl = "private"

+ bucket = (known after apply)

+ bucket_key_enabled = (known after apply)

+ content_type = (known after apply)

+ etag = (known after apply)

+ force_destroy = false

+ id = (known after apply)

+ key = "initial.zip"

+ kms_key_id = (known after apply)

+ server_side_encryption = (known after apply)

+ source = "./.temp_files/lambda.zip"

+ storage_class = (known after apply)

+ version_id = (known after apply)

}

Plan: 4 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ arn = (known after apply)

+ aws_s3_bucket_object_etag = (known after apply)

+ aws_s3_bucket_object_id = (known after apply)

+ aws_s3_bucket_object_version_id = (known after apply)

+ bucket_domain_name = (known after apply)

+ bucket_regional_domain_name = (known after apply)

+ hosted_zone_id = (known after apply)

+ id = (known after apply)

+ region = (known after apply)

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

─────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly

these actions if you run "terraform apply" now.

If everything looks fine, go on to apply the changes with terraform apply.

Output

$ terraform apply

Terraform used the selected providers to generate the following execution plan. Resource actions are

indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_iam_role.lambda will be created

+ resource "aws_iam_role" "lambda" {

+ arn = (known after apply)

+ assume_role_policy = jsonencode(

{

+ Statement = [

+ {

+ Action = "sts:AssumeRole"

+ Effect = "Allow"

+ Principal = {

+ Service = "lambda.amazonaws.com"

}

+ Sid = ""

},

]

+ Version = "2012-10-17"

}

)

+ create_date = (known after apply)

+ force_detach_policies = false

+ id = (known after apply)

+ managed_policy_arns = (known after apply)

+ max_session_duration = 3600

+ name = "iam_for_lambda"

+ path = "/"

+ unique_id = (known after apply)

+ inline_policy {

+ name = (known after apply)

+ policy = (known after apply)

}

}

# aws_lambda_function.lambda_test will be created

+ resource "aws_lambda_function" "lambda_test" {

+ arn = (known after apply)

+ function_name = "lambda_test"

+ handler = "main.handler"

+ id = (known after apply)

+ invoke_arn = (known after apply)

+ last_modified = (known after apply)

+ memory_size = 128

+ package_type = "Zip"

+ publish = true

+ qualified_arn = (known after apply)

+ reserved_concurrent_executions = -1

+ role = (known after apply)

+ runtime = "python3.8"

+ s3_bucket = (known after apply)

+ s3_key = (known after apply)

+ signing_job_arn = (known after apply)

+ signing_profile_version_arn = (known after apply)

+ source_code_hash = (known after apply)

+ source_code_size = (known after apply)

+ timeout = 120

+ version = (known after apply)

+ tracing_config {

+ mode = (known after apply)

}

}

# aws_s3_bucket.test_bucket will be created

+ resource "aws_s3_bucket" "test_bucket" {

+ acceleration_status = (known after apply)

+ acl = "private"

+ arn = (known after apply)

+ bucket = "okamoto-tf-test-bucket"

+ bucket_domain_name = (known after apply)

+ bucket_regional_domain_name = (known after apply)

+ force_destroy = false

+ hosted_zone_id = (known after apply)

+ id = (known after apply)

+ region = (known after apply)

+ request_payer = (known after apply)

+ tags = {

+ "Name" = "okamoto-tf-test-bucket"

}

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

+ versioning {

+ enabled = false

+ mfa_delete = false

}

}

# aws_s3_bucket_object.lambda_file will be created

+ resource "aws_s3_bucket_object" "lambda_file" {

+ acl = "private"

+ bucket = (known after apply)

+ bucket_key_enabled = (known after apply)

+ content_type = (known after apply)

+ etag = (known after apply)

+ force_destroy = false

+ id = (known after apply)

+ key = "initial.zip"

+ kms_key_id = (known after apply)

+ server_side_encryption = (known after apply)

+ source = "./.temp_files/lambda.zip"

+ storage_class = (known after apply)

+ version_id = (known after apply)

}

Plan: 4 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ arn = (known after apply)

+ aws_s3_bucket_object_etag = (known after apply)

+ aws_s3_bucket_object_id = (known after apply)

+ aws_s3_bucket_object_version_id = (known after apply)

+ bucket_domain_name = (known after apply)

+ bucket_regional_domain_name = (known after apply)

+ hosted_zone_id = (known after apply)

+ id = (known after apply)

+ region = (known after apply)

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:yes

aws_iam_role.lambda: Creating...

aws_s3_bucket.test_bucket: Creating...

aws_iam_role.lambda: Still creating... [10s elapsed]

aws_s3_bucket.test_bucket: Still creating... [10s elapsed]

aws_s3_bucket.test_bucket: Creation complete after 16s [id=okamoto-tf-test-bucket]

aws_s3_bucket_object.lambda_file: Creating...

aws_s3_bucket_object.lambda_file: Creation complete after 1s [id=initial.zip]

aws_iam_role.lambda: Still creating... [20s elapsed]

aws_iam_role.lambda: Still creating... [30s elapsed]

aws_iam_role.lambda: Creation complete after 33s [id=iam_for_lambda]

aws_lambda_function.lambda_test: Creating...

aws_lambda_function.lambda_test: Creation complete after 6s [id=lambda_test]

Apply complete! Resources: 4 added, 0 changed, 0 destroyed.

Outputs:

arn = "arn:aws:s3:::okamoto-tf-test-bucket"

aws_s3_bucket_object_etag = "6582ea9c16331bd37b52bf2c8bcca596"

aws_s3_bucket_object_id = "initial.zip"

aws_s3_bucket_object_version_id = "null"

bucket_domain_name = "okamoto-tf-test-bucket.s3.amazonaws.com"

bucket_regional_domain_name = "okamoto-tf-test-bucket.s3.ap-northeast-1.amazonaws.com"

hosted_zone_id = "Z2M4EHUR26P7ZW"

id = "okamoto-tf-test-bucket"

region = "ap-northeast-1"

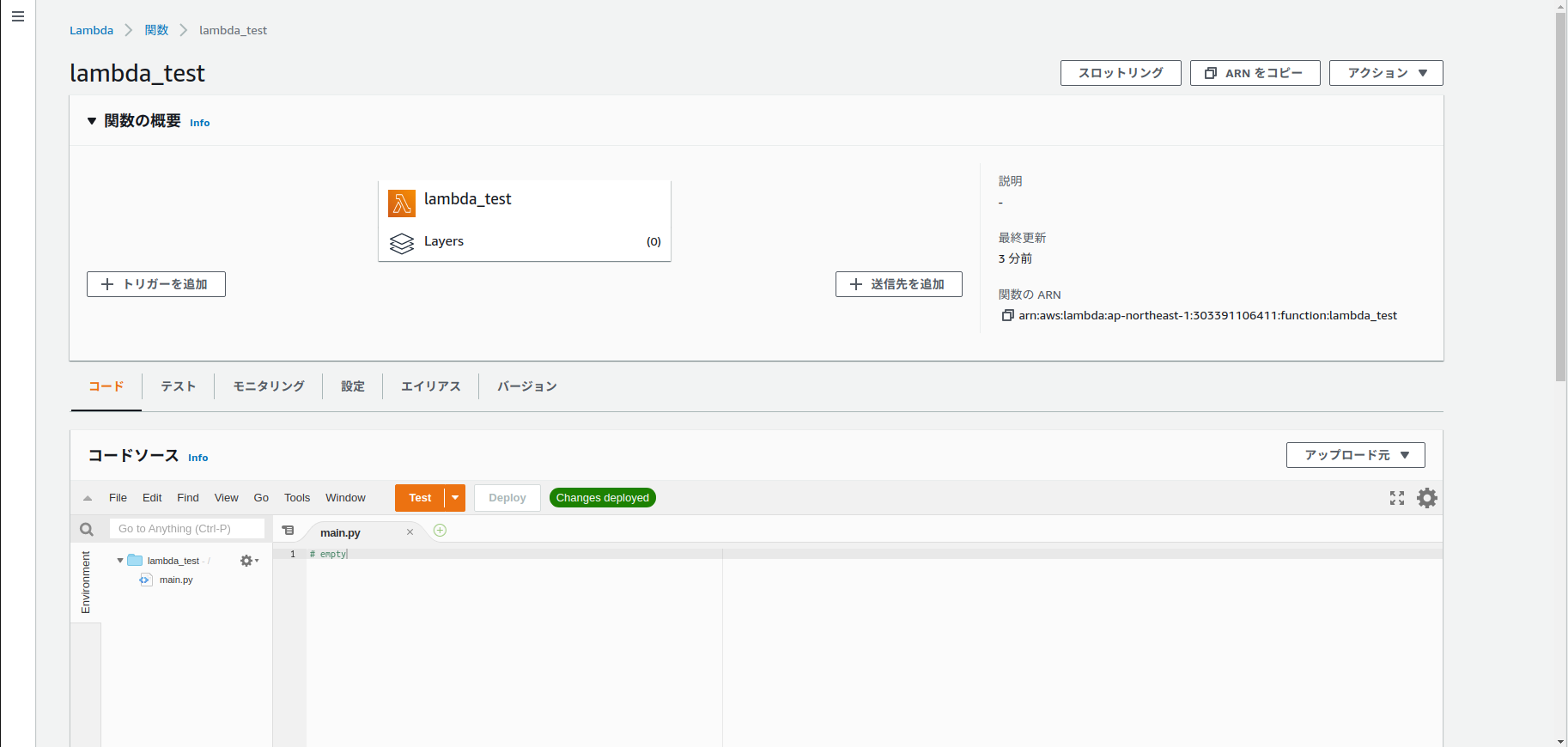

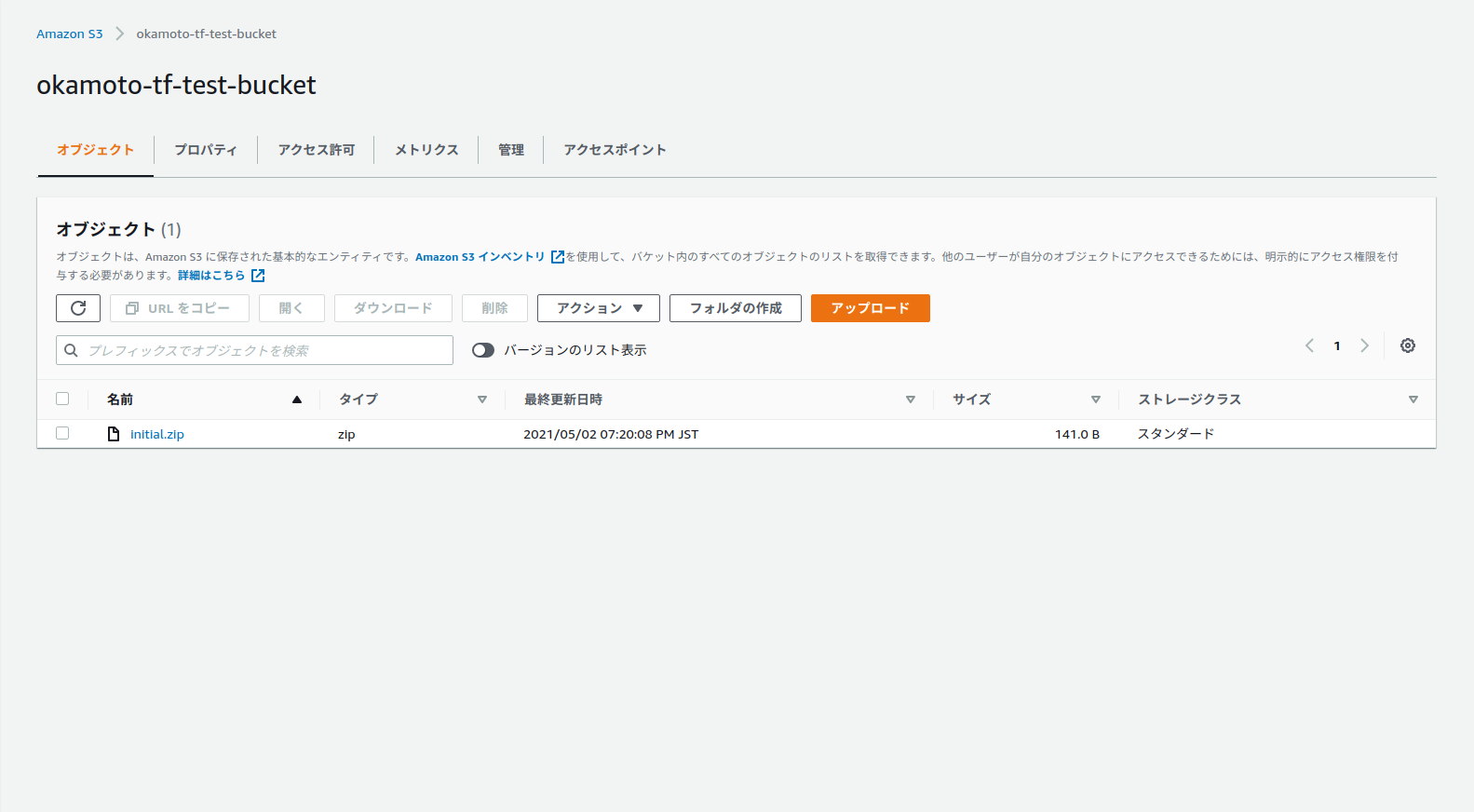

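Check the generated S3 bucket and Lambda function in the AWS console.

You can see that the bucket was created and that the zip file was placed in it.

That completes the Terraform side.

Deployment side

Here the Lambda source code is kept in src/main.py, the package information in requirements.txt, and the deploy commands in scripts/deploy.sh.

The folder structure is as follows.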

├── requirements.txt
├── scripts
│   └── deploy.sh
└── src
    └── main.py

requirements.txt and main.py are shown below, in that order. This is a minimal setup.

requests==2.25.1

import requests

def handler(*args, **kwargs):
    res = requests.get('https://api.github.com')
    return res.status_code

The deploy shell script is written with reference to the following documentation.

https://docs.aws.amazon.com/ja_jp/lambda/latest/dg/python-package.html

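#!/usr/bin/env bash
# Stop at the first failing command so that a broken package is never uploaded
set -euo pipefail

# Resolve the directory paths
CURRENT_PATH=$(cd "$(dirname "$0")"; pwd)
ROOT_PATH="$CURRENT_PATH/.."

# Install the Python packages
# (--system is specific to the pip packaged by Debian/Ubuntu; drop it if your pip rejects the option)
pip install --target ./package -r "$ROOT_PATH/requirements.txt" --system

# Zip the Python packages
cd package
zip ../deployment-package.zip -r ./*
cd ..

# Add the source code to the zip file
zip -g deployment-package.zip -j "$ROOT_PATH/src/main.py"

# Upload to S3 and repoint the Lambda function at the new object
aws s3 cp ./deployment-package.zip s3://okamoto-tf-test-bucket/
aws lambda update-function-code --function-name lambda_test --s3-bucket okamoto-tf-test-bucket --s3-key deployment-package.zip

# Remove the temporary files created during deployment
rm deployment-package.zip
rm -r package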
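For readers who prefer to see the packaging step without the `zip` command, the layout of the resulting archive can be sketched with Python's standard library. This is an illustrative equivalent rather than part of the article's script; `build_package` and its parameters are hypothetical names. Unlike `./*`, it also picks up top-level dotfiles in the package directory.

```python
import pathlib
import zipfile


def build_package(package_dir, source_file, out_zip):
    """Zip the installed dependencies plus the handler file at the archive root."""
    package_dir = pathlib.Path(package_dir)
    source_file = pathlib.Path(source_file)
    with zipfile.ZipFile(out_zip, "w", zipfile.ZIP_DEFLATED) as archive:
        # Roughly `cd package && zip -r ../deployment-package.zip ./*`:
        # store every file relative to the package directory.
        for path in sorted(package_dir.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(package_dir).as_posix())
        # Roughly `zip -g ... -j src/main.py`: drop the directory part.
        archive.write(source_file, source_file.name)
    return out_zip
```

The point to notice is that both the dependencies and `main.py` end up at the root of the archive, which is what lets the `main.handler` setting resolve and `import requests` succeed.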

Now run it.

$ bash ./scripts/deploy.sh

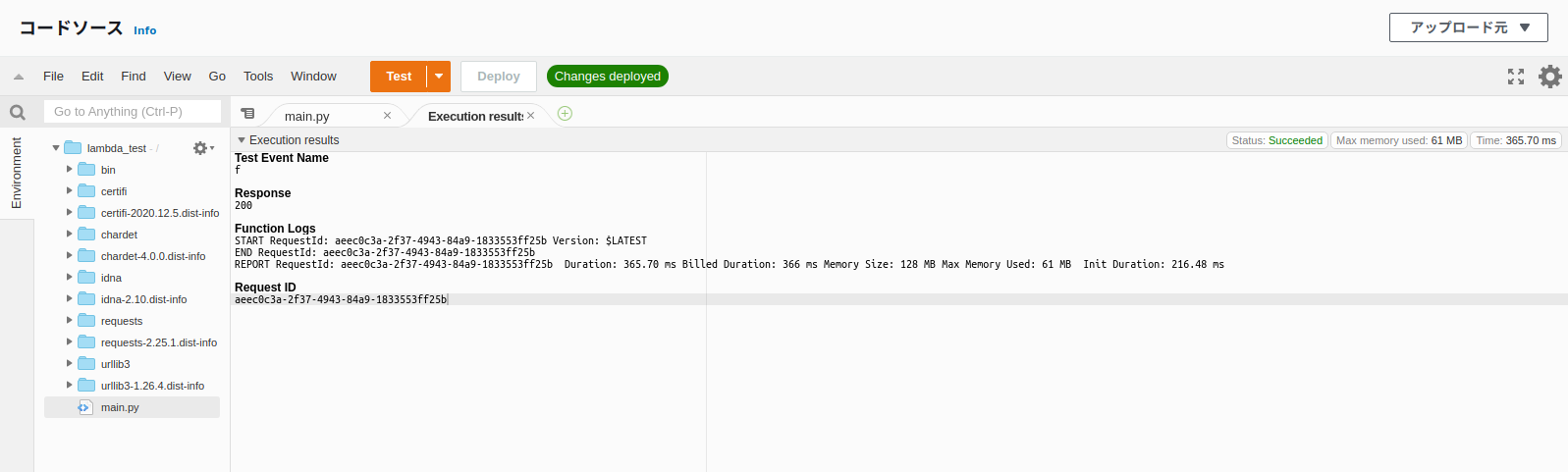

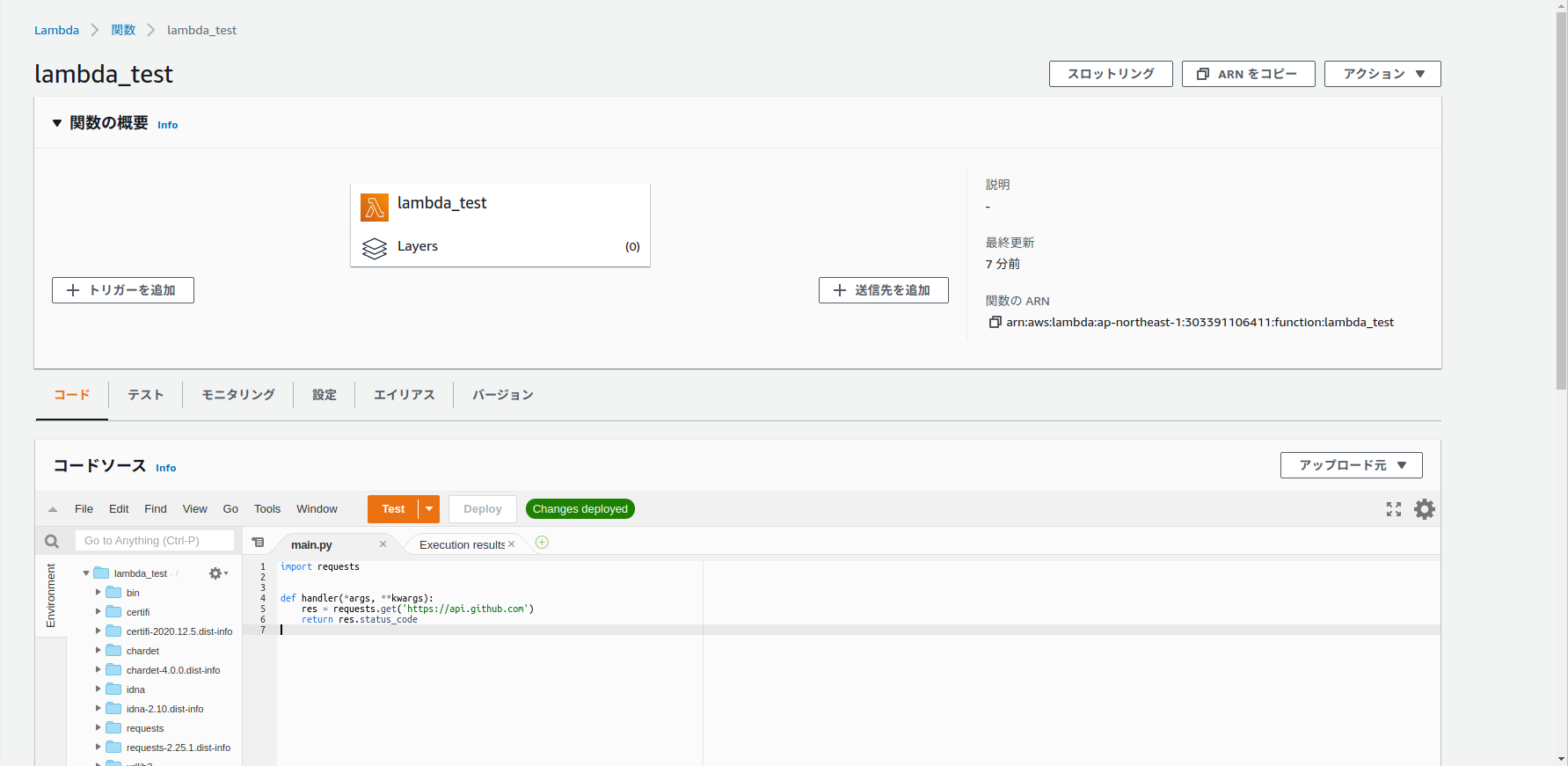

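Check the AWS console.

You can see that deployment-package.zip has been uploaded.

You can also see that the code was packaged correctly.

Running the function works as well: it imports the requests package and completes without failing.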
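The same check can be made from code instead of the console. The sketch below is an assumption-laden illustration: `invoke_and_read` is a hypothetical helper, and `lambda_client` is assumed to behave like a boto3 Lambda client, whose `invoke` call returns a `Payload` stream and, when the function raised an error, a `FunctionError` key.

```python
import json


def invoke_and_read(lambda_client, function_name):
    """Invoke the function synchronously and return its decoded JSON result."""
    response = lambda_client.invoke(FunctionName=function_name)
    # The result comes back as a stream of JSON-encoded bytes.
    payload = response["Payload"].read()
    # A failed execution is reported via this key, not via an exception.
    if "FunctionError" in response:
        raise RuntimeError(payload.decode("utf-8"))
    return json.loads(payload)
```

Since the handler returns `res.status_code`, a successful request to the GitHub API would be expected to come back as the integer HTTP status.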

And since the infrastructure configuration itself has not changed, terraform plan naturally shows no diff after the deployment.

$ terraform plan

aws_iam_role.lambda: Refreshing state... [id=iam_for_lambda]

aws_s3_bucket.test_bucket: Refreshing state... [id=okamoto-tf-test-bucket]

aws_s3_bucket_object.lambda_file: Refreshing state... [id=initial.zip]

aws_lambda_function.lambda_test: Refreshing state... [id=lambda_test]

No changes. Infrastructure is up-to-date.

This means that Terraform did not detect any differences between your configuration and the remote

system(s). As a result, there are no actions to take.
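One plausible reason the plan stays clean, offered here as my reading rather than something the article verifies, is that the configuration never sets `source_code_hash`, so Terraform has no expected package hash to compare against the deployed code. Lambda identifies a package by the base64-encoded SHA-256 of the zip, which can be sketched as follows (`code_sha256` is a hypothetical helper name).

```python
import base64
import hashlib


def code_sha256(zip_bytes):
    """Base64-encoded SHA-256 digest of a deployment package's bytes."""
    digest = hashlib.sha256(zip_bytes).digest()
    return base64.b64encode(digest).decode("ascii")
```

If `source_code_hash` were pinned in the Terraform configuration to the hash of the placeholder package, each external deployment would presumably show up as drift, which is exactly what this setup avoids.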

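Summary

The second half simply updated the Lambda function with the AWS CLI, but that was enough to separate Terraform from the Lambda source code and its deployment.

The source code written for this article is available here.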

https://github.com/Cohey0727/terraform_labmda_sample