1. Computers Getting Smarter than Humans

In May 2017, one of the biggest events in human history occurred: AlphaGo, an AI program developed by Google's DeepMind, beat the best Go player in the world.

Go, an abstract strategy board game with more than 2,000 years of history, is considered the most difficult board game for AI. It was expected to take many more years before AI could compete with humans at Go.

In 1997, IBM's Deep Blue beat the reigning world chess champion in a match. AlphaGo's accomplishment was amazing, but it still came about 20 years after Deep Blue's. Why did it take AI so long?

In short, the number of possible positions differs tremendously between chess and Go. After the first two moves (one by each player), there are 400 possible positions in chess; in Go, there are close to 130,000.
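Both figures come from simple counting. A minimal sketch, assuming a standard 19×19 Go board and ignoring passes: in chess each side has 20 legal first moves (16 pawn moves plus 4 knight moves), while in Go the first player can choose any of 361 points and the second any of the remaining 360.

```python
# Count the positions reachable after one move by each player.
CHESS_FIRST_MOVES = 20        # 16 pawn moves + 4 knight moves, same for White and Black
GO_POINTS = 19 * 19           # 361 intersections on a standard board

def chess_positions_after_two_moves() -> int:
    # Each of White's 20 first moves can be answered by any of Black's 20
    return CHESS_FIRST_MOVES * CHESS_FIRST_MOVES

def go_positions_after_two_moves() -> int:
    # Black picks any empty point, then White picks any of the remaining empty points
    return GO_POINTS * (GO_POINTS - 1)

print(chess_positions_after_two_moves())  # 400
print(go_positions_after_two_moves())     # 129960
```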

A Google researcher wrote the following in January 2016:

But as simple as the rules are, Go is a game of profound complexity. The search space in Go is vast -- more than a googol times larger than chess (a number greater than there are atoms in the universe!). As a result, traditional “brute force” AI methods -- which construct a search tree over all possible sequences of moves -- don’t have a chance in Go. To date, computers have played Go only as well as amateurs. Experts predicted it would be at least another 10 years until a computer could beat one of the world’s elite group of Go professionals.
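The "googol times larger" comparison can be checked at the order-of-magnitude level with the usual game-tree estimate b^d, where b is the typical number of legal moves per turn and d the typical game length. The values below (b ≈ 35, d ≈ 80 for chess; b ≈ 250, d ≈ 150 for Go) are commonly cited rough approximations, not exact counts, so treat this as a back-of-the-envelope sketch.

```python
import math

def log10_tree_size(branching: float, depth: int) -> float:
    """Return log10(branching ** depth) without building the huge number."""
    return depth * math.log10(branching)

# Rough, commonly cited approximations (assumptions, not exact values).
chess = log10_tree_size(35, 80)    # roughly 10**124
go = log10_tree_size(250, 150)     # roughly 10**360
ratio = go - chess                 # log10 of how many times larger Go's tree is

print(f"chess ~ 10^{chess:.0f}, go ~ 10^{go:.0f}, ratio ~ 10^{ratio:.0f}")
print("more than a googol (10^100) times larger:", ratio > 100)
```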

AI has finally beaten human beings at board games, and it is still evolving, really fast.

- Ref: "AI versus AI: Self-Taught AlphaGo Zero Vanquishes Its Predecessor"

Computers can now see patterns that are hidden from humans and predict what we can't. Even in image recognition, a task we humans do routinely, they outperformed us according to a report from more than two years ago.

- Ref: "Microsoft's Deep Learning Project Outperforms Humans In Image Recognition"

AI is now everywhere, whether we are aware of it or not. AI systems gather data and improve our lives. In the near future, new AI may even be created by AI itself.

- Ref: "GOOGLE AI CREATES ITS OWN ‘CHILD’ AI THAT’S MORE ADVANCED THAN SYSTEMS BUILT BY HUMANS"

- Ref: "The Military Just Created An AI That Learned How To Program Software"

("AI" is now a marketing buzzword. In my opinion, therefore, you don't need to take this kind of news too seriously, and there is no need to assume your daily life will change dramatically soon. Still, I'm really amazed by the speed of innovation.)

2. Computing After Moore's Law

As mentioned above, AI is now evolving very fast in many respects. Although many factors must still be considered when comparing human and AI capabilities, hardly anyone can dispute the speed of computational progress.

The latest AI systems can learn and improve themselves without human interaction. So can we just let them do their jobs and spend our time on whatever we want?

Unfortunately, the answer is no. Current (classical) computation has some limitations, and to overcome them we need a new computing paradigm: Quantum Computing.

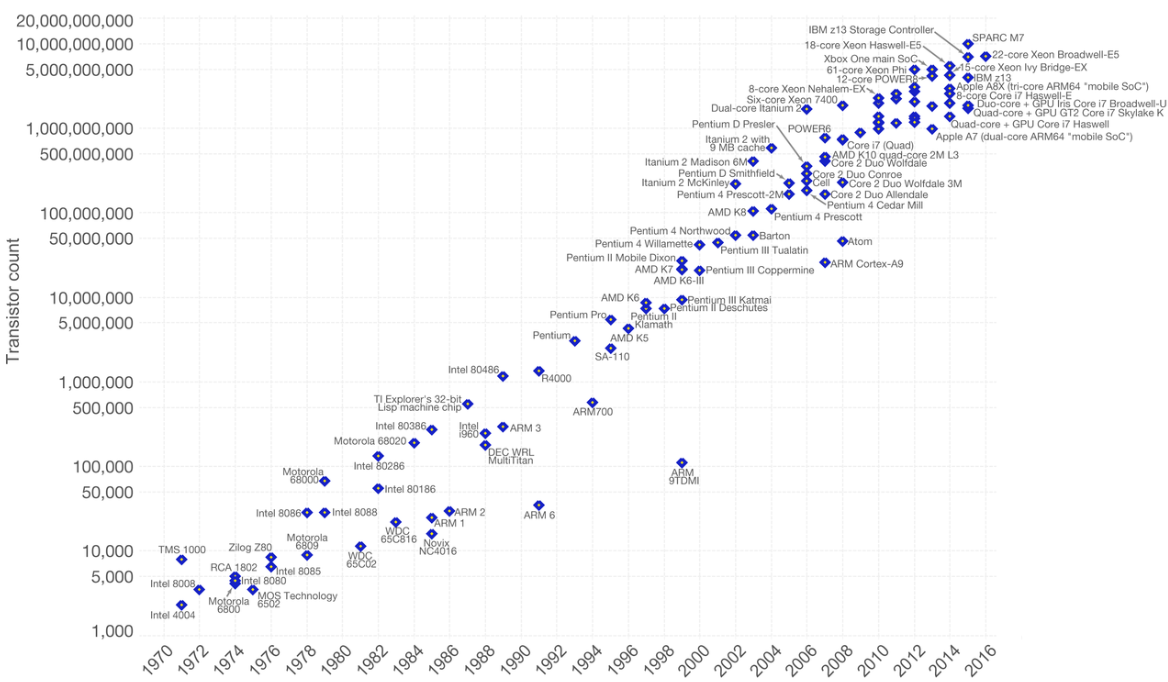

We are now approaching the limit on the number of transistors that can be placed on a chip.

Moore's law was proposed in 1965, and since then the industry has largely kept pace with it, as predicted.

Moore's law is the observation that the number of transistors in a dense integrated circuit doubles about every two years. The observation is named after Gordon Moore, the co-founder of Fairchild Semiconductor and Intel, whose 1965 paper described a doubling every year in the number of components per integrated circuit.
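The doubling rule can be written as N(t) = N0 * 2^((t - t0) / T), where T is the doubling period (about two years in the modern formulation, one year in the 1965 paper). A small sketch, using the Intel 4004 (1971, about 2,300 transistors) purely as an illustrative starting point; the printed values are what the rule predicts, not measured transistor counts.

```python
def moores_law(n0: float, start_year: int, year: int, doubling_years: float = 2.0) -> float:
    """Projected transistor count if the count doubles every `doubling_years` years."""
    return n0 * 2 ** ((year - start_year) / doubling_years)

# Illustrative baseline (assumption for this sketch): Intel 4004, 1971, ~2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2017):
    print(year, f"{moores_law(2300, 1971, year):,.0f}")
```

Passing `doubling_years=1.0` reproduces the faster yearly doubling that Moore originally described.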

However, it is now becoming difficult to make transistors physically any smaller.

And a technology roadmap for Moore’s Law maintained by an industry group, including the world’s largest chip makers, is being scrapped. Intel has suggested silicon transistors can only keep shrinking for another five years.

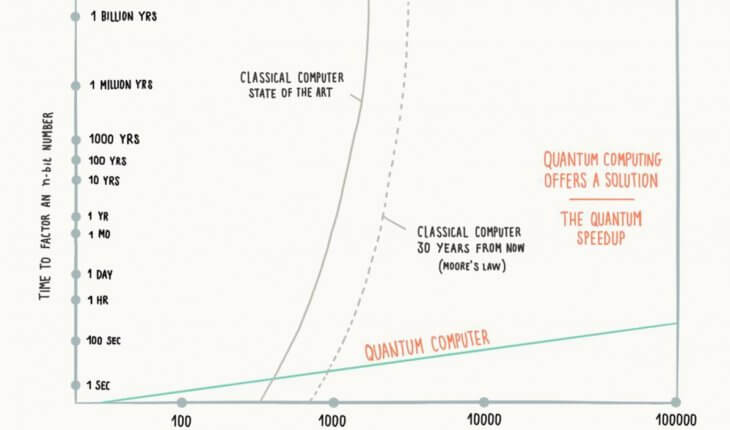

Also, for some types of computational tasks, quantum computers can in principle be dramatically faster than classical computers.

For example, public-key cryptosystems have been widely used to secure data for decades.

Roughly speaking, the security of widely used schemes such as RSA relies on the mathematical difficulty of factoring large numbers into primes. The bigger the number, the longer the factorization takes. Because it takes a classical computer far too long to factor large numbers, we consider such cryptosystems "practically" secure.
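To see why factoring matters, here is a toy RSA example with deliberately tiny primes (61 and 53, common textbook values). Anyone who can factor the public modulus n can recompute the private key; real keys use primes hundreds of digits long, which is what makes the trial-division step below hopeless on classical hardware. This is an illustration only, not a usable implementation, and it needs Python 3.8+ for `pow(e, -1, m)` and `math.isqrt`.

```python
import math

def make_keys(p: int, q: int, e: int = 17):
    """Toy RSA key generation from two primes (never use sizes like this in practice)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert math.gcd(e, phi) == 1, "e must be coprime with phi"
    d = pow(e, -1, phi)              # modular inverse of e (Python 3.8+)
    return (n, e), d

def encrypt(m: int, public_key) -> int:
    n, e = public_key
    return pow(m, e, n)

def decrypt(c: int, d: int, n: int) -> int:
    return pow(c, d, n)

def factor_by_trial_division(n: int):
    """Find a nontrivial factor pair of n; feasible only because n is tiny."""
    for candidate in range(2, math.isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n is prime")

public_key, private_d = make_keys(61, 53)
n, e = public_key
ciphertext = encrypt(65, public_key)
print("decrypted:", decrypt(ciphertext, private_d, n))  # decrypted: 65

# An attacker who only knows (n, e) factors n and rebuilds the private key.
p, q = factor_by_trial_division(n)
recovered_d = pow(e, -1, (p - 1) * (q - 1))
print("recovered key matches:", recovered_d == private_d)  # recovered key matches: True
```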

This chart shows how steeply the computation time grows as the number of bits to factor increases.

Once the number to factor exceeds about 1,000 bits, a current computer would need almost a million years to finish the computation. A quantum computer, by contrast, could in principle do it in a minute or so.
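The gap comes from asymptotic complexity. As a rough sketch: the best known classical method for large numbers, the general number field sieve, has a heuristic cost of about exp((64/9)^(1/3) (ln N)^(1/3) (ln ln N)^(2/3)), while Shor's quantum algorithm needs on the order of (log N)^3 operations in a simple implementation. The code compares only the orders of magnitude of these formulas with all constant factors dropped, so the results are operation-count estimates, not running times on any real machine.

```python
import math

def gnfs_log10_ops(bits: int) -> float:
    """log10 of the heuristic GNFS cost for a `bits`-bit number (constants dropped)."""
    ln_n = bits * math.log(2)                      # natural log of N, with N ~ 2**bits
    c = (64 / 9) ** (1 / 3)
    exponent = c * ln_n ** (1 / 3) * math.log(ln_n) ** (2 / 3)
    return exponent / math.log(10)                 # express e**exponent as a power of 10

def shor_log10_ops(bits: int) -> float:
    """log10 of a cubic-in-bits operation count, a simple estimate for Shor's algorithm."""
    return 3 * math.log10(bits)

for bits in (256, 512, 1024, 2048):
    print(f"{bits:5d} bits: classical ~10^{gnfs_log10_ops(bits):.0f}, "
          f"quantum ~10^{shor_log10_ops(bits):.0f}")
```

Doubling the key size adds several orders of magnitude to the classical estimate but less than one to the quantum estimate, which is the shape the chart illustrates.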

Even for Deep Learning, which lets AIs built on classical computing systems perform complicated, human-like tasks such as driving vehicles, some argue that the current architecture is not enough to do "any mental task that the average human can accomplish in a second or less".

- Ref: "Has Artificial Intelligence Hit a Wall?"

- Ref: "Should Artificial Intelligence Copy the Human Brain?"

Today’s deep-learning systems don’t resemble our brains. At best, they look like the outer portion of the retina, where a scant few layers of neurons do initial processing of an image. It’s very unlikely that such a network could be bent to all the tasks our brains are capable of. [...]

Dr. Marcus says that to get to “general intelligence”—which requires the ability to reason, learn on one’s own and build mental models of the world—will take more than what today’s AI can achieve. [...]

Until we figure out how to make our AIs more intelligent and robust, we’re going to have to hand-code into them a great deal of existing human knowledge, says Dr. Marcus. That is, a lot of the “intelligence” in artificial intelligence systems like self-driving software isn’t artificial at all. As much as companies need to train their vehicles on as many miles of real roads as possible, for now, making these systems truly capable will still require inputting a great deal of logic that reflects the decisions made by the engineers who build and test them.

(Note that this quotation is mostly for my own record: I found the article interesting, so I quoted it in this post. However, I don't think this kind of limitation in AI algorithms will be solved by Quantum Computing.)

Anyway, for reasons like these, various companies, including tech giants such as IBM, Google and Microsoft, are now rushing to commercialize quantum computing as the next stage of computation.

3. Flipping a "Qubit"

Whenever the need for quantum computing comes up, people say it is simply because we need faster computing. But why can quantum computers outperform classical computers?

In a classical computer, the basic unit of information is a bit, which can have only one of two values: 0 or 1. In a quantum computer, on the other hand, the basic unit of information is known as a quantum bit, or “qubit”. A qubit can be both 0 and 1 at the same time, a property called superposition.
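In the standard formalism, a qubit's state is a pair of complex amplitudes (α, β) for 0 and 1 with |α|² + |β|² = 1, and measuring it gives 0 with probability |α|² and 1 with probability |β|². A minimal pure-Python sketch (no quantum library assumed) that puts a qubit into an equal superposition with a Hadamard gate:

```python
import math

# A single-qubit state as amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1 + 0j, 0j)

def hadamard(state):
    """Apply the Hadamard gate: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Born rule: probabilities of measuring 0 and 1."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

plus = hadamard(ZERO)
print(probabilities(ZERO))            # (1.0, 0.0): behaves like a classical 0
print(probabilities(plus))            # approximately (0.5, 0.5): superposition
print(probabilities(hadamard(plus)))  # approximately (1.0, 0.0) again
```

The last line shows that a superposition is not just "a random bit": applying the gate twice returns the qubit to 0 with certainty, because the amplitudes interfere.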

A quantum computer, on the other hand, maintains a sequence of qubits, which can represent a one, a zero, or any quantum superposition of those two qubit states; a pair of qubits can be in any quantum superposition of 4 states, and three qubits in any superposition of 8 states. In general, a quantum computer with n qubits can be in an arbitrary superposition of up to $2^{n}$ different states simultaneously. (This compares to a normal computer that can only be in one of these $2^{n}$ states at any one time).
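The 2^n growth is easy to see in a simulation: describing n qubits takes a vector of 2^n amplitudes, one per classical bit string. A sketch in plain Python that builds the state of n qubits, each put through a Hadamard gate, using the tensor (Kronecker) product; the exponential size of this vector is also why simulating many qubits on a classical machine quickly becomes infeasible.

```python
import math
from itertools import product

def kron(a, b):
    """Tensor (Kronecker) product of two state vectors given as lists of amplitudes."""
    return [x * y for x, y in product(a, b)]

def uniform_superposition(n: int):
    """State of n qubits after applying a Hadamard gate to each |0>."""
    s = 1 / math.sqrt(2)
    plus = [s, s]            # H|0> = (|0> + |1>) / sqrt2
    state = [1.0]            # empty register
    for _ in range(n):
        state = kron(state, plus)
    return state

for n in (1, 2, 3, 10):
    state = uniform_superposition(n)
    print(n, "qubits ->", len(state), "amplitudes, total probability",
          round(sum(abs(a) ** 2 for a in state), 12))
```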

Some people explain qubit behavior with a coin-flipping analogy.

- Ref: "What makes a quantum computer so different (and so much faster) than a conventional computer?"

Once a flipped coin lands in your hand, it must be either heads[1] or tails[0]; it can't be both at the same time. This is what a classical computer is like. While the coin is spinning in the air, on the other hand, it can be regarded as heads[1] and tails[0] at the same time (at least for most humans; it depends on your dynamic visual acuity). Once the coin lands in your hand (once it is measured), its state has to be determined. This analogy describes the behavior of a quantum computer well.
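The "coin lands in your hand" step corresponds to measurement. A small sketch, assuming a single qubit in the equal superposition of the coin analogy: each measurement returns 0 or 1 at random with the Born-rule probabilities, and the state then collapses, so measuring the same qubit again gives the same answer. The random seed is fixed only to make runs reproducible.

```python
import math
import random

def measure(state, rng):
    """Measure a single qubit (alpha, beta); return the outcome and the collapsed state."""
    alpha, _ = state
    outcome = 0 if rng.random() < abs(alpha) ** 2 else 1
    collapsed = (1 + 0j, 0j) if outcome == 0 else (0j, 1 + 0j)
    return outcome, collapsed

rng = random.Random(42)
s = 1 / math.sqrt(2)
spinning_coin = (s + 0j, s + 0j)        # "in the air": equal superposition of 0 and 1

# Many freshly prepared coins: roughly half land on each side.
results = [measure(spinning_coin, rng)[0] for _ in range(10_000)]
print("fraction of 1s:", sum(results) / len(results))

# One coin measured repeatedly: after the first measurement the result is fixed.
outcome, landed = measure(spinning_coin, rng)
print("first:", outcome, "again:", [measure(landed, rng)[0] for _ in range(5)])
```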

In theory, this quantum behavior allows a quantum computer to perform many computations simultaneously and to solve a certain subset of difficult problems much faster than a classical computer.

4. Will Quantum Computer Make Current Computers Obsolete?

In my opinion, the answer to this question is also no. As described above, Quantum Computing has tremendous potential, but some researchers expect its power to be useful only for certain kinds of problems rather than in general.

Contrary to the popular image, their work has revealed even a quantum computer would face significant limitations. In particular, while a quantum computer could quickly factor large numbers, and thereby break most of the cryptographic codes used on the Internet today, there’s reason to think that not even a quantum computer could solve the crucial class of NP-complete problems efficiently. [...] Limitations of quantum computers have also been found for games of strategy like chess, as well as for breaking cryptographic hash functions.

In addition, and perhaps I should have mentioned this first, no quantum computer hardware has yet been developed for practical, commercial use.

This is because quantum computer hardware needs a very sophisticated, dedicated architecture. It depends on the implementation, but generally the system has to be kept at an extremely low temperature, close to absolute zero. Because a qubit's state involves very little energy, it is easily disturbed by heat from the external environment.

What if quantum computers become commercially available?

Even then, I don't think quantum computers will take the place of classical computers. Rather, the two will coexist, each handling the kind of computation it is best suited for.

Given that quantum computers need specially designed, dedicated hardware, I assume it will take decades before we have quantum computers at our personal disposal, the way Microsoft achieved its mission statement "A computer on every desk and in every home" in the era of classical computers.

The cost of the hardware also needs to be considered; it will not become cheap enough for individuals any time soon. In the next decade or so, quantum computers will probably be used for purposes such as business and education.

I think they will be offered as part of cloud services (Qubit as a Service, or QaaS?), because the two technologies fit together well. Quantum computer hardware needs to be carefully managed and operated, and it is difficult for individual companies to maintain the special facilities, equipment and trained engineers. Also, as stated above, quantum computers are useful only for certain specific purposes, so owning one to meet temporary demand may not be cost-efficient.

Offering quantum computers as a cloud service would address these kinds of customer concerns.

I think Microsoft is now heading in this direction. They offer the Q# programming language for implementing quantum algorithms.

This is how it works.

In this model, there are three levels of computation:

- Classical computation that reads input data, sets up the quantum computation, triggers the quantum computation, processes the results of the computation, and presents the results to the user.

- Quantum computation that happens directly in the quantum device and implements a quantum algorithm.

- Classical computation that is required by the quantum algorithm during its execution.
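The three levels can be illustrated without Q# itself. The sketch below is plain Python, not Q# or any Microsoft API, and all function names are hypothetical. It runs Deutsch's algorithm, which decides whether a one-bit function f is constant or balanced with a single oracle query, on a tiny local state-vector simulator, roughly the way a Q# program runs on a local simulator today. `classify` plays the host level (trigger the quantum step, interpret the measurement), `run_deutsch` stands in for the quantum device, and the classical function f evaluated inside the oracle loosely corresponds to classical computation needed during the algorithm.

```python
import math

def apply_h(state, qubit, n):
    """Hadamard on `qubit` (0 = leftmost) of an n-qubit state vector."""
    s = 1 / math.sqrt(2)
    mask = 1 << (n - 1 - qubit)
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        if i & mask:                      # basis state has this qubit = 1
            new[i & ~mask] += s * amp
            new[i] -= s * amp
        else:                             # basis state has this qubit = 0
            new[i] += s * amp
            new[i | mask] += s * amp
    return new

def apply_oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)> for a one-bit function f (x is the left qubit)."""
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        x, y = i >> 1, i & 1
        new[(x << 1) | (y ^ f(x))] += amp
    return new

def run_deutsch(f):
    """'Quantum' level: simulate Deutsch's circuit and return P(first qubit = 1)."""
    state = [0j, 1 + 0j, 0j, 0j]          # start in |x=0, y=1>
    state = apply_h(apply_h(state, 0, 2), 1, 2)
    state = apply_oracle(state, f)        # the oracle evaluates the classical f
    state = apply_h(state, 0, 2)
    return sum(abs(a) ** 2 for i, a in enumerate(state) if i & 0b10)

def classify(f):
    """Host level: trigger the quantum step and turn the measurement into an answer."""
    return "balanced" if run_deutsch(f) > 0.5 else "constant"

functions = {
    "f(x) = 0": lambda x: 0,
    "f(x) = 1": lambda x: 1,
    "f(x) = x": lambda x: x,
    "f(x) = NOT x": lambda x: 1 - x,
}
for name, f in functions.items():
    print(name, "->", classify(f))
```

A classical program would have to evaluate f twice to answer the same question; the simulated circuit calls the oracle once, which is the kind of advantage the quantum level is meant to provide.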

Currently you can run Q# programs on your own machine by simulating qubit behavior. In the future, you may be able to write Q# code on your local machine and have it call quantum resources in your Azure subscription when it needs the computational power of qubits.