Start: 2017/10/30

Finish: 2017/10/??

Very Similar Papers in the context of supervised term weighting

- 2015, New term weighting schemes with combination of multiple classifiers for sentiment analysis PDF READ

- 2007, Supervised and Traditional Term Weighting Methods for Automatic Text Categorization PDF

- 2005, Learn to Weight Terms in Information Retrieval Using Category Information PDF READ

- 2009, Learning term-weighting functions for similarity measures PDF

IDEAs of Application

- some supervised global term weighting schemes could be useful for scoring terms when genre-filtering

ABSTRACT

- Supervised term weighting for text categorization.

- Proposed a new concept, over-weighting, in the context of controlling over-weighting and under-weighting

- over-weighting: assigning larger weights to terms with more imbalanced distributions across categories

- under-weighting: the ratios between term weights become too small, because sublinear scaling and the bias term shrink the ratios

- Proposed a new supervised term weighting scheme, regularized entropy (re)

- Presented 3 regularization techniques: add-one smoothing, sublinear scaling, bias term

- Evaluated on many datasets for both topical classification and sentiment classification

- Other terminology:

- regularization and over-weighting & under-weighting

- scaling functions

- bias term
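A toy sketch of how the three regularization techniques act on term weights. This is not the paper's `re` formula: the count-ratio weight, the `log1p` scaling function, and all numbers are assumptions chosen only to show the direction of each effect.

```python
import math

def count_ratio_weight(pos, neg, smooth=0.0):
    """Hypothetical global weight: ratio of a term's counts in two categories."""
    return (pos + smooth) / (neg + smooth)

def weight_ratio(w_a, w_b, scale=lambda w: w, bias=0.0):
    """Ratio between two term weights after optional scaling and bias."""
    return (bias + scale(w_a)) / (bias + scale(w_b))

# Smoothing: a term seen 50 times in one category and never in the other.
# Without smoothing the ratio is undefined (division by zero).
smoothed = count_ratio_weight(50, 0, smooth=1.0)  # 51.0

# Two raw weights with a large ratio between them (made-up values).
w_strong, w_weak = 51.0, 1.5
raw = weight_ratio(w_strong, w_weak)                                   # 34.0
sublinear = weight_ratio(w_strong, w_weak, scale=math.log1p)           # smaller ratio
biased = weight_ratio(w_strong, w_weak, scale=math.log1p, bias=1.0)    # smaller again
too_biased = weight_ratio(w_strong, w_weak, scale=math.log1p, bias=100.0)

print(raw, sublinear, biased, too_biased)
```

With a very large bias the ratio approaches 1, so the two terms become almost indistinguishable. That is the under-weighting side of the trade-off.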

INTRODUCTION

REVIEW of TERM WEIGHTING SCHEMES

weight(term, doc) = local_weight_of_term × global_weight_of_term × normalization_factor_of_doc
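A minimal sketch of this three-factor decomposition. Raw term frequency (local), unsupervised idf (global), and cosine normalization are assumed here as familiar stand-ins; a supervised scheme would swap in a category-aware global factor. The toy corpus and function names are made up for illustration.

```python
import math
from collections import Counter

# Toy corpus: each document is a list of tokens (hypothetical data).
docs = [
    ["good", "movie", "good", "plot"],
    ["bad", "movie"],
    ["good", "acting"],
]

def local_weight(term, doc):
    """Local factor: raw term frequency within one document."""
    return Counter(doc)[term]

def global_weight(term, corpus):
    """Global factor: idf = log(N / df), computed over the whole corpus."""
    df = sum(1 for d in corpus if term in d)
    return math.log(len(corpus) / df)

def weigh(doc, corpus):
    """weight = local x global x normalization (cosine, i.e. unit L2 norm)."""
    raw = {t: local_weight(t, doc) * global_weight(t, corpus) for t in set(doc)}
    norm = math.sqrt(sum(w * w for w in raw.values()))
    factor = 1.0 / norm if norm > 0 else 0.0
    return {t: w * factor for t, w in raw.items()}

weights = weigh(docs[0], docs)
print(weights)
```

In the first document, "plot" gets the largest weight because it occurs in no other document, while "movie" gets the smallest.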

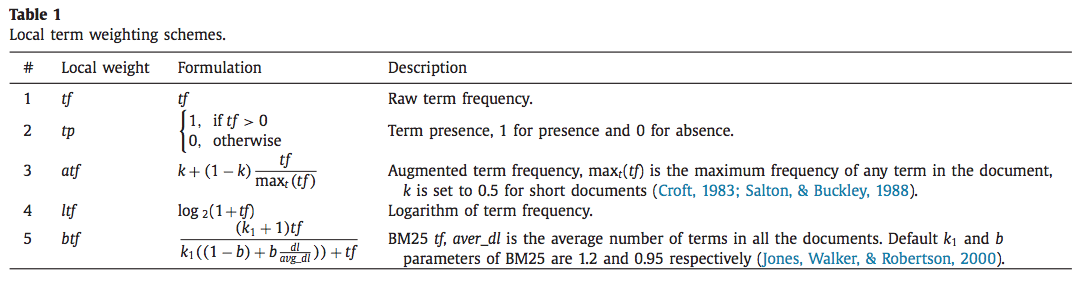

- Local term weighting

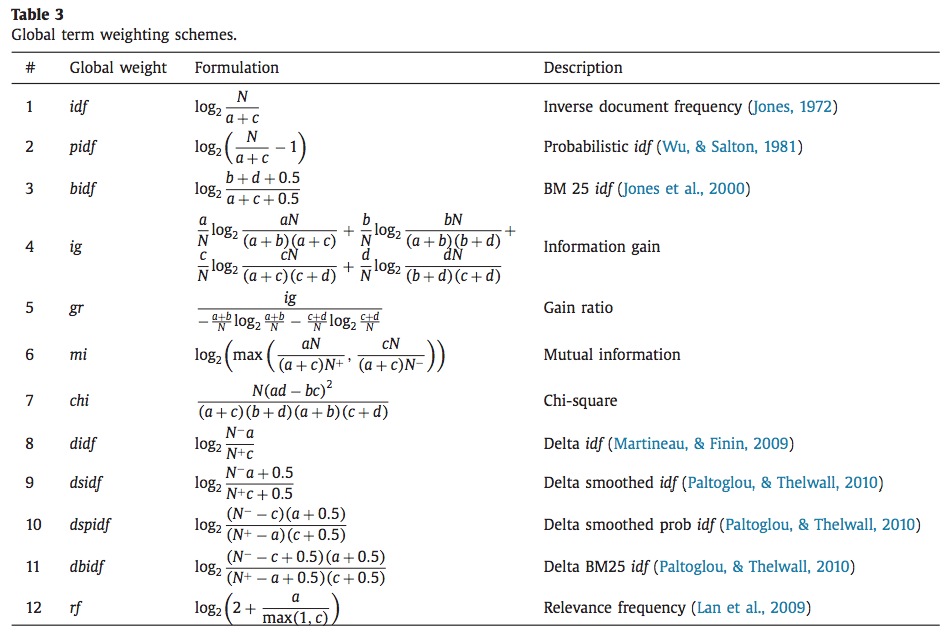

- Global term weighting