Overview


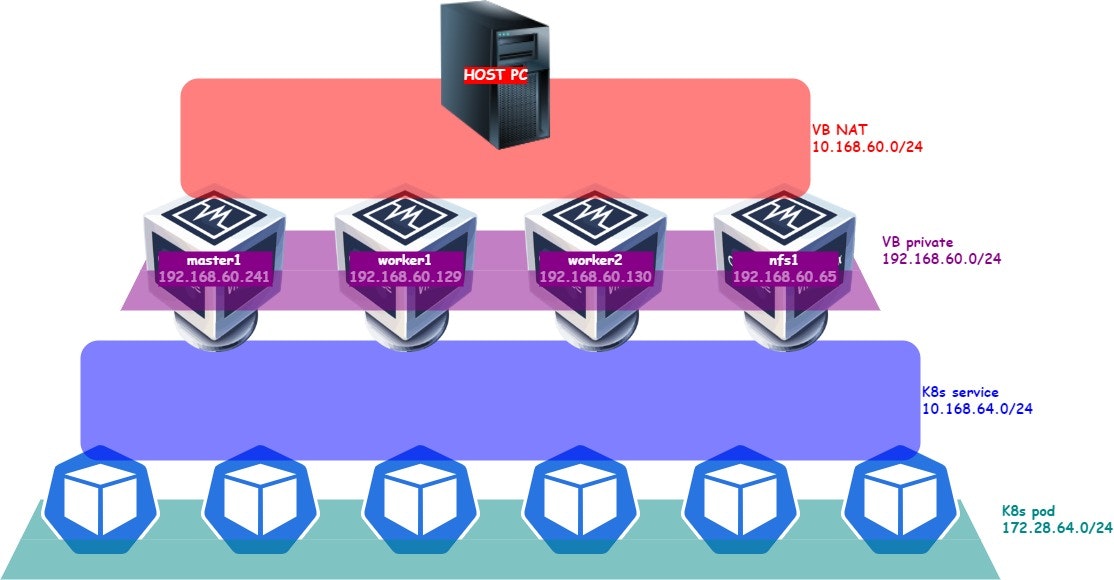

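Following a reference article, I built a local Kubernetes (K8s) environment on a single PC using VirtualBox (VB) virtual machines (VMs): one master node, two worker nodes, and one NFS server (for PersistentVolumes).

This post assumes that VirtualBox and Vagrant are already installed on the host PC.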

HOST PC SPECS

【OS】Ubuntu 18.04

【CPU】Intel Core i9-10900KF

【RAM】32GB

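Procedure

1. Set the IP addresses

Broadly speaking, there are two kinds of networks: service networks that connect to the outside, and management LANs that are closed internally. (In the image in the overview, the service networks are drawn vertically and the management LANs horizontally.)

Choose address ranges for these that do not overlap with IP addresses already in use on your company network.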

| Name | IP range | Description |
|---|---|---|
| VB NAT | 10.168.60.0/24 | Service network between the host PC and the VB VMs |
| VB private | 192.168.60.0/24 | Management LAN among the VB VMs only |
| K8s service | 10.168.64.0/24 | Service network between the VB VMs and K8s services (pods) |
| K8s pod | 172.28.64.0/24 | Management LAN among the K8s pods only |

Note that the VB private network is restricted to addresses within 192.168.56.0/21.
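The restriction above is easy to check by hand, but a small script avoids mistakes when the addresses change. The following is a minimal sketch, not part of the original setup: the helper names `ip_to_int` and `in_cidr` are my own, and it only tests whether each private IP used in this article falls inside 192.168.56.0/21.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The subshell keeps the IFS change and positional parameters local.
ip_to_int() {
  ( IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}

# Return 0 if address $1 lies inside CIDR block $2 (e.g. 192.168.56.0/21).
in_cidr() {
  local addr net prefix mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Check the four private IPs assigned to the VMs in this article.
for ip in 192.168.60.65 192.168.60.129 192.168.60.130 192.168.60.241; do
  if in_cidr "$ip" 192.168.56.0/21; then
    echo "$ip: inside 192.168.56.0/21"
  else
    echo "$ip: OUTSIDE 192.168.56.0/21"
  fi
done
```

A /21 starting at 192.168.56.0 covers third octets 56 through 63, so 192.168.60.x is allowed while 192.168.64.x would not be.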

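2. Create the Vagrant definition file

As a rule, define the VMs in the reverse of the order in which they should stop. In other words, the VM written first is the one that should stay up the longest.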

Vagrant.configure("2") do |config|

#

# [2-0-1] Set Global Options

#

config.vm.box = "ubuntu/focal64"

config.vm.provision :shell, path: "bootstrap.sh"

config.vm.box_check_update = false

config.vm.synced_folder ".", "/home/vagrant/synced_folder"

#

# [2-1-1] Build VM for NFS Server

#

config.vm.define "nfs1" do |cf|

cf.vm.hostname = "nfs1.hogehoge.lab"

cf.vm.network "private_network", ip: "192.168.60.65"

cf.vm.provider "virtualbox" do |vb|

vb.memory = 4096

vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"]

disk_file = "/mnt/ntfs/vagrant/hogehoge_lab/.nfs/nfs1.vmdk"

unless File.exist?(disk_file)

vb.customize ['createhd', '--filename', disk_file, '--format', 'VMDK', '--size', 512 * 1024]

end

vb.customize ['storageattach', :id, '--storagectl', 'SCSI', '--port', 2, '--device', 0, '--type', 'hdd', '--medium', disk_file]

end

end

#

# [2-2-1] Build VM for Worker Node 1

#

config.vm.define "worker1" do |cf|

cf.vm.hostname = "worker1.hogehoge.lab"

cf.vm.network "private_network", ip: "192.168.60.129"

cf.vm.provider "virtualbox" do |vb|

vb.memory = 4096

vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"]

end

end

#

# [2-2-2] Build VM for Worker Node 2

#

config.vm.define "worker2" do |cf|

cf.vm.hostname = "worker2.hogehoge.lab"

cf.vm.network "private_network", ip: "192.168.60.130"

cf.vm.provider "virtualbox" do |vb|

vb.memory = 4096

vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"]

end

end

#

# [2-3-1] Build VM for Master Node 1

#

config.vm.define "master1" do |cf|

cf.vm.hostname = "master1.hogehoge.lab"

cf.vm.network "private_network", ip: "192.168.60.241"

cf.vm.provider "virtualbox" do |vb|

vb.memory = 4096

vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"]

end

end

end

I am new to blogging and not yet sure how much detail makes for a readable article, so I will explain things at the level that feels right to me as of October 2022.

★ Common (global option) section

config.vm.box = "ubuntu/focal64"

config.vm.provision :shell, path: "bootstrap.sh"

config.vm.box_check_update = false

config.vm.synced_folder ".", "/home/vagrant/synced_folder"

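The options are largely self-explanatory from their names. Each one is also described on the official Vagrant site, so refer to it as needed.

Changing synced_folder from its default is a workaround of last resort.

With the box image ubuntu/bionic64 there is no problem.

With ubuntu/focal64, however, /vagrant ends up owned by root instead of vagrant, and I could not resolve the resulting failure to mount the shared directory.

Note: I also tried the following two settings, but neither had any effect. I would like to know the right way to do this.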

config.vm.synced_folder ".", "/vagrant", owner: "vagrant", group: "vagrant"

config.vm.synced_folder ".", "/vagrant", mount_options: ["uid=1000", "gid=1000"]

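A future update of the box image may resolve this.

As for bootstrap.sh, which runs when each VM is created: it would be neater to define separate processing for each VM.

This time it is kept light: the usual package upgrade, the bridge-network requirements for K8s, and turning swap off.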

#!/usr/bin/env bash

# APT Upgrade

sudo apt-get update

sudo apt-get upgrade -yV

sudo apt-get dist-upgrade -yV

sudo apt-get autoremove -y

sudo apt-get autoclean -y

# K8s Network Plugin Requirements

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo modprobe br_netfilter

sudo sysctl --system

# Swap disabled

sudo swapoff -a

sudo reboot
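As noted above, it would be neater if bootstrap.sh did different work on each VM. One way is to branch on the hostname inside the single shared script. The following is a minimal sketch under that assumption: `role_for_host` and the role labels are hypothetical names of mine, not part of the original script. (Vagrant also allows a provisioner to be declared inside each `config.vm.define` block, which is another way to get per-VM processing.)

```shell
#!/usr/bin/env bash
# Map a hostname (short or fully qualified) to a role label.
# The labels "nfs", "worker" and "master" are hypothetical.
role_for_host() {
  case "${1%%.*}" in
    nfs*)    echo "nfs" ;;
    worker*) echo "worker" ;;
    master*) echo "master" ;;
    *)       echo "unknown" ;;
  esac
}

this_host=${HOSTNAME:-$(uname -n)}
role=$(role_for_host "$this_host")
echo "host ${this_host} -> role ${role}"

# A real bootstrap.sh could then branch on the role, for example:
#   case "$role" in
#     nfs)    ...NFS server specific steps... ;;
#     worker) ...worker specific steps... ;;
#   esac
```

With the hostnames from the Vagrantfile, nfs1.hogehoge.lab maps to nfs, worker1 and worker2 to worker, and master1 to master.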

★ Common VM definition (using master1 as the example)

config.vm.define "master1" do |cf|

cf.vm.hostname = "master1.hogehoge.lab"

cf.vm.network "private_network", ip: "192.168.60.241"

cf.vm.provider "virtualbox" do |vb|

vb.memory = 4096

vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"]

end

end

This too should be clear from the option names. The VirtualBox-specific options are also described on the official site, so refer to it as needed.

In the VB NAT definition vb.customize ["modifyvm", :id, "--natnet1", "10.168.60/24"], the final octet of the network address can apparently be omitted, though this presumably depends on the subnet mask.

★ Additional options for the NFS server

disk_file = "/mnt/ntfs/vagrant/hogehoge_lab/.nfs/nfs1.vmdk"

unless File.exist?(disk_file)

vb.customize ['createhd', '--filename', disk_file, '--format', 'VMDK', '--size', 512 * 1024]

end

vb.customize ['storageattach', :id, '--storagectl', 'SCSI', '--port', 2, '--device', 0, '--type', 'hdd', '--medium', disk_file]

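In this setup, the vmdk file is kept on a separate 4 TB disk mounted at /mnt/ntfs, apart from the host PC's root filesystem (the disk that holds the Vagrantfile). If the root filesystem has enough free space, this option is unnecessary.

3. Deploy the VMs

Place the Vagrantfile and bootstrap.sh shown above in the same directory and run vagrant up.

Old box images may still be around, so I recommend updating them as needed before deploying.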

vagrant box list

vagrant box update

vagrant box prune

vagrant up

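With this Vagrantfile, the directory containing it is mounted at /home/vagrant/synced_folder on each VM.

4. Kubernetes initial setup

【4-1】NFS server setup

Connect to the VM over SSH. A key file should have been created for each VM at .vagrant/machines/***vmname***/virtualbox/private_key under the directory containing the Vagrantfile.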

ssh -i .vagrant/machines/nfs1/virtualbox/private_key vagrant@192.168.60.65

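The keys are regenerated every time a VM is rebuilt. Connecting again to a host with the same name then fails with a key mismatch, so clean up stale entries as needed with ssh-keygen -R or by editing the files under ~/.ssh.

You will probably build and destroy the environment many times while testing. Keeping a small helper shell script near the Vagrantfile directory makes this much easier.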
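The helper script itself is not reproduced here, so the following is only a sketch of what such a helper might look like. The function names `vm_ip`, `vm_ssh_cmd` and `vm_ssh` are hypothetical; the IP addresses and key paths are the ones used in this article.

```shell
#!/usr/bin/env bash
# Look up the private-network IP assigned to each VM in the Vagrantfile.
vm_ip() {
  case "$1" in
    nfs1)    echo "192.168.60.65" ;;
    worker1) echo "192.168.60.129" ;;
    worker2) echo "192.168.60.130" ;;
    master1) echo "192.168.60.241" ;;
    *)       echo "unknown VM: $1" >&2; return 1 ;;
  esac
}

# Print the ssh command for a VM without running it (dry run).
vm_ssh_cmd() {
  local ip
  ip=$(vm_ip "$1") || return 1
  echo "ssh -i .vagrant/machines/$1/virtualbox/private_key vagrant@${ip}"
}

# Forget the stale host key of a rebuilt VM, then connect.
# Run this from the directory that contains the Vagrantfile.
vm_ssh() {
  local ip
  ip=$(vm_ip "$1") || return 1
  ssh-keygen -R "$ip" >/dev/null 2>&1 || true
  ssh -i ".vagrant/machines/$1/virtualbox/private_key" "vagrant@${ip}"
}
```

After sourcing the file, `vm_ssh nfs1` would connect to the NFS server, and `vm_ssh_cmd worker1` only prints the command so it can be checked first.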

cd ~/synced_folder/k8s/nfs1

sudo ./nfs1.sh

#!/usr/bin/env bash

# [4-1-0] Mount Extra Disk

mkfs -t ext4 /dev/sdc

echo "--------"

echo "fdisk -l"

echo "--------"

fdisk -l

mkdir /mnt/nfs1

mount -t ext4 /dev/sdc /mnt/nfs1

echo -e '\n/dev/sdc /mnt/nfs1 ext4 defaults 0 0' >> /etc/fstab

echo "--------"

echo "df -l"

echo "--------"

df -l

echo "--------"

# [4-1-1] Configure K8s Network

cp -v /etc/hosts /etc/hosts.org

echo "192.168.60.241 master1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.129 worker1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.130 worker2.hogehoge.lab" >> /etc/hosts

echo "192.168.60.65 nfs1.hogehoge.lab" >> /etc/hosts

echo "--------"

echo "/etc/hosts"

echo "--------"

cat -n /etc/hosts

echo "KUBELET_EXTRA_ARGS=--node-ip=192.168.60.65" | tee /etc/default/kubelet > /dev/null

echo "--------"

echo "/etc/default/kubelet"

echo "--------"

cat -n /etc/default/kubelet

echo "--------"

# [4-1-2] Install NFS Server

apt-get install -yV nfs-kernel-server

cp -v /etc/idmapd.conf /etc/idmapd.conf.org

sed -i -e "s/# Domain = localdomain/Domain = hogehoge.lab/" /etc/idmapd.conf

echo "--------"

echo "/etc/idmapd.conf"

echo "--------"

cat -n /etc/idmapd.conf

mkdir /mnt/nfs1/export

chmod a+w /mnt/nfs1/export

mkdir /mnt/nfs1/syslog

chmod a+w /mnt/nfs1/syslog

cp -v /etc/exports /etc/exports.org

echo "/mnt/nfs1/export 192.168.60.0/24(rw,no_subtree_check)" >> /etc/exports

echo "/mnt/nfs1/syslog 192.168.60.0/24(rw,no_subtree_check)" >> /etc/exports

echo "--------"

echo "/etc/exports"

echo "--------"

cat -n /etc/exports

systemctl daemon-reload

systemctl restart nfs-server

echo "--------"

echo "END ALL"

echo "--------"

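Here, in addition to /mnt/nfs1/export for the containers' Persistent Volumes, /mnt/nfs1/syslog is exported separately for storing the containers' logs. When the script finishes, close the SSH session and return to the host PC.

【4-2】Worker node setup

Install Docker, the NFS client, and kubeadm. Apart from specifying IP addresses explicitly, everything should follow the official procedures, so I will skip the explanation.

After the master node is set up, the workers join the K8s cluster, so it is convenient to keep the SSH session open in a separate tab instead of closing it.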
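This article does not go as far as using the export from Kubernetes, but for orientation, here is a minimal sketch of how a PersistentVolume pointing at /mnt/nfs1/export could be generated once the cluster is up. The function name `nfs_pv_manifest`, the PV name `pv-example`, the 1Gi capacity, and the access mode and reclaim policy are all assumptions of mine, not settings taken from the original environment.

```shell
#!/usr/bin/env bash
# Print a PersistentVolume manifest backed by the NFS export created above.
# Arguments: PV name, capacity (e.g. 1Gi), optional path on the NFS server.
nfs_pv_manifest() {
  local name size path
  name=$1
  size=$2
  path=${3:-/mnt/nfs1/export}
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.60.65
    path: ${path}
EOF
}

# Print an example manifest to stdout.
nfs_pv_manifest pv-example 1Gi
```

On master1, the output could be piped into `kubectl apply -f -` after the cluster setup in the following sections is complete.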

ssh -i .vagrant/machines/worker1/virtualbox/private_key vagrant@192.168.60.129

cd ~/synced_folder/k8s/worker1

sudo ./worker1.sh

#!/usr/bin/env bash

# [4-2-1-0] Install Docker

#apt-get remove docker docker-engine docker.io containerd runc

apt-get install -yV ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-get update

apt-get install -yV docker-ce docker-ce-cli containerd.io

# [4-2-1-1] Enable CRI plugin for containerd

mv -v /etc/containerd/config.toml /etc/containerd/config.toml.org

echo "--------"

echo "/etc/containerd"

echo "--------"

ls -lh /etc/containerd

systemctl restart containerd

echo "--------"

# [4-2-1-2] Configure Docker & K8s Network

cp -v /etc/hosts /etc/hosts.org

echo "192.168.60.241 master1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.129 worker1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.130 worker2.hogehoge.lab" >> /etc/hosts

echo "192.168.60.65 nfs1.hogehoge.lab" >> /etc/hosts

echo "--------"

echo "/etc/hosts"

echo "--------"

cat /etc/hosts

echo "KUBELET_EXTRA_ARGS=--node-ip=192.168.60.129" | tee /etc/default/kubelet > /dev/null

echo "--------"

echo "/etc/default/kubelet"

echo "--------"

cat /etc/default/kubelet

cp -v daemon.json /etc/docker

systemctl daemon-reload

systemctl restart docker

echo "--------"

# [4-2-1-3] Install NFS Client

apt-get install -yV nfs-common

cp -v /etc/idmapd.conf /etc/idmapd.conf.org

sed -i -e "s/# Domain = localdomain/Domain = hogehoge.lab/" /etc/idmapd.conf

echo "--------"

echo "/etc/idmapd.conf"

echo "--------"

cat /etc/idmapd.conf

echo "--------"

# [4-2-1-10] Install K8s

apt-get install -yV apt-transport-https

curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

apt-get install -yV kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl

echo "--------"

echo "END ALL"

echo "--------"

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"default-address-pools": [{

"base": "172.24.0.0/16",

"size": 24

}]

}

worker1 and worker2 differ only in the KUBELET_EXTRA_ARGS setting on line 23 of the script; daemon.json is identical.
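Since only the node IP differs, the two worker scripts could in principle be merged by detecting the private address at run time. The following is a sketch under that assumption: `pick_private_ip` and `kubelet_extra_args` are hypothetical helper names, and the interface names in the sample input are made up for illustration.

```shell
#!/usr/bin/env bash
# Read `ip -4 -o addr show` output on stdin and print the first address
# that belongs to the VB private network 192.168.60.0/24.
pick_private_ip() {
  awk '$3 == "inet" { split($4, a, "/"); if (a[1] ~ /^192\.168\.60\./) { print a[1]; exit } }'
}

# Print the line that a shared node script could write to /etc/default/kubelet.
kubelet_extra_args() {
  local ip
  ip=$(pick_private_ip)
  [ -n "$ip" ] || return 1
  echo "KUBELET_EXTRA_ARGS=--node-ip=${ip}"
}

# On a VM the real input would be: ip -4 -o addr show | kubelet_extra_args
# Sample input imitating worker1 (interface names are assumptions):
kubelet_extra_args <<'EOF'
2: enp0s3    inet 10.168.60.15/24 brd 10.168.60.255 scope global dynamic enp0s3
3: enp0s8    inet 192.168.60.129/24 brd 192.168.60.255 scope global enp0s8
EOF
```

The NAT address is skipped because it is outside 192.168.60.0/24, so only the private-network address ends up in `--node-ip`.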

ssh -i .vagrant/machines/worker2/virtualbox/private_key vagrant@192.168.60.130

cd ~/synced_folder/k8s/worker2

sudo ./worker2.sh

#!/usr/bin/env bash

# [4-2-2-0] Install Docker

#apt-get remove docker docker-engine docker.io containerd runc

apt-get install -yV ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-get update

apt-get install -yV docker-ce docker-ce-cli containerd.io

# [4-2-2-1] Enable CRI plugin for containerd

mv -v /etc/containerd/config.toml /etc/containerd/config.toml.org

echo "--------"

echo "/etc/containerd"

echo "--------"

ls -lh /etc/containerd

systemctl restart containerd

echo "--------"

# [4-2-2-2] Configure Docker & K8s Network

cp -v /etc/hosts /etc/hosts.org

echo "192.168.60.241 master1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.129 worker1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.130 worker2.hogehoge.lab" >> /etc/hosts

echo "192.168.60.65 nfs1.hogehoge.lab" >> /etc/hosts

echo "--------"

echo "/etc/hosts"

echo "--------"

cat /etc/hosts

echo "KUBELET_EXTRA_ARGS=--node-ip=192.168.60.130" | tee /etc/default/kubelet > /dev/null

echo "--------"

echo "/etc/default/kubelet"

echo "--------"

cat /etc/default/kubelet

cp -v daemon.json /etc/docker

systemctl daemon-reload

systemctl restart docker

echo "--------"

# [4-2-2-3] Install NFS Client

apt-get install -yV nfs-common

cp -v /etc/idmapd.conf /etc/idmapd.conf.org

sed -i -e "s/# Domain = localdomain/Domain = hogehoge.lab/" /etc/idmapd.conf

echo "--------"

echo "/etc/idmapd.conf"

echo "--------"

cat /etc/idmapd.conf

echo "--------"

# [4-2-2-10] Install K8s

apt-get install -yV apt-transport-https

curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

apt-get install -yV kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl

echo "--------"

echo "END ALL"

echo "--------"

【4-3】Master node setup

Install Docker, the NFS client, and kubeadm in the same way as on the workers (daemon.json is also identical).

ssh -i .vagrant/machines/master1/virtualbox/private_key vagrant@192.168.60.241

cd ~/synced_folder/k8s/master1

sudo ./master1.sh

#!/usr/bin/env bash

# [4-3-0] Install Docker

#apt-get remove docker docker-engine docker.io containerd runc

apt-get install -yV ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-get update

apt-get install -yV docker-ce docker-ce-cli containerd.io

# [4-3-1] Enable CRI plugin for containerd

mv -v /etc/containerd/config.toml /etc/containerd/config.toml.org

echo "--------"

echo "/etc/containerd"

echo "--------"

ls -lh /etc/containerd

systemctl restart containerd

echo "--------"

# [4-3-2] Configure Docker & K8s Network

cp -v /etc/hosts /etc/hosts.org

echo "192.168.60.241 master1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.129 worker1.hogehoge.lab" >> /etc/hosts

echo "192.168.60.130 worker2.hogehoge.lab" >> /etc/hosts

echo "192.168.60.65 nfs1.hogehoge.lab" >> /etc/hosts

echo "--------"

echo "/etc/hosts"

echo "--------"

cat /etc/hosts

echo "KUBELET_EXTRA_ARGS=--node-ip=192.168.60.241" | tee /etc/default/kubelet > /dev/null

echo "--------"

echo "/etc/default/kubelet"

echo "--------"

cat -n /etc/default/kubelet

echo "--------"

cp -v daemon.json /etc/docker

systemctl daemon-reload

systemctl restart docker

echo "--------"

# [4-3-3] Install NFS Client

apt-get install -yV nfs-common

cp -v /etc/idmapd.conf /etc/idmapd.conf.org

sed -i -e "s/# Domain = localdomain/Domain = hogehoge.lab/" /etc/idmapd.conf

echo "--------"

echo "/etc/idmapd.conf"

echo "--------"

cat -n /etc/idmapd.conf

echo "--------"

# [4-3-10] Install & Init K8s

apt-get install -yV apt-transport-https

curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

apt-get install -yV kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl

kubeadm init --service-cidr 10.168.64.0/24 --pod-network-cidr 172.28.64.0/24 --apiserver-advertise-address 192.168.60.241

# [4-3-11] Configure kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bashrc

echo 'source <(kubectl completion bash)' >> ~/.bashrc

echo 'alias k=kubectl' >> ~/.bashrc

echo 'complete -F __start_kubectl k' >> ~/.bashrc

echo "--------"

echo "tail ~/.bashrc"

echo "--------"

tail ~/.bashrc

source ~/.bashrc

echo "--------"

echo "END ALL"

echo "--------"

Slightly above the output of tail ~/.bashrc, you should see output like the following.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.60.241:6443 --token i9pgge.h8ogpxb0oqjbt0y6 \

--discovery-token-ca-cert-hash sha256:398a9e1d6e4f06bbbc46b81b0d45dc04d929542f3d4455d1ffb0f90e65bec08f

--------

tail ~/.bashrc

--------

At this point, no VM other than master1 should have joined the cluster as a node.

vagrant@master1:~/synced_folder/k8s/master1$ sudo kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 NotReady control-plane 2m52s v1.25.4

Use the output shown earlier to join the other VMs as nodes, but rewrite it as a one-liner before running it.

sudo kubeadm join 192.168.60.241:6443 --token i9pgge.h8ogpxb0oqjbt0y6 --discovery-token-ca-cert-hash sha256:398a9e1d6e4f06bbbc46b81b0d45dc04d929542f3d4455d1ffb0f90e65bec08f
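Rewriting the two-line output by hand is error-prone, so here is a minimal sketch that does it mechanically. The function name `to_oneliner` is my own; it simply drops the trailing backslash and joins the lines with single spaces.

```shell
#!/usr/bin/env bash
# Join a backslash-continued command read from stdin into a single line.
to_oneliner() {
  awk '{ sub(/\\[ \t]*$/, ""); line = line " " $0 }
       END { gsub(/[ \t]+/, " ", line); sub(/^ /, "", line); sub(/ $/, "", line); print line }'
}

# Example with the join command printed by kubeadm init in this article.
to_oneliner <<'EOF'
kubeadm join 192.168.60.241:6443 --token i9pgge.h8ogpxb0oqjbt0y6 \
        --discovery-token-ca-cert-hash sha256:398a9e1d6e4f06bbbc46b81b0d45dc04d929542f3d4455d1ffb0f90e65bec08f
EOF
```

If the original output has already scrolled away, running `kubeadm token create --print-join-command` on master1 prints a fresh join command.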

Once the nodes have joined successfully, the display should change as follows.

vagrant@master1:~/synced_folder/k8s/master1$ sudo kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 NotReady control-plane 4m2s v1.25.4

worker1 NotReady <none> 20s v1.25.4

worker2 NotReady <none> 13s v1.25.4

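A STATUS of NotReady is correct at this point.

5. CNI (Calico) setup

First, check the current version and other details on the official Calico site.

Download the configuration file with wget or similar, then change the network address on line 13.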
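The nodes stay NotReady until a CNI plugin is installed in the next step. To check the state without reading the table by eye, the STATUS column can be tested with a few lines of shell. This is a minimal sketch; `all_nodes_ready` is a hypothetical helper name, and the sample input is the table shown above.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin.
# Succeed only if there is at least one node and every node is Ready.
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
       END { if (n > 0 && bad == 0) exit 0; exit 1 }'
}

# On master1 the real input would be: sudo kubectl get nodes | all_nodes_ready
if all_nodes_ready <<'EOF'
NAME      STATUS     ROLES           AGE    VERSION
master1   NotReady   control-plane   4m2s   v1.25.4
worker1   NotReady   <none>          20s    v1.25.4
worker2   NotReady   <none>          13s    v1.25.4
EOF
then
  echo "all nodes are Ready"
else
  echo "some nodes are not Ready yet"
fi
```

kubectl also has a built-in `kubectl wait --for=condition=Ready nodes --all` that blocks until the nodes become Ready, which may be more convenient in scripts.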

# This section includes base Calico installation configuration.

# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation

apiVersion: operator.tigera.io/v1

kind: Installation

metadata:

name: default

spec:

# Configures Calico networking.

calicoNetwork:

# Note: The ipPools section cannot be modified post-install.

ipPools:

- blockSize: 26

- cidr: 192.168.0.0/16

+ cidr: 172.28.64.0/24

encapsulation: VXLANCrossSubnet

natOutgoing: Enabled

nodeSelector: all()

---

# This section configures the Calico API server.

# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer

apiVersion: operator.tigera.io/v1

kind: APIServer

metadata:

name: default

spec: {}

It is hardly enough to warrant a shell script, though.

sudo ./calico.sh

#!/usr/bin/env bash

# [5-0] Install Tigera Op.

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.24.5/manifests/tigera-operator.yaml

# [5-1] Install Calico

# Check Original [wget -v https://raw.githubusercontent.com/projectcalico/calico/v3.24.5/manifests/custom-resources.yaml -O ./custom-resources-now.yaml]

kubectl create -f ./custom-resources.yaml

echo "--------"

echo "get pods -n calico-system --watch"

echo "--------"

kubectl get pods -n calico-system -w

On this host PC, it took about one minute for all pods to start.

vagrant@master1:~/synced_folder/k8s/master1$ sudo kubectl get ns

NAME STATUS AGE

calico-apiserver Active 5m23s

calico-system Active 6m18s

default Active 27m

kube-node-lease Active 27m

kube-public Active 27m

kube-system Active 27m

tigera-operator Active 6m25s

vagrant@master1:~/synced_folder/k8s/master1$ sudo kubectl get all -n calico-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/calico-kube-controllers-6dfbd8f6f6-9wm7x 1/1 Running 0 6m43s 172.28.64.66 master1 <none> <none>

pod/calico-node-2pmd6 1/1 Running 0 6m43s 192.168.60.241 master1 <none> <none>

pod/calico-node-rcf8g 1/1 Running 0 6m43s 192.168.60.129 worker1 <none> <none>

pod/calico-node-w2m4k 1/1 Running 0 6m43s 192.168.60.130 worker2 <none> <none>

pod/calico-typha-69bc56556-9qlbr 1/1 Running 0 6m43s 192.168.60.129 worker1 <none> <none>

pod/calico-typha-69bc56556-l6rvs 1/1 Running 0 6m34s 192.168.60.130 worker2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/calico-kube-controllers-metrics ClusterIP 10.168.64.182 <none> 9094/TCP 5m57s k8s-app=calico-kube-controllers

service/calico-typha ClusterIP 10.168.64.219 <none> 5473/TCP 6m44s k8s-app=calico-typha

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

daemonset.apps/calico-node 3 3 3 3 3 kubernetes.io/os=linux 6m43s calico-node docker.io/calico/node:v3.24.5 k8s-app=calico-node

daemonset.apps/csi-node-driver 0 0 0 0 0 kubernetes.io/os=linux 6m43s calico-csi,csi-node-driver-registrar docker.io/calico/csi:v3.24.5,docker.io/calico/node-driver-registrar:v3.24.5 k8s-app=csi-node-driver

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/calico-kube-controllers 1/1 1 1 6m43s calico-kube-controllers docker.io/calico/kube-controllers:v3.24.5 k8s-app=calico-kube-controllers

deployment.apps/calico-typha 2/2 2 2 6m43s calico-typha docker.io/calico/typha:v3.24.5 k8s-app=calico-typha

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/calico-kube-controllers-6dfbd8f6f6 1 1 1 6m43s calico-kube-controllers docker.io/calico/kube-controllers:v3.24.5 k8s-app=calico-kube-controllers,pod-template-hash=6dfbd8f6f6

replicaset.apps/calico-typha-69bc56556 2 2 2 6m43s calico-typha docker.io/calico/typha:v3.24.5 k8s-app=calico-typha,pod-template-hash=69bc56556
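With blockSize 26 and the pool 172.28.64.0/24, Calico has four blocks of 64 addresses to hand out to nodes, which is enough for the three nodes here but leaves little headroom. The following sketch shows the arithmetic; `block_of` and the other helper names are my own, 172.28.64.66 is the calico-kube-controllers pod IP from the output above, and the other two addresses are pod IPs that appear in the SockShop output in section 6.

```shell
#!/usr/bin/env bash
# Convert between dotted-quad IPv4 addresses and 32-bit integers.
ip_to_int() {
  ( IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the block of prefix length $2 (default 26) that contains address $1.
block_of() {
  local addr size mask
  addr=$(ip_to_int "$1")
  size=${2:-26}
  mask=$(( (0xFFFFFFFF << (32 - size)) & 0xFFFFFFFF ))
  echo "$(int_to_ip $(( addr & mask )))/${size}"
}

echo "number of /26 blocks in 172.28.64.0/24: $(( 1 << (26 - 24) ))"
for ip in 172.28.64.66 172.28.64.194 172.28.64.130; do
  echo "$ip -> $(block_of "$ip" 26)"
done
```

The three addresses fall into three different /26 blocks, which matches the observation that pods on master1, worker1 and worker2 each get addresses from their own range.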

That completes the Kubernetes test environment. Well done!

6. Verification (SockShop)

This part is a bonus.

sudo kubectl create -f https://raw.githubusercontent.com/microservices-demo/microservices-demo/master/deploy/kubernetes/complete-demo.yaml

After deploying, watch with kubectl get po -n sock-shop -w or similar until all pods have started. On this host PC it took about five minutes.

vagrant@master1:~/synced_folder/k8s/master1$ sudo kubectl get all -n sock-shop -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/carts-78d7c69cb8-gs48s 1/1 Running 0 4m8s 172.28.64.194 worker1 <none> <none>

pod/carts-db-66c4569f54-shvz4 1/1 Running 0 4m8s 172.28.64.130 worker2 <none> <none>

pod/catalogue-7dc9464f59-26msz 1/1 Running 0 4m8s 172.28.64.197 worker1 <none> <none>

pod/catalogue-db-669d5dbf48-csbt6 1/1 Running 0 4m8s 172.28.64.131 worker2 <none> <none>

pod/front-end-7d89d49d6b-6t2mb 1/1 Running 0 4m8s 172.28.64.196 worker1 <none> <none>

pod/orders-6697b9d66d-8d49r 1/1 Running 0 4m8s 172.28.64.198 worker1 <none> <none>

pod/orders-db-7fd77d9556-p7qnd 1/1 Running 0 4m8s 172.28.64.132 worker2 <none> <none>

pod/payment-ff86cd6f8-tsn9d 1/1 Running 0 4m8s 172.28.64.133 worker2 <none> <none>

pod/queue-master-747c9f9cf9-7x7xk 1/1 Running 0 4m8s 172.28.64.201 worker1 <none> <none>

pod/rabbitmq-6c7dfd98f6-4rp8r 2/2 Running 0 4m8s 172.28.64.134 worker2 <none> <none>

pod/session-db-6747f74f56-kkttd 1/1 Running 0 4m8s 172.28.64.195 worker1 <none> <none>

pod/shipping-74586cc59d-wkvhp 1/1 Running 0 4m7s 172.28.64.135 worker2 <none> <none>

pod/user-5b695f9cbd-wfsfc 1/1 Running 0 4m7s 172.28.64.199 worker1 <none> <none>

pod/user-db-bcc86b99d-whn54 1/1 Running 0 4m7s 172.28.64.200 worker1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/carts ClusterIP 10.168.64.129 <none> 80/TCP 4m8s name=carts

service/carts-db ClusterIP 10.168.64.40 <none> 27017/TCP 4m8s name=carts-db

service/catalogue ClusterIP 10.168.64.76 <none> 80/TCP 4m8s name=catalogue

service/catalogue-db ClusterIP 10.168.64.41 <none> 3306/TCP 4m8s name=catalogue-db

service/front-end NodePort 10.168.64.150 <none> 80:30001/TCP 4m8s name=front-end

service/orders ClusterIP 10.168.64.35 <none> 80/TCP 4m8s name=orders

service/orders-db ClusterIP 10.168.64.19 <none> 27017/TCP 4m8s name=orders-db

service/payment ClusterIP 10.168.64.247 <none> 80/TCP 4m8s name=payment

service/queue-master ClusterIP 10.168.64.36 <none> 80/TCP 4m8s name=queue-master

service/rabbitmq ClusterIP 10.168.64.226 <none> 5672/TCP,9090/TCP 4m8s name=rabbitmq

service/session-db ClusterIP 10.168.64.136 <none> 6379/TCP 4m8s name=session-db

service/shipping ClusterIP 10.168.64.236 <none> 80/TCP 4m8s name=shipping

service/user ClusterIP 10.168.64.220 <none> 80/TCP 4m8s name=user

service/user-db ClusterIP 10.168.64.59 <none> 27017/TCP 4m8s name=user-db

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/carts 1/1 1 1 4m8s carts weaveworksdemos/carts:0.4.8 name=carts

deployment.apps/carts-db 1/1 1 1 4m8s carts-db mongo name=carts-db

deployment.apps/catalogue 1/1 1 1 4m8s catalogue weaveworksdemos/catalogue:0.3.5 name=catalogue

deployment.apps/catalogue-db 1/1 1 1 4m8s catalogue-db weaveworksdemos/catalogue-db:0.3.0 name=catalogue-db

deployment.apps/front-end 1/1 1 1 4m8s front-end weaveworksdemos/front-end:0.3.12 name=front-end

deployment.apps/orders 1/1 1 1 4m8s orders weaveworksdemos/orders:0.4.7 name=orders

deployment.apps/orders-db 1/1 1 1 4m8s orders-db mongo name=orders-db

deployment.apps/payment 1/1 1 1 4m8s payment weaveworksdemos/payment:0.4.3 name=payment

deployment.apps/queue-master 1/1 1 1 4m8s queue-master weaveworksdemos/queue-master:0.3.1 name=queue-master

deployment.apps/rabbitmq 1/1 1 1 4m8s rabbitmq,rabbitmq-exporter rabbitmq:3.6.8-management,kbudde/rabbitmq-exporter name=rabbitmq

deployment.apps/session-db 1/1 1 1 4m8s session-db redis:alpine name=session-db

deployment.apps/shipping 1/1 1 1 4m8s shipping weaveworksdemos/shipping:0.4.8 name=shipping

deployment.apps/user 1/1 1 1 4m8s user weaveworksdemos/user:0.4.7 name=user

deployment.apps/user-db 1/1 1 1 4m8s user-db weaveworksdemos/user-db:0.3.0 name=user-db

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/carts-78d7c69cb8 1 1 1 4m8s carts weaveworksdemos/carts:0.4.8 name=carts,pod-template-hash=78d7c69cb8

replicaset.apps/carts-db-66c4569f54 1 1 1 4m8s carts-db mongo name=carts-db,pod-template-hash=66c4569f54

replicaset.apps/catalogue-7dc9464f59 1 1 1 4m8s catalogue weaveworksdemos/catalogue:0.3.5 name=catalogue,pod-template-hash=7dc9464f59

replicaset.apps/catalogue-db-669d5dbf48 1 1 1 4m8s catalogue-db weaveworksdemos/catalogue-db:0.3.0 name=catalogue-db,pod-template-hash=669d5dbf48

replicaset.apps/front-end-7d89d49d6b 1 1 1 4m8s front-end weaveworksdemos/front-end:0.3.12 name=front-end,pod-template-hash=7d89d49d6b

replicaset.apps/orders-6697b9d66d 1 1 1 4m8s orders weaveworksdemos/orders:0.4.7 name=orders,pod-template-hash=6697b9d66d

replicaset.apps/orders-db-7fd77d9556 1 1 1 4m8s orders-db mongo name=orders-db,pod-template-hash=7fd77d9556

replicaset.apps/payment-ff86cd6f8 1 1 1 4m8s payment weaveworksdemos/payment:0.4.3 name=payment,pod-template-hash=ff86cd6f8

replicaset.apps/queue-master-747c9f9cf9 1 1 1 4m8s queue-master weaveworksdemos/queue-master:0.3.1 name=queue-master,pod-template-hash=747c9f9cf9

replicaset.apps/rabbitmq-6c7dfd98f6 1 1 1 4m8s rabbitmq,rabbitmq-exporter rabbitmq:3.6.8-management,kbudde/rabbitmq-exporter name=rabbitmq,pod-template-hash=6c7dfd98f6

replicaset.apps/session-db-6747f74f56 1 1 1 4m8s session-db redis:alpine name=session-db,pod-template-hash=6747f74f56

replicaset.apps/shipping-74586cc59d 1 1 1 4m7s shipping weaveworksdemos/shipping:0.4.8 name=shipping,pod-template-hash=74586cc59d

replicaset.apps/user-5b695f9cbd 1 1 1 4m7s user weaveworksdemos/user:0.4.7 name=user,pod-template-hash=5b695f9cbd

replicaset.apps/user-db-bcc86b99d 1 1 1 4m7s user-db weaveworksdemos/user-db:0.3.0 name=user-db,pod-template-hash=bcc86b99d

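Does http://192.168.60.241:30001 display in your browser? If the images and page transitions work without problems, it succeeded!

Cleanup

(1) Keep K8s and delete only SockShop

sudo kubectl delete namespace sock-shop

(2) Delete everything, including the VMs

vagrant destroy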
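The port in that URL comes from the front-end service, whose PORT(S) column reads 80:30001/TCP in the output above. As a small sketch, the NodePort can be extracted and turned into a URL as follows; `node_port_of` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Extract the NodePort from a PORT(S) value such as "80:30001/TCP".
# A value without a colon (e.g. "80/TCP") is returned as the plain port.
node_port_of() {
  local ports
  ports=${1%%/*}        # "80:30001"
  echo "${ports#*:}"    # "30001"
}

port=$(node_port_of "80:30001/TCP")
url="http://192.168.60.241:${port}"
echo "front-end URL: ${url}"

# On the host PC, assuming curl is installed, the page could be probed with:
#   curl -fsS -o /dev/null -w '%{http_code}\n' "$url"
```

Any node's private IP should work in place of 192.168.60.241, since a NodePort service listens on every node.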

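Thank you for reading

You may be wondering what the NFS server was for in the end. I included it because it is handy when managing Persistent Volumes locally.

If I get another chance, I would like to introduce Deployments and StatefulSets that use the NFS server.

My technical and writing skills are limited, but I sincerely thank you for reading to the end.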
