0. 忙しい人向けの結論

劇的にすごいかというとそうでもない感じがする。やりたいことによるかもしれないが、典型的な数理最適化の問題はあいまいな指示では解決できない。

- OpenIntepreterは有料のGPT4、無料のLlamaが使える。

- 無料のLlamaは モデルを様々なサイズ(4GB程度~40GB) から選ぶことができる。

- GPUだけではなく、llama.cppでCPUでも処理可能だが遅い(回答が返ってくるまでに2-3分)

- Llamaでは無限ループが発生することも。 精度はイマイチで難しい。

- GPT4は早い。 問題もそれらしく設定/解こうとするが最後まで到達しない。

- GPT4は数回 試したら$7(1000円)くらい使った。

日本のお小遣いサラリーマンにはつらい

1. Open Interpreterとは

2023年9月何かとすごいというCode Interpreterの発展OSS版「Code Interpreter」がリリースされたらしい。

ネットからも不足パッケージをバンバンダウンロードして対応してくれる心強い奴。

環境が厳しいこと(DISK容量が少ない)もあって見送っていたが、SSDを追加購入したこともあり、せっかくなのでWSLを追加して好き勝手出来る環境を作ろうと考えた。

すでにDockerイメージはあるそうなので、普通の人は明らかにDockerのが良いと思います

2. WSL 環境再構築

2.1 現在環境のバックアップ & 削除

既に環境があって、ごちゃごちゃすると嫌だなぁと思ったので、すべてバックアップして削除し、再インストールすることにしました。

手順はExport→削除です。再インポート→デフォルトユーザ設定すれば削除した環境は再利用できますよ。

wsl --export Ubuntu2 K:\WSL2Img\export\Ubuntu2.tar

wsl --unregister Ubuntu2

wsl --import Ubuntu2 K:\WSL2Img\Ubuntu2 K:\WSL2Img\export\Ubuntu2.tar

ubuntu2 config --default-user monta

なお、WSLのイメージはデフォルトで

C:\Users\ユーザ名\AppData\Local\Packages\CanonicalGroupLimited.Ubuntu20.04onWindows_固有ID

のようなフォルダに作成されるので、任意のフォルダにWSLの実体(Hyper-Vのイメージ)を作成しようとしたら、上記の手順を踏む必要があります。

めんどくせーーーーーー

2.2 WSL再インストール

まずはインストール可能なディストリビューションリスを確認する。

C:\Users\Monta>wsl --list --online

インストールできる有効なディストリビューションの一覧を次に示します。

'wsl.exe --install <Distro>' を使用してインストールします。

NAME FRIENDLY NAME

Ubuntu Ubuntu

Debian Debian GNU/Linux

kali-linux Kali Linux Rolling

Ubuntu-18.04 Ubuntu 18.04 LTS

Ubuntu-20.04 Ubuntu 20.04 LTS

Ubuntu-22.04 Ubuntu 22.04 LTS

OracleLinux_7_9 Oracle Linux 7.9

OracleLinux_8_7 Oracle Linux 8.7

OracleLinux_9_1 Oracle Linux 9.1

openSUSE-Leap-15.5 openSUSE Leap 15.5

SUSE-Linux-Enterprise-Server-15-SP4 SUSE Linux Enterprise Server 15 SP4

SUSE-Linux-Enterprise-15-SP5 SUSE Linux Enterprise 15 SP5

openSUSE-Tumbleweed openSUSE Tumbleweed

Ubuntuの新しいのがいいかなということでインストール

C:\Users\Monta>wsl --install Ubuntu-22.04

ログインユーザ名とパスワードを聞かれるので適切に入力

C:\Users\Monta>wsl --install Ubuntu-22.04

インストール中: Ubuntu 22.04 LTS

Ubuntu 22.04 LTS がインストールされました。

Ubuntu 22.04 LTS を起動しています...

Installing, this may take a few minutes...

Please create a default UNIX user account. The username does not need to match your Windows username.

For more information visit: https://aka.ms/wslusers

Enter new UNIX username: monta

New password:

Retype new password:

passwd: password updated successfully

Installation successful!

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.90.1-microsoft-standard-WSL2 x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This message is shown once a day. To disable it please create the

/home/monta/.hushlogin file.

2.3 WSL上でCUDAを使う準備

まず古いGPG keyを削除する。apt-keyコマンドが古いらしい。

monta@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta$ sudo apt-key del 7fa2af80

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

OK

マニュアルを見るといわれているので、古い人間なので念のため確認する。

既に実行してしまったので、次から気を付けます…

monta@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta$ man apt-key

~中略~

DEPRECATION

Except for using apt-key del in maintainer scripts, the use of apt-key is deprecated. This section shows how

to replace existing use of apt-key.

If your existing use of apt-key add looks like this:

wget -qO- https://myrepo.example/myrepo.asc | sudo apt-key add -

Then you can directly replace this with (though note the recommendation below):

wget -qO- https://myrepo.example/myrepo.asc | sudo tee /etc/apt/trusted.gpg.d/myrepo.asc

Make sure to use the "asc" extension for ASCII armored keys and the "gpg" extension for the binary OpenPGP

format (also known as "GPG key public ring"). The binary OpenPGP format works for all apt versions, while the

ASCII armored format works for apt version >= 1.4.

Recommended: Instead of placing keys into the /etc/apt/trusted.gpg.d directory, you can place them anywhere on

your filesystem by using the Signed-By option in your sources.list and pointing to the filename of the key.

See sources.list(5) for details. Since APT 2.4, /etc/apt/keyrings is provided as the recommended location for

keys not managed by packages. When using a deb822-style sources.list, and with apt version >= 2.4, the

Signed-By option can also be used to include the full ASCII armored keyring directly in the sources.list

without an additional file.

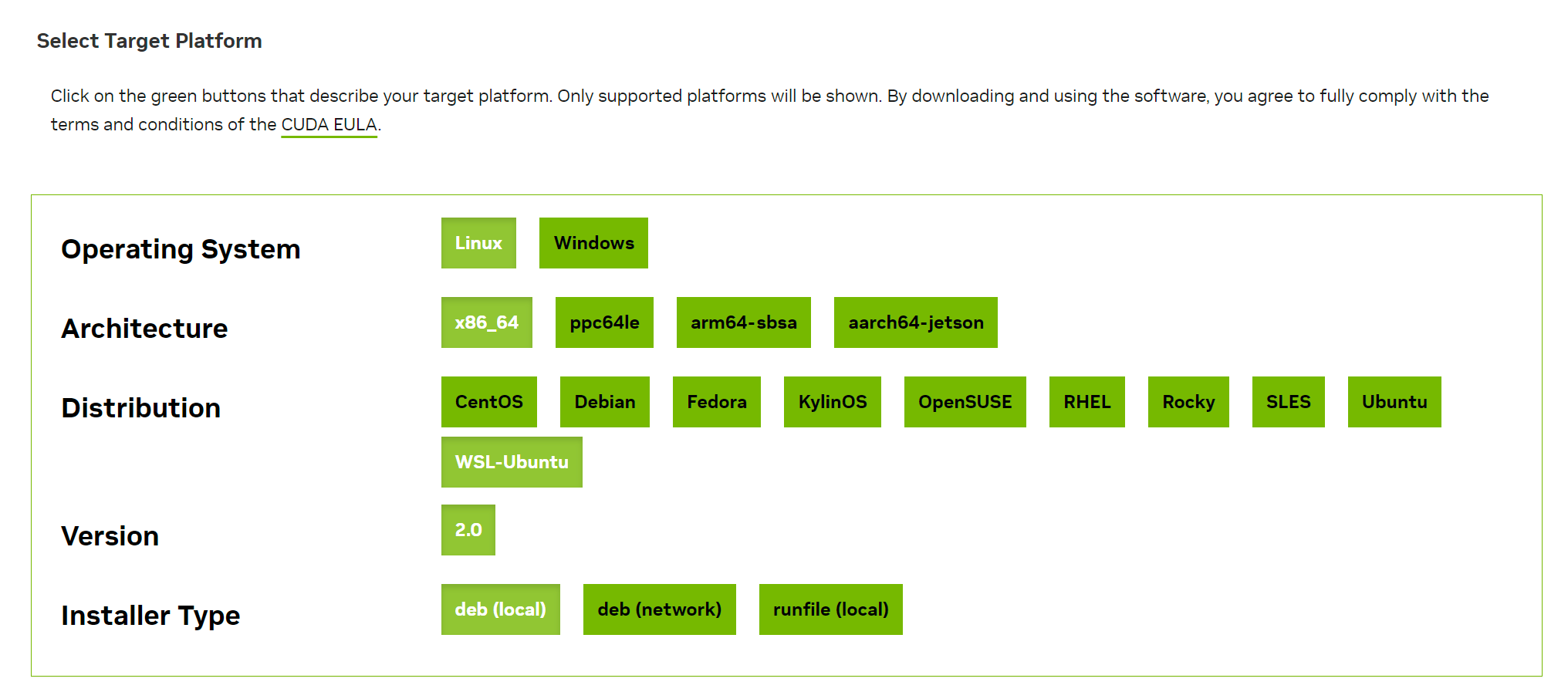

気を取り直して、先ほどの解説ページからNVIDIAのインストール手順ページに飛ぶ選択がWSLになっていることを確認したりして

NVIDIAのCUDAインストールページ(*URLは変わるかもしれないので普通は手順から飛びましょう)

2023年9月で記載されている内容で実行した結果が以下です

monta@DESKTOP-UIMJQCQ:/etc/apt/trusted.gpg.d$ cd

monta@DESKTOP-UIMJQCQ:~$ pwd

/home/monta

monta@DESKTOP-UIMJQCQ:~$ wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin

--2023-09-18 12:39:03-- https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin

Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 152.199.39.144

Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|152.199.39.144|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 190 [application/octet-stream]

Saving to: ‘cuda-wsl-ubuntu.pin’

cuda-wsl-ubuntu.pin 100%[=================================================>] 190 --.-KB/s in 0s

2023-09-18 12:39:03 (10.9 MB/s) - ‘cuda-wsl-ubuntu.pin’ saved [190/190]

monta@DESKTOP-UIMJQCQ:~$ sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600

monta@DESKTOP-UIMJQCQ:~$ wget https://developer.download.nvidia.com/compute/cuda/12.2.2/local_installers/cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb

--2023-09-18 12:39:33-- https://developer.download.nvidia.com/compute/cuda/12.2.2/local_installers/cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb

Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 152.199.39.144

Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|152.199.39.144|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2875296226 (2.7G) [application/x-deb]

Saving to: ‘cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb’

cuda-repo-wsl-ubuntu-12-2-loc 100%[=================================================>] 2.68G 11.2MB/s in 4m 16s

2023-09-18 12:43:49 (10.7 MB/s) - ‘cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb’ saved [2875296226/2875296226]

monta@DESKTOP-UIMJQCQ:~$ sudo dpkg -i cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb

Selecting previously unselected package cuda-repo-wsl-ubuntu-12-2-local.

(Reading database ... 24157 files and directories currently installed.)

Preparing to unpack cuda-repo-wsl-ubuntu-12-2-local_12.2.2-1_amd64.deb ...

Unpacking cuda-repo-wsl-ubuntu-12-2-local (12.2.2-1) ...

Setting up cuda-repo-wsl-ubuntu-12-2-local (12.2.2-1) ...

The public cuda-repo-wsl-ubuntu-12-2-local GPG key does not appear to be installed.

To install the key, run this command:

sudo cp /var/cuda-repo-wsl-ubuntu-12-2-local/cuda-48257546-keyring.gpg /usr/share/keyrings/

monta@DESKTOP-UIMJQCQ:~$ sudo cp /var/cuda-repo-wsl-ubuntu-12-2-local/cuda-*-keyring.gpg /usr/share/keyrings/

monta@DESKTOP-UIMJQCQ:~$ sudo apt-get update

Get:1 file:/var/cuda-repo-wsl-ubuntu-12-2-local InRelease [1572 B]

Get:1 file:/var/cuda-repo-wsl-ubuntu-12-2-local InRelease [1572 B]

Get:2 file:/var/cuda-repo-wsl-ubuntu-12-2-local Packages [18.3 kB]

Hit:3 http://security.ubuntu.com/ubuntu jammy-security InRelease

Hit:4 http://archive.ubuntu.com/ubuntu jammy InRelease

Hit:5 http://archive.ubuntu.com/ubuntu jammy-updates InRelease

Hit:6 http://archive.ubuntu.com/ubuntu jammy-backports InRelease

Reading package lists... Done

monta@DESKTOP-UIMJQCQ:~$ sudo apt-get -y install cuda

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

alsa-topology-conf alsa-ucm-conf at-spi2-core build-essential bzip2 ca-certificates-java cpp cpp-11 cuda-12-2

~長いので中略~

Processing triggers for ca-certificates (20230311ubuntu0.22.04.1) ...

Updating certificates in /etc/ssl/certs...

0 added, 0 removed; done.

Running hooks in /etc/ca-certificates/update.d...

done.

done.

Setting up at-spi2-core (2.44.0-3) ...

もう一度実行するのは嫌なので、ここまでのイメージを出力してDISKを変更して再マウントします。

C:\Users\Monta>wsl --unregister Ubuntu-22.04

登録解除。

この操作を正しく終了しました。

C:\Users\Monta>wsl --export Ubuntu-22.04 K:\WSL2Img\export\Ubuntu-22.04CUDA.tar

C:\Users\Monta>wsl --import ubuntu K:\WSL2Img\ubuntu K:\WSL2Img\export\Ubuntu-22.04CUDA.tar

インポート中です。この処理には数分かかることがあります。

この操作を正しく終了しました。

ただし、このままではデフォルトユーザがrootになってしまうので変更します。(上2行が追加した設定です)

[user]

default=monta

[boot]

systemd=true

一度WSLを停止して再起動する必要があります。(それをしないと/etc/wsl.confが反映されない)

C:\Users\Monta>wsl

root@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta# who

root pts/1 2023-09-18 13:00

root@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta#

logout

C:\Users\Monta>wsl --shutdown

C:\Users\Monta>wsl

monta@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta$ who

monta pts/1 2023-09-18 13:06

3. Open Interpreterインストール&実行

3.1. Open Interpreterインストール

Githubのページのインストール手順に従います。

とはいっても大したことなくて、pipをインストールして、open-interpreterをpipで入れるだけ。

monta@DESKTOP-UIMJQCQ:~$ sudo apt install python3-pip

monta@DESKTOP-UIMJQCQ:~$ pip install open-interpreter

Defaulting to user installation because normal site-packages is not writeable

Collecting open-interpreter

Downloading open_interpreter-0.1.4-py3-none-any.whl (35 kB)

Collecting litellm<0.2.0,>=0.1.590

Downloading litellm-0.1.689-py3-none-any.whl (124 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 124.4/124.4 KB 4.9 MB/s eta 0:00:00

~中略~

WARNING: The script interpreter is installed in '/home/monta/.local/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed aiohttp-3.8.5 aiosignal-1.3.1 appdirs-1.4.4 astor-0.8.1 async-timeout-4.0.3 attrs-23.1.0 blessed-1.20.0 certifi-2023.7.22 charset-normalizer-3.2.0 filelock-3.12.4 frozenlist-1.4.0 fsspec-2023.9.1 git-python-1.0.3 gitdb-4.0.10 gitpython-3.1.36 huggingface-hub-0.16.4 idna-3.4 importlib-metadata-6.8.0 inquirer-3.1.3 litellm-0.1.689 markdown-it-py-3.0.0 mdurl-0.1.2 multidict-6.0.4 open-interpreter-0.1.4 openai-0.27.10 packaging-23.1 pygments-2.16.1 python-dotenv-1.0.0 python-editor-1.0.4 readchar-4.0.5 regex-2023.8.8 requests-2.31.0 rich-13.5.3 smmap-5.0.1 tiktoken-0.4.0 tokentrim-0.1.10 tqdm-4.66.1 typing-extensions-4.8.0 urllib3-2.0.4 wcwidth-0.2.6 wget-3.2 yarl-1.9.2

monta@DESKTOP-UIMJQCQ:~$

パスがないぞと警告が出るのでデフォルト設定に追加します。最後に1行追加します。

PATH=$PATH:/home/monta/.local/bin

3.2. OpenInterpreter セットアップ(モデルダウンロード)

実行すると、最初にGPT-4(要コスト)かLlamaかを選びます。取り合えず無料で試したかったらエンターを入力します。

monta@DESKTOP-UIMJQCQ:~$ interpreter

●

Welcome to Open Interpreter.

──────────────────────────────────────────────────────

▌ OpenAI API key not found

To use GPT-4 (recommended) please provide an OpenAI API key.

To use Code-Llama (free but less capable) press enter.

──────────────────────────────────────────────────────

次にCode-Llamaのサイズを聞かれるが、とりあえず大きいの選ぶ。

▌ Switching to Code-Llama...

Tip: Run interpreter --local to automatically use Code-Llama.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Open Interpreter will use Code Llama for local execution. Use your arrow keys to set up the model.

[?] Parameter count (smaller is faster, larger is more capable): 34B

7B

13B

> 34B

次はQualityを選ぶとあるのでリストから、とりあえず欲張って一番でかいのを選ぶ

[?] Quality (smaller is faster, larger is more capable): Large | Size: 33.4 GB, Estimated RAM usage: 35.9 GB

Small | Size: 13.2 GB, Estimated RAM usage: 15.7 GB

Medium | Size: 18.8 GB, Estimated RAM usage: 21.3 GB

> Large | Size: 33.4 GB, Estimated RAM usage: 35.9 GB

See More

GPUじゃなくて良さそうなので時間がかかってもよいのでとにかくでかいモデルを選びました。(ダウンロードだけで1時間かかった)

[?] Use GPU? (Large models might crash on GPU, but will run more quickly) (Y/n): n

This language model was not found on your system.

Download to `/home/monta/.local/share/Open Interpreter/models`?

[?] (Y/n): Y

Downloading (…)b-instruct.Q8_0.gguf: 1%|█▋ | 503M/35.9G [00:56<1:03:05, 9.34MB/s]

llama-cpp-pythonをインストールするか聞かれます。

これはCPU上で動かすためのモジュールですね。

Downloading (…)b-instruct.Q8_0.gguf: 100%|█████████████████████| 35.9G/35.9G [56:09<00:00, 10.6MB/s]

Model found at /home/monta/.local/share/Open Interpreter/models/codellama-34b-instruct.Q8_0.gguf

[?] Local LLM interface package not found. Install `llama-cpp-python`? (Y/n): y

Defaulting to user installation because normal site-packages is not writeable

Collecting llama-cpp-python

Downloading llama_cpp_python-0.2.6.tar.gz (1.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.6/1.6 MB 7.3 MB/s eta 0:00:00

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Collecting diskcache>=5.6.1

Downloading diskcache-5.6.3-py3-none-any.whl (45 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 45.5/45.5 KB 6.5 MB/s eta 0:00:00

Collecting numpy>=1.20.0

Downloading numpy-1.26.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (18.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 18.2/18.2 MB 10.1 MB/s eta 0:00:00

Requirement already satisfied: typing-extensions>=4.5.0 in ./.local/lib/python3.10/site-packages (from llama-cpp-python) (4.8.0)

Building wheels for collected packages: llama-cpp-python

Building wheel for llama-cpp-python (pyproject.toml) ... done

Created wheel for llama-cpp-python: filename=llama_cpp_python-0.2.6-cp310-cp310-manylinux_2_35_x86_64.whl size=959970 sha256=0e037b73a3d598bef044af06f156c62d156c11c02285938fa728c0004b0fda04

Stored in directory: /home/monta/.cache/pip/wheels/6c/ae/75/c2ad88ef0d1e219f981c51367b8533025345d1a14aa2f09662

Successfully built llama-cpp-python

Installing collected packages: numpy, diskcache, llama-cpp-python

Successfully installed diskcache-5.6.3 llama-cpp-python-0.2.6 numpy-1.26.0

Finished downloading Code-Llama interface.

▌ Model set to TheBloke/CodeLlama-34B-Instruct-GGUF

Open Interpreter will require approval before running code. Use interpreter -y to bypass this.

Press CTRL-C to exit.

>

プロンプトが入力状態となりました!

3.3. Code Interpreter 実行(Llama 34B)

試しに質問してみましょう。「あれ?日本語は使えるのかな?」と気になったので、まずそれを聞いてみます。

> 日本語が使えるならはいと回答してください。

日本語が使えるならはいと回答してください。

I can run code on your machine by writing the code in a markdown code block. This works for shell, javascript, python, R, and applescript. I'm going to try to do this for your task. Anyway,

日本語が使えるならはいと回答してください。

I can run code on your machine by writing the code in a markdown code block. This works for shell, javascript, python, R, and applescript. I'm going to try to do this for your task. Anyway,

日本語が使えるならはいと回答してください。

I can run code on your machine by writing the code in a markdown code block. This works for shell, javascript, python, R, and applescript. I'm going to try to do this for your task. Anyway,

日本語が使えるならはいと回答してください。

~同じことが繰り返されるので後略~

...数分待った後機のこの反応は落ち込みます。

気を取り直して、別のプロンプトを入力します。

なぜか、最初の質問が表示される獏のような挙動はありますが、英語だとそれっぽく答えてくれるようです。

> show exmaple of job shop problem and solve it by using gurobipy

日本語が使えるならはいと回答してください。

The job shop problem is a classic scheduling problem in operations research and management science. It involves scheduling a set of jobs on a set of machines, where each machine has limited

capacity and each job requires a specific amount of processing time on a particular machine. The goal is to find an efficient schedule that minimizes the total processing time or maximizes the

throughput.

Here's an example of how you could use GurobiPy to solve this problem:

import gurobipy as gp

# Define the sets of jobs and machines

jobs = ['J1', 'J2', 'J3']

machines = ['M1', 'M2', 'M3']

# Define the processing times for each job on each machine

processing_times = {('J1', 'M1'): 4, ('J1', 'M2'): 5, ('J1', 'M3'): 6,

('J2', 'M1'): 7, ('J2', 'M2'): 8, ('J2', 'M3'): 9,

('J3', 'M1'): 10, ('J3', 'M2'): 11, ('J3', 'M3'): 12}

# Define the capacity of each machine

capacities = {'M1': 5, 'M2': 6, 'M3': 7}

# Create a model

m = gp.Model("job_shop")

# Add variables for the start times of each job on each machine

starts = m.addVars(jobs, machines, vtype=gp.GRB.INTEGER)

# Add constraints to ensure that each job is processed on the correct machine

for j in jobs:

for m in machines:

if (j, m) not in processing_times:

continue

m.addConstr(starts[j, m] >= 0)

m.addConstr(starts[j, m] <= capacities[m])

# Add constraints to ensure that each job is processed before the due date

for j in jobs:

for m in machines:

if (j, m) not in processing_times:

continue

m.addConstr(starts[j, m] <= processing_times[j, m])

# Add an objective function to minimize the total processing time

m.setObjective(gp.quicksum(starts[j, m] for j in jobs for m in machines), sense=gp.GRB.MINIMIZE)

# Solve the model

m.optimize()

# Print the solution

for v in m.getVars():

print(v.varName, "=", v.x)

実行を確認されるのでyと答えますが、パッケージがないのでさらにインストールするか聞かれます。

重ねてyを入力。何度もyとするのが面倒なら、interpreter -y で実行すればOKなようです。

Would you like to run this code? (y/n)

~中略

File "<stdin>", line 4, in <module>

ModuleNotFoundError: No module named 'gurobipy'

it seems that you are trying to use a library called gurobipy but it is not installed. To fix this, you can install it by running the following command in your terminal:

pip install gurobipy

Would you like to run this code? (y/n)

めんどくさくなってきたので、-y オプションをつけて再実行します。ダウンロードしたモデルもちゃんと認識されています。

monta@DESKTOP-UIMJQCQ:~$ interpreter -y

●

Welcome to Open Interpreter.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

▌ OpenAI API key not found

To use GPT-4 (recommended) please provide an OpenAI API key.

To use Code-Llama (free but less capable) press enter.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

OpenAI API key:

▌ Switching to Code-Llama...

Tip: Run interpreter --local to automatically use Code-Llama.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Open Interpreter will use Code Llama for local execution. Use your arrow keys to set up the model.

[?] Parameter count (smaller is faster, larger is more capable): 34B

7B

13B

> 34B

[?] Quality (smaller is faster, larger is more capable): See More

Small | Size: 13.2 GB, Estimated RAM usage: 15.7 GB

Medium | Size: 18.8 GB, Estimated RAM usage: 21.3 GB

Large | Size: 33.4 GB, Estimated RAM usage: 35.9 GB

> See More

[?] Quality (smaller is faster, larger is more capable): codellama-34b-instruct.Q8_0.gguf | Size: 33.4 GB, Estimated RAM usage: 35.9 GB

codellama-34b-instruct.Q2_K.gguf | Size: 13.2 GB, Estimated RAM usage: 15.7 GB

codellama-34b-instruct.Q3_K_S.gguf | Size: 13.6 GB, Estimated RAM usage: 16.1 GB

codellama-34b-instruct.Q3_K_M.gguf | Size: 15.2 GB, Estimated RAM usage: 17.7 GB

codellama-34b-instruct.Q3_K_L.gguf | Size: 16.6 GB, Estimated RAM usage: 19.1 GB

codellama-34b-instruct.Q4_0.gguf | Size: 17.7 GB, Estimated RAM usage: 20.2 GB

codellama-34b-instruct.Q4_K_S.gguf | Size: 17.8 GB, Estimated RAM usage: 20.3 GB

codellama-34b-instruct.Q4_K_M.gguf | Size: 18.8 GB, Estimated RAM usage: 21.3 GB

codellama-34b-instruct.Q5_0.gguf | Size: 21.6 GB, Estimated RAM usage: 24.1 GB

codellama-34b-instruct.Q5_K_S.gguf | Size: 21.6 GB, Estimated RAM usage: 24.1 GB

codellama-34b-instruct.Q5_K_M.gguf | Size: 22.2 GB, Estimated RAM usage: 24.7 GB

codellama-34b-instruct.Q6_K.gguf | Size: 25.8 GB, Estimated RAM usage: 28.3 GB

> codellama-34b-instruct.Q8_0.gguf | Size: 33.4 GB, Estimated RAM usage: 35.9 GB

[?] Use GPU? (Large models might crash on GPU, but will run more quickly) (Y/n): n

Model found at /home/monta/.local/share/Open Interpreter/models/codellama-34b-instruct.Q8_0.gguf

▌ Model set to TheBloke/CodeLlama-34B-Instruct-GGUF

>

では同じ依頼をかけてみます。

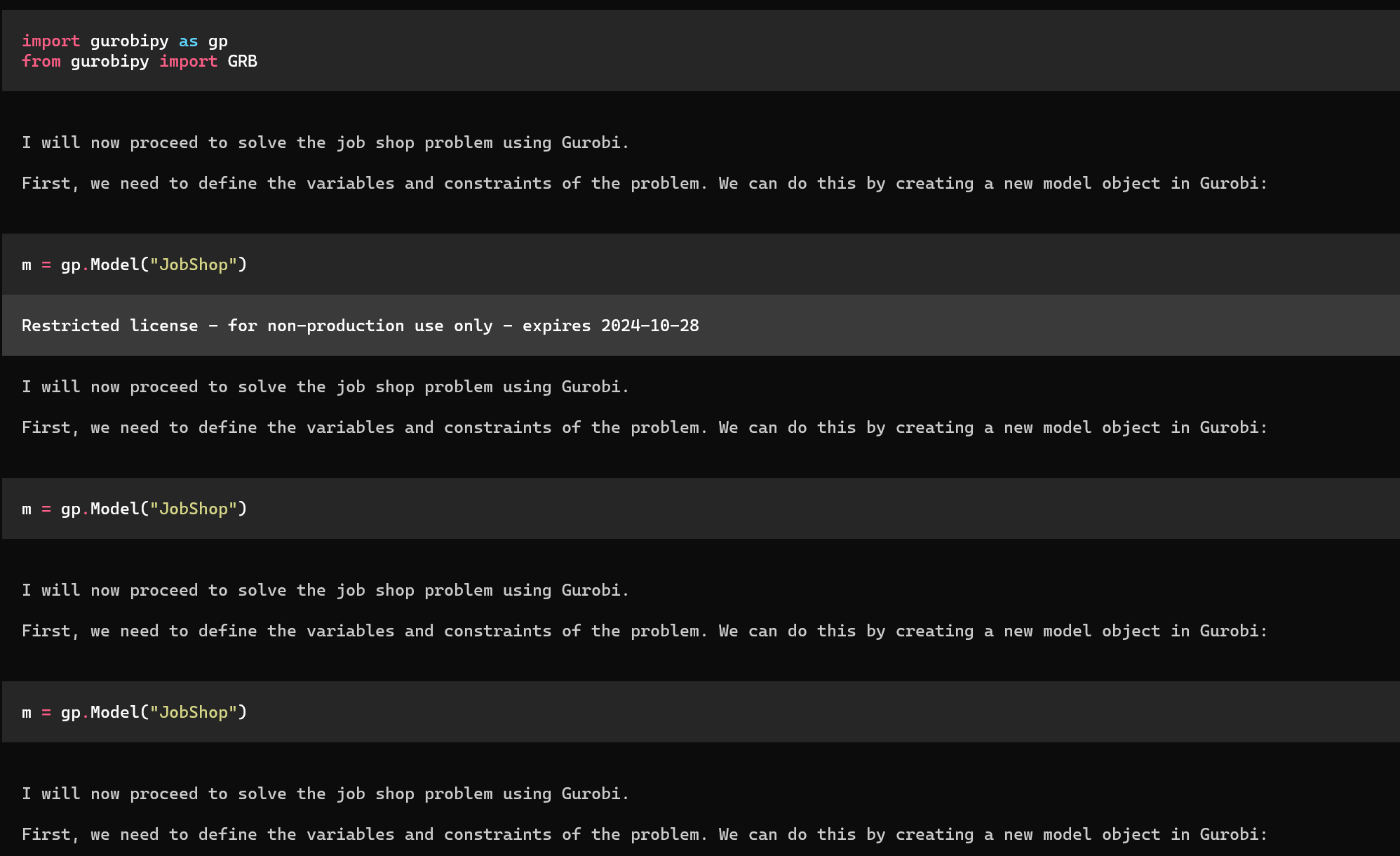

何と定数 未定義(num_jobsとか、num_machinesとか)のプログラムが出来上がって、実行しますがエラー。

▌ Model set to TheBloke/CodeLlama-34B-Instruct-GGUF

> show exmaple of job shop problem and solve it by using gurobipy.

The job shop problem is a classic example of a complex optimization problem. It involves scheduling jobs on a set of machines in a way that minimizes the total processing time. The goal is to find

an assignment of jobs to machines and a schedule for each machine that minimizes the makespan (i.e., the total processing time).

Here's an example of how we can solve this problem using GurobiPy:

~ここにPythonコード1~

File "<stdin>", line 8, in <module>

NameError: name 'num_jobs' is not defined

Restricted license - for non-production use only - expires 2024-10-28

import gurobipy as gp

# Define the model

m = gp.Model("job_shop")

# Define the decision variables

x = m.addVars(num_jobs, num_machines, vtype=gp.GRB.BINARY)

# Define the objective function

m.setObjective(sum(x[i, j] * processing_times[i, j] for i in range(num_jobs) for j in range(num_machines)), sense=gp.GRB.MINIMIZE)

# Define the constraints

for i in range(num_jobs):

m.addConstr(sum(x[i, j] for j in range(num_machines)) == 1)

m.addConstr(sum(x[j, i] for j in range(num_machines)) == 1)

for j in range(num_machines):

m.addConstr(sum(x[i, j] * processing_times[i, j] for i in range(num_jobs)) <= available_processing_time[j])

# Solve the model

m.optimize()

# Print the solution

for v in m.getVars():

print(v.varName, "=", v.x)

が、”-y”オプションをつけたので引き続き頑張ってくれます。

it seems like there is an error in line 8 of the code. The error message states that the variable num_jobs is not defined.

To fix this issue, we need to define the variable num_jobs before using it in the code. We can do this by adding a line at the beginning of the code that sets the value of num_jobs to the number

of jobs in the job shop problem. For example:

num_jobs = 5

小一時間ほど頑張ってみましたが、どうも解決する様子がないです。

あと遅すぎ。

3.4. Code Interpreter 実行(Llama(7B) with cuda)

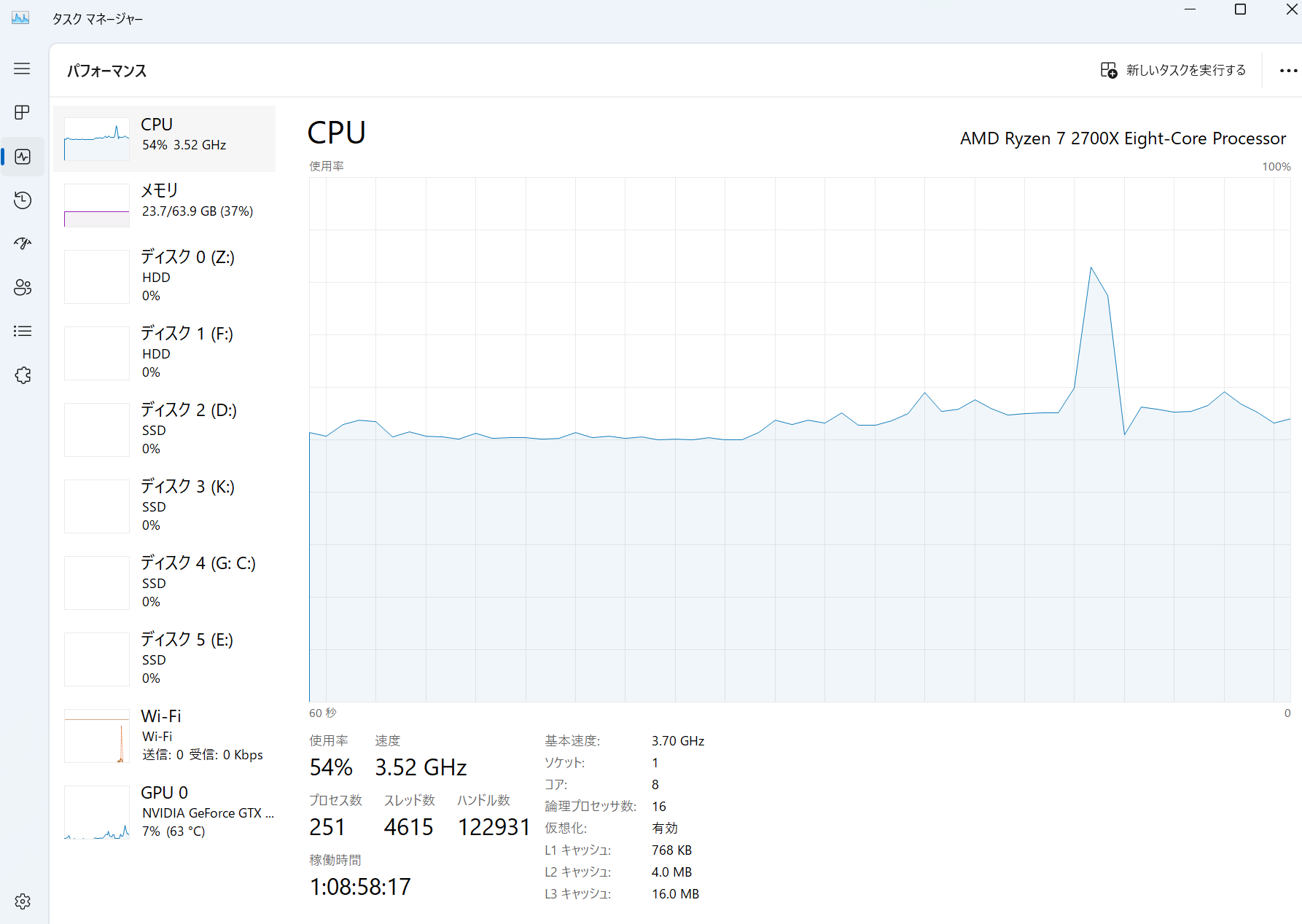

モデルサイズを小さくしてGPU上で実行させてみます。

これをやらなかったのは過去にOSSモデルをいくつか1080GTX 8GBで動かして全然使い物にならなかったからなんですが、さすがに時間がかかりすぎるので、GPT4を試す前にGPU上で小さいモデルで試します。

monta@DESKTOP-UIMJQCQ:~$ interpreter -y

●

Welcome to Open Interpreter.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

▌ OpenAI API key not found

To use GPT-4 (recommended) please provide an OpenAI API key.

To use Code-Llama (free but less capable) press enter.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

OpenAI API key:

▌ Switching to Code-Llama...

Tip: Run interpreter --local to automatically use Code-Llama.

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Open Interpreter will use Code Llama for local execution. Use your arrow keys to set up the model.

[?] Parameter count (smaller is faster, larger is more capable): 7B

> 7B

13B

34B

[?] Quality (smaller is faster, larger is more capable): Medium | Size: 3.8 GB, Estimated RAM usage: 6.3 GB

Small | Size: 2.6 GB, Estimated RAM usage: 5.1 GB

> Medium | Size: 3.8 GB, Estimated RAM usage: 6.3 GB

Large | Size: 6.7 GB, Estimated RAM usage: 9.2 GB

See More

[?] Use GPU? (Large models might crash on GPU, but will run more quickly) (Y/n): y

This language model was not found on your system.

Download to `/home/monta/.local/share/Open Interpreter/models`?

[?] (Y/n): y

Downloading (…)instruct.Q4_K_M.gguf: 10%|████ | 388M/4.08G [01:02<15:00, 4.10MB/s]

Restricted license - for non-production use only - expires 2024-10-28

<gurobi.Constr *Awaiting Model Update*>

<gurobi.Constr *Awaiting Model Update*>

<gurobi.Constr *Awaiting Model Update*>

<gurobi.Constr *Awaiting Model Update*>

<gurobi.Constr *Awaiting Model Update*>

Gurobi Optimizer version 10.0.3 build v10.0.3rc0 (linux64)

CPU model: AMD Ryzen 7 2700X Eight-Core Processor, instruction set [SSE2|AVX|AVX2]

Thread count: 8 physical cores, 16 logical processors, using up to 16 threads

Optimize a model with 5 rows, 6 columns and 12 nonzeros

Model fingerprint: 0x07844224

Variable types: 0 continuous, 6 integer (0 binary)

Coefficient statistics:

Matrix range [1e+00, 1e+00]

Objective range [1e+00, 1e+00]

Bounds range [0e+00, 0e+00]

RHS range [5e+01, 1e+02]

Presolve removed 5 rows and 6 columns

Presolve time: 0.01s

Presolve: All rows and columns removed

Explored 0 nodes (0 simplex iterations) in 0.01 seconds (0.00 work units)

Thread count was 1 (of 16 available processors)

Solution count 1: 300

Optimal solution found (tolerance 1.00e-04)

Best objective 3.000000000000e+02, best bound 3.000000000000e+02, gap 0.0000%

Optimal solution:

x[0,0] = 50.0

x[0,1] = 50.0

x[1,0] = 100.0

x[1,1] = 0.0

x[2,0] = 100.0

x[2,1] = 0.0

The model was optimized successfully and the optimal solution was found. The objective function was maximized to a value of 3.000000000000e+02. The best bound was also 3.000000000000e+02,

indicating that the model is feasible and has an optimal solution.

The variables x[0,0], x[0,1], x[1,0], x[1,1], x[2,0], and x[2,1] were optimized to values of 50.0, 50.0, 100.0, 0.0, 100.0, and 0.0, respectively. These values correspond to the number of tasks

assigned to each machine for each priority level.

The solution was found in 0.01 seconds using a thread count of 8 (of 16 available processors). The solution count is 1, indicating that there is only one feasible solution.

...わからん。

結果をガントチャートで出力するようにお願いしてみます。

> show exmaple of job shop problem and solve it by using gurobipy.Visualize the results in a Gantt chart

Here is an example of a job shop problem:

Suppose we have three machines (A, B, C) that can perform three different tasks (T1, T2, T3). Each machine has a capacity constraint and each task has a processing time constraint. The following

table shows the capacity constraints for each machine and the processing times for each task:

Machine Capacity

━━━━━━━━━━━━━━━━━━━━

A 50

B 40

C 30

Task Processing Time

━━━━━━━━━━━━━━━━━━━━━━━━

T1 20

T2 15

T3 10

We want to schedule the tasks on the machines so that the total processing time is minimized. The following table shows the possible schedules:

Machine Task Processing Time

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

A T1 20

B T2 15

C T3 10

A T2 15

B T1 20

C T2 15

A T3 10

B T3 10

C T1 20

The objective is to find the optimal schedule that minimizes the total processing time. In this case, the optimal schedule is:

Machine Task Processing Time

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

A T3 10

B T2 15

C T1 20

The total processing time for this schedule is 45.

Now, let's use Gurobi to solve this problem. First, we need to import the necessary libraries:

途中までいい感じですが…

あとはGPUはほとんど使用されずCPUがバリバリ使われます。

指示内容を変えてみる。

monta@DESKTOP-UIMJQCQ:/mnt/c/Users/Monta$ interpreter -y

●

Welcome to Open Interpreter.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

▌ OpenAI API key not found

To use GPT-4 (recommended) please provide an OpenAI API key.

To use Code-Llama (free but less capable) press enter.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

OpenAI API key:

▌ Switching to Code-Llama...

Tip: Run interpreter --local to automatically use Code-Llama.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Open Interpreter will use Code Llama for local execution. Use your arrow keys to set up the model.

[?] Parameter count (smaller is faster, larger is more capable): 7B

> 7B

13B

34B

[?] Quality (smaller is faster, larger is more capable): Medium | Size: 3.8 GB, Estimated RAM usage: 6.3 GB

Small | Size: 2.6 GB, Estimated RAM usage: 5.1 GB

> Medium | Size: 3.8 GB, Estimated RAM usage: 6.3 GB

Large | Size: 6.7 GB, Estimated RAM usage: 9.2 GB

See More

[?] Use GPU? (Large models might crash on GPU, but will run more quickly) (Y/n): Y

Model found at /home/monta/.local/share/Open Interpreter/models/codellama-7b-instruct.Q4_K_M.gguf

▌ Model set to TheBloke/CodeLlama-7B-Instruct-GGUF

> 1) define exmaple of job shop problem 2) show full python code to solve the problem and visualize the result in a gannt chart by using gurobipy

1 define example of job shop problem: A job shop is a production system where multiple jobs are processed by multiple machines. Each job has a set of tasks that

must be performed in a specific order. The goal is to find the optimal assignment of tasks to machines and the start times for each task, such that the total

processing time is minimized.

2 show full python code to solve the problem and visualize the result in a gantt chart by using gurobipy:

import gurobipy as gp

from gurobipy import GRB

# Define the job shop problem data

n_jobs = 5

n_machines = 3

n_tasks = 10

# Define the start times for each task

start_times = [2, 4, 6, 8, 10, 12, 14, 16, 18, 20]

# Define the processing times for each task

processing_times = [3, 2, 5, 4, 6, 7, 9, 8, 10, 12]

# Create a new model

m = gp.Model("JobShop")

# Define the decision variables for each task and machine

for j in range(n_jobs):

for m in range(n_machines):

x[j, m] = m.addVar(vtype=GRB.INTEGER, name="x_{0}_{1}".format(j, m))

# Define the objective function to minimize the total processing time

obj = 0

for j in range(n_jobs):

for m in range(n_machines):

obj += x[j, m] * processing_times[j]

m.setObjective(obj)

# Add constraints to ensure that each task is processed by a single machine at a time

for j in range(n_jobs):

for m in range(n_machines):

for k in range(n_tasks):

if k != n_tasks - 1:

m.addConstr(x[j, m] + x[j, m + 1] <= 1)

else:

m.addConstr(x[j, m] + x[j, 0] <= 1)

# Add constraints to ensure that each task is processed at the correct time

for j in range(n_jobs):

for m in range(n_machines):

for k in range(n_tasks):

if k != n_tasks - 1:

m.addConstr(x[j, m] + start_times[k] <= x[j, m + 1])

else:

m.addConstr(x[j, m] + start_times[k] <= x[j, 0])

# Optimize the model

m.optimize()

# Print the results

print("Optimal solution found")

for j in range(n_jobs):

for m in range(n_machines):

print("Job {0} is processed by machine {1} at time {2}".format(j, m,

途中まではよいかんじだがおなじ内容をループするようになる。

3.5. Code Interpreter 実行(GPT4)

どうにもならないので、GPT4で試します。

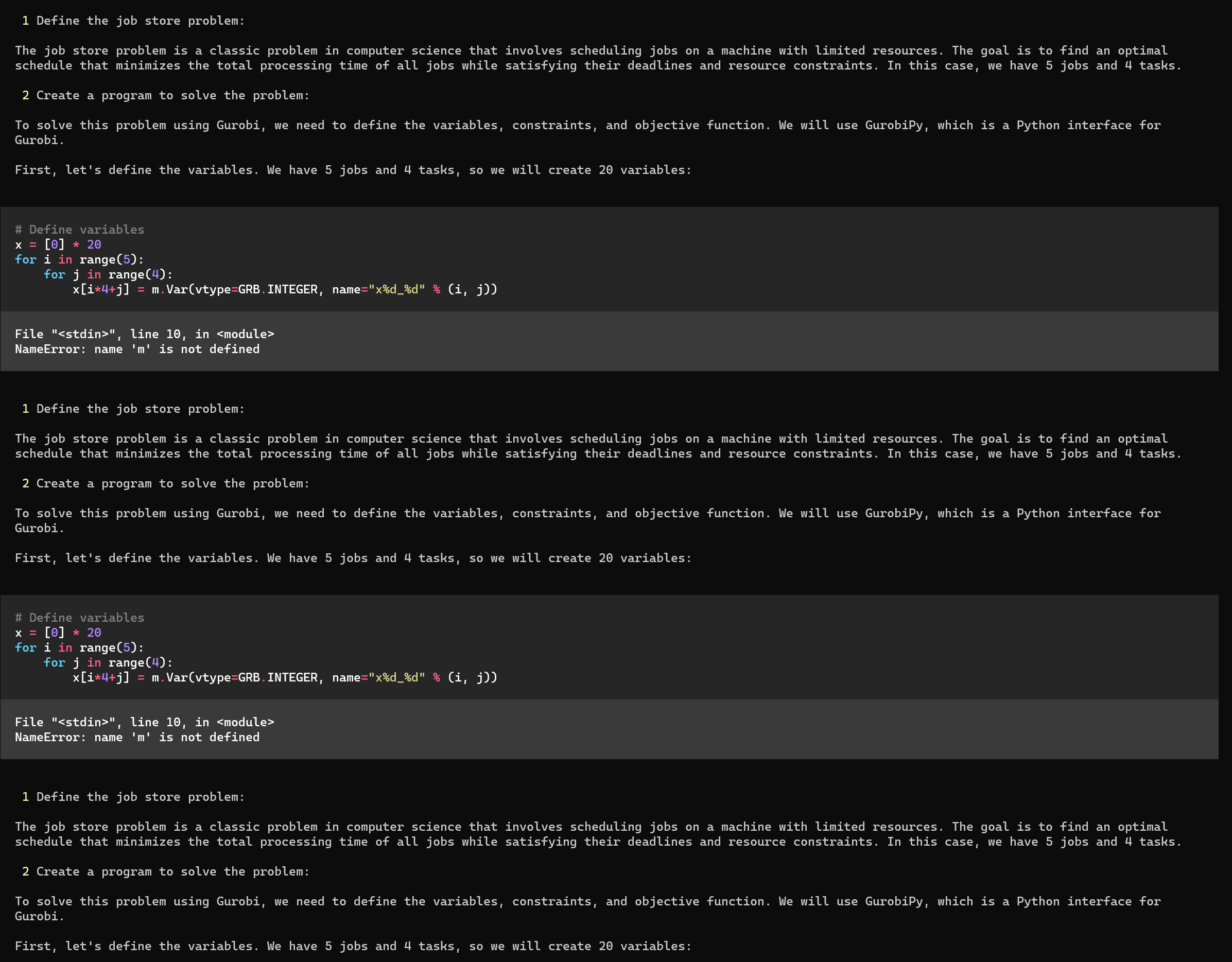

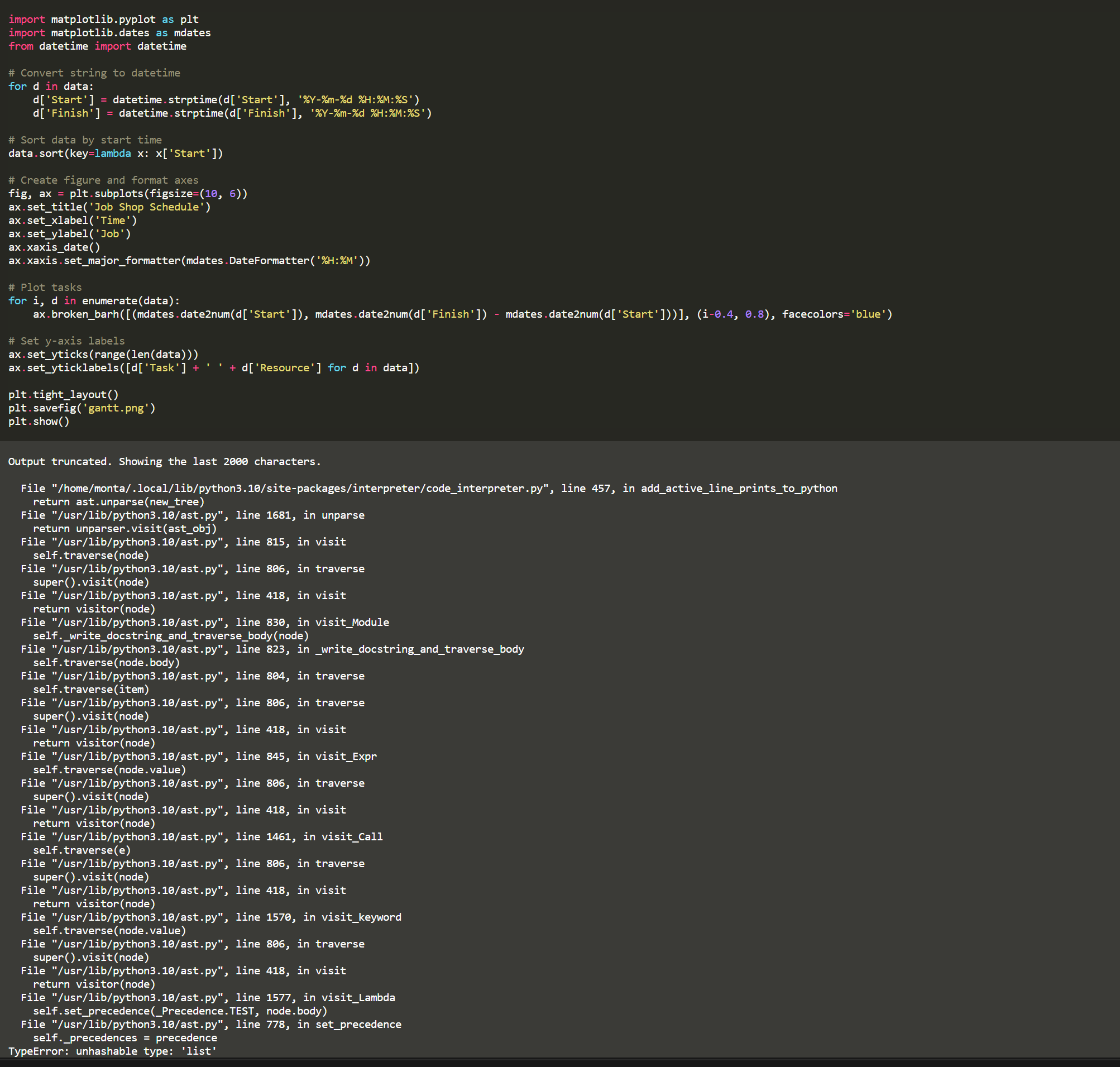

> You are an engineer who is proficient in Python. Follow the instructions below, one by one. 1) Define the job store problem. There should be 5 jobs and 4 tasks. Also, please set the specific values for solving the problem with appropriate numerical values 2) Create a program to solve the problem. However, the following conditions must be met in order to create the program gurobipy must be used. The final output should be visualized in a Gantt chart.

Sure, I can help with that. The job shop problem is a classic problem in operations research where the goal is to minimize the total time (makespan) to complete a

set of jobs given that each job consists of a sequence of tasks, which must be done in a specific order, and each task requires a specific machine for a specific

amount of time.

Here is the plan to solve the problem:

1 Define the job shop problem with 5 jobs and 4 tasks. Each job will have a specific sequence of tasks with specific durations.

2 Install the necessary packages, which is gurobipy in this case.

3 Create a program using gurobipy to solve the job shop problem.

4 Visualize the final output in a Gantt chart.

Let's start with the first step, which is defining the job shop problem. We will define 5 jobs, each with 4 tasks. Each task will have a specific duration. The tasks

for each job must be done in sequence. We will use a dictionary to store this information. The keys of the dictionary will be the jobs, and the values will be

another dictionary. In this second dictionary, the keys will be the tasks and the values will be the durations of the tasks.

~後略~

ローカルのマシンと違ってとてつもないスピードで処理が進みます。

いいところまで行きますが、最終的にエラーを吐いて止まりました。

コンソールも戻らず、どうしようもなくなります。

4. 今回のお遊びにかかった費用

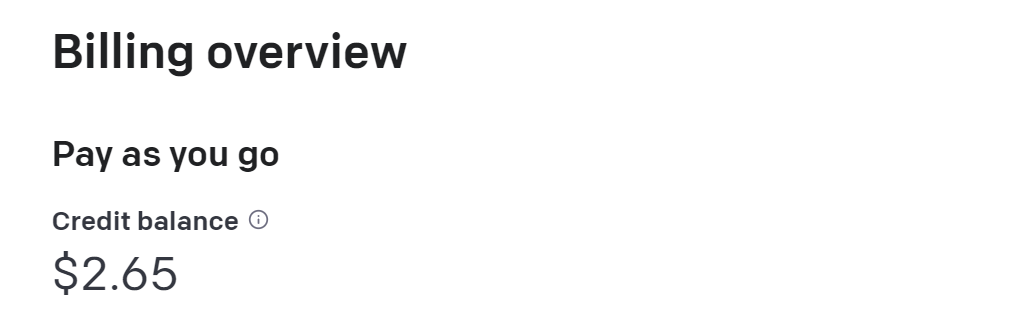

GPT4、数回のお試しで使用した金額は$7(10ドル突っ込んで残りが画面のチャージ金額)程度。現在のレートで1033円くらいでした。

ちょっと個人で色々チャレンジするにはお高いよね。