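I tried out PeopleOcclusion, which was added in ARKit 3.0.

I got stuck while trying to run the PeopleOcclusion sample, so here is a summary of what worked.

I started from an existing reference, but it did not work in my environment, so I worked through it myself.

I'm a beginner with shaders, so if you notice any mistakes or know a better approach, I'd appreciate a comment.

Demo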

I tried out PeopleOcclusion in ARKit 3.0. I'm going to make a game with this. pic.twitter.com/Fv8GE5RBa5

二月のタンクトップ@ARゲーム開発中 (@Raliemon), September 26, 2019

Environment

Unity 2019.2.3f1

Version 11.0 beta

iPhone XS (also worked on an iPhone XR)

iOS 13.0

I have not tested other environments, so it may not work on them.

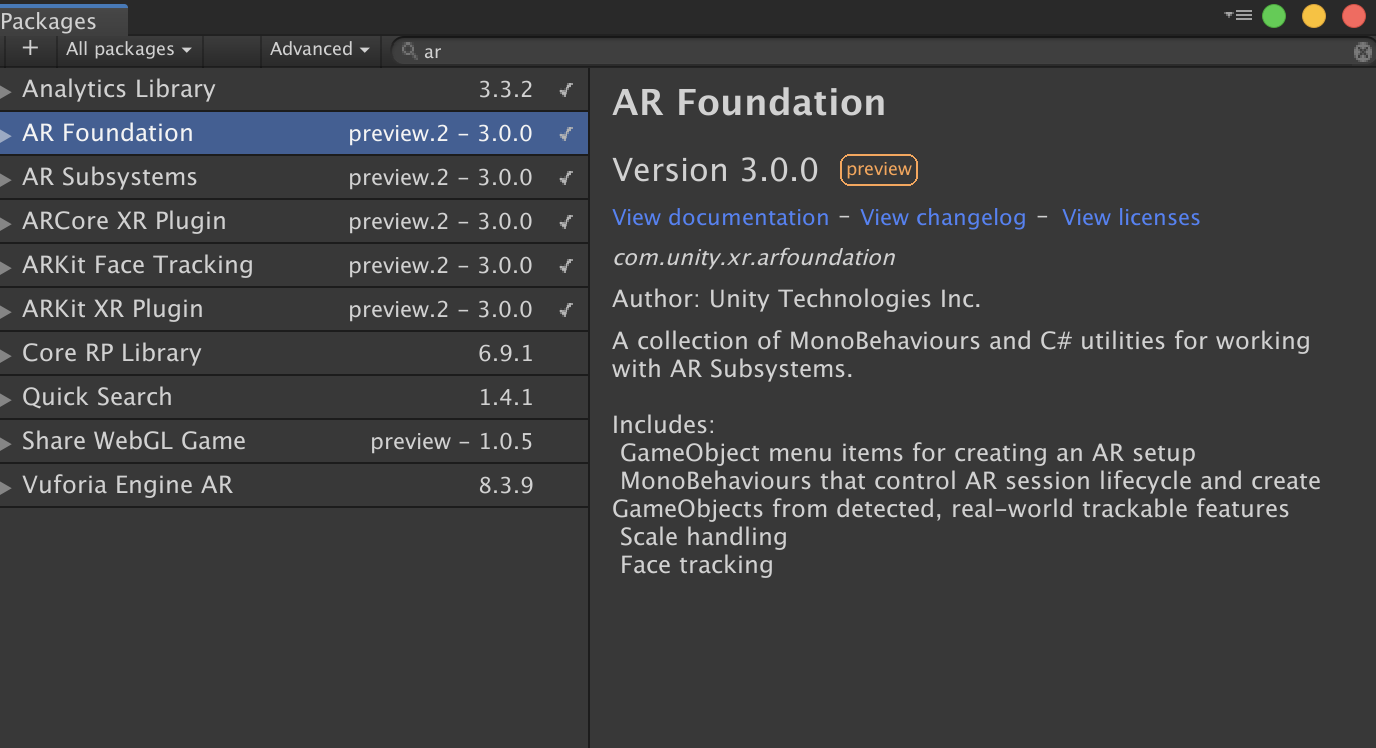

Installing AR Foundation

Install the following two packages from the Package Manager.

・AR Foundation

・ARKit XR Plugin

Implementing PeopleOcclusion

1. Create a scene in Unity

Delete the Main Camera.

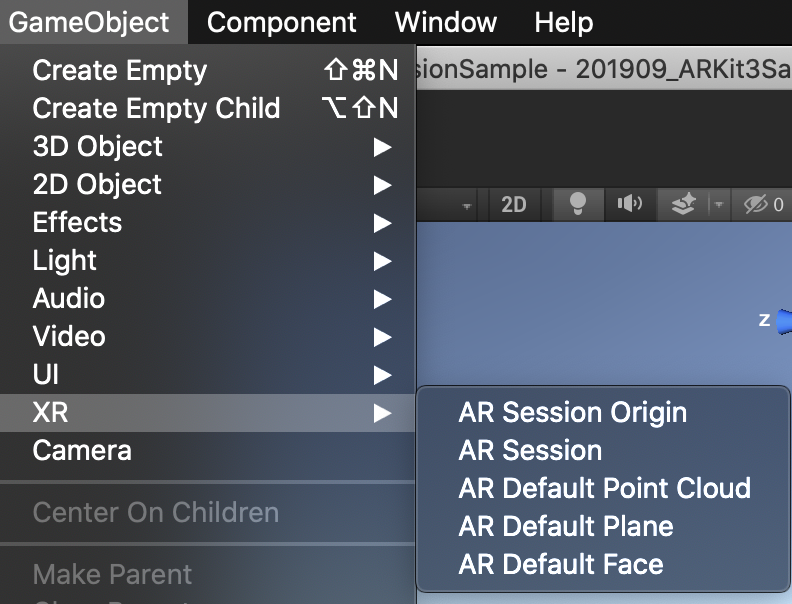

2. Add the following two objects from GameObject > XR

・AR Session

・AR Session Origin (this one already contains a camera object)

3. The AR Session looks like this

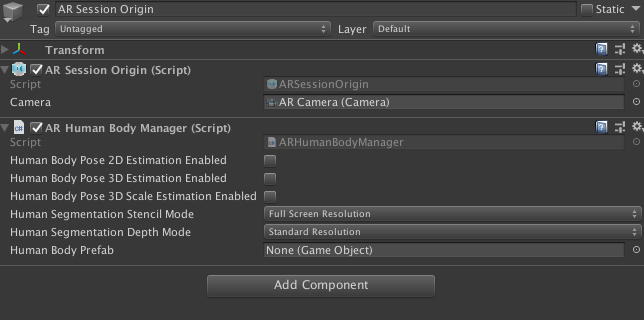

4. Add AR Human Body Manager to the AR Session Origin object

Set each field as shown in the image.

Note: at the time of writing, it failed unless Human Segmentation Stencil Mode and Human Segmentation Depth Mode were set to this combination.

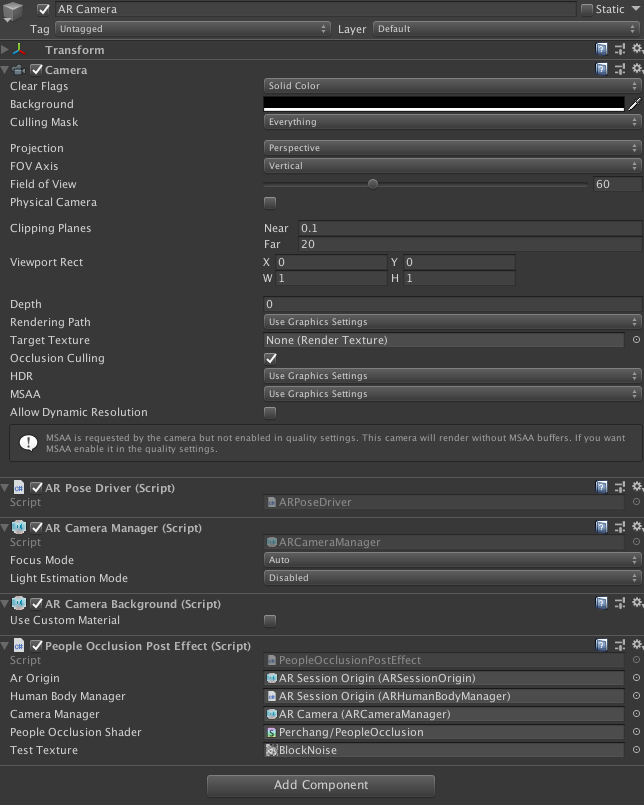

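5. Add PeopleOcclusionPostEffect to the AR Camera

Note: the PeopleOcclusionPostEffect script and the PeopleOcclusion shader are listed below.

Note: for testTexture, prepare any texture you like. The script binds it to _CameraFeed, which the shader draws where a person is in front of the scene.

6. Build

Build the project and you're done.

With this, both landscape and portrait orientations should be supported.

7. Script and shader samples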

using UnityEngine;

using System;

using Unity.Collections;

using Unity.Collections.LowLevel.Unsafe;

using UnityEngine.XR.ARFoundation;

using UnityEngine.XR.ARSubsystems;

[RequireComponent(typeof(ARCameraManager))]

public class PeopleOcclusionPostEffect : MonoBehaviour

{

[SerializeField] private ARSessionOrigin m_arOrigin = null;

[SerializeField] private ARHumanBodyManager m_humanBodyManager = null;

[SerializeField] private ARCameraManager m_cameraManager = null;

[SerializeField] private Shader m_peopleOcclusionShader = null;

[SerializeField] Texture2D testTexture;

private Texture2D m_cameraFeedTexture = null;

private Material m_material = null;

void Awake()

{

m_material = new Material(m_peopleOcclusionShader);

GetComponent<Camera>().depthTextureMode |= DepthTextureMode.Depth;

}

private void OnEnable()

{

m_cameraManager.frameReceived += OnCameraFrameReceived;

}

private void OnDisable()

{

m_cameraManager.frameReceived -= OnCameraFrameReceived;

}

void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if(PeopleOcclusionSupported())

{

if(m_cameraFeedTexture != null)

{

m_material.SetFloat("_UVMultiplierLandScape", CalculateUVMultiplierLandScape(m_cameraFeedTexture));

m_material.SetFloat("_UVMultiplierPortrait", CalculateUVMultiplierPortrait(m_cameraFeedTexture));

}

if(Input.deviceOrientation == DeviceOrientation.LandscapeRight)

{

m_material.SetFloat("_UVFlip", 0);

m_material.SetInt("_ONWIDE", 1);

}

else if(Input.deviceOrientation == DeviceOrientation.LandscapeLeft)

{

m_material.SetFloat("_UVFlip", 1);

m_material.SetInt("_ONWIDE", 1);

}

else

{

m_material.SetInt("_ONWIDE", 0);

}

m_material.SetTexture("_OcclusionDepth", m_humanBodyManager.humanDepthTexture);

m_material.SetTexture("_OcclusionStencil", m_humanBodyManager.humanStencilTexture);

// m_material.SetFloat("_ARWorldScale", 1f/m_arOrigin.transform.localScale.x);

Graphics.Blit(source, destination, m_material);

}

else

{

Graphics.Blit(source, destination);

}

}

private void OnCameraFrameReceived(ARCameraFrameEventArgs eventArgs)

{

if(PeopleOcclusionSupported())

{

RefreshCameraFeedTexture();

}

}

private bool PeopleOcclusionSupported()

{

return m_humanBodyManager.subsystem != null && m_humanBodyManager.humanDepthTexture != null && m_humanBodyManager.humanStencilTexture != null;

}

private void RefreshCameraFeedTexture()

{

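XRCameraImage cameraImage;

// Skip this frame if no CPU image is available; cameraImage is not valid in that case.

if (!m_cameraManager.TryGetLatestImage(out cameraImage)) return;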

if (m_cameraFeedTexture == null || m_cameraFeedTexture.width != cameraImage.width || m_cameraFeedTexture.height != cameraImage.height)

{

m_cameraFeedTexture = new Texture2D(cameraImage.width, cameraImage.height, TextureFormat.RGBA32, false);

// m_cameraFeedTexture = new Texture2D(Screen.width, Screen.height, TextureFormat.RGBA32, false);

}

CameraImageTransformation imageTransformation = Input.deviceOrientation == DeviceOrientation.LandscapeRight ? CameraImageTransformation.MirrorY : CameraImageTransformation.MirrorX;

XRCameraImageConversionParams conversionParams = new XRCameraImageConversionParams(cameraImage, TextureFormat.RGBA32, imageTransformation);

NativeArray<byte> rawTextureData = m_cameraFeedTexture.GetRawTextureData<byte>();

try

{

unsafe // requires "Allow 'unsafe' Code" to be enabled in Player Settings

{

cameraImage.Convert(conversionParams, new IntPtr(rawTextureData.GetUnsafePtr()), rawTextureData.Length);

}

}

finally

{

cameraImage.Dispose();

}

m_cameraFeedTexture.Apply();

m_material.SetTexture("_CameraFeed", testTexture);

}

private float CalculateUVMultiplierLandScape(Texture2D cameraTexture)

{

float screenAspect = (float)Screen.width / (float)Screen.height;

float cameraTextureAspect = (float)cameraTexture.width / (float)cameraTexture.height;

return screenAspect / cameraTextureAspect;

}

private float CalculateUVMultiplierPortrait(Texture2D cameraTexture)

{

float screenAspect = (float)Screen.height / (float)Screen.width;

float cameraTextureAspect = (float)cameraTexture.width / (float)cameraTexture.height;

return screenAspect / cameraTextureAspect;

}

}
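To make the two CalculateUVMultiplier helpers in the script above easier to check, here is a minimal sketch that repeats the same arithmetic outside Unity. The class name UVMultiplierExample and the sample resolutions (a 2436x1125 screen and a 1920x1440 camera image) are assumptions for illustration only; the real values come from Screen.width, Screen.height and the camera image at runtime.

```csharp
using System;

// Hypothetical stand-alone helper; mirrors the arithmetic of the script's
// CalculateUVMultiplierLandScape / CalculateUVMultiplierPortrait.
public static class UVMultiplierExample
{
    // Landscape: screen aspect (width / height) divided by camera image aspect.
    public static float Landscape(int screenW, int screenH, int camW, int camH)
    {
        float screenAspect = (float)screenW / screenH;
        float cameraAspect = (float)camW / camH;
        return screenAspect / cameraAspect;
    }

    // Portrait: the screen aspect is taken as height / width instead,
    // while the camera image aspect is still width / height.
    public static float Portrait(int screenW, int screenH, int camW, int camH)
    {
        float screenAspect = (float)screenH / screenW;
        float cameraAspect = (float)camW / camH;
        return screenAspect / cameraAspect;
    }
}
```

With the assumed values, Landscape(2436, 1125, 1920, 1440) and Portrait(1125, 2436, 1920, 1440) both come out at roughly 1.62, because the screen's width and height swap when the device rotates while the camera image stays 4:3.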

Shader "Perchang/PeopleOcclusion"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

_CameraFeed ("Texture", 2D) = "white" {}

_OcclusionDepth ("Texture", 2D) = "white" {}

_OcclusionStencil ("Texture", 2D) = "white" {}

_UVMultiplierLandScape ("UV Multiplier LandScape", Float) = 0.0

_UVMultiplierPortrait ("UV Multiplier Portrait", Float) = 0.0

_UVFlip ("Flip UV", Float) = 0.0

_ONWIDE("Onwide", Int) = 0

}

SubShader

{

// No culling or depth

Cull Off ZWrite Off ZTest Always

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct v2f

{

float2 uv : TEXCOORD0;

float2 uv1 : TEXCOORD1;

float2 uv2 : TEXCOORD2;

float4 vertex : SV_POSITION;

};

sampler2D _MainTex;

float4 _MainTex_ST;

sampler2D_float _OcclusionDepth;

sampler2D _OcclusionStencil;

sampler2D _CameraFeed;

sampler2D_float _CameraDepthTexture;

float _UVMultiplierLandScape;

float _UVMultiplierPortrait;

float _UVFlip;

int _ONWIDE;

v2f vert (appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

if(_ONWIDE == 1)

{

o.uv1 = float2(v.uv.x, (1.0 - (_UVMultiplierLandScape * 0.5f)) + (v.uv.y / _UVMultiplierLandScape));

o.uv2 = float2(lerp(1.0 - o.uv1.x, o.uv1.x, _UVFlip), lerp(o.uv1.y, 1.0 - o.uv1.y, _UVFlip));

}

else

{

o.uv1 = float2((1.0 - (_UVMultiplierPortrait * 0.5f)) + (v.uv.x / _UVMultiplierPortrait), v.uv.y);

o.uv2 = float2(lerp(1.0 - o.uv1.y, o.uv1.y, 0), lerp(o.uv1.x, 1.0 - o.uv1.x, 1));

}

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 col = tex2D(_MainTex, i.uv);

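fixed4 cameraFeedCol = tex2D(_CameraFeed, i.uv1 * _Time.xy); // scale the UVs by time so the texture animates; .xy makes the float4 -> float2 truncation explicit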

float sceneDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv));

float4 stencilCol = tex2D(_OcclusionStencil, i.uv2);

float occlusionDepth = tex2D(_OcclusionDepth, i.uv2).r * 0.625; // 0.625: hack to scale the occlusion depth, based on real-world observation

float showOccluder = step(occlusionDepth, sceneDepth) * stencilCol.r; // 1 if (sceneDepth >= occlusionDepth && stencil)

return lerp(col, cameraFeedCol, showOccluder);

}

ENDCG

}

}

}
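The per-pixel decision made in the fragment shader above can be sketched in plain C# to see how the depth comparison and the stencil interact. This is only an illustration: the class OcclusionCompositeExample and its method names are hypothetical, the depth values are made-up numbers, and it assumes the human depth sample and the scene depth are in the same units.

```csharp
using System;

// Hypothetical CPU-side re-statement of the shader's showOccluder logic.
public static class OcclusionCompositeExample
{
    // Equivalent of HLSL step(edge, x): 1 when x >= edge, otherwise 0.
    public static float Step(float edge, float x)
    {
        return x >= edge ? 1f : 0f;
    }

    // Equivalent of HLSL lerp(a, b, t) for a single channel.
    public static float Lerp(float a, float b, float t)
    {
        return a + (b - a) * t;
    }

    // Blend weight used by the shader: 1 means "draw the person (occluder)",
    // 0 means "keep the rendered scene colour".
    public static float ShowOccluder(float humanDepthSample, float sceneDepth, float stencil)
    {
        // Same empirical 0.625 scale factor as in the shader.
        float occlusionDepth = humanDepthSample * 0.625f;
        return Step(occlusionDepth, sceneDepth) * stencil;
    }
}
```

For example, ShowOccluder(1.6f, 2.0f, 1f) gives 1 (the person is closer than the virtual object, so the occluder texture is shown), ShowOccluder(1.6f, 0.5f, 1f) gives 0 (the virtual object is in front), and any call with stencil 0 gives 0 because no person was detected at that pixel.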