Note: this article was written in 2020.

#Overview

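This model extends an earlier tenpai classifier. The earlier network takes the 286 ways of choosing 3 tiles from a 13-tile hand, runs each combination through three yes/no neural networks (YN; in the program below these are the shuntsu, kotsu, and kokushi YNs), and judges from those results whether the hand is tenpai. This version additionally takes the 78 ways of choosing 2 tiles from the 13 and runs each pair through a YN that judges whether it is a toitsu (pair) and a YN that judges whether it is a tatsu (two-tile partial sequence).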
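As a rough illustration of the enumeration described above, the following sketch expands a 34-entry tile-count vector into 13 tile indices and lists its three-tile and two-tile combinations. The helper `counts_to_tiles` and the example hand are hypothetical and not part of the original program, which uses explicit loops for the same conversion; the article does not state which index maps to which tile, so the indices here are arbitrary.

```python
import itertools
import numpy as np

def counts_to_tiles(counts):
    """Expand a per-tile-type count vector into a sorted array of tile indices."""
    counts = np.asarray(counts, dtype=int)
    return np.repeat(np.arange(len(counts)), counts)

# Hypothetical 13-tile hand: one each of tile types 0..8, two of type 9,
# and one each of types 10 and 11 (9 + 2 + 1 + 1 = 13 tiles).
counts = np.zeros(34, dtype=int)
counts[0:9] = 1
counts[9] = 2
counts[10] = 1
counts[11] = 1

tiles = counts_to_tiles(counts)
triples = list(itertools.combinations(tiles, 3))  # inputs for the three-tile YNs
pairs = list(itertools.combinations(tiles, 2))    # inputs for the two-tile YNs

print(len(tiles), len(triples), len(pairs))
```

Because `itertools.combinations` preserves input order, a sorted hand yields combinations whose tiles are also in ascending order.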

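The outputs of the three YNs over the 286 three-tile combinations, together with the outputs of the two YNs over the 78 two-tile combinations, form the training data for the tenpai YN.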
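The layout of one training row can be sketched as follows. The concatenation order mirrors the `np.hstack` calls in the program (shuntsu, kotsu, kokushi, toitsu, tatsu); the function name `build_features` and the random placeholder answers are assumptions made only for this illustration.

```python
import numpy as np

N_TRIPLES, N_PAIRS = 286, 78

def build_features(shuntsu, kotsu, kokushi, toitsu, tatsu):
    """Concatenate the per-combination 0/1 answers into one feature vector."""
    parts = [shuntsu, kotsu, kokushi, toitsu, tatsu]
    return np.concatenate([np.asarray(p, dtype=np.float32) for p in parts])

# Placeholder 0/1 answers standing in for real YN outputs.
rng = np.random.default_rng(0)
triple_answers = [rng.integers(0, 2, N_TRIPLES) for _ in range(3)]
pair_answers = [rng.integers(0, 2, N_PAIRS) for _ in range(2)]

features = build_features(*triple_answers, *pair_answers)
print(features.shape)  # (1014,)
```

The length 1014 = 286 × 3 + 78 × 2 matches the `input_shape=(1014,)` of the tenpai network.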

The dataset consists of 4,942 tenpai hands and 4,942 noten (not tenpai) hands.

The number of YN model evaluations is "number of hands × ((286 combinations × 3 YNs) + (78 combinations × 2 YNs))", that is, 9,884 × 1,014 = 10,022,376.
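The arithmetic can be checked with a few lines of Python. This is only a verification sketch; the variable names are chosen for the illustration and `math.comb` requires Python 3.8 or later.

```python
from math import comb

hands = 4942 * 2                 # tenpai hands plus noten hands
triples = comb(13, 3)            # three-tile combinations per hand
pairs = comb(13, 2)              # two-tile combinations per hand
calls_per_hand = triples * 3 + pairs * 2
total_calls = hands * calls_per_hand

print(triples, pairs, calls_per_hand, total_calls)
# 286 78 1014 10022376
```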

$${}_{13}C_2 = \binom{13}{2} = 78$$

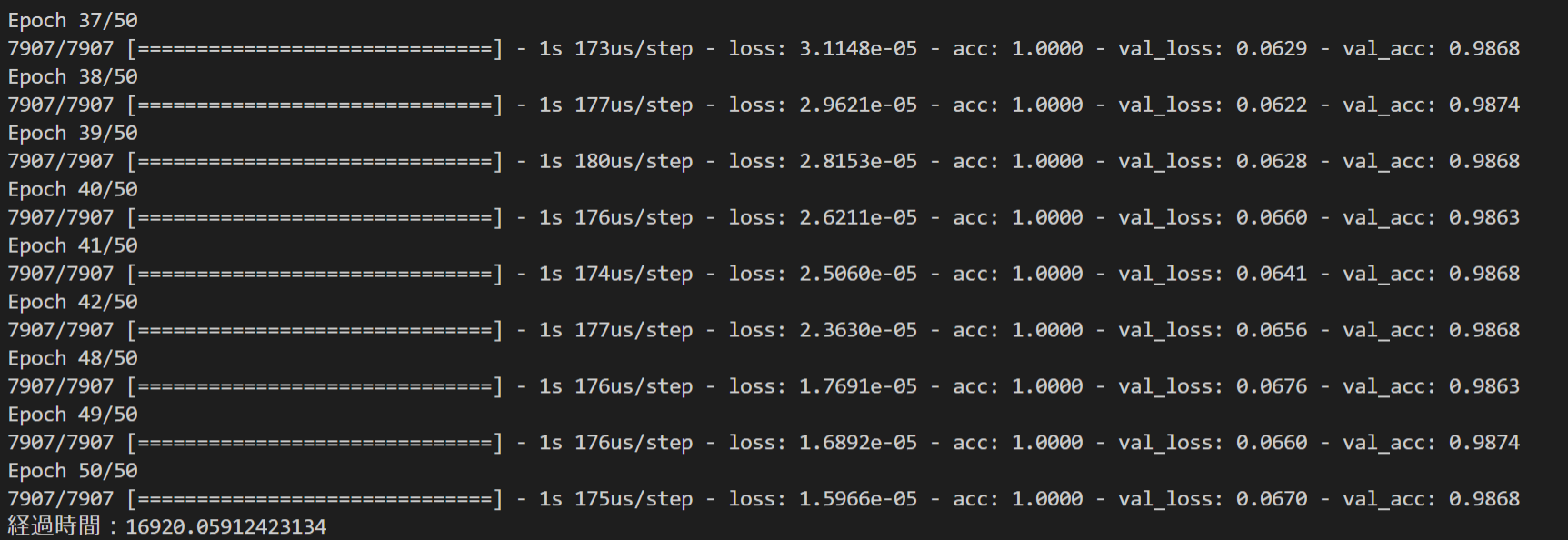

#Results

Accuracy on the test data came to about 98.6%.

#Program

# coding: UTF-8
import numpy as np
import itertools
import time
from keras.models import load_model
from keras.utils import to_categorical
from keras.models import Sequential
from keras.layers import Dense
from keras.optimizers import Adam

np.set_printoptions(threshold=10000000,linewidth=200)

t1 = time.time()

#x_test = np.loadtxt("C:/Users/p-user/Desktop/csvdata/old/tehai_xtest.csv",delimiter=",")

#y_test = np.loadtxt("C:/Users/p-user/Desktop/csvdata/old/tehai_ytest.csv",delimiter=",")

data = 4942

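x_train = np.zeros((data,34), dtype='float32')  # x_train holds the tenpai hands as per-tile counts
with open('C:/sqlite/tenpai.csv', 'r') as fr:
    for i,row in enumerate(fr.readlines(),start=0):
        if i < data:
            # the first 34 characters of a row are read as one digit per tile type
            # (np.float was removed from NumPy; the builtin float behaves the same here)
            x_train[i] += np.array(list(map(float,row[:34])))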

y_train = np.zeros((data,34), dtype='float32')  # y_train holds the noten hands as per-tile counts
with open('C:/sqlite/noten.csv', 'r') as fr:
    for i,row in enumerate(fr.readlines(),start=0):
        if i < data:
            # the first 34 characters of a row are read as one digit per tile type
            y_train[i] += np.array(list(map(float,row[:34])))

tehai = np.zeros((data,13))  # expand x_train into 13 tile indices per hand (tehai)
for i in range(data):
    cnt = 0
    for j in range(34):
        # a tile type can appear at most four times, so its count is tested four times
        if x_train[i,j] >= 1:
            tehai[i,cnt] = j
            cnt += 1
            x_train[i,j] -= 1
        if x_train[i,j] >= 1:
            tehai[i,cnt] = j
            cnt += 1
            x_train[i,j] -= 1
        if x_train[i,j] >= 1:
            tehai[i,cnt] = j
            cnt += 1
            x_train[i,j] -= 1
        if x_train[i,j] >= 1:
            tehai[i,cnt] = j
            cnt += 1
            x_train[i,j] -= 1

tehaiN = np.zeros((data,13))  # expand y_train into 13 tile indices per hand (tehaiN)
for i in range(data):
    cnt = 0
    for j in range(34):
        # a tile type can appear at most four times, so its count is tested four times
        if y_train[i,j] >= 1:
            tehaiN[i,cnt] = j
            cnt += 1
            y_train[i,j] -= 1
        if y_train[i,j] >= 1:
            tehaiN[i,cnt] = j
            cnt += 1
            y_train[i,j] -= 1
        if y_train[i,j] >= 1:
            tehaiN[i,cnt] = j
            cnt += 1
            y_train[i,j] -= 1
        if y_train[i,j] >= 1:
            tehaiN[i,cnt] = j
            cnt += 1
            y_train[i,j] -= 1

label=[0,1]

tehaiC = np.zeros((data,286,3))
for i in range(data):  # expand tehai into its 286 three-tile combinations
    tehaiC[i,] = list(itertools.combinations(tehai[i,], 3))
    #print(tehaiC[i,])

tehaiCN = np.zeros((data,286,3))
for i in range(data):  # expand tehaiN into its 286 three-tile combinations
    tehaiCN[i,] = list(itertools.combinations(tehaiN[i,], 3))
    #print(tehaiC[i,])

tehaiC78 = np.zeros((data,78,2))
for i in range(data):  # expand tehai into its 78 two-tile combinations
    tehaiC78[i,] = list(itertools.combinations(tehai[i,], 2))

tehaiCN78 = np.zeros((data,78,2))
for i in range(data):  # expand tehaiN into its 78 two-tile combinations
    tehaiCN78[i,] = list(itertools.combinations(tehaiN[i,], 2))

modelToitsu = load_model('toitsuYN.h5')
ToitsuYN = np.zeros((data*2,78))  # holds the toitsu (pair) YN results
for i in range(data):  # run tehaiC78 through the toitsu YN
    for j in range(78):
        x_train = np.zeros((1,2))
        x_train[0,] = tehaiC78[i,j]
        x_train = x_train/33.  # scale tile indices 0..33 into [0, 1]
        pred = modelToitsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        ToitsuYN[i,j] = np.argmax(pred[0])
for i in range(data):  # run tehaiCN78 through the toitsu YN
    for j in range(78):
        x_train = np.zeros((1,2))
        x_train[0,] = tehaiCN78[i,j]
        x_train = x_train/33.
        pred = modelToitsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        ToitsuYN[i+data,j] = np.argmax(pred[0])

modelTatsu = load_model('tatsuYN.h5')
TatsuYN = np.zeros((data*2,78))  # holds the tatsu (partial sequence) YN results
for i in range(data):  # run tehaiC78 through the tatsu YN
    for j in range(78):
        x_train = np.zeros((1,2))
        x_train[0,] = tehaiC78[i,j]
        x_train = x_train/33.  # scale tile indices 0..33 into [0, 1]
        pred = modelTatsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        TatsuYN[i,j] = np.argmax(pred[0])
for i in range(data):  # run tehaiCN78 through the tatsu YN
    for j in range(78):
        x_train = np.zeros((1,2))
        x_train[0,] = tehaiCN78[i,j]
        x_train = x_train/33.
        pred = modelTatsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        TatsuYN[i+data,j] = np.argmax(pred[0])

modelShunts = load_model('shuntsYN.h5')
ShuntsYN = np.zeros((data*2,286))  # holds the shuntsu (sequence) YN results
for i in range(data):  # run tehaiC through the shuntsu YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiC[i,j]
        x_train = x_train/33.  # scale tile indices 0..33 into [0, 1]
        pred = modelShunts.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        ShuntsYN[i,j] = np.argmax(pred[0])
for i in range(data):  # run tehaiCN through the shuntsu YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiCN[i,j]
        x_train = x_train/33.
        pred = modelShunts.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        ShuntsYN[i+data,j] = np.argmax(pred[0])

modelkotsu = load_model('kotsuYN.h5')
kotsuYN = np.zeros((data*2,286))  # holds the kotsu (triplet) YN results
for i in range(data):  # run tehaiC through the kotsu YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiC[i,j]
        x_train = x_train/33.  # scale tile indices 0..33 into [0, 1]
        pred = modelkotsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        kotsuYN[i,j] = np.argmax(pred[0])
for i in range(data):  # run tehaiCN through the kotsu YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiCN[i,j]
        x_train = x_train/33.
        pred = modelkotsu.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        kotsuYN[i+data,j] = np.argmax(pred[0])

modelkokushi = load_model('kokushiYN.h5')
kokushiYN = np.zeros((data*2,286))  # holds the kokushi YN results
for i in range(data):  # run tehaiC through the kokushi YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiC[i,j]
        x_train = x_train/33.  # scale tile indices 0..33 into [0, 1]
        pred = modelkokushi.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        kokushiYN[i,j] = np.argmax(pred[0])
for i in range(data):  # run tehaiCN through the kokushi YN
    for j in range(286):
        x_train = np.zeros((1,3))
        x_train[0,] = tehaiCN[i,j]
        x_train = x_train/33.
        pred = modelkokushi.predict(x_train, batch_size=1, verbose=1)
        pred_label = label[np.argmax(pred[0])]
        kokushiYN[i+data,j] = np.argmax(pred[0])

YN3 = np.zeros((data*2,1014))  # 286*3 + 78*2 = 1014 features per hand
for i in range(data*2):
    ShunKotYN = np.hstack((ShuntsYN[i,],kotsuYN[i,]))
    SKKYN = np.hstack((ShunKotYN,kokushiYN[i,]))
    SKKYN_toitsu = np.hstack((SKKYN,ToitsuYN[i,]))
    SKKYN_tatsu = np.hstack((SKKYN_toitsu,TatsuYN[i,]))
    YN3[i,] = SKKYN_tatsu
#print(YN3[0,])
#print(YN3.shape)

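train = np.zeros((data*2,1015))  # 1014 features plus the class label in the last column
for i in range(data):
    x = np.append(YN3[i,],1)  # label 1: tenpai
    train[i,] = x
for i in range(data):
    x = np.append(YN3[i+data,],0)  # label 0: noten
    train[i+data,] = x
np.random.shuffle(train)  # shuffle rows so features and labels stay paired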

lavel = np.zeros((data*2,1))
for i in range(data*2):
    if train[i,1014] == 1:
        lavel[i,0] = 1
train=np.delete(train,1014,1)  # drop the label column from the features
lavel = to_categorical(lavel,2)
# train is the training data
# lavel holds the ground-truth labels

model = Sequential()
# input layer and hidden layer, ReLU activation
model.add(Dense(units=507,input_shape=(1014,),activation='relu'))
# output layer with 2 units, softmax activation
model.add(Dense(units=2,activation='softmax'))
# Adam optimizer, cross-entropy loss
model.compile(
    optimizer=Adam(learning_rate=0.005),  # written as lr=0.005 in older Keras releases
    loss='categorical_crossentropy',
    metrics=['accuracy'],
)

history_adam=model.fit(
    train,
    lavel,
    batch_size=100,
    epochs=50,
    verbose=1,
    shuffle = True,
    validation_split=0.2
)

#model.save('tenpaiYN.h5')

t2 = time.time()

elapsed_time = t2-t1

print(f"Elapsed time: {elapsed_time}")
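The program above calls `predict` once per combination with `batch_size=1`, which adds up to roughly ten million separate calls. One possible way to reduce that overhead, sketched here under the assumption that the YN models accept an `(n, k)` array just as they accept `(1, k)`, is to flatten all combinations into a single array and predict in one call. The function `classify_combinations` and the stand-in predictor `fake_toitsu_predict` are hypothetical and exist only to make the sketch runnable without the trained `.h5` files.

```python
import numpy as np

def classify_combinations(combos, predict_fn):
    """Run a yes/no classifier over every combination in one batched call.

    combos:     array of shape (hands, n_combos, k) holding tile indices 0..33
    predict_fn: callable mapping an (n, k) array to (n, 2) class scores,
                for example a Keras model's predict method
    returns:    array of shape (hands, n_combos) with the argmax class (0 or 1)
    """
    hands, n_combos, k = combos.shape
    flat = combos.reshape(hands * n_combos, k) / 33.0  # same scaling as the program
    scores = predict_fn(flat)
    return np.argmax(scores, axis=1).reshape(hands, n_combos)

# Stand-in for a trained pair model (hypothetical): answers "yes" when both tiles match.
def fake_toitsu_predict(x):
    same = np.isclose(x[:, 0], x[:, 1])
    return np.stack([~same, same], axis=1).astype(np.float32)

combos = np.array([[[0, 0], [0, 5]],
                   [[7, 8], [9, 9]]], dtype=np.float32)
result = classify_combinations(combos, fake_toitsu_predict)
print(result)
```

With the real models, a call such as `classify_combinations(tehaiC78, modelToitsu.predict)` would be expected to fill the first half of `ToitsuYN` in one step, though this has not been checked against the original trained models.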