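Notes from trying to run tacotron2 on AMD's ROCm build of PyTorch.

To give the conclusion first: neither inference nor training worked.

However, I have not yet run the same steps on CUDA, so I can't say for certain whether ROCm is really to blame.

Environment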

ROCm version 2.8.13

GPU RadeonⅦ

Docker version 19.03.2, build 6a30dfc

Note: a GTX1080Ti is used as the GPU for CUDA verification

Since this uses PyTorch, I built the environment while referring to the following notes:

https://qiita.com/T_keigo_wwk/items/e87c6e3b11cfe67785b6

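Environment setup

First, test whether training runs at all.

Note: the Dockerfile created in the inference section will probably work as-is, so building that instead is also fine.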

Pull an image with PyTorch preinstalled and start a container from it:

```bash
sudo docker pull rocm/pytorch:rocm2.7_ubuntu18.04_py3.6_pytorch
sudo docker run --name pytorch_rocm -it --privileged --shm-size 8G --rm --device=/dev/kfd --device=/dev/dri --group-add video rocm/pytorch:rocm2.7_ubuntu18.04_py3.6_pytorch
```

Inside the container, do the setup work:

```bash
git clone https://github.com/NVIDIA/tacotron2.git
cd tacotron2
wget https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2
tar xf ./LJSpeech-1.1.tar.bz2
sed -i -- 's,DUMMY,/tacotron2/LJSpeech-1.1/wavs,g' filelists/*.txt
git submodule init; git submodule update
```
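You can also do the `DUMMY` substitution from Python, for example to see how many filelists were actually changed. Below is a minimal sketch equivalent to the `sed` command above. Like that command, it assumes the placeholder is literally `DUMMY` and the dataset was extracted to `/tacotron2/LJSpeech-1.1/wavs`. The function name `replace_placeholder` is my own.

```python
from pathlib import Path


def replace_placeholder(filelist_dir: str, placeholder: str = "DUMMY",
                        wav_dir: str = "/tacotron2/LJSpeech-1.1/wavs") -> int:
    """Replace every occurrence of `placeholder` with `wav_dir` in *.txt files.

    Mirrors `sed -i 's,DUMMY,<wav_dir>,g' filelists/*.txt` and returns how
    many files were actually modified.
    """
    changed = 0
    for path in sorted(Path(filelist_dir).glob("*.txt")):
        text = path.read_text(encoding="utf-8")
        # str.replace substitutes all occurrences, like sed's trailing `g` flag.
        new_text = text.replace(placeholder, wav_dir)
        if new_text != text:
            path.write_text(new_text, encoding="utf-8")
            changed += 1
    return changed
```

Run it from the repository root as `replace_placeholder("filelists")`. On a fresh clone a return value of 0 would suggest the filelists were already rewritten.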

Running `pip3 install -r ./requirements.txt` with the file unchanged does not work, so requirements.txt needs a few edits. I changed it to:

```text
matplotlib==2.1.0
tensorflow==1.14.0
numpy==1.17.2
inflect==0.2.5
librosa==0.6.0
scipy
tensorboardX==1.9
Unidecode==1.0.22
pillow
```

With the default file, pip installs TensorFlow 2.0.0. That causes errors such as `AttributeError: module 'tensorflow' has no attribute 'contrib'`, so I recommend installing 1.14.0 instead.

After rewriting the file as above, training can at least be started.
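The requirements edits can also be scripted. The sketch below rewrites the versions using the list above. `pin_requirements` and `PINS` are hypothetical names. The parsing only handles simple `name` / `name==version` lines, not every format pip accepts.

```python
import re

# Versions from the list above (None = leave the package unpinned).
PINS = {
    "matplotlib": "2.1.0",
    "tensorflow": "1.14.0",
    "numpy": "1.17.2",
    "inflect": "0.2.5",
    "librosa": "0.6.0",
    "scipy": None,
    "tensorboardX": "1.9",
    "Unidecode": "1.0.22",
    "pillow": None,
}

# Leading package name of a requirement line, e.g. "numpy" in "numpy==1.13.3".
_NAME_RE = re.compile(r"^\s*([A-Za-z0-9_.\-]+)")


def pin_requirements(text: str, pins: dict) -> str:
    """Return `text` with each listed package rewritten to its pinned form.

    Names are matched case-insensitively. Comments, blank lines and packages
    not in `pins` are kept unchanged.
    """
    lookup = {name.lower(): (name, version) for name, version in pins.items()}
    out = []
    for line in text.splitlines():
        match = _NAME_RE.match(line)
        key = match.group(1).lower() if match else None
        if key in lookup:
            name, version = lookup[key]
            out.append(name if version is None else f"{name}=={version}")
        else:
            out.append(line)
    return "\n".join(out) + "\n"
```

Usage, with `pathlib.Path`, from the repository root: `Path("requirements.txt").write_text(pin_requirements(Path("requirements.txt").read_text(), PINS))`, then run `pip3 install -r ./requirements.txt` as usual.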

What happens when actually running it

The output looks like the following, and training never really gets past epoch 0:

```
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:516: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint8 = np.dtype([("qint8", np.int8, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:517: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint8 = np.dtype([("quint8", np.uint8, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:518: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint16 = np.dtype([("qint16", np.int16, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:519: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_quint16 = np.dtype([("quint16", np.uint16, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:520: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
_np_qint32 = np.dtype([("qint32", np.int32, 1)])
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:525: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.
np_resource = np.dtype([("resource", np.ubyte, 1)])
/usr/local/lib/python3.6/dist-packages/h5py/__init__.py:40: UserWarning: h5py is running against HDF5 1.10.0 when it was built against 1.10.4, this may cause problems
'{0}.{1}.{2}'.format(*version.hdf5_built_version_tuple)
WARNING:tensorflow:
The TensorFlow contrib module will not be included in TensorFlow 2.0.
For more information, please see:
* https://github.com/tensorflow/community/blob/master/rfcs/20180907-contrib-sunset.md
* https://github.com/tensorflow/addons
* https://github.com/tensorflow/io (for I/O related ops)
If you depend on functionality not listed there, please file an issue.
FP16 Run: False
Dynamic Loss Scaling: True
Distributed Run: False
cuDNN Enabled: True
cuDNN Benchmark: False
/root/.local/lib/python3.6/site-packages/torch/backends/cudnn/__init__.py:107: UserWarning: PyTorch was compiled without cuDNN support. To use cuDNN, rebuild PyTorch making sure the library is visible to the build system.
"PyTorch was compiled without cuDNN support. To use cuDNN, rebuild "
Epoch: 0
```

The image with PyTorch preinstalled does not seem to work properly.

Not only does the epoch not advance, but no GPU memory seemed to be allocated either.
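A quick check can tell whether PyTorch in the container actually sees the Radeon VII. That separates "training is slow" from "training is not on the GPU at all". This is a sketch; I did not run it as part of this article. It assumes that ROCm builds of PyTorch expose the GPU through the `torch.cuda` API and set `torch.version.hip`. It still returns a result if `torch` is not installed.

```python
def gpu_report() -> dict:
    """Collect basic facts about what PyTorch can see. Works without torch installed."""
    try:
        import torch
    except ImportError:
        return {"torch_available": False}
    report = {
        "torch_available": True,
        "torch_version": torch.__version__,
        # Assumption: ROCm builds set torch.version.hip; getattr guards builds without it.
        "hip_version": getattr(torch.version, "hip", None),
        "cuda_version": torch.version.cuda,
        "gpu_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
        "cudnn_available": torch.backends.cudnn.is_available(),
    }
    if report["gpu_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
        # Allocate a small tensor to confirm that memory really lands on the GPU.
        x = torch.ones(1024, 1024, device="cuda")
        report["allocated_bytes"] = torch.cuda.memory_allocated()
        del x
    return report


if __name__ == "__main__":
    for key, value in gpu_report().items():
        print(f"{key}: {value}")
```

Run it inside the same container. Check two things: whether `gpu_available` is `True` and whether `allocated_bytes` is non-zero.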

Errors encountered during setup

```
/root/.local/lib/python3.6/site-packages/torch/backends/cudnn/__init__.py:107: UserWarning: PyTorch was compiled without cuDNN support. To use cuDNN, rebuild PyTorch making sure the library is visible to the build system.
"PyTorch was compiled without cuDNN support. To use cuDNN, rebuild "
Epoch: 0
ERROR: Unexpected bus error encountered in worker. This might be caused by insufficient shared memory (shm).
Traceback (most recent call last):
File "train.py", line 290, in <module>
args.warm_start, args.n_gpus, args.rank, args.group_name, hparams)
File "train.py", line 214, in train
x, y = model.parse_batch(batch)
File "/tacotron2/model.py", line 478, in parse_batch
max_len = torch.max(input_lengths.data).item()
File "/root/.local/lib/python3.6/site-packages/torch/utils/data/_utils/signal_handling.py", line 66, in handler
_error_if_any_worker_fails()
RuntimeError: DataLoader worker (pid 1532) is killed by signal: Bus error.
```

I got the error above. It reports a bus error, and the cause appears to be a resource limit on the Docker container:

https://github.com/pytorch/pytorch/issues/2244

https://qiita.com/windyakin/items/00b085902547570eebc6

> Okay. I think I solved it. Looks like the shared memory of the docker container wasn't set high enough. Setting a higher amount by adding --shm-size 8G to the docker run command seems to be the trick as mentioned here. Let me fully test it, if solved I'll close issue.

That seems to be the cause. Passing something like `--shm-size 8G` to `docker run` looks like it should help, so I added it. The `docker run` command above already includes this option.
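You can check how large `/dev/shm` actually is from inside the container before starting training. Docker's default is 64 MB, which DataLoader worker processes can easily exhaust. The sketch below uses only the standard library. `shm_is_sufficient` is a hypothetical helper. The 8 GiB threshold simply mirrors the `--shm-size 8G` option; it is an assumption, not a measured requirement.

```python
import shutil

# Threshold mirroring `--shm-size 8G` (Docker interprets G as GiB).
DEFAULT_REQUIRED = 8 * 1024 ** 3


def shm_is_sufficient(path: str = "/dev/shm", required_bytes: int = DEFAULT_REQUIRED) -> bool:
    """Return True if the filesystem mounted at `path` is at least `required_bytes` in size."""
    total, _used, _free = shutil.disk_usage(path)
    return total >= required_bytes
```

If `shm_is_sufficient()` returns `False` inside the container, the `--shm-size` option most likely did not take effect.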

Trying inference

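Training failed, but let's try inference.

Rather than reusing the previous Docker container, this time I start a separate one and verify from scratch.

The inference demo uses Jupyter Notebook, so the `docker run` command needs some adjustments. I referred to this article:

https://qiita.com/loftkun/items/8de5cf723eee708ac97e

Launching a Jupyter Notebook Docker container

It would have been nice if a rocm-pytorch Docker image with Notebook preinstalled existed...

This time I also wrote a Dockerfile: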

```dockerfile
FROM rocm/pytorch:rocm2.7_ubuntu18.04_py3.6_pytorch
RUN apt-get update -y
RUN pip3 install numpy==1.17.2 scipy matplotlib librosa==0.6.0 tensorflow==1.14.0 tensorboardX==1.9 inflect==0.2.5 Unidecode==1.0.22 jupyter
RUN git clone https://github.com/NVIDIA/tacotron2.git
WORKDIR /tacotron2
RUN git submodule init; git submodule update
RUN wget https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2
RUN tar xf ./LJSpeech-1.1.tar.bz2
RUN sed -i -- 's,DUMMY,/tacotron2/LJSpeech-1.1/wavs,g' filelists/*.txt
```

The image can be built with:

```bash
sudo docker build -t tacotron:rocm_pytorch .
```

For now, the Dockerfile covers the tutorial's steps up to "Setup".

On the host machine, create a directory to hold the inference models:

```bash
cd ~
mkdir pt_data
```

https://drive.google.com/file/d/1WsibBTsuRg_SF2Z6L6NFRTT-NjEy1oTx/view

https://drive.google.com/file/d/1c5ZTuT7J08wLUoVZ2KkUs_VdZuJ86ZqA/view

Download these and put them in pt_data. Then start the container:

```bash
sudo docker run --name pytorch_notebook -it -v /home/${USER}/pt_data:/pt_data --privileged --shm-size 8G -p 8001:8000 --rm --device=/dev/kfd --device=/dev/dri --group-add video tacotron:rocm_pytorch
```
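This command is long and easy to mistype: a duplicated flag or a mismatched image tag is enough to break it. Assembling it in one place can help. The following is a hypothetical helper, `rocm_docker_run_args`, that builds the same arguments as the command above. The option order differs, which `docker run` accepts as long as the image name comes last.

```python
import os
import shlex


def rocm_docker_run_args(image, name, shm_size="8G", ports=None, volumes=None):
    """Build a `docker run` argument list with the ROCm options used in this article.

    ports:   {host_port: container_port}
    volumes: {host_dir: container_dir}
    """
    args = ["sudo", "docker", "run", "--name", name, "-it", "--rm", "--privileged",
            "--shm-size", shm_size,
            # Expose the ROCm kernel driver (/dev/kfd) and DRI render nodes, plus the video group.
            "--device=/dev/kfd", "--device=/dev/dri", "--group-add", "video"]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]
    for host_dir, container_dir in (volumes or {}).items():
        args += ["-v", f"{host_dir}:{container_dir}"]
    args.append(image)  # the image must come after all options
    return args


if __name__ == "__main__":
    cmd = rocm_docker_run_args(
        "tacotron:rocm_pytorch", "pytorch_notebook",
        ports={8001: 8000},
        volumes={os.path.expanduser("~/pt_data"): "/pt_data"},
    )
    print(shlex.join(cmd))  # or pass `cmd` to subprocess.run(cmd, check=True)
```

Printing the command with `shlex.join` first lets you eyeball it before actually running it.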

Once inside the container, copy the inference models:

```bash
cp ../pt_data/tacotron2_statedict.pt ./
cp ../pt_data/waveglow_256channels.pt ./
```

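Start the notebook server:

```bash
jupyter notebook --port 8000 --ip=0.0.0.0 --allow-root
```

Then open the notebook in a browser:

http://127.0.0.1:8001/?token={token}

Jupyter prints a URL containing the token; use it on your local machine.

The port number in that URL is 8000, so change it to 8001. This is because `docker run` forwards host port 8001 to container port 8000.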
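The port fix-up can be written as a small function using only `urllib.parse`. `to_host_url` is a hypothetical name. The sketch assumes the host-side port is the `8001` from the port mapping and that you open the notebook on the Docker host itself (`127.0.0.1`).

```python
from urllib.parse import urlsplit, urlunsplit


def to_host_url(container_url: str, host_port: int, host: str = "127.0.0.1") -> str:
    """Rewrite a Jupyter URL printed inside the container so it works from the host.

    Keeps the scheme, path and ?token=... query, and replaces host:port with
    the host side of the `-p host_port:container_port` mapping.
    """
    parts = urlsplit(container_url)
    return urlunsplit((parts.scheme, f"{host}:{host_port}", parts.path, parts.query, parts.fragment))
```

For example, `to_host_url("http://127.0.0.1:8000/?token=abc123", 8001)` gives `http://127.0.0.1:8001/?token=abc123`.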

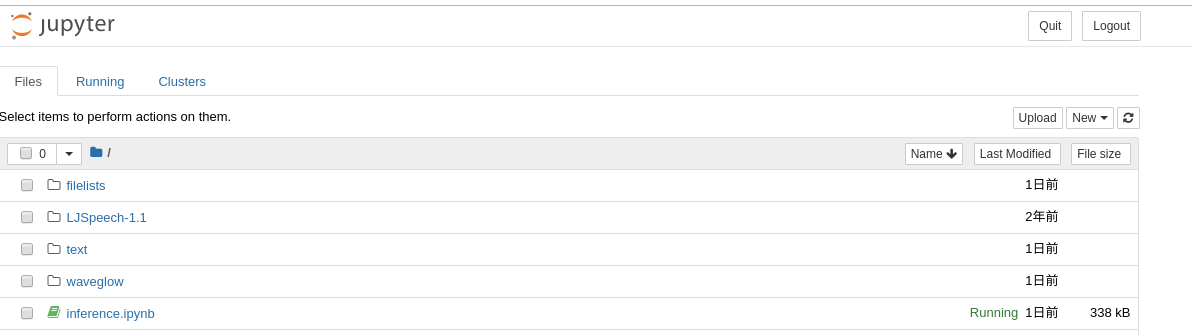

Load inference.ipynb.

When running it, you may hit the following error:

https://github.com/NVIDIA/tacotron2/issues/227

```
---------------------------------------------------------------------------
ModuleNotFoundError Traceback (most recent call last)
<ipython-input-1-1e40ea2a3544> in <module>
16 from train import load_model
17 from text import text_to_sequence
---> 18 from denoiser import Denoiser
ModuleNotFoundError: No module named 'denoiser'
```

Updating the waveglow submodule to the latest master fixes this:

```bash
cd ./waveglow
git pull origin master
```

After doing this, restart the notebook.
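You can confirm that the pull actually brought in `denoiser.py`. Check whether a `denoiser` module is importable from the `waveglow` directory alone. Below is a minimal sketch. `module_in_dir` is a hypothetical helper built on `importlib.machinery.PathFinder`, which searches only the directories it is given rather than all of `sys.path`.

```python
import importlib
from importlib.machinery import PathFinder


def module_in_dir(name: str, directory: str) -> bool:
    """Return True if module `name` can be found in `directory` (ignoring the rest of sys.path)."""
    importlib.invalidate_caches()  # make sure newly pulled files are seen
    return PathFinder.find_spec(name, [directory]) is not None
```

Run from `/tacotron2`, `module_in_dir("denoiser", "waveglow")` should return `True` after the pull. `False` would suggest the submodule is still at the old commit.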

Then another error occurs, this time at the "Load WaveGlow for mel2audio synthesis and denoiser" step:

```
---------------------------------------------------------------------------
AttributeError Traceback (most recent call last)
<ipython-input-5-35c67544e07f> in <module>
4 for k in waveglow.convinv:
5 k.float()
----> 6 denoiser = Denoiser(waveglow)
/tacotron2/waveglow/denoiser.py in __init__(self, waveglow, filter_length, n_overlap, win_length, mode)
28
29 with torch.no_grad():
---> 30 bias_audio = waveglow.infer(mel_input, sigma=0.0).float()
31 bias_spec, _ = self.stft.transform(bias_audio)
32
/tacotron2/waveglow/glow.py in infer(self, spect, sigma)
274 audio_1 = audio[:,n_half:,:]
275
--> 276 output = self.WN[k]((audio_0, spect))
277
278 s = output[:, n_half:, :]
~/.local/lib/python3.6/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
543 result = self._slow_forward(*input, **kwargs)
544 else:
--> 545 result = self.forward(*input, **kwargs)
546 for hook in self._forward_hooks.values():
547 hook_result = hook(self, input, result)
/tacotron2/waveglow/glow.py in forward(self, forward_input)
157 n_channels_tensor = torch.IntTensor([self.n_channels])
158
--> 159 spect = self.cond_layer(spect)
160
161 for i in range(self.n_layers):
~/.local/lib/python3.6/site-packages/torch/nn/modules/module.py in __getattr__(self, name)
587 return modules[name]
588 raise AttributeError("'{}' object has no attribute '{}'".format(
--> 589 type(self).__name__, name))
590
591 def __setattr__(self, name, value):
AttributeError: 'WN' object has no attribute 'cond_layer'
```

https://github.com/NVIDIA/waveglow/issues/154

This also appears to be a known error:

> you can use convert_model.py to convert the pre-trained model to the new one. It solves the issue.

In other words, the pretrained model needs to be converted to the new format.

```bash
cd ./waveglow
python convert_model.py ../waveglow_256channels.pt
cd ..
```
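My understanding, which the issue does not spell out, is that `waveglow_256channels.pt` stores a pickled `WN` module from an older version of `glow.py`. The updated `glow.py` pulled earlier instead expects a single `self.cond_layer`, and `convert_model.py` bridges that mismatch. As a rough check of which layout your checked-out code expects, the sketch below parses the source with `ast`. It looks for an assignment to `self.cond_layer` inside the `WN` class. `class_assigns_self_attr` is a hypothetical helper. It inspects code only and says nothing about the checkpoint itself.

```python
import ast


def class_assigns_self_attr(source: str, class_name: str, attr: str) -> bool:
    """Return True if any method of `class_name` in `source` assigns `self.<attr>`."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, ast.ClassDef) and node.name == class_name:
            for sub in ast.walk(node):
                # Match targets like `self.cond_layer = ...` (Attribute in Store context).
                if (isinstance(sub, ast.Attribute) and sub.attr == attr
                        and isinstance(sub.ctx, ast.Store)
                        and isinstance(sub.value, ast.Name) and sub.value.id == "self"):
                    return True
    return False
```

Example: `class_assigns_self_attr(open("waveglow/glow.py").read(), "WN", "cond_layer")`. A `True` result means the code expects the new layout, so an unconverted old checkpoint would hit the `AttributeError` above.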

However, even after this I got a RuntimeError:

```
---------------------------------------------------------------------------
RuntimeError Traceback (most recent call last)
<ipython-input-6-35c67544e07f> in <module>
4 for k in waveglow.convinv:
5 k.float()
----> 6 denoiser = Denoiser(waveglow)
/tacotron2/waveglow/denoiser.py in __init__(self, waveglow, filter_length, n_overlap, win_length, mode)
28
29 with torch.no_grad():
---> 30 bias_audio = waveglow.infer(mel_input, sigma=0.0).float()
31 bias_spec, _ = self.stft.transform(bias_audio)
32
/tacotron2/waveglow/glow.py in infer(self, spect, sigma)
274 audio_1 = audio[:,n_half:,:]
275
--> 276 output = self.WN[k]((audio_0, spect))
277
278 s = output[:, n_half:, :]
~/.local/lib/python3.6/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
543 result = self._slow_forward(*input, **kwargs)
544 else:
--> 545 result = self.forward(*input, **kwargs)
546 for hook in self._forward_hooks.values():
547 hook_result = hook(self, input, result)
/tacotron2/waveglow/glow.py in forward(self, forward_input)
164 self.in_layers[i](audio),
165 spect[:,spect_offset:spect_offset+2*self.n_channels,:],
--> 166 n_channels_tensor)
167
168 res_skip_acts = self.res_skip_layers[i](acts)
RuntimeError:
The above operation failed in interpreter, with the following stack trace:
```

A runtime error occurred, and with my skills I could not find a way to resolve it.

PyTorch on ROCm still seems to have rough edges.