Development environment

- Windows 10 (64-bit, 16 GB RAM, i7-7700HQ)

- Open3D v0.4.0

- Python 3.6.5

Installing Open3D

With Python 3.6.x, you can install it with the command below (create a py36 environment in Anaconda first).

You can check which Python versions are supported here.

pip install open3d-python

Open3D samples

Download Open3D v0.4.0. The sample programs are under \Open3D-0.4.0\examples\Python, so let's try running them.

Advanced

camera_trajectory.py

(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python camera_trajectory.py

Testing camera in open3d ...

[[525. 0. 319.5]

[ 0. 525. 239.5]

[ 0. 0. 1. ]]

PinholeCameraIntrinsic with width = -1 and height = -1.

Access intrinsics with intrinsic_matrix.

PinholeCameraIntrinsic with width = 640 and height = 480.

Access intrinsics with intrinsic_matrix.

[[525. 0. 320.]

[ 0. 525. 240.]

[ 0. 0. 1.]]

PinholeCameraIntrinsic with width = 640 and height = 480.

Access intrinsics with intrinsic_matrix.

[[525. 0. 320.]

[ 0. 525. 240.]

[ 0. 0. 1.]]

Read a trajectory and combine all the RGB-D images.

PinholeCameraTrajectory class.

Access its data via intrinsic and extrinsic.

std::vector<Eigen::Matrix4d> with 5 elements.

Use numpy.asarray() to access data.

[[[-2.73959219e-01 8.67361738e-19 -9.61741310e-01 1.96003743e+00]

[ 2.18193459e-02 -9.99742609e-01 -6.21540418e-03 5.92858340e-01]

[-9.61493766e-01 -2.26873336e-02 2.73888704e-01 -8.68039442e-01]

[ 0.00000000e+00 0.00000000e+00 -0.00000000e+00 1.00000000e+00]]

[[-2.69333111e-01 -3.46944695e-18 -9.63047079e-01 1.96388301e+00]

[ 3.32939238e-02 -9.99402229e-01 -9.31123335e-03 6.26082021e-01]

[-9.62471397e-01 -3.45714395e-02 2.69172111e-01 -8.50365753e-01]

[ 0.00000000e+00 0.00000000e+00 -0.00000000e+00 1.00000000e+00]]

[[-2.65351855e-01 -3.46944695e-18 -9.64151644e-01 1.96642986e+00]

[ 4.52270814e-02 -9.98899182e-01 -1.24473054e-02 6.60222975e-01]

[-9.63090289e-01 -4.69086805e-02 2.65059750e-01 -8.32396694e-01]

[ 0.00000000e+00 0.00000000e+00 -0.00000000e+00 1.00000000e+00]]

[[-2.61914323e-01 -1.73472348e-18 -9.65091129e-01 1.96788675e+00]

[ 5.75424277e-02 -9.98220917e-01 -1.56163346e-02 6.95070189e-01]

[-9.63374152e-01 -5.96238282e-02 2.61448356e-01 -8.14014971e-01]

[ 0.00000000e+00 0.00000000e+00 -0.00000000e+00 1.00000000e+00]]

[[-2.58918642e-01 -0.00000000e+00 -9.65899134e-01 1.96846002e+00]

[ 7.01643085e-02 -9.97358120e-01 -1.88082242e-02 7.30411488e-01]

[-9.63347345e-01 -7.26414447e-02 2.58234610e-01 -7.95113315e-01]

[ 0.00000000e+00 0.00000000e+00 -0.00000000e+00 1.00000000e+00]]]
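Each element of the trajectory printed above is a 4x4 extrinsic matrix [R | t; 0 0 0 1]. The following sketch is not part of the sample. It checks that the rotation block of the first logged matrix is orthonormal with determinant +1 and that the rigid inverse [R^T | -R^T t] really inverts it. The helper names are hypothetical.

```python
# First extrinsic matrix copied from the camera_trajectory.py output above
T = [
    [-2.73959219e-01, 8.67361738e-19, -9.61741310e-01, 1.96003743e+00],
    [2.18193459e-02, -9.99742609e-01, -6.21540418e-03, 5.92858340e-01],
    [-9.61493766e-01, -2.26873336e-02, 2.73888704e-01, -8.68039442e-01],
    [0.0, 0.0, 0.0, 1.0],
]

def is_rigid(T, tol=1e-6):
    """True if the 3x3 block is orthonormal, det(R) = +1 and the last row is 0 0 0 1."""
    R = [row[:3] for row in T[:3]]
    for i in range(3):
        for j in range(3):
            dot = sum(R[i][k] * R[j][k] for k in range(3))
            if abs(dot - (1.0 if i == j else 0.0)) > tol:
                return False
    det = (R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
           - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
           + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]))
    return abs(det - 1.0) < tol and T[3] == [0.0, 0.0, 0.0, 1.0]

def invert_rigid(T):
    """Inverse of [R | t] is [R^T | -R^T t]; no general matrix inversion needed."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def matmul4(A, B):
    """Plain 4x4 matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
```

Because the inverse only needs a transpose and one matrix-vector product, it is cheaper and numerically safer than a general 4x4 inversion.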

colored_pointcloud_registration.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python colored_pointcloud_registration.py

1. Load two point clouds and show initial pose

Reading PLY: [========================================] 100%

Reading PLY: [========================================] 100%

2. Point-to-plane ICP registration is applied on original point

clouds to refine the alignment. Distance threshold 0.02.

RegistrationResult with fitness = 0.974582, inlier_rmse = 0.004220, and correspondence_set size of 62729

Access transformation to get result.

3. Colored point cloud registration

[50, 0.04, 0]

3-1. Downsample with a voxel size 0.04

3-2. Estimate normal.

3-3. Applying colored point cloud registration

RegistrationResult with fitness = 0.876367, inlier_rmse = 0.014578, and correspondence_set size of 2084

Access transformation to get result.

[30, 0.02, 1]

3-1. Downsample with a voxel size 0.02

3-2. Estimate normal.

3-3. Applying colored point cloud registration

RegistrationResult with fitness = 0.866184, inlier_rmse = 0.008760, and correspondence_set size of 7541

Access transformation to get result.

[14, 0.01, 2]

3-1. Downsample with a voxel size 0.01

3-2. Estimate normal.

3-3. Applying colored point cloud registration

RegistrationResult with fitness = 0.843719, inlier_rmse = 0.004851, and correspondence_set size of 24737

Access transformation to get result.
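Each stage of the multi-scale loop above first downsamples the clouds at the voxel size in its `[max_iter, radius, scale]` triple: [50, 0.04, 0], then [30, 0.02, 1], then [14, 0.01, 2]. Below is a plain-Python sketch of voxel-grid downsampling that replaces the points in each occupied voxel by their centroid. This is how I understand Open3D's `voxel_down_sample` to behave. Two assumptions apply: voxel indices are taken from the origin here, which may not match the library's grid alignment, and the colored ICP step itself is omitted. The function names are hypothetical.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same voxel by their centroid."""
    buckets = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = buckets[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]

# Coarse-to-fine schedule taken from the log: (max_iter, voxel_radius, scale)
SCHEDULE = [(50, 0.04, 0), (30, 0.02, 1), (14, 0.01, 2)]

def coarse_to_fine_sizes(points):
    """Return (scale, downsampled point count) for each stage of the schedule."""
    counts = []
    for max_iter, radius, scale in SCHEDULE:
        down = voxel_downsample(points, radius)
        # A real pipeline would estimate normals here and run colored ICP for
        # max_iter iterations, seeded with the transform from the previous scale.
        counts.append((scale, len(down)))
    return counts
```

The coarse stages are cheap and tolerate a poor initial pose. The fine stages then refine the alignment using far more correspondences, which matches the growing correspondence_set sizes in the log (2084, 7541, 24737).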

color_map_optimization.py

1. Download the fountain dataset.

2. Set [path_to_fountain_dataset] to the directory path of the downloaded fountain dataset and run the script.


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python color_map_optimization.py

Reading PLY: [========================================] 100%

Read TriangleMesh: 1033745 triangles and 536872 vertices.

[ColorMapOptimization]

[ColorMapOptimization] :: MakingMasks

[MakeDepthMasks] Image 0/33

...

[MakeDepthMasks] Image 32/33

[ColorMapOptimization] :: VisibilityCheck

[cam 25] 14.76181 percents are visible

...

[cam 4] 38.92045 percents are visible

[ColorMapOptimization] :: Rigid Optimization

GLFW init.

Add geometry and update bounding box to [(0.0015, 0.0015, 0.4771) - (1.4985, 1.4985, 1.4846)]

Global colormap init.

[Visualizer] Screen capture to ScreenCapture_2019-01-16-06-25-11.png

[Visualizer] Screen camera capture to ScreenCamera_2019-01-16-06-25-11.json

Writing PLY: [========================================] 100%

Write TriangleMesh: 1033745 triangles and 536872 vertices.

[ColorMapOptimization]

[ColorMapOptimization] :: MakingMasks

[MakeDepthMasks] Image 0/33

...

[MakeDepthMasks] Image 32/33

[ColorMapOptimization] :: VisibilityCheck

[cam 25] 14.76181 percents are visible

...

[cam 4] 38.92045 percents are visible

[ColorMapOptimization] :: Non-Rigid Optimization

[Iteration 0001] Residual error : 21639.276499, reg : 0.000000

...

[Iteration 0300] Residual error : 5585.115116, reg : 2747.785043

Add geometry and update bounding box to [(0.0015, 0.0015, 0.4771) - (1.4985, 1.4985, 1.4846)]

[Visualizer] Screen capture to ScreenCapture_2019-01-16-06-32-08.png

[Visualizer] Screen camera capture to ScreenCamera_2019-01-16-06-32-08.json

Writing PLY: [========================================] 100%

Write TriangleMesh: 1033745 triangles and 536872 vertices.

Global colormap destruct.

GLFW destruct.

customized_visualization.py

(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python customized_visualization.py

Reading PLY: [========================================] 100%

1. Customized visualization to mimic DrawGeometry

2. Changing field of view

Field of view (before changing) 60.00

Field of view (after changing) 90.00

Field of view (before changing) 60.00

Field of view (after changing) 5.00

[ViewControl] ConvertToPinholeCameraParameters() failed because orthogonal view cannot be translated to a pinhole camera.

3. Customized visualization with a rotating view

4. Customized visualization showing normal rendering

5. Customized visualization with key press callbacks

Press 'K' to change background color to black

Press 'R' to load a customized render option, showing normals

Press ',' to capture the depth buffer and show it

Press '.' to capture the screen and show it

6. Customized visualization playing a camera trajectory

[ViewControl] ConvertFromPinholeCameraParameters() failed because window height and width do not match.

Capture image 00000

...

fast_global_registration.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python fast_global_registration.py

:: Load two point clouds and disturb initial pose.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: RANSAC registration on downsampled point clouds.

Since the downsampling voxel size is 0.050,

we use a liberal distance threshold 0.075.

RegistrationResult with fitness = 0.676891, inlier_rmse = 0.032296, and correspondence_set size of 3222

Access transformation to get result.

Global registration took 0.464 sec.

:: Apply fast global registration with distance threshold 0.025

Fast global registration took 0.218 sec.
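The radii and thresholds in this log all scale with the voxel size. With a voxel of 0.050, the log shows a normal radius of 0.100 (2x), an FPFH radius of 0.250 (5x), a RANSAC threshold of 0.075 (1.5x) and an FGR threshold of 0.025 (0.5x). The hypothetical helper below derives all four from one voxel size, using only the ratios read off this output.

```python
def registration_params(voxel_size):
    """Derive the radii/thresholds printed by the sample from a single voxel size.

    The ratios are inferred from the log (voxel 0.050 -> 0.100, 0.250, 0.075, 0.025).
    """
    return {
        "normal_radius": voxel_size * 2.0,    # "Estimate normal with search radius"
        "fpfh_radius": voxel_size * 5.0,      # "Compute FPFH feature with search radius"
        "ransac_distance": voxel_size * 1.5,  # "liberal distance threshold"
        "fgr_distance": voxel_size * 0.5,     # fast global registration threshold
    }

for name, value in registration_params(0.05).items():
    print("{}: {:.3f}".format(name, value))
```

Keeping the ratios in one place means changing the voxel size rescales every search radius consistently. The benchmark logs further down print the same four values.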

global_registration.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python global_registration.py

:: Load two point clouds and disturb initial pose.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: RANSAC registration on downsampled point clouds.

Since the downsampling voxel size is 0.050,

we use a liberal distance threshold 0.075.

RegistrationResult with fitness = 0.676471, inlier_rmse = 0.028703, and correspondence_set size of 3220

Access transformation to get result.

:: Point-to-plane ICP registration is applied on original point

clouds to refine the alignment. This time we use a strict

distance threshold 0.020.

RegistrationResult with fitness = 0.621033, inlier_rmse = 0.006565, and correspondence_set size of 123483

Access transformation to get result.
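Each `RegistrationResult` reports `fitness` and `inlier_rmse`. As I understand Open3D's definitions:

- fitness is the number of inlier correspondences divided by the number of source points. A correspondence is a source point whose nearest target point lies within the distance threshold.
- inlier_rmse is the root mean square of those inlier distances.

The final ICP line is consistent with this, assuming the source is the 198835-point cloud that also appears in the multiway_registration.py log: 123483 / 198835 ≈ 0.621033. Below is a brute-force plain-Python sketch of the evaluation. The function name is hypothetical.

```python
import math

def evaluate_registration(source, target, max_distance):
    """Return (fitness, inlier_rmse, correspondences) for already-aligned clouds.

    Brute-force nearest neighbours: fine for illustration, far too slow for real clouds.
    """
    correspondences = []
    sq_sum = 0.0
    for i, p in enumerate(source):
        best_j, best_d2 = None, float("inf")
        for j, q in enumerate(target):
            d2 = sum((a - b) ** 2 for a, b in zip(p, q))
            if d2 < best_d2:
                best_j, best_d2 = j, d2
        if best_j is not None and best_d2 <= max_distance ** 2:
            correspondences.append((i, best_j))
            sq_sum += best_d2
    if not correspondences:
        return 0.0, 0.0, []
    fitness = len(correspondences) / len(source)
    inlier_rmse = math.sqrt(sq_sum / len(correspondences))
    return fitness, inlier_rmse, correspondences
```

This explains why fitness can drop while inlier_rmse improves, as in the RANSAC-then-ICP lines above. The strict 0.020 threshold rejects more pairs but keeps only tight ones.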

headless_rendering.py

(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python headless_rendering.py

Reading PLY: [========================================] 100%

Customized visualization playing a camera trajectory. Ctrl+z to terminate

[ViewControl] ConvertFromPinholeCameraParameters() failed because window height and width do not match.

Capture image 00000

...

interactive_visualization.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python interactive_visualization.py

Demo for manual geometry cropping

1) Press 'Y' twice to align geometry with negative direction of y-axis

2) Press 'K' to lock screen and to switch to selection mode

3) Drag for rectangle selection,

or use ctrl + left click for polygon selection

4) Press 'C' to get a selected geometry and to save it

5) Press 'F' to switch to freeview mode

Demo for manual ICP

Visualization of two point clouds before manual alignment

1) Please pick at least three correspondences using [shift + left click]

Press [shift + right click] to undo point picking

2) Afther picking points, press q for close the window

1) Please pick at least three correspondences using [shift + left click]

Press [shift + right click] to undo point picking

2) Afther picking points, press q for close the window

Traceback (most recent call last):

File "interactive_visualization.py", line 75, in <module>

demo_manual_registration()

File "interactive_visualization.py", line 53, in demo_manual_registration

assert(len(picked_id_source)>=3 and len(picked_id_target)>=3)

AssertionError
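The manual ICP demo stopped at the assertion on line 53. That assertion requires at least three picked points in both the source and the target window, so fewer were picked before the windows were closed. Three is the minimum because a rigid transform is only pinned down by three non-collinear point pairs. The hypothetical pre-check below makes both requirements explicit and reports a reason instead of raising.

```python
def check_picked(picked_source, picked_target, source_points, eps=1e-12):
    """Return None if the picks can define a rigid transform, else a reason string.

    Simplification: collinearity is tested against the line through the first two picks.
    """
    if len(picked_source) < 3 or len(picked_target) < 3:
        return "pick at least three points in each window"
    if len(picked_source) != len(picked_target):
        return "source and target picks must pair up one-to-one"
    a = source_points[picked_source[0]]
    b = source_points[picked_source[1]]
    ab = [b[k] - a[k] for k in range(3)]
    for idx in picked_source[2:]:
        c = source_points[idx]
        ac = [c[k] - a[k] for k in range(3)]
        cross = (ab[1] * ac[2] - ab[2] * ac[1],
                 ab[2] * ac[0] - ab[0] * ac[2],
                 ab[0] * ac[1] - ab[1] * ac[0])
        if sum(v * v for v in cross) > eps:
            return None  # at least one pick lies off the line through the first two
    return "picked source points are collinear"
```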

multiway_registration.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python multiway_registration.py

PCD header indicates 8 fields, 32 bytes per point, and 198835 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 198835 vertices.

Pointcloud down sampled from 198835 points to 27211 points.

PCD header indicates 8 fields, 32 bytes per point, and 137833 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 137833 vertices.

Pointcloud down sampled from 137833 points to 19137 points.

PCD header indicates 8 fields, 32 bytes per point, and 191397 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 191397 vertices.

Pointcloud down sampled from 191397 points to 25269 points.

GLFW init.

Add geometry and update bounding box to [(0.5513, 0.8320, 0.5617) - (3.9485, 2.4249, 2.5522)]

Add geometry and update bounding box to [(0.5513, 0.8320, 0.5617) - (3.9485, 2.6123, 2.5522)]

Add geometry and update bounding box to [(0.0039, 0.8320, 0.4857) - (3.9485, 2.9019, 2.5522)]

Global colormap init.

[Visualizer] Screen capture to ScreenCapture_2019-01-16-06-57-01.png

[Visualizer] Screen camera capture to ScreenCamera_2019-01-16-06-57-01.json

[ViewControl] SetViewPoint() failed because window height and width are not set.

Full registration ...

Apply point-to-plane ICP

ICP Iteration #0: Fitness 0.6258, RMSE 0.1566

Residual : 1.96e-02 (# of elements : 17029)

...

ICP Iteration #5: Fitness 0.6391, RMSE 0.0101

Residual : 3.22e-05 (# of elements : 17390)

Build PoseGraph

Apply point-to-plane ICP

ICP Iteration #0: Fitness 0.5669, RMSE 0.1655

Residual : 1.86e-02 (# of elements : 15427)

...

ICP Iteration #5: Fitness 0.7054, RMSE 0.0104

Residual : 3.25e-05 (# of elements : 19194)

Build PoseGraph

Apply point-to-plane ICP

ICP Iteration #0: Fitness 0.7980, RMSE 0.1231

Residual : 1.25e-02 (# of elements : 15272)

...

ICP Iteration #4: Fitness 0.7594, RMSE 0.0108

Residual : 4.35e-05 (# of elements : 14532)

Build PoseGraph

Optimizing PoseGraph ...

Validating PoseGraph - finished.

[GlobalOptimizationLM] Optimizing PoseGraph having 3 nodes and 3 edges.

Line process weight : 15.342900

[Initial ] residual : 1.068329e+00, lambda : 2.960767e+00

[Iteration 00] residual : 2.144428e-01, valid edges : 1, time : 0.000 sec.

[Iteration 01] residual : 1.535337e-01, valid edges : 1, time : 0.000 sec.

Delta.norm() < 1.000000e-06 * (x.norm() + 1.000000e-06)

[GlobalOptimizationLM] total time : 0.008 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 3 nodes and 3 edges.

Line process weight : 15.342900

[Initial ] residual : 1.535309e-01, lambda : 3.050809e+00

Delta.norm() < 1.000000e-06 * (x.norm() + 1.000000e-06)

[GlobalOptimizationLM] total time : 0.001 sec.

CompensateReferencePoseGraphNode : reference : 0

Transform points and display

[[ 1.00000000e+00 -1.81265051e-19 -1.08420217e-19 1.73472348e-18]

[ 3.54695047e-20 1.00000000e+00 -1.08420217e-19 0.00000000e+00]

[-2.16840434e-19 0.00000000e+00 1.00000000e+00 0.00000000e+00]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

[[ 0.84017249 -0.14644731 0.52217178 0.34783878]

[ 0.00617134 0.96536884 0.26081585 -0.3942583 ]

[-0.54228415 -0.2159078 0.81198012 1.73003584]

[ 0. 0. 0. 1. ]]

[[ 0.9627123 -0.07179198 0.2608274 0.37654711]

[-0.00195415 0.96227383 0.27207581 -0.48957983]

[-0.27052025 -0.26244043 0.92625257 1.29771599]

[ 0. 0. 0. 1. ]]

Add geometry and update bounding box to [(0.5513, 0.8320, 0.5617) - (3.9485, 2.4249, 2.5522)]

Add geometry and update bounding box to [(0.5513, 0.8320, 0.5346) - (4.0783, 2.4252, 2.5522)]

Add geometry and update bounding box to [(0.5012, 0.8320, 0.5346) - (4.0783, 2.4442, 2.5522)]

[Visualizer] Screen capture to ScreenCapture_2019-01-16-06-57-33.png

[Visualizer] Screen camera capture to ScreenCamera_2019-01-16-06-57-33.json

Make a combined point cloud

PCD header indicates 8 fields, 32 bytes per point, and 198835 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 198835 vertices.

Pointcloud down sampled from 198835 points to 27211 points.

PCD header indicates 8 fields, 32 bytes per point, and 137833 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 137833 vertices.

Pointcloud down sampled from 137833 points to 19137 points.

PCD header indicates 8 fields, 32 bytes per point, and 191397 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 191397 vertices.

Pointcloud down sampled from 191397 points to 25269 points.

Pointcloud down sampled from 71617 points to 33315 points.

Write PointCloud: 33315 vertices.

Add geometry and update bounding box to [(0.5012, 0.8335, 0.5346) - (4.0761, 2.4441, 2.5522)]

[Visualizer] Screen capture to ScreenCapture_2019-01-16-06-57-52.png

[Visualizer] Screen camera capture to ScreenCamera_2019-01-16-06-57-52.json

Global colormap destruct.

GLFW destruct.

non_blocking_visualization.py

non_blocking_visualization test #Open3D #Python pic.twitter.com/d1EjFQnWpj

藤本賢志 (ガチ本) (@sotongshi), January 15, 2019

(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python non_blocking_visualization.py

PCD header indicates 8 fields, 32 bytes per point, and 198835 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 198835 vertices.

PCD header indicates 8 fields, 32 bytes per point, and 137833 points in total.

x, F, 4, 1, 0

y, F, 4, 1, 4

z, F, 4, 1, 8

rgb, F, 4, 1, 12

normal_x, F, 4, 1, 16

normal_y, F, 4, 1, 20

normal_z, F, 4, 1, 24

curvature, F, 4, 1, 28

Compression method is 1.

Points: yes; normals: yes; colors: yes

[Purge] 0 nan points have been removed.

Read PointCloud: 137833 vertices.

Pointcloud down sampled from 198835 points to 27211 points.

Pointcloud down sampled from 137833 points to 19137 points.

GLFW init.

Add geometry and update bounding box to [(-0.8080, -2.7008, -2.0740) - (2.7776, -0.8999, -0.4797)]

Add geometry and update bounding box to [(-0.8080, -2.7008, -2.1014) - (3.4258, -0.8999, -0.4797)]

ICP Iteration #0: Fitness 0.0892, RMSE 0.0302

Residual : 7.28e-04 (# of elements : 2428)

Global colormap init.

ICP Iteration #0: Fitness 0.0897, RMSE 0.0300

Residual : 7.26e-04 (# of elements : 2441)

...

ICP Iteration #0: Fitness 0.6546, RMSE 0.0118

Residual : 3.98e-05 (# of elements : 17812)

Global colormap destruct.

GLFW destruct.

pointcloud_outlier_removal.py


(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python pointcloud_outlier_removal.py

Load a ply point cloud, print it, and render it

Downsample the point cloud with a voxel of 0.02

Every 5th points are selected

Statistical oulier removal

Showing outliers (red) and inliers (gray):

Radius oulier removal

Showing outliers (red) and inliers (gray):

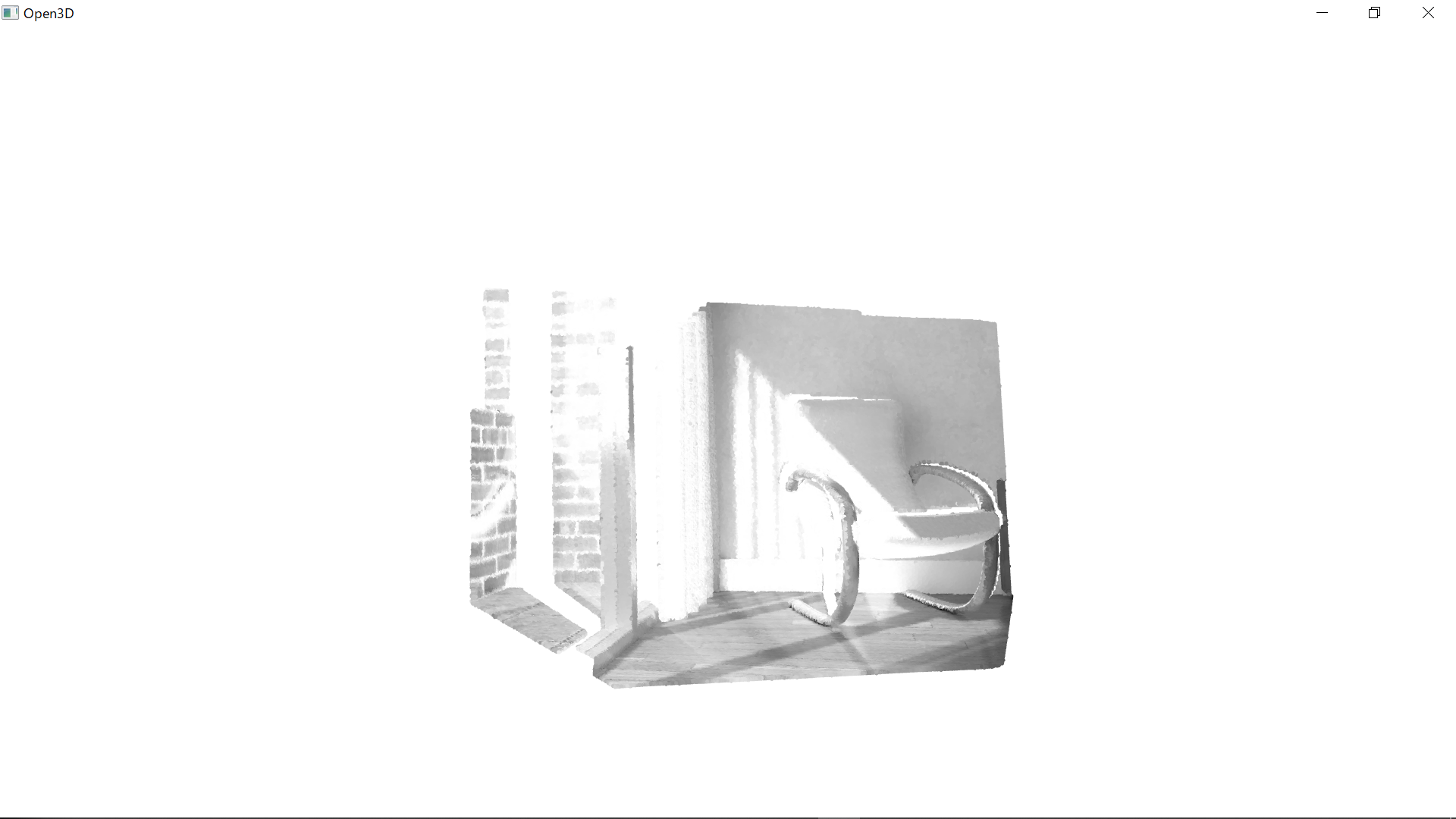
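Both filters can be described in a few lines. Below is a brute-force plain-Python version of the two ideas as I understand them:

- Statistical removal drops points whose mean distance to their k nearest neighbours exceeds the global mean by more than `std_ratio` standard deviations.
- Radius removal drops points with fewer than `nb_points` neighbours inside a sphere of the given radius.

The parameter names mirror Open3D's `statistical_outlier_removal(nb_neighbors, std_ratio)` and `radius_outlier_removal(nb_points, radius)`, but this is not the library's implementation. One example of a possible difference: this sketch does not count a point as its own neighbour, and the library's counting convention may differ.

```python
import math

def _dist(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def statistical_inliers(points, nb_neighbors, std_ratio):
    """Indices of points whose mean k-NN distance is at most mean + std_ratio * std."""
    mean_d = []
    for i, p in enumerate(points):
        d = sorted(_dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = d[:nb_neighbors]
        mean_d.append(sum(nearest) / len(nearest))
    mu = sum(mean_d) / len(mean_d)
    sigma = math.sqrt(sum((m - mu) ** 2 for m in mean_d) / len(mean_d))
    limit = mu + std_ratio * sigma
    return [i for i, m in enumerate(mean_d) if m <= limit]

def radius_inliers(points, nb_points, radius):
    """Indices of points with at least nb_points other points within radius."""
    keep = []
    for i, p in enumerate(points):
        count = sum(1 for j, q in enumerate(points) if j != i and _dist(p, q) <= radius)
        if count >= nb_points:
            keep.append(i)
    return keep
```

The statistical filter adapts to the cloud's own density, while the radius filter needs a radius chosen to match the point spacing. Applying both after voxel downsampling, as the sample does, keeps the neighbour searches cheap.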

rgbd_integration.py

(py36) D:\Open3D-0.4.0\examples\Python\Advanced>python rgbd_integration.py

Integrate 0-th image into the volume.

Integrate 1-th image into the volume.

Integrate 2-th image into the volume.

Integrate 3-th image into the volume.

Integrate 4-th image into the volume.

Extract a triangle mesh from the volume and visualize it.
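rgbd_integration.py fuses the five RGB-D frames into a TSDF (truncated signed distance) volume and then extracts a mesh. The per-voxel update, in the Curless-Levoy / KinectFusion style that TSDF volumes follow, is a weighted running average of truncated signed distances. The sketch below is deliberately simplified:

- it works in 1D, along a single camera ray;
- each observation has unit weight;
- colour is ignored;
- the function names are hypothetical.

```python
def integrate_ray(tsdf, weight, voxel_depths, observed_depth, sdf_trunc):
    """Fuse one depth observation into the voxels along one camera ray (in place)."""
    for i, z in enumerate(voxel_depths):
        sdf = observed_depth - z            # positive in front of the surface
        if sdf < -sdf_trunc:
            continue                        # far behind the surface: not observed
        d = min(1.0, sdf / sdf_trunc)       # truncate and normalise to [-1, 1]
        tsdf[i] = (tsdf[i] * weight[i] + d) / (weight[i] + 1.0)
        weight[i] += 1.0

def zero_crossing(tsdf, weight, voxel_depths):
    """Linearly interpolate the surface depth where the fused TSDF changes sign."""
    for i in range(len(tsdf) - 1):
        if weight[i] > 0 and weight[i + 1] > 0 and tsdf[i] > 0 >= tsdf[i + 1]:
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None

# Five noisy observations of a surface at depth 1.0 along one ray
depths = [0.01 * i for i in range(201)]
tsdf = [0.0] * len(depths)
weight = [0.0] * len(depths)
for obs in [1.00, 1.02, 0.98, 1.01, 0.99]:
    integrate_ray(tsdf, weight, depths, obs, 0.04)
surface = zero_crossing(tsdf, weight, depths)
```

The noisy depths average out in the fused field, and the surface is recovered at the zero crossing. Roughly speaking, extracting a triangle mesh from the volume does the 3D equivalent, finding the zero-level surface with marching cubes.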

trajectory_io.py

Defines the camera pose class and the read/write functions for trajectories.
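trajectory_io.py handles the `.log` trajectory files used with the Redwood data. As far as I know, each entry in that format is one line of three integers (metadata) followed by four lines holding the 4x4 pose. Below is an independent plain-Python sketch of reading and writing that layout. The class and function names are illustrative, and the layout itself is an assumption.

```python
import io

class CameraPose:
    """One trajectory entry: three metadata integers and a 4x4 pose matrix."""
    def __init__(self, meta, pose):
        self.metadata = list(meta)
        self.pose = [list(row) for row in pose]

def read_trajectory(f):
    """Parse entries of 1 metadata line + 4 matrix lines from a text stream."""
    lines = [ln for ln in f.read().splitlines() if ln.strip()]
    traj = []
    for start in range(0, len(lines), 5):
        meta = [int(v) for v in lines[start].split()]
        pose = [[float(v) for v in lines[start + r].split()] for r in range(1, 5)]
        traj.append(CameraPose(meta, pose))
    return traj

def write_trajectory(traj, f):
    """Write each entry as a metadata line followed by the four matrix rows."""
    for cam in traj:
        f.write(" ".join(str(v) for v in cam.metadata) + "\n")
        for row in cam.pose:
            f.write(" ".join("{:.8f}".format(v) for v in row) + "\n")

# Round-trip two identity poses through an in-memory file
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
buf = io.StringIO()
write_trajectory([CameraPose([0, 0, 1], identity), CameraPose([1, 1, 2], identity)], buf)
buf.seek(0)
loaded = read_trajectory(buf)
```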

Basic

file_io.py

(py36) D:\Open3D-0.4.0\examples\Python\Basic>python file_io.py

Testing IO for point cloud ...

PointCloud with 113662 points.

Testing IO for meshes ...

Reading PLY: [========================================] 100%

TriangleMesh with 1440 points and 2880 triangles.

Writing PLY: [========================================] 100%

Testing IO for images ...

Image of size 512x512, with 3 channels.

Use numpy.asarray to access buffer data.

icp_registration.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python icp_registration.py

Initial alignment

RegistrationResult with fitness = 0.174723, inlier_rmse = 0.011771, and correspondence_set size of 34741

Access transformation to get result.

Apply point-to-point ICP

RegistrationResult with fitness = 0.372450, inlier_rmse = 0.007760, and correspondence_set size of 74056

Access transformation to get result.

Transformation is:

[[ 0.83924644 0.01006041 -0.54390867 0.64639961]

[-0.15102344 0.96521988 -0.21491604 0.75166079]

[ 0.52191123 0.2616952 0.81146378 -1.50303533]

[ 0. 0. 0. 1. ]]

Apply point-to-plane ICP

RegistrationResult with fitness = 0.620972, inlier_rmse = 0.006581, and correspondence_set size of 123471

Access transformation to get result.

Transformation is:

[[ 0.84023324 0.00618369 -0.54244126 0.64720943]

[-0.14752342 0.96523919 -0.21724508 0.81018928]

[ 0.52132423 0.26174429 0.81182576 -1.48366001]

[ 0. 0. 0. 1. ]]
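Point-to-point ICP alternates two steps. First, it matches each source point to its nearest target point. Second, it solves for the rigid transform that minimises the summed squared distances of those pairs.

To stay dependency-free, the sketch below works in 2D. In 2D the optimal rotation has a closed form: the atan2 of the summed cross and dot products of the centred pairs. Open3D solves the same least-squares problem in 3D, where it is commonly done with an SVD. Everything here, names and data, is hypothetical.

```python
import math

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    cs = (sum(p[0] for p in src) / n, sum(p[1] for p in src) / n)
    cd = (sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n)
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - cs[0], py - cs[1]
        bx, by = qx - cd[0], qy - cd[1]
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]))
    return theta, t

def transform_2d(theta, t, pts):
    """Rotate by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in pts]

def icp_2d(source, target, iterations=30):
    """Point-to-point ICP; returns accumulated (theta, t) and the moved source."""
    theta_total, t_total = 0.0, (0.0, 0.0)
    current = list(source)
    for _ in range(iterations):
        # 1) correspondences: nearest target point for every current source point
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2) closed-form rigid alignment of the matched pairs
        theta, t = best_rigid_2d(current, matched)
        current = transform_2d(theta, t, current)
        # compose with the running total: x -> R(theta)(R_total x + t_total) + t
        c, s = math.cos(theta), math.sin(theta)
        t_total = (c * t_total[0] - s * t_total[1] + t[0],
                   s * t_total[0] + c * t_total[1] + t[1])
        theta_total += theta
    return theta_total, t_total, current
```

Because correspondences come from nearest neighbours, ICP only converges from a reasonable initial pose. This is why the samples run a global registration (RANSAC or FGR) first and use ICP for refinement.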

kdtree.py

(py36) D:\Open3D-0.4.0\examples\Python\Basic>python kdtree.py

Testing kdtree in open3d ...

Load a point cloud and paint it gray.

Paint the 1500th point red.

Find its 200 nearest neighbors, paint blue.

Find its neighbors with distance less than 0.2, paint green.

Visualize the point cloud.
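The sample builds a `KDTreeFlann` on the cloud and runs a k-nearest-neighbour query and a radius query around the 1500th point (`search_knn_vector_3d` and `search_radius_vector_3d`). The k-d tree only makes those queries fast. Up to the ordering of ties, the results match the brute-force plain-Python sketch below. The function names are hypothetical, and as in the sample's output, the query point itself counts as its own nearest neighbour.

```python
def sq_dist(p, q):
    """Squared Euclidean distance (no sqrt needed for comparisons)."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def knn_search(points, query, k):
    """Indices of the k points closest to query, nearest first."""
    return sorted(range(len(points)), key=lambda i: sq_dist(points[i], query))[:k]

def radius_search(points, query, radius):
    """Indices of points strictly closer than radius to query."""
    r2 = radius * radius
    return [i for i, p in enumerate(points) if sq_dist(p, query) < r2]
```

Brute force costs O(N) per query. A k-d tree typically brings that down to roughly O(log N), which matters for the 200-neighbour query on a full cloud.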

mesh.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python mesh.py

Testing mesh in open3d ...

Reading PLY: [========================================] 100%

TriangleMesh with 1440 points and 2880 triangles.

[[ 4.51268387 28.68865967 -76.55680847]

[ 7.63622284 35.52046967 -69.78063965]

[ 6.21986008 44.22465134 -64.82303619]

...

[-22.12651634 31.28466606 -87.37570953]

[-13.91188431 25.4865818 -86.25827026]

[ -5.27768707 23.36245346 -81.43279266]]

[[ 0 12 13]

[ 0 13 1]

[ 1 13 14]

...

[1438 11 1439]

[1439 11 0]

[1439 0 1428]]

Try to render a mesh with normals (exist: False) and colors (exist: False)

A mesh with no normals and no colors does not seem good.

Computing normal and rendering it.

[[ 0.79164373 -0.53951444 0.28674793]

[ 0.8319824 -0.53303008 0.15389681]

[ 0.83488162 -0.09250101 0.54260136]

...

[ 0.16269924 -0.76215917 -0.6266118 ]

[ 0.52755226 -0.83707495 -0.14489352]

[ 0.56778973 -0.76467734 -0.30476777]]

We make a partial mesh of only the first half triangles.

std::vector<Eigen::Vector3i> with 1440 elements.

Use numpy.asarray() to access data.

Painting the mesh
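The mesh looks flat in the first render because it has no normals; after computing them it is shaded. The log's "Computing normal and rendering it" step gives each vertex a normal. A common recipe, and my understanding of what the library does, has two steps:

1. take each triangle's normal from the cross product of two of its edges;
2. sum and normalise the normals of all triangles sharing a vertex.

The plain-Python sketch below follows that recipe. The names are hypothetical.

```python
import math

def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v) if n > 0 else (0.0, 0.0, 0.0)

def vertex_normals(vertices, triangles):
    """Unit normal per vertex: normalised sum of adjacent unit triangle normals."""
    acc = [[0.0, 0.0, 0.0] for _ in vertices]
    for a, b, c in triangles:
        pa, pb, pc = vertices[a], vertices[b], vertices[c]
        e1 = [pb[k] - pa[k] for k in range(3)]
        e2 = [pc[k] - pa[k] for k in range(3)]
        # Right-hand rule: counter-clockwise winding (seen from outside) points outward
        fn = _normalize(_cross(e1, e2))
        for idx in (a, b, c):
            for k in range(3):
                acc[idx][k] += fn[k]
    return [_normalize(v) for v in acc]
```

For the "partial mesh" step, the 1440 triangles printed are half of the 2880 in the full mesh.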

pointcloud.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python pointcloud.py

Load a ply point cloud, print it, and render it

Reading PLY: [========================================] 100%

PointCloud with 196133 points.

[[0.65234375 0.84686458 2.37890625]

[0.65234375 0.83984375 2.38430572]

[0.66737998 0.83984375 2.37890625]

...

[2.00839925 2.39453125 1.88671875]

[2.00390625 2.39488506 1.88671875]

[2.00390625 2.39453125 1.88793314]]

Downsample the point cloud with a voxel of 0.05

Recompute the normal of the downsampled point cloud

Print a normal vector of the 0th point

[ 0.85641574 0.01693013 -0.51600915]

Print the normal vectors of the first 10 points

[[ 8.56415744e-01 1.69301342e-02 -5.16009150e-01]

[-3.10071169e-01 3.92564590e-02 -9.49902522e-01]

[-2.21066308e-01 2.07235365e-07 -9.75258780e-01]

[-2.65577574e-01 -1.84601949e-01 -9.46250851e-01]

[-7.91944115e-01 -2.92017206e-02 -6.09894891e-01]

[-8.84912237e-02 -9.89400811e-01 1.15131831e-01]

[ 6.28492508e-01 -6.12988948e-01 -4.78791935e-01]

[ 7.28260110e-01 -4.73518839e-01 -4.95395924e-01]

[-5.07368635e-03 -9.99572767e-01 -2.87844085e-02]

[ 3.49295119e-01 1.16948013e-02 -9.36939780e-01]]

Load a polygon volume and use it to crop the original point cloud

Cropping geometry: [========================================] 100%

Paint chair
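The chair is cut out with a polygon selection volume loaded from a file. Conceptually, and this is an assumption about the semantics rather than a copy of the implementation, a point is kept when both conditions hold:

- its coordinate along one chosen axis lies within a min/max range;
- its projection onto the other two axes falls inside a 2D bounding polygon.

The sketch below implements that idea with an even-odd ray-casting point-in-polygon test, assuming Y is the chosen axis. All names are hypothetical.

```python
def point_in_polygon(u, v, polygon):
    """Even-odd ray casting: True if (u, v) lies inside the 2D polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        (u1, v1), (u2, v2) = polygon[i], polygon[(i + 1) % n]
        if (v1 > v) != (v2 > v):  # edge straddles the horizontal ray through v
            u_cross = u1 + (v - v1) * (u2 - u1) / (v2 - v1)
            if u < u_cross:
                inside = not inside
    return inside

def crop_y_axis(points, polygon_xz, y_min, y_max):
    """Keep points with y in [y_min, y_max] whose (x, z) lies inside the polygon."""
    return [p for p in points
            if y_min <= p[1] <= y_max and point_in_polygon(p[0], p[2], polygon_xz)]
```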

python_binding.py

(py36) D:\Open3D-0.4.0\examples\Python\Basic>python python_binding.py

PointCloud with 198835 points.

Help on package open3d.open3d in open3d:

NAME

open3d.open3d - Python binding of Open3D

PACKAGE CONTENTS

j_visualizer

open3d

FUNCTIONS

color_map_optimization(...) method of builtins.PyCapsule instance

color_map_optimization(mesh: open3d.open3d.TriangleMesh, imgs_rgbd: List[open3d.open3d.RGBDImage], camera: open3d.open3d.PinholeCameraTrajectory, option: open3d.open3d.ColorMapOptmizationOption=ColorMapOptmizationOption with

- non_rigid_camera_coordinate : 0

- number_of_vertical_anchors : 16

- non_rigid_anchor_point_weight : 0.316000

- maximum_iteration : 300.000000

- maximum_allowable_depth : 2.500000

- depth_threshold_for_visiblity_check : 0.030000

- depth_threshold_for_discontinuity_check : 0.100000

- half_dilation_kernel_size_for_discontinuity_map : 3) -> None

Function for color mapping of reconstructed scenes via optimization

compute_fpfh_feature(...) method of builtins.PyCapsule instance

compute_fpfh_feature(input: open3d.open3d.PointCloud, search_param: open3d.open3d.KDTreeSearchParam) -> open3d.open3d.Feature

Function to compute FPFH feature for a point cloud

compute_point_cloud_mahalanobis_distance(...) method of builtins.PyCapsule instance

compute_point_cloud_mahalanobis_distance(input: open3d.open3d.PointCloud) -> open3d.open3d.DoubleVector

Function to compute the Mahalanobis distance for points in a point cloud

compute_point_cloud_mean_and_covariance(...) method of builtins.PyCapsule instance

compute_point_cloud_mean_and_covariance(input: open3d.open3d.PointCloud) -> Tuple[numpy.ndarray[float64[3, 1]], numpy.ndarray[float64[3, 3]]]

Function to compute the mean and covariance matrix of a point cloud

compute_point_cloud_nearest_neighbor_distance(...) method of builtins.PyCapsule instance

compute_point_cloud_nearest_neighbor_distance(input: open3d.open3d.PointCloud) -> open3d.open3d.DoubleVector

-- More --

rgbd_nyu.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python rgbd_nyu.py

Read NYU dataset

RGBDImage of size

Color image : 640x480, with 1 channels.

Depth image : 640x480, with 1 channels.

Use numpy.asarray to access buffer data.

rgbd_odometry.py

(py36) D:\Open3D-0.4.0\examples\Python\Basic>python rgbd_odometry.py

[[525. 0. 319.5]

[ 0. 525. 239.5]

[ 0. 0. 1. ]]

OdometryOption class.

iteration_number_per_pyramid_level = [ 20, 10, 5, ]

max_depth_diff = 0.030000

min_depth = 0.000000

max_depth = 4.000000

Using RGB-D Odometry

[[ 9.99988275e-01 -7.36617570e-05 -4.84191029e-03 2.77175781e-04]

[ 1.67413000e-05 9.99930910e-01 -1.17547746e-02 2.29264601e-02]

[ 4.84244164e-03 1.17545557e-02 9.99919187e-01 5.96549283e-04]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

Using Hybrid RGB-D Odometry

[[ 9.99992973e-01 -2.51085987e-04 -3.74035293e-03 -1.07049785e-03]

[ 2.07047515e-04 9.99930714e-01 -1.17696192e-02 2.32280935e-02]

[ 3.74304897e-03 1.17687621e-02 9.99923740e-01 1.40592008e-03]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]
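Both odometry variants print the 4x4 transform between the two frames. It is easier to read as a rotation angle plus a translation length:

- the angle follows from the trace of the rotation block, θ = arccos((trace R - 1) / 2);
- the translation length is the norm of the last column.

The plain-Python sketch below applies this to the "Using RGB-D Odometry" matrix. It assumes the translation is in metres, which depends on the depth scale used.

```python
import math

def motion_magnitude(T):
    """Return (rotation angle in degrees, translation length) of a 4x4 rigid transform."""
    trace = T[0][0] + T[1][1] + T[2][2]
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding noise
    angle_deg = math.degrees(math.acos(cos_theta))
    translation = math.sqrt(T[0][3] ** 2 + T[1][3] ** 2 + T[2][3] ** 2)
    return angle_deg, translation

# "Using RGB-D Odometry" result copied from the log above
T_rgbd = [
    [9.99988275e-01, -7.36617570e-05, -4.84191029e-03, 2.77175781e-04],
    [1.67413000e-05, 9.99930910e-01, -1.17547746e-02, 2.29264601e-02],
    [4.84244164e-03, 1.17545557e-02, 9.99919187e-01, 5.96549283e-04],
    [0.0, 0.0, 0.0, 1.0],
]

angle, dist = motion_magnitude(T_rgbd)
print("rotation {:.2f} deg, translation {:.4f}".format(angle, dist))
```

For this matrix the result is roughly a 0.73 degree rotation and a 0.023 translation (about 2.3 cm if the units are metres). The hybrid estimate is of the same size, so the two methods agree on a small camera motion between the frames.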

rgbd_redwood.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python rgbd_redwood.py

Read Redwood dataset

RGBDImage of size

Color image : 640x480, with 1 channels.

Depth image : 640x480, with 1 channels.

Use numpy.asarray to access buffer data.
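Each of these loaders pairs a color image and a depth image (both 640x480 here) into an RGBDImage. The color is reported with 1 channel, presumably because it is converted to intensity. An RGBD image can then be turned into a point cloud by pinhole back-projection: a pixel (u, v) with depth d maps to x = (u - cx) d / fx, y = (v - cy) d / fy, z = d.

The plain-Python sketch below makes three assumptions for illustration:

- the intrinsics printed in the camera_trajectory.py and rgbd_odometry.py output (fx = fy = 525, cx = 319.5, cy = 239.5);
- raw depth stored in millimetres (depth scale 1000);
- a 3.0 m truncation.

```python
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5   # intrinsics printed in the logs above
DEPTH_SCALE = 1000.0                          # assumed: raw depth in millimetres

def backproject(depth, depth_trunc=3.0):
    """Convert a depth image (list of rows of raw values) into camera-space points."""
    points = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            z = raw / DEPTH_SCALE
            if z <= 0.0 or z > depth_trunc:
                continue  # missing or too-distant measurement
            points.append(((u - CX) * z / FX, (v - CY) * z / FY, z))
    return points

def project(point):
    """Inverse mapping: camera-space point -> pixel coordinates (u, v)."""
    x, y, z = point
    return FX * x / z + CX, FY * y / z + CY
```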

rgbd_sun.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python rgbd_sun.py

Read SUN dataset

RGBDImage of size

Color image : 640x480, with 1 channels.

Depth image : 640x480, with 1 channels.

Use numpy.asarray to access buffer data.

rgbd_tum.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python rgbd_tum.py

Read TUM dataset

RGBDImage of size

Color image : 640x480, with 1 channels.

Depth image : 640x480, with 1 channels.

Use numpy.asarray to access buffer data.

visualization.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python visualization.py

Load a ply point cloud, print it, and render it

Reading PLY: [========================================] 100%

Let's draw some primitives

We draw a few primitives using collection.

We draw a few primitives using + operator of mesh.

Let's draw a cubic that consists of 8 points and 12 lines

working_with_numpy.py


(py36) D:\Open3D-0.4.0\examples\Python\Basic>python working_with_numpy.py

xyz

[[-3. -3. 0.17846472]

[-2.985 -3. 0.17440115]

[-2.97 -3. 0.17063709]

...

[ 2.97 3. 0.17063709]

[ 2.985 3. 0.17440115]

[ 3. 3. 0.17846472]]

Writing PLY: [========================================] 100%

Reading PLY: [========================================] 100%

xyz_load

[[-3. -3. 0.17846472]

[-2.985 -3. 0.17440115]

[-2.97 -3. 0.17063709]

...

[ 2.97 3. 0.17063709]

[ 2.985 3. 0.17440115]

[ 3. 3. 0.17846472]]
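The `xyz` values come from a sinc surface sampled on a grid. The printed numbers are consistent with the following construction:

- x and y each take 401 values from -3 to 3 (step 0.015);
- z = sinc(x² + y²), using NumPy's normalised sinc sin(πt)/(πt);
- z is then rescaled to [0, 1].

This is why the corner value is 0.17846472: sinc(18) is 0, and it lands at -min/(max - min) after rescaling. The plain-Python sketch below reproduces the values under those assumptions, without NumPy, to show where the numbers come from. `xyz_load` matches `xyz` because the same points are written to and read back from a PLY file.

```python
import math

def sinc(t):
    """Normalised sinc, as in numpy.sinc."""
    return 1.0 if t == 0.0 else math.sin(math.pi * t) / (math.pi * t)

def sinc_surface(n=401, lo=-3.0, hi=3.0):
    """Grid points (x, y, z), x varying fastest, z = sinc(x^2 + y^2) rescaled to [0, 1]."""
    step = (hi - lo) / (n - 1)
    xs = [lo + i * step for i in range(n)]
    raw = [(x, y, sinc(x * x + y * y)) for y in xs for x in xs]
    z_min = min(p[2] for p in raw)
    z_max = max(p[2] for p in raw)
    return [(x, y, (z - z_min) / (z_max - z_min)) for x, y, z in raw]

xyz = sinc_surface()
print(xyz[0], xyz[1], xyz[-1])
```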

Benchmark

benchmark_fgr.py

...

office2:: matching 48-49

Reading PLY: [========================================] 100%

Reading PLY: [========================================] 100%

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: Apply fast global registration with distance threshold 0.025

[[ 0.95713891 -0.09688744 0.2729431 -0.19772407]

[ 0.09078796 0.99525781 0.03492042 -0.28293611]

[-0.2750321 -0.00864375 0.96139619 0.44358903]

[ 0. 0. 0. 1. ]]

benchmark_pre.py

On hold.

(py36) D:\Open3D-0.4.0\examples\Python\Benchmark>python benchmark_pre.py

Reading PLY: [========================================] 100%

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

Traceback (most recent call last):

File "benchmark_pre.py", line 49, in <module>

pickle.dump([source_down, source_fpfh], f)

TypeError: can't pickle open3d.open3d.PointCloud objects
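The script stopped because this Open3D build's `PointCloud` objects cannot be pickled. A common workaround is to pickle plain arrays instead and rebuild the geometry after loading. For example, use `numpy.asarray(source_down.points)` for the points and the feature's matrix, assuming it is exposed as `source_fpfh.data`. Points can be put back with `Vector3dVector`. This is an untested suggestion, not a fix from the sample. The helper below shows only the serialisation half with plain Python lists, so its names and layout are hypothetical.

```python
import io
import pickle

def dump_cloud_and_feature(points, feature, f):
    """Pickle points and a feature matrix as plain Python lists (no Open3D objects)."""
    pickle.dump({"points": [list(map(float, p)) for p in points],
                 "feature": [list(map(float, row)) for row in feature]}, f)

def load_cloud_and_feature(f):
    """Inverse of dump_cloud_and_feature."""
    data = pickle.load(f)
    return data["points"], data["feature"]

# Round trip through an in-memory binary file
buf = io.BytesIO()
dump_cloud_and_feature([(0.0, 0.0, 1.0), (0.1, 0.2, 1.5)], [[0.5, 0.5], [1.0, 0.0]], buf)
buf.seek(0)
points, feature = load_cloud_and_feature(buf)
```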

benchmark_ransac.py

...

office2:: matching 48-49

Reading PLY: [========================================] 100%

Reading PLY: [========================================] 100%

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: Downsample with a voxel size 0.050.

:: Estimate normal with search radius 0.100.

:: Compute FPFH feature with search radius 0.250.

:: RANSAC registration on downsampled point clouds.

Since the downsampling voxel size is 0.050,

we use a liberal distance threshold 0.075.

[[ 0.9566918 -0.08567279 0.27821031 -0.383805 ]

[ 0.07492634 0.99598184 0.0490533 -0.33100488]

[-0.28129495 -0.02608361 0.9592668 0.62173136]

[ 0. 0. 0. 1. ]]

Misc

color_image.py


(py36) D:\Open3D-0.4.0\examples\Python\Misc>python color_image.py

Testing image in open3d ...

Convert an image to numpy and draw it with matplotlib.

Image of size 1582x1058, with 3 channels.

Use numpy.asarray to access buffer data.

Convet a numpy image to Image and show it with DrawGeomtries().

(512, 512, 3)

Image of size 512x512, with 3 channels.

Use numpy.asarray to access buffer data.

Render a channel of the previous image.

(512, 512)

(512, 1)

Image of size 512x512, with 1 channels.

Use numpy.asarray to access buffer data.

Write the previous image to file then load it with matplotlib.

Testing basic image processing module.

evaluate_geometric_feature.py

(py36) D:\Open3D-0.4.0\examples\Python\Misc>python evaluate_geometric_feature.py

11.39% points in source pointcloud successfully found their correspondence.

feature.py


(py36) D:\Open3D-0.4.0\examples\Python\Misc>python feature.py

Load two aligned point clouds.

Load their FPFH feature and evaluate.

Black : matching distance > 0.2

White : matching distance = 0

[ViewControl] SetViewPoint() failed because window height and width are not set.

Load their L32D feature and evaluate.

Black : matching distance > 0.2

White : matching distance = 0
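The legend printed for both features maps each point's feature matching distance to a gray level: 0 is white and anything beyond 0.2 is black. A linear ramp between the two is one natural reading of that legend. This is an assumption, and the sample's exact mapping may differ. The function name is hypothetical.

```python
def distance_to_gray(distance, max_distance=0.2):
    """Map a matching distance to an RGB gray: 0 -> white (1.0), >= max -> black (0.0)."""
    clamped = min(max(distance, 0.0), max_distance)
    g = 1.0 - clamped / max_distance
    return (g, g, g)
```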

pose_graph_optimization.py

(py36) D:\Open3D-0.4.0\examples\Python\Misc>python pose_graph_optimization.py

Parameters for PoseGraph optimization ...

GlobalOptimizationLevenbergMarquardt

GlobalOptimizationConvergenceCriteria

> max_iteration : 100

> min_relative_increment : 0.000001

> min_relative_residual_increment : 0.000001

> min_right_term : 0.000001

> min_residual : 0.000001

> max_iteration_lm : 20

> upper_scale_factor : 0.666667

> lower_scale_factor : 0.333333

GlobalOptimizationOption

> max_correspondence_distance : 0.075000

> edge_prune_threshold : 0.250000

> preference_loop_closure : 1.000000

> reference_node : -1

Optimizing Fragment PoseGraph using open3d ...

PoseGraph with 100 nodes and 4090 edges.

Validating PoseGraph - finished.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 4090 edges.

Line process weight : 1042.862563

[Initial ] residual : 1.637548e+05, lambda : 1.497962e+03

[Iteration 00] residual : 9.502428e+03, valid edges : 3989, time : 0.060 sec.

[Iteration 01] residual : 4.505665e+03, valid edges : 3989, time : 0.060 sec.

Delta.norm() < 1.000000e-06 * (x.norm() + 1.000000e-06)

[GlobalOptimizationLM] total time : 0.170 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 4088 edges.

Line process weight : 1043.102250

[Initial ] residual : 2.882884e+03, lambda : 1.576682e+03

[Iteration 00] residual : 2.881731e+03, valid edges : 3989, time : 0.060 sec.

Delta.norm() < 1.000000e-06 * (x.norm() + 1.000000e-06)

[GlobalOptimizationLM] total time : 0.108 sec.

CompensateReferencePoseGraphNode : reference : -1

Optimizing Global PoseGraph using open3d ...

PoseGraph with 320 nodes and 13904 edges.

Validating PoseGraph - finished.

[GlobalOptimizationLM] Optimizing PoseGraph having 320 nodes and 13904 edges.

Line process weight : 18.104563

[Initial ] residual : 1.180879e+09, lambda : 4.157481e+01

[Iteration 00] residual : 2.998445e+05, valid edges : 390, time : 0.910 sec.

...

[Iteration 21] residual : 2.753939e+05, valid edges : 605, time : 1.038 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 22.635 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 320 nodes and 1243 edges.

Line process weight : 28.662674

[Initial ] residual : 4.879364e+04, lambda : 6.815879e+01

[Iteration 00] residual : 4.855143e+04, valid edges : 605, time : 0.044 sec.

...

[Iteration 07] residual : 4.852427e+04, valid edges : 605, time : 0.045 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.401 sec.

CompensateReferencePoseGraphNode : reference : -1
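Each Levenberg-Marquardt run ends on one of the two stopping tests printed in the log, and both translate directly into code. Below is a minimal sketch using the thresholds from the parameter dump. I am assuming from the names that the step test uses `min_relative_increment` and the residual test uses `min_relative_residual_increment`; both are 1e-6 here.

```python
def step_too_small(delta_norm, x_norm, min_relative_increment=1e-6):
    """'Delta.norm() < 1.000000e-06 * (x.norm() + 1.000000e-06)'"""
    eps = min_relative_increment
    return delta_norm < eps * (x_norm + eps)

def residual_stalled(current, new, min_relative_residual_increment=1e-6):
    """'Current_residual - new_residual < 1.000000e-06 * current_residual'"""
    return current - new < min_relative_residual_increment * current

# Residual pairs copied from the log above.
print(residual_stalled(1.637548e+05, 9.502428e+03))  # False: big drop on the first iteration
print(residual_stalled(2.882884e+03, 2.881731e+03))  # False: ~4e-4 relative drop, still above 1e-6
```

In the second fragment run the residual barely moves, but the relative drop is still above 1e-6. That run therefore stopped on the step-size test, which matches the log.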

sampling.py

On hold. Running it fails with the following error:

(py36) D:\Open3D-0.4.0\examples\Python\Misc>python sampling.py

Traceback (most recent call last):

File "sampling.py", line 8, in <module>

from common import *

ModuleNotFoundError: No module named 'common'
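sampling.py expects a helper module named `common` on the import path, and running the script from Misc does not put it there. A generic workaround is to add the folder that contains common.py to `sys.path` before the import. I have not confirmed which folder that is in the 0.4.0 tree, so the directory in the sketch below is a placeholder. `import_from` is a hypothetical helper, not part of the samples.

```python
import importlib
import os
import sys

def import_from(directory, module_name):
    """Put `directory` at the front of sys.path, then import `module_name`.

    `directory` stands for whichever folder holds the missing module;
    for sampling.py that is the folder containing common.py (not identified here).
    """
    directory = os.path.abspath(directory)
    if directory not in sys.path:
        sys.path.insert(0, directory)
    importlib.invalidate_caches()   # make sure newly added folders/files are seen
    return importlib.import_module(module_name)
```

Inside sampling.py itself, the equivalent fix is `import sys` followed by `sys.path.append(...)` with that folder, placed just above `from common import *`.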

vector.py

(py36) D:\Open3D-0.4.0\examples\Python\Misc>python vector.py

Testing vector in open3d ...

Testing IntVector ...

IntVector[1, 2, 3, 4, 5]

[1 2 3 4 5]

IntVector[10, 22, 3, 4, 5]

IntVector[5, 2, 3, 4, 5]

IntVector[2, 2, 3, 4, 5]

IntVector[40, 50, 3, 4, 5]

IntVector[1, 2, 3, 4, 5]

Testing DoubleVector ...

DoubleVector[1, 2, 3]

DoubleVector[1.1, 1.2]

DoubleVector[0.1, 0.2]

DoubleVector[1.1, 1.2, 1.3, 0.1, 0.2]

Testing Vector3dVector ...

std::vector<Eigen::Vector3d> with 2 elements.

Use numpy.asarray() to access data.

[[1. 2. 3. ]
[0.1 0.2 0.3]]

[[4. 5. 6. ]
[0.1 0.2 0.3]]

[[-1. 5. 6. ]
[ 0.1 0.2 0.3]]

[[0. 5. 6. ]
[0.1 0.2 0.3]]

[[10. 11. 3. ]
[12. 13. 0.3]]

[[ 1. 2. 3. ]
[ 0.1 0.2 0.3]
[30. 31. 32. ]]

[[1. 2. 3. ]
[0.1 0.2 0.3]
[0. 5. 6. ]
[0.1 0.2 0.3]]

Testing Vector3iVector ...

std::vector<Eigen::Vector3i> with 2 elements.

Use numpy.asarray() to access data.

[[1 2 3]
[4 5 6]]
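The hint "Use numpy.asarray() to access data." means these containers are meant to be handled as numpy arrays. I am assuming that `np.asarray` returns a view over the same buffer rather than a copy. That is my understanding of Open3D's vector types, and this log does not prove it. Under that assumption, the semantics are the same as for a plain numpy array. In the sketch below a numpy array stands in for the Open3D vector.

```python
import numpy as np

# A plain (N, 3) float array stands in for the buffer behind a Vector3dVector.
points = np.array([[1.0, 2.0, 3.0], [0.1, 0.2, 0.3]])

view = np.asarray(points)        # no copy: same memory as `points`
view[0] = [4.0, 5.0, 6.0]        # so this write shows up in `points`

copy = np.array(points)          # np.array copies by default
copy[0, 0] = -1.0                # `points` is unaffected

# Appending returns a new, larger array; the original keeps its shape.
grown = np.vstack([points, [[30.0, 31.0, 32.0]]])

print(points)
print(grown.shape)               # (3, 3)
```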

ReconstructionSystem

run_system.py

On hold. Its help message is shown below.

Reconstruction system

positional arguments:

config path to the config file

optional arguments:

-h, --help show this help message and exit

--make Step 1) make fragments from RGBD sequence

--register Step 2) register all fragments to detect loop closure

--refine Step 3) refine rough registrations

--integrate Step 4) integrate the whole RGBD sequence to make final mesh

--debug_mode turn on debug mode
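The help text above describes the whole CLI. It takes one positional config path, four step flags and a debug switch. Below is an argparse sketch that would produce equivalent help. I reconstructed it from the output and did not copy it from run_system.py. Using `store_true` for the flags is an assumption, based on the help showing no value placeholder after them. The config file name is hypothetical.

```python
import argparse

def build_parser():
    # Reconstructed from the printed help, not taken from run_system.py.
    parser = argparse.ArgumentParser(description="Reconstruction system")
    parser.add_argument("config", help="path to the config file")
    parser.add_argument("--make", action="store_true",
                        help="Step 1) make fragments from RGBD sequence")
    parser.add_argument("--register", action="store_true",
                        help="Step 2) register all fragments to detect loop closure")
    parser.add_argument("--refine", action="store_true",
                        help="Step 3) refine rough registrations")
    parser.add_argument("--integrate", action="store_true",
                        help="Step 4) integrate the whole RGBD sequence to make final mesh")
    parser.add_argument("--debug_mode", action="store_true",
                        help="turn on debug mode")
    return parser

# Hypothetical invocation: python run_system.py my_config.json --make --register
args = build_parser().parse_args(["my_config.json", "--make", "--register"])
for step in ("make", "register", "refine", "integrate"):   # pipeline order
    if getattr(args, step):
        print("running step:", step)
```

Each step is a separate flag, so the steps can presumably be run one at a time or chained in a single call.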

Summary

- Installed Open3D for Python.

- Ran the sample programs.

- With this setup, 3D processing in Python is straightforward.