This article is based on a great post on the Google AI Blog.

https://ai.googleblog.com/

Introduction

In recent years we have witnessed an explosion of progress in AI, with deep learning methods enabling computer vision algorithms to recognise objects in pictures. Despite this progress, automatic photography remains a very exciting challenge.

Can a camera capture a great moment automatically?

Three Principles in Google Clips

- All computation is performed on-device

- The device captures short videos instead of just a single perfect instant in time

- The device focuses on candid moments of people and animals

Learning to Recognise Great Moments in Life

Creation of Datasets

The team tried two approaches to building the dataset.

- First, they collected thousands of video clips covering diverse scenarios and people of a variety of ethnicities, genders and ages, and asked professional photographers and video editors to manually pick out the best short clips. However, it turned out to be hard to train an algorithm solely from these subjective human selections; in particular, labelling video clips on a scale from "perfect" to "terrible" did not work well.

- To address these problems, they split each video into short segments, picked two segments at random and showed them to human raters online, who chose the one they preferred. Picking the better of two options is easier for a rater than assigning an absolute score. By aggregating many of these judgements, they could build a continuous quality score across the length of each video. This approach, called pairwise comparison, finally allowed them to evaluate the moments in the video clips reliably, and it worked very well.
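A minimal sketch of the pairwise idea in Python. It assumes fixed-length segments and a simulated rater; the function names, the 30-frame segment length and the win-rate aggregation are illustrative assumptions rather than Google's method, which trains a model on the comparisons instead.

```python
import random
from collections import defaultdict

def split_into_segments(num_frames, segment_len):
    """Split a video of num_frames frames into consecutive (start, end) frame ranges."""
    return [(start, min(start + segment_len, num_frames))
            for start in range(0, num_frames, segment_len)]

def sample_pair(num_segments, rng):
    """Pick two distinct segment indices at random to show to a rater."""
    a, b = rng.sample(range(num_segments), 2)
    return a, b

def win_rates(comparisons, num_segments):
    """Fraction of its comparisons that each segment won (a crude, hypothetical quality score)."""
    wins = defaultdict(int)
    shown = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        shown[winner] += 1
        shown[loser] += 1
    return [wins[i] / shown[i] if shown[i] else 0.0 for i in range(num_segments)]

# Usage: a simulated rater who always prefers the segment with higher hidden quality.
rng = random.Random(0)
segments = split_into_segments(num_frames=300, segment_len=30)  # 10 segments
hidden_quality = [0.1, 0.25, 0.9, 0.8, 0.3, 0.2, 0.05, 0.5, 0.6, 0.4]
comparisons = []
for _ in range(2000):
    a, b = sample_pair(len(segments), rng)
    winner, loser = (a, b) if hidden_quality[a] > hidden_quality[b] else (b, a)
    comparisons.append((winner, loser))
scores = win_rates(comparisons, len(segments))
best = max(range(len(scores)), key=scores.__getitem__)  # index 2, the 0.9 segment
```

Each rater only answers "which of these two?", yet the aggregated answers still recover an ordering over every segment of the video.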

Example of Pairwise comparison

Dataset Description

Number of pairwise comparisons: 50,000,000

Number of clips: over 1,000

Model Architecture

Given the dataset described above, they trained a neural network based on the idea that knowing what is in a photo helps the device determine its "interestingness".

They started by picking photos from the rated short segments of the video clips. Then, using the state-of-the-art technology behind Google Photos and Google Image Search, which can predict over 27,000 different labels, they asked the photographers to choose the labels most relevant to those photos. With these labels, they categorised the videos, because what counts as a great moment varies considerably depending on the category a video belongs to.
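The label step can be sketched as follows, assuming the image content model returns a probability for each label. The label names, the category map and the rule of summing probabilities are hypothetical, chosen only to show how a small hand-picked subset of the 27,000 labels can be turned into features and a category.

```python
def relevant_label_features(label_probs, relevant_labels):
    """Keep only the chosen labels, in a fixed order, as a feature vector."""
    return [label_probs.get(label, 0.0) for label in relevant_labels]

def dominant_category(label_probs, categories):
    """Hypothetical rule: assign a photo to the category whose labels
    have the highest total probability."""
    totals = {name: sum(label_probs.get(label, 0.0) for label in labels)
              for name, labels in categories.items()}
    return max(totals, key=totals.get)

# Usage with made-up label probabilities for one photo.
probs = {"dog": 0.9, "ball": 0.6, "smile": 0.1, "sky": 0.8}
relevant = ["dog", "smile", "hug", "ball"]  # hypothetical photographer-chosen labels
features = relevant_label_features(probs, relevant)  # [0.9, 0.1, 0.0, 0.6]
categories = {"pets": ["dog", "cat", "ball"], "people": ["smile", "hug", "baby"]}
category = dominant_category(probs, categories)  # "pets"
```

Labels the photographers did not choose (here "sky") simply drop out of the features.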

Because the MobileNet Image Content Model can detect the most interesting objects in a picture at a relatively small computational cost, all computation can be performed on-device.
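MobileNet architectures keep computation low mainly by replacing standard convolutions with depthwise separable convolutions. The arithmetic below compares the multiply-add counts of the two for a single layer; the layer sizes are illustrative, and this does not describe the actual layers of the model running on Google Clips.

```python
def standard_conv_madds(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution with m input channels,
    n output channels and a df x df output feature map."""
    return dk * dk * m * n * df * df

def separable_conv_madds(dk, m, n, df):
    """Multiply-adds of a depthwise separable convolution: one dk x dk depthwise
    filter per input channel, then a 1 x 1 pointwise convolution from m to n channels."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise

# Usage: a 3 x 3 layer with 512 -> 512 channels on a 14 x 14 feature map.
standard = standard_conv_madds(3, 512, 512, 14)    # 462,422,016
separable = separable_conv_madds(3, 512, 512, 14)  # 52,283,392
ratio = separable / standard                       # equals 1/n + 1/dk**2, about 0.113
```

The ratio simplifies to 1/n + 1/dk², so with 3 x 3 kernels the saving approaches 9x as the number of output channels grows.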

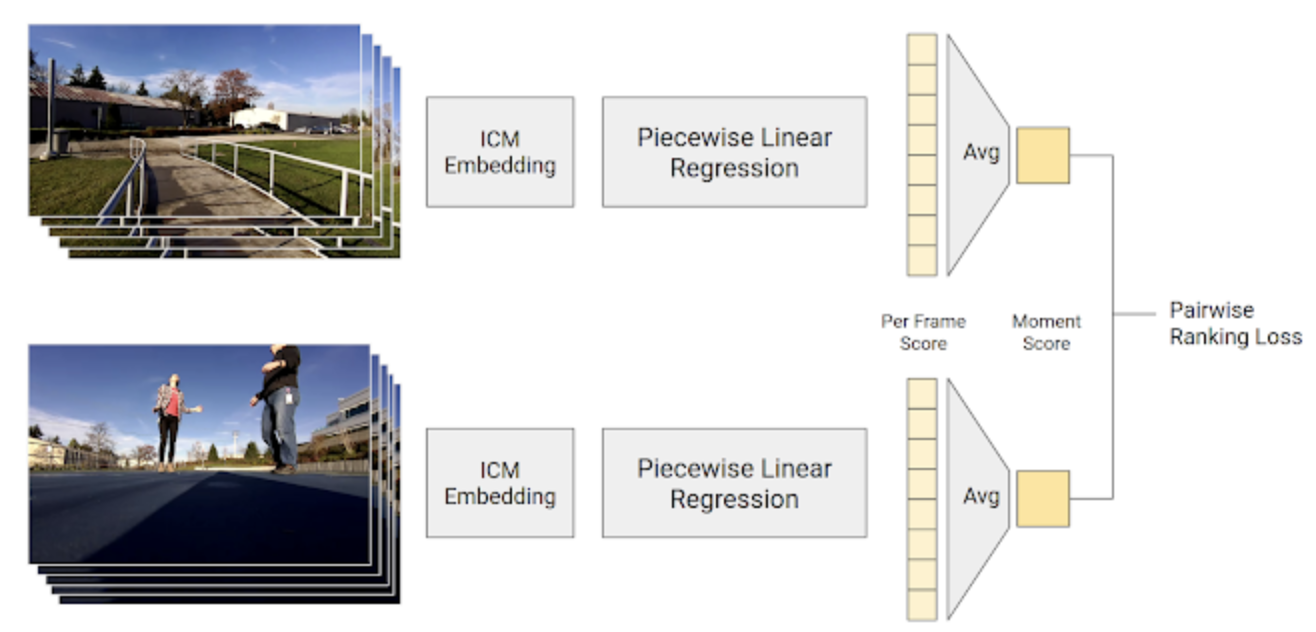

As for the training procedure: first, each frame of the video is embedded into a vector. Next, a per-frame base score is computed from these vectors with a piecewise linear regression, and the base scores are averaged across the segment. The result is the moment score of that short video segment.
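The three steps can be sketched as below. The text only says "piecewise linear regression", so this sketch assumes a linear projection of each frame embedding to a scalar followed by a piecewise linear function defined by knots. In a real model the weights and knots would be learned; here they are made-up numbers, and the 2-D embeddings stand in for real image-content embeddings.

```python
from bisect import bisect_right

def piecewise_linear(z, knots_x, knots_y):
    """Evaluate the piecewise linear function through (knots_x[i], knots_y[i]),
    held constant outside the knot range. knots_x must be strictly increasing."""
    if z <= knots_x[0]:
        return knots_y[0]
    if z >= knots_x[-1]:
        return knots_y[-1]
    i = bisect_right(knots_x, z)  # knots_x[i - 1] <= z < knots_x[i]
    x0, x1 = knots_x[i - 1], knots_x[i]
    y0, y1 = knots_y[i - 1], knots_y[i]
    return y0 + (z - x0) / (x1 - x0) * (y1 - y0)

def base_score(embedding, weights, bias, knots_x, knots_y):
    """Per-frame base score: project the embedding to a scalar (assumed),
    then apply the piecewise linear function."""
    z = sum(w * e for w, e in zip(weights, embedding)) + bias
    return piecewise_linear(z, knots_x, knots_y)

def moment_score(frame_embeddings, weights, bias, knots_x, knots_y):
    """Moment score of a segment: the mean of its per-frame base scores."""
    scores = [base_score(e, weights, bias, knots_x, knots_y) for e in frame_embeddings]
    return sum(scores) / len(scores)

# Usage with made-up 2-D embeddings for a 3-frame segment.
weights, bias = [1.0, -0.5], 0.0
knots_x, knots_y = [-1.0, 0.0, 1.0], [0.0, 0.2, 1.0]
segment = [[0.5, 0.0], [1.0, 1.0], [2.0, 0.0]]  # projections z = 0.5, 0.5, 2.0
result = moment_score(segment, weights, bias, knots_x, knots_y)  # about 0.733
```

Averaging per-frame scores means a segment is rated as a whole, so a single good frame in an otherwise dull segment does not dominate.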

Put simply, given a pairwise comparison, the model is trained to compute a higher moment score for the video segment preferred by humans.
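This article does not specify the training loss, so the sketch below assumes a standard pairwise logistic (RankNet-style) loss and replaces the real network with a linear score over a 2-D toy feature vector; the data are synthetic. It shows the core idea: the loss is small when the preferred segment receives the higher score, so gradient descent pushes the weights toward whatever features humans prefer.

```python
import math
import random

def score(w, x):
    """Linear moment score of a segment feature vector x (stand-in for the real model)."""
    return sum(wi * xi for wi, xi in zip(w, x))

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def pairwise_loss(w, preferred, other):
    """Stable log(1 + exp(-margin)); small when the preferred segment scores higher."""
    margin = score(w, preferred) - score(w, other)
    return max(0.0, -margin) + math.log1p(math.exp(-abs(margin)))

def train_pairwise(pairs, dim, lr=0.1, epochs=50, seed=0):
    """Stochastic gradient descent on the (assumed) pairwise logistic loss."""
    rng = random.Random(seed)
    pairs = list(pairs)
    w = [0.0] * dim
    for _ in range(epochs):
        rng.shuffle(pairs)
        for preferred, other in pairs:
            margin = score(w, preferred) - score(w, other)
            grad_margin = -sigmoid(-margin)  # derivative of the loss w.r.t. the margin
            for j in range(dim):
                w[j] -= lr * grad_margin * (preferred[j] - other[j])
    return w

# Toy data: feature 0 drives the simulated human preference, feature 1 is noise.
rng = random.Random(1)
pairs = []
for _ in range(200):
    a = [rng.random(), rng.random()]
    b = [rng.random(), rng.random()]
    pairs.append((a, b) if a[0] > b[0] else (b, a))

w = train_pairwise(pairs, dim=2)
accuracy = sum(score(w, p) > score(w, o) for p, o in pairs) / len(pairs)
mean_loss = sum(pairwise_loss(w, p, o) for p, o in pairs) / len(pairs)
```

After training, w[0] should be clearly larger than w[1], because only feature 0 decides the simulated preference.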

Google Clips

Google has recently released Google Clips, a new hands-free camera that automatically captures interesting moments in your life.