Main Question

Can we teach computers to learn like humans do, by combining the power of memorization and generalization?

Study Resources

TensorFlow Tutorial

Nice Qiita Post in Japanese

Research Blog

Visual Concept

Case Study

Let's say one day you wake up with an idea for a new app called FoodIO. A user of the app just needs to say out loud what kind of food they are craving (the query). The app magically predicts the dish the user will like best, and that dish is delivered to the user's front door (the item). Your key metric is the consumption rate: if the user eats the dish, the score is 1; otherwise it is 0 (the label).

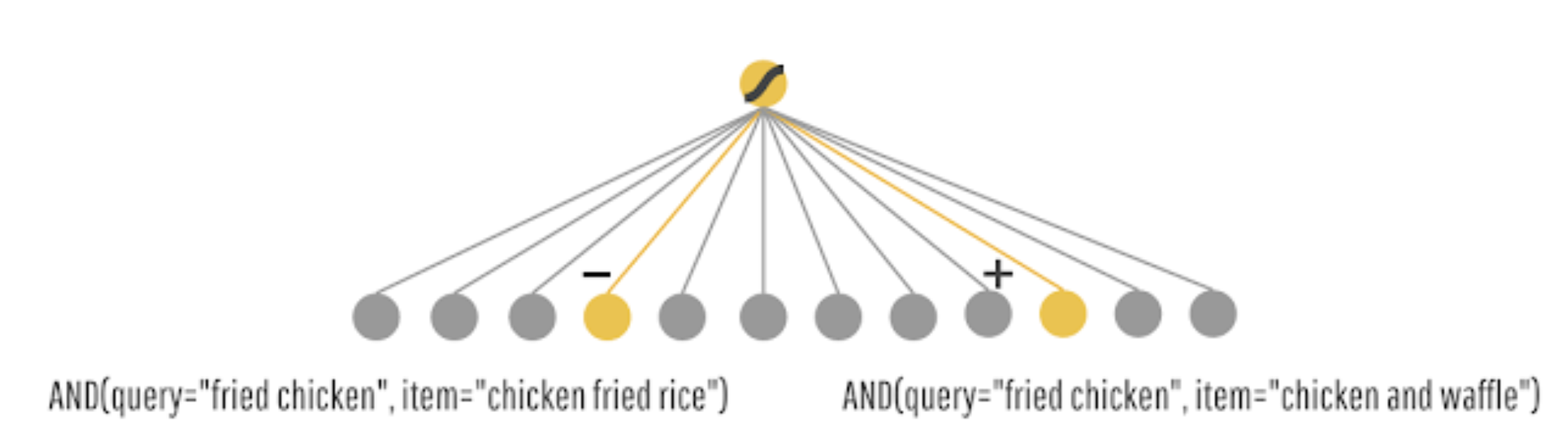
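The setup above boils down to logged (query, item, label) triples, and the consumption rate is simply the mean of the 0/1 labels. Below is a minimal Python sketch. The `Example` record, the `consumption_rate` helper, and the log entries are all hypothetical and were made up for illustration:

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One logged delivery (hypothetical schema)."""
    query: str  # what the user said out loud
    item: str   # the dish that was delivered
    label: int  # 1 if the user ate the dish, 0 otherwise


def consumption_rate(examples: list[Example]) -> float:
    """Fraction of delivered dishes that were eaten, i.e. the mean of the 0/1 labels."""
    if not examples:
        return 0.0
    return sum(e.label for e in examples) / len(examples)


# Made-up log entries, for illustration only.
log = [
    Example("fried chicken", "chicken and waffles", 1),
    Example("fried chicken", "chicken fried rice", 0),
    Example("seafood", "shrimp pad thai", 1),
    Example("hot latte with whole milk", "hot latte with whole milk", 1),
]

print(consumption_rate(log))  # 0.75
```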

You come up with some simple rules to start, like returning the items that match the most characters in the query, and you release the first version of FoodIO. Unfortunately, you find that the consumption rate is pretty low because the matches are too crude to be really useful (people shouting “fried chicken” end up getting “chicken fried rice”), so you decide to add machine learning to learn from the data.
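The failure of the first version can be reproduced with a sketch of the character-matching rule. This sketch assumes that "match the most characters" means counting characters shared with the query, with multiplicity. The helper names and the three-dish menu are hypothetical. Because "chicken fried rice" contains every character of "fried chicken", it wins:

```python
from collections import Counter


def char_overlap(query: str, item: str) -> int:
    """Number of characters (counted with multiplicity) shared by query and item."""
    return sum((Counter(query) & Counter(item)).values())


def rule_based_match(query: str, menu: list[str]) -> str:
    """Return the menu item that shares the most characters with the query."""
    return max(menu, key=lambda item: char_overlap(query, item))


menu = ["chicken fried rice", "chicken and waffles", "caesar salad"]  # hypothetical menu
print(rule_based_match("fried chicken", menu))  # chicken fried rice
```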

Wide Model

The wide model is good at memorizing sparse, specific rules.

Ex) cross feature AND(query="fried chicken", item="chicken and waffles")

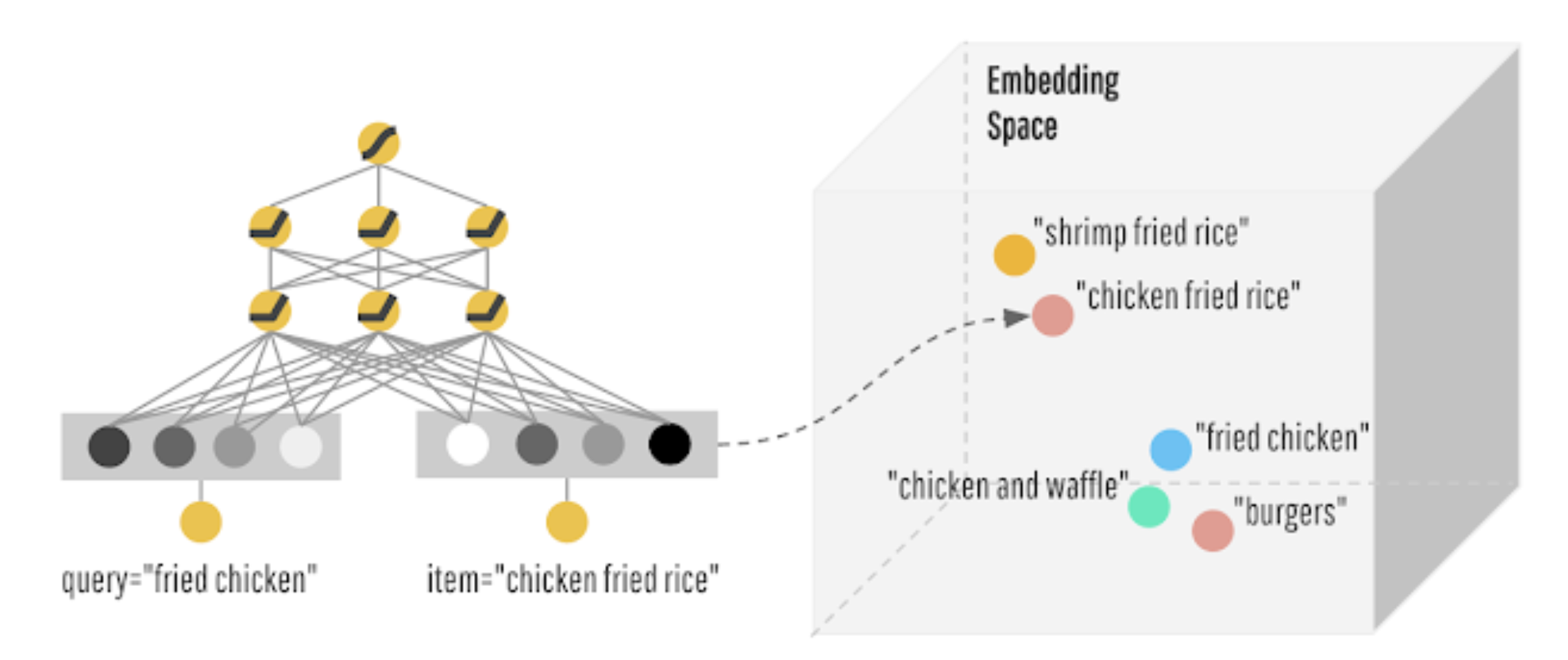
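Below is a minimal sketch of the wide model as logistic regression over one-hot cross features, trained with plain stochastic gradient descent on log loss. It is an illustration, not TensorFlow's implementation. The class, the learning rate, and the training pairs are hypothetical. The model memorizes the exact pairs it has seen, but an unseen pair falls back to the bias alone:

```python
import math


def cross_feature(query: str, item: str) -> str:
    """Cross-product transformation: a binary feature that is on only for this exact pair."""
    return f'AND(query="{query}", item="{item}")'


class WideModel:
    """Logistic regression over sparse cross features (hypothetical sketch)."""

    def __init__(self, lr: float = 0.5):
        self.weights: dict[str, float] = {}  # one weight per cross feature seen in training
        self.bias = 0.0
        self.lr = lr

    def predict(self, query: str, item: str) -> float:
        # Unseen cross features have no weight, so only the bias contributes.
        z = self.weights.get(cross_feature(query, item), 0.0) + self.bias
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, examples, epochs: int = 50) -> None:
        for _ in range(epochs):
            for query, item, label in examples:
                # Gradient of log loss with respect to the logit is (prediction - label).
                err = self.predict(query, item) - label
                key = cross_feature(query, item)
                self.weights[key] = self.weights.get(key, 0.0) - self.lr * err
                self.bias -= self.lr * err


# Made-up training pairs, for illustration only.
train = [
    ("fried chicken", "chicken and waffles", 1),
    ("fried chicken", "chicken fried rice", 0),
]
model = WideModel()
model.fit(train)
print(model.predict("fried chicken", "chicken and waffles"))  # high: memorized positive pair
print(model.predict("fried chicken", "chicken fried rice"))   # low: memorized negative pair
print(model.predict("fried chicken", "hamburger"))            # near 0.5: no rule, no generalization
```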

Deep Model

The deep model can generalize to similar items via embeddings.

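Ex) query="fried chicken", item="chicken fried rice" (the two are close in embedding space even if this pair never appeared in training, which can also lead to over-generalization)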

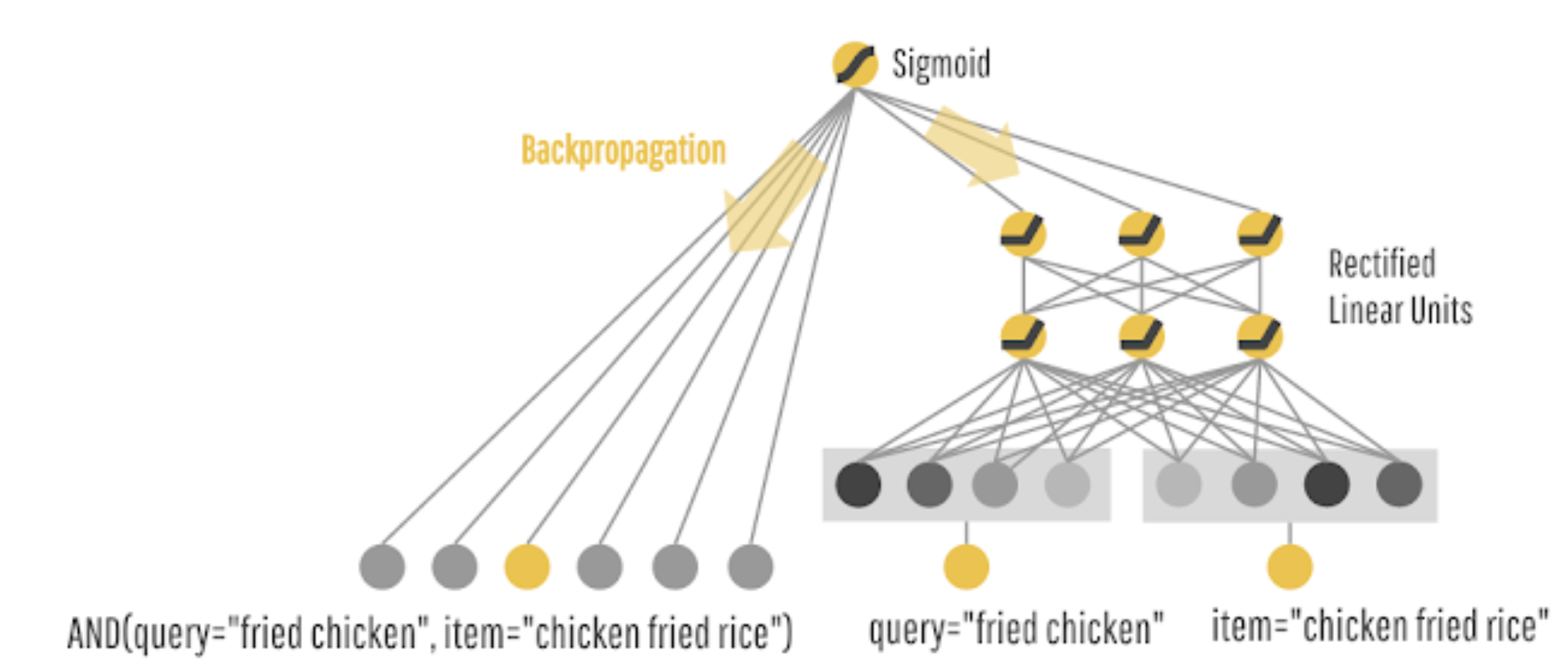
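The sketch below shows how embeddings let the deep model score pairs it has never seen. To keep it short, it replaces the feed-forward layers with cosine similarity between the query and item embeddings. The 3-d vectors are hand-picked hypothetical values; a real model learns them from data:

```python
import math

# Hypothetical 3-d embeddings, hand-picked for illustration; a real deep model learns them.
EMBEDDINGS = {
    "fried chicken": [0.9, 0.9, 0.0],
    "chicken and waffles": [0.7, 0.8, 0.1],
    "chicken fried rice": [0.6, 0.7, 0.8],
    "caesar salad": [0.1, 0.0, 0.2],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def deep_score(query: str, item: str) -> float:
    """Score a pair by embedding closeness; works even for pairs never seen together."""
    return cosine(EMBEDDINGS[query], EMBEDDINGS[item])


for item in ["chicken and waffles", "chicken fried rice", "caesar salad"]:
    print(item, round(deep_score("fried chicken", item), 3))
```

"Chicken fried rice" still scores fairly high because it sits close to "fried chicken" in the embedding space. This is the kind of over-generalization that memorized rules can correct.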

Wide & Deep Model

While analyzing customer behavior, we found two distinct types of queries:

- Very targeted and specific query: "Hot latte with whole milk"

- More exploratory and vague queries: "Seafood", "Italian food"

Approach: Why not combine both models?

In the combined model, the cross-feature transformations in the wide component memorize sparse, specific rules (which serve targeted queries), while the deep component generalizes to similar items via embeddings (which serves exploratory queries).
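In the original Wide & Deep formulation, the two parts are combined by summing their outputs into one logit, P(Y=1|x) = σ(w_wide^T [x, φ(x)] + w_deep^T a^(l_f) + b), and both parts are trained jointly. The sketch below covers only the forward pass. All parameters are hypothetical values made up as if training had already happened. For brevity, the wide part uses only the cross feature φ(x) and omits the raw features x:

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameters, made up as if training had already happened.
WIDE_WEIGHTS = {  # one weight per (query, item) cross feature
    ("fried chicken", "chicken and waffles"): 2.0,
    ("fried chicken", "chicken fried rice"): -2.0,
}
EMBEDDINGS = {
    "fried chicken": [1.0, 0.0],
    "chicken and waffles": [0.9, 0.1],
    "chicken fried rice": [0.7, 0.5],
    "hamburger": [0.8, 0.0],
}
W_HIDDEN = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]  # 2 hidden units over concatenated embeddings
W_DEEP = [0.5, -0.5]  # output weights on the last hidden layer a^(l_f)
BIAS = -1.0


def wide_logit(query: str, item: str) -> float:
    # Only the single active cross feature contributes; unseen pairs contribute 0.
    return WIDE_WEIGHTS.get((query, item), 0.0)


def deep_logit(query: str, item: str) -> float:
    x = EMBEDDINGS[query] + EMBEDDINGS[item]  # concatenate query and item embeddings
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W_HIDDEN]  # ReLU layer
    return sum(w * h for w, h in zip(W_DEEP, hidden))


def wide_and_deep(query: str, item: str) -> float:
    """P(consumed) from the sum of the wide logit, the deep logit, and a shared bias."""
    return sigmoid(wide_logit(query, item) + deep_logit(query, item) + BIAS)


for item in ["chicken and waffles", "chicken fried rice", "hamburger"]:
    print(item, round(wide_and_deep("fried chicken", item), 3))
```

For an unseen pair such as "hamburger", the wide term is zero and the deep part alone decides. For memorized pairs, the wide weight pushes the prediction up or down.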