MobileNet-SSD-RealSense

I wrote an English article, here

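◆ Previous article

Forcing YoloV3 to run on the CPU alone with OpenVINO [4-5 FPS / CPU only] [Part 3]

◆ Introduction

2019.01.04: I have since tuned performance to four times what is shown here. The article linked below is far faster than this one.

[24 FPS, 48 FPS] The day a single Neural Compute Stick 2 on a RaspberryPi3 unleashed its true power and Goku became a true Super Saiyan

For once it looked like I would have a solid block of free time, so this time I wrote the entire program properly before posting.

There is no official sample, so everything here is implemented from scratch.

The title is quite exaggerated. Sorry.

On 2018/12/19 the Neural Compute Stick 2 (NCS2) gained RaspberryPi support, so I immediately reused the logic from a past article to verify how much performance improves.

[Past article] [Detection rate approx. 30 FPS] Achieving a MobilenetSSD object detection rate "slightly slower than" the TX2 on a RaspberryPi3 Model B (non-plus) while supporting MultiModel (VOC+WIDER FACE)

According to Intel, the NCS2 delivers eight times the performance of the first-generation Neural Compute Stick. But is that really true?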

◆ Environment

- Ubuntu 16.04 (work PC)

- RaspberryPi 3 (Raspbian Stretch)

- Neural Compute Stick 2 (NCS2)

- MicroSDCard 32GB

- USB Camera (PlaystationEye)

- OpenVINO R5 2018.5.445

- OpenCV 4.0.1-openvino

◆ Installation procedure

● Installing OpenVINO on the work PC

See "Installing Ubuntu 16.04 + OpenVINO on a LattePanda Alpha 864 (no OS included) and enjoying blazing-fast Semantic Segmentation with the Neural Compute Stick (NCS1) and Neural Compute Stick 2 (NCS2)" or the official "Install the Intel® Distribution of OpenVINO™ Toolkit for Raspbian OS".

The work PC needs a full installation of OpenVINO.

● Installing OpenVINO on the RaspberryPi3

$ sudo apt update

$ sudo apt upgrade

$ wget "https://drive.google.com/open?id=1rBl_3kU4gsx-x2NG2I5uIhvA3fPqm8uE" -O l_openvino_toolkit_ie_p_2018.5.445.tgz

$ tar -zxf l_openvino_toolkit_ie_p_2018.5.445.tgz

$ rm l_openvino_toolkit_ie_p_2018.5.445.tgz

$ sed -i "s|<INSTALLDIR>|$(pwd)/inference_engine_vpu_arm|" inference_engine_vpu_arm/bin/setupvars.sh

The following line in setupvars.sh

INSTALLDIR=<INSTALLDIR>

changes to:

INSTALLDIR=/home/pi/inference_engine_vpu_arm
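For readers less familiar with sed, the substitution above can be pictured as a plain string replacement. The following is only an illustrative sketch: `fill_install_dir` is a hypothetical helper, not part of the OpenVINO package, and it mimics sed's default behaviour of replacing the first match on each line.

```python
def fill_install_dir(script_text: str, install_dir: str) -> str:
    """Replace the first <INSTALLDIR> placeholder on each line, like sed's s|a|b|."""
    lines = script_text.splitlines(keepends=True)
    return "".join(line.replace("<INSTALLDIR>", install_dir, 1) for line in lines)


# The line before and after the substitution, as shown in the text
before = "INSTALLDIR=<INSTALLDIR>\n"
after = fill_install_dir(before, "/home/pi/inference_engine_vpu_arm")
print(after, end="")
```

In the real command the directory comes from `$(pwd)`, so the result depends on where the archive was extracted.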

$ nano ~/.bashrc

### Append the following line

source /home/pi/inference_engine_vpu_arm/bin/setupvars.sh

$ source ~/.bashrc

### Success if the following is displayed

[setupvars.sh] OpenVINO environment initialized

$ sudo usermod -a -G users "$(whoami)"

$ sudo reboot

$ uname -a

Linux raspberrypi 4.14.79-v7+ #1159 SMP Sun Nov 4 17:50:20 GMT 2018 armv7l GNU/Linux

$ sh inference_engine_vpu_arm/install_dependencies/install_NCS_udev_rules.sh

### The following is displayed

Update udev rules so that the toolkit can communicate with your neural compute stick

[install_NCS_udev_rules.sh] udev rules installed

作業が終わると、勝手に OpenCV 4.0.1 がインストールされます。

他の環境に影響を及ぼしたくない方は、導入完了後に環境変数をコネてください。

● OpenVINOの動作確認

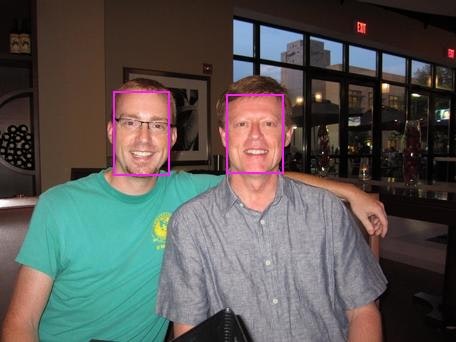

顔検出ネットワークのサンプルを使用して動作を確認します。

まずは、 Neural Compute Stick 2 を RaspberryPi へ接続し、次のコマンドを実行します。

なお、 **<path_to_image>**の部分は、顔が含まれる静止画ファイルのパスへ各自で読み替える必要があります。

$ cd inference_engine_vpu_arm/deployment_tools/inference_engine/samples

$ mkdir build && cd build

$ cmake .. -DCMAKE_BUILD_TYPE=Release -DCMAKE_CXX_FLAGS="-march=armv7-a"

$ make -j2 object_detection_sample_ssd

$ wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.bin

$ wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.xml

$ ./armv7l/Release/object_detection_sample_ssd -m face-detection-adas-0001.xml -d MYRIAD -i <path_to_image>

Scanning dependencies of target format_reader

Scanning dependencies of target ie_cpu_extension

[ 0%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_argmax.cpp.o

[ 0%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/MnistUbyte.cpp.o

[ 4%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/bmp.cpp.o

[ 8%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/format_reader.cpp.o

[ 12%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_base.cpp.o

[ 16%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/opencv_wraper.cpp.o

[ 20%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_ctc_greedy.cpp.o

[ 20%] Linking CXX shared library ../../armv7l/Release/lib/libformat_reader.so

[ 20%] Built target format_reader

[ 24%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_detectionoutput.cpp.o

[ 24%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_gather.cpp.o

[ 28%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_grn.cpp.o

[ 32%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_interp.cpp.o

[ 36%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_list.cpp.o

[ 36%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_mvn.cpp.o

[ 40%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_normalize.cpp.o

[ 44%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_pad.cpp.o

[ 44%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_powerfile.cpp.o

[ 48%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_priorbox.cpp.o

[ 52%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_priorbox_clustered.cpp.o

[ 56%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_proposal.cpp.o

[ 56%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_psroi.cpp.o

[ 60%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_region_yolo.cpp.o

[ 64%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_reorg_yolo.cpp.o

[ 68%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_resample.cpp.o

[ 68%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_simplernms.cpp.o

[ 72%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_spatial_transformer.cpp.o

[ 76%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/simple_copy.cpp.o

Scanning dependencies of target gflags_nothreads_static

[ 80%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags.cc.o

[ 84%] Linking CXX shared library ../armv7l/Release/lib/libcpu_extension.so

[ 84%] Built target ie_cpu_extension

[ 88%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_reporting.cc.o

[ 88%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_completions.cc.o

[ 92%] Linking CXX static library ../../armv7l/Release/lib/libgflags_nothreads.a

[ 92%] Built target gflags_nothreads_static

Scanning dependencies of target object_detection_sample_ssd

[ 96%] Building CXX object object_detection_sample_ssd/CMakeFiles/object_detection_sample_ssd.dir/main.cpp.o

[100%] Linking CXX executable ../armv7l/Release/object_detection_sample_ssd

[100%] Built target object_detection_sample_ssd

● Sample results

**Runtime log**

[ INFO ] InferenceEngine:

API version ............ 1.4

Build .................. 19154

Parsing input parameters

[ INFO ] Files were added: 1

[ INFO ] 000003.jpg

[ INFO ] Loading plugin

API version ............ 1.5

Build .................. 19154

Description ....... myriadPlugin

[ INFO ] Loading network files:

face-detection-adas-0001.xml

face-detection-adas-0001.bin

[ INFO ] Preparing input blobs

[ INFO ] Batch size is 1

[ INFO ] Preparing output blobs

[ INFO ] Loading model to the plugin

[ WARNING ] Image is resized from (456, 342) to (672, 384)

[ INFO ] Batch size is 1

[ INFO ] Start inference (1 iterations)

[ INFO ] Processing output blobs

[0,1] element, prob = 1 (113.833,91.5117)-(170.777,176.511) batch id : 0 WILL BE PRINTED!

[1,1] element, prob = 1 (226.887,94.4341)-(283.887,173.672) batch id : 0 WILL BE PRINTED!

[2,1] element, prob = 0.0309601 (360.258,206.569)-(385.195,237.63) batch id : 0

[3,1] element, prob = 0.0292053 (408.129,129.419)-(420.152,148.957) batch id : 0

[4,1] element, prob = 0.0263367 (356.918,199.055)-(373.395,220.764) batch id : 0

[5,1] element, prob = 0.0258026 (366.492,213.583)-(382.523,232.286) batch id : 0

[6,1] element, prob = 0.0252533 (366.27,198.053)-(383.191,223.102) batch id : 0

[7,1] element, prob = 0.0243988 (402.34,131.84)-(417.926,161.064) batch id : 0

[8,1] element, prob = 0.0234528 (162.873,23.8799)-(408.797,283.052) batch id : 0

[9,1] element, prob = 0.0227356 (359.367,165.406)-(371.391,183.691) batch id : 0

[10,1] element, prob = 0.0223236 (404.344,119.399)-(425.719,159.311) batch id : 0

[11,1] element, prob = 0.0219727 (357.586,180.352)-(370.5,200.391) batch id : 0

[12,1] element, prob = 0.0216064 (350.684,193.043)-(387.645,241.805) batch id : 0

[13,1] element, prob = 0.021286 (353.578,176.344)-(375.398,209.742) batch id : 0

[14,1] element, prob = 0.0209961 (387.645,93.6826)-(400.559,113.388) batch id : 0

[15,1] element, prob = 0.0205231 (367.383,185.695)-(380.297,201.393) batch id : 0

[16,1] element, prob = 0.020462 (386.754,129.252)-(400.559,148.623) batch id : 0

[17,1] element, prob = 0.0201874 (418.371,74.4785)-(434.848,105.372) batch id : 0

[18,1] element, prob = 0.0201874 (181.576,128.667)-(232.898,181.854) batch id : 0

[19,1] element, prob = 0.0201263 (414.363,79.1543)-(426.832,98.5254) batch id : 0

[20,1] element, prob = 0.0201263 (414.586,114.139)-(427.055,132.675) batch id : 0

[21,1] element, prob = 0.0200195 (361.816,181.854)-(383.191,214.919) batch id : 0

[22,1] element, prob = 0.0199127 (403.676,128.5)-(416.145,147.371) batch id : 0

[23,1] element, prob = 0.0199127 (401.227,91.2612)-(418.148,121.153) batch id : 0

[24,1] element, prob = 0.0195007 (358.031,213.75)-(373.172,231.451) batch id : 0

[25,1] element, prob = 0.019455 (385.418,107.877)-(403.23,140.273) batch id : 0

[26,1] element, prob = 0.0193481 (401.004,108.461)-(417.035,140.023) batch id : 0

[27,1] element, prob = 0.0192871 (378.07,85.4165)-(390.984,105.79) batch id : 0

[28,1] element, prob = 0.0192566 (413.918,71.6396)-(426.832,91.5117) batch id : 0

[29,1] element, prob = 0.0190887 (390.094,86.9194)-(401.672,105.122) batch id : 0

[30,1] element, prob = 0.0190887 (347.344,172.503)-(382.078,225.272) batch id : 0

[31,1] element, prob = 0.0190887 (360.258,167.243)-(392.766,236.127) batch id : 0

[32,1] element, prob = 0.0189819 (402.117,98.1914)-(414.141,115.225) batch id : 0

[33,1] element, prob = 0.0189362 (389.648,113.137)-(401.227,131.005) batch id : 0

[34,1] element, prob = 0.0189362 (401.895,112.72)-(413.918,130.922) batch id : 0

[35,1] element, prob = 0.0187836 (8.01562,29.6411)-(237.574,287.561) batch id : 0

[36,1] element, prob = 0.0186768 (401.227,78.1523)-(419.484,108.879) batch id : 0

[37,1] element, prob = 0.0186005 (381.188,128.167)-(392.766,145.2) batch id : 0

[38,1] element, prob = 0.0184479 (346.23,206.235)-(375.621,235.292) batch id : 0

[39,1] element, prob = 0.0184021 (442.195,324.8)-(456,342) batch id : 0

[40,1] element, prob = 0.0183411 (355.137,92.7642)-(368.496,111.133) batch id : 0

[41,1] element, prob = 0.0183411 (367.828,222.1)-(382.078,240.803) batch id : 0

[42,1] element, prob = 0.0182953 (389.648,138.52)-(400.781,156.555) batch id : 0

[43,1] element, prob = 0.0182953 (346.008,197.385)-(362.039,218.426) batch id : 0

[44,1] element, prob = 0.0182495 (395.438,81.8262)-(423.047,130.922) batch id : 0

[45,1] element, prob = 0.0182037 (339.996,177.68)-(366.715,204.732) batch id : 0

[46,1] element, prob = 0.0181427 (402.117,150.794)-(417.703,180.686) batch id : 0

[47,1] element, prob = 0.0179901 (379.406,95.5195)-(391.43,114.724) batch id : 0

[48,1] element, prob = 0.0179901 (409.242,137.685)-(421.711,156.722) batch id : 0

[49,1] element, prob = 0.0178528 (419.262,119.399)-(435.293,152.464) batch id : 0

[50,1] element, prob = 0.0178528 (419.707,131.506)-(436.184,166.074) batch id : 0

[51,1] element, prob = 0.0177307 (403.23,138.52)-(414.809,155.72) batch id : 0

[52,1] element, prob = 0.0177307 (379.184,124.409)-(396.105,154.134) batch id : 0

[53,1] element, prob = 0.0177307 (343.113,191.54)-(368.051,225.606) batch id : 0

[54,1] element, prob = 0.0176849 (407.461,154.468)-(419.93,175.843) batch id : 0

[55,1] element, prob = 0.0174408 (407.016,102.951)-(430.617,146.035) batch id : 0

[56,1] element, prob = 0.0174408 (437.965,120.819)-(453.996,153.549) batch id : 0

[57,1] element, prob = 0.017395 (381.41,111.467)-(394.77,131.673) batch id : 0

[58,1] element, prob = 0.0173492 (379.406,182.188)-(391.875,198.554) batch id : 0

[59,1] element, prob = 0.0172577 (436.184,76.8164)-(453.996,108.378) batch id : 0

[60,1] element, prob = 0.0171967 (-2.49792,-2.47357)-(25.4106,22.9614) batch id : 0

[61,1] element, prob = 0.0171967 (409.02,90.3428)-(426.832,121.236) batch id : 0

[62,1] element, prob = 0.0171204 (421.266,139.021)-(433.734,159.394) batch id : 0

[63,1] element, prob = 0.0170746 (411.469,97.6904)-(423.492,115.559) batch id : 0

[64,1] element, prob = 0.0170746 (418.816,91.8457)-(434.402,123.24) batch id : 0

[65,1] element, prob = 0.0169373 (402.562,84.3728)-(415.031,104.203) batch id : 0

[66,1] element, prob = 0.0169373 (367.16,169.497)-(379.184,186.864) batch id : 0

[67,1] element, prob = 0.0169373 (360.035,162.483)-(380.074,189.87) batch id : 0

[68,1] element, prob = 0.0167999 (438.188,92.9312)-(454.664,124.326) batch id : 0

[69,1] element, prob = 0.0167999 (439.078,106.625)-(454.664,138.687) batch id : 0

[70,1] element, prob = 0.0166779 (347.566,183.19)-(359.145,200.558) batch id : 0

[71,1] element, prob = 0.0166779 (385.641,82.1602)-(403.008,110.716) batch id : 0

[72,1] element, prob = 0.0165405 (380.965,140.774)-(392.098,157.975) batch id : 0

[73,1] element, prob = 0.0164948 (332.426,207.905)-(347.121,230.616) batch id : 0

[74,1] element, prob = 0.0164948 (406.793,70.3037)-(431.73,115.058) batch id : 0

[75,1] element, prob = 0.0164948 (335.098,183.357)-(386.754,260.174) batch id : 0

[76,1] element, prob = 0.0164032 (349.125,88.7563)-(362.93,108.127) batch id : 0

[77,1] element, prob = 0.0164032 (358.699,158.893)-(370.277,176.845) batch id : 0

[78,1] element, prob = 0.0164032 (-6.20654,312.442)-(29.3071,348.012) batch id : 0

[79,1] element, prob = 0.0163574 (365.824,89.1738)-(383.637,120.568) batch id : 0

[80,1] element, prob = 0.0162811 (348.012,211.746)-(360.48,231.117) batch id : 0

[81,1] element, prob = 0.0162811 (377.18,89.3408)-(394.102,120.735) batch id : 0

[82,1] element, prob = 0.0162811 (396.773,101.281)-(422.156,146.703) batch id : 0

[83,1] element, prob = 0.0162811 (397.887,107.543)-(453.105,210.577) batch id : 0

[84,1] element, prob = 0.0162354 (357.586,224.271)-(372.281,241.304) batch id : 0

[85,1] element, prob = 0.0162354 (419.039,105.706)-(434.18,137.602) batch id : 0

[86,1] element, prob = 0.0161896 (403.453,157.14)-(415.477,174.006) batch id : 0

[87,1] element, prob = 0.0161896 (380.742,171.668)-(393.211,188.701) batch id : 0

[88,1] element, prob = 0.0161896 (346.008,221.933)-(361.594,243.308) batch id : 0

[89,1] element, prob = 0.0161896 (382.078,117.396)-(409.242,160.98) batch id : 0

[90,1] element, prob = 0.0161896 (385.863,130.922)-(403.676,161.648) batch id : 0

[91,1] element, prob = 0.016098 (371.391,86.168)-(398.555,130.588) batch id : 0

[92,1] element, prob = 0.0160522 (285,86.6689)-(342,169.163) batch id : 0

[93,1] element, prob = 0.015976 (381.188,152.38)-(393.656,170.917) batch id : 0

[94,1] element, prob = 0.015976 (429.059,124.493)-(453.105,170.583) batch id : 0

[95,1] element, prob = 0.0159302 (368.719,96.3545)-(381.188,115.726) batch id : 0

[96,1] element, prob = 0.0159302 (380.742,74.0193)-(408.352,120.067) batch id : 0

[97,1] element, prob = 0.0158539 (378.07,166.825)-(395.438,196.55) batch id : 0

[98,1] element, prob = 0.0158081 (347.789,154.217)-(359.812,171.835) batch id : 0

[99,1] element, prob = 0.0158081 (384.75,149.625)-(419.484,218.76) batch id : 0

[100,1] element, prob = 0.0155487 (387.199,149.792)-(405.012,180.686) batch id : 0

[101,1] element, prob = 0.0155487 (348.902,155.303)-(379.184,199.723) batch id : 0

[102,1] element, prob = 0.0155487 (343.336,215.086)-(367.828,247.816) batch id : 0

[103,1] element, prob = 0.0155487 (351.129,84.7485)-(411.246,172.67) batch id : 0

[104,1] element, prob = 0.0155182 (311.273,131.757)-(364.266,182.522) batch id : 0

[105,1] element, prob = 0.0154266 (368.719,112.97)-(380.742,130.337) batch id : 0

[106,1] element, prob = 0.0154266 (420.375,111.718)-(432.398,129.753) batch id : 0

[107,1] element, prob = 0.0154266 (368.496,141.442)-(380.074,158.643) batch id : 0

[108,1] element, prob = 0.0152969 (378.07,146.619)-(394.992,177.179) batch id : 0

[109,1] element, prob = 0.0152969 (377.848,101.03)-(426.387,155.303) batch id : 0

[110,1] element, prob = 0.015213 (412.582,57.6541)-(425.051,75.6892) batch id : 0

[111,1] element, prob = 0.015213 (356.695,126.664)-(399,189.035) batch id : 0

[112,1] element, prob = 0.0151367 (404.566,162.817)-(417.035,183.19) batch id : 0

[113,1] element, prob = 0.0150909 (402.117,69.26)-(414.586,88.1719) batch id : 0

[114,1] element, prob = 0.0150909 (435.738,134.763)-(449.543,156.138) batch id : 0

[115,1] element, prob = 0.0150909 (348.457,77.2756)-(374.285,117.229) batch id : 0

[ INFO ] Image out_0.bmp created!

total inference time: 155.659

Average running time of one iteration: 155.659 ms

Throughput: 6.42431 FPS

[ INFO ] Execution successful
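The throughput at the end of the log follows directly from the average iteration time. A minimal sketch of that arithmetic, using the 155.659 ms figure reported above; `throughput_fps` is a hypothetical helper name, not something the sample provides.

```python
def throughput_fps(avg_iteration_ms: float) -> float:
    """Convert an average per-iteration latency in milliseconds to frames per second."""
    if avg_iteration_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / avg_iteration_ms


# Average running time of one iteration, taken from the log
fps = throughput_fps(155.659)
print(f"{fps:.2f} FPS")  # prints "6.42 FPS"
```

The log shows a few more digits because the sample computes the value from the unrounded time.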

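To my surprise, OpenVINO appears to be integrated into OpenCV.

I only now realized that the DNN module of the OpenCV build bundled with OpenVINO runs on OpenVINO's Inference Engine underneath.

The short program below appears to do the same thing as the sample above.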

import cv2 as cv

# Load the model
net = cv.dnn.readNet('face-detection-adas-0001.xml', 'face-detection-adas-0001.bin')

# Specify target device
net.setPreferableTarget(cv.dnn.DNN_TARGET_MYRIAD)

# Read an image
frame = cv.imread('/path/to/image')
if frame is None:
    raise FileNotFoundError('Could not read the input image')

# Prepare input blob and perform an inference
blob = cv.dnn.blobFromImage(frame, size=(672, 384), ddepth=cv.CV_8U)
net.setInput(blob)
out = net.forward()

# Draw detected faces on the frame
for detection in out.reshape(-1, 7):
    confidence = float(detection[2])
    xmin = int(detection[3] * frame.shape[1])
    ymin = int(detection[4] * frame.shape[0])
    xmax = int(detection[5] * frame.shape[1])
    ymax = int(detection[6] * frame.shape[0])
    # Draw only confident detections; 0.5 is an assumed threshold
    if confidence > 0.5:
        cv.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(0, 255, 0))

# Save the annotated frame
cv.imwrite('out.png', frame)
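Each row of the detection output holds seven values: [image_id, label, confidence, xmin, ymin, xmax, ymax], with the coordinates normalized to the 0..1 range. Most rows in the log above have a confidence around 0.02, so a threshold is normally applied before drawing. Below is a dependency-free sketch of that filtering step. `filter_detections` and the 0.5 threshold are illustrative assumptions, the negative image_id check reflects my understanding of how the Inference Engine marks the end of the list, and the sample rows are approximations derived from the log (image size 456x342).

```python
from typing import List, Sequence, Tuple

# (label, confidence, xmin, ymin, xmax, ymax) in pixels
Box = Tuple[int, float, int, int, int, int]


def filter_detections(rows: Sequence[Sequence[float]],
                      image_width: int,
                      image_height: int,
                      threshold: float = 0.5) -> List[Box]:
    """Keep rows above the confidence threshold and convert boxes to pixels."""
    boxes: List[Box] = []
    for image_id, label, confidence, xmin, ymin, xmax, ymax in rows:
        if image_id < 0:
            # Assumed end-of-list marker: no valid detections follow
            break
        if confidence <= threshold:
            continue
        boxes.append((int(label), float(confidence),
                      int(xmin * image_width), int(ymin * image_height),
                      int(xmax * image_width), int(ymax * image_height)))
    return boxes


# Approximate rows derived from the log (first and third entries)
sample_rows = [
    [0, 1, 1.0, 0.24963, 0.26758, 0.37451, 0.51611],
    [0, 1, 0.0309601, 0.79004, 0.60400, 0.84473, 0.69482],
]
faces = filter_detections(sample_rows, image_width=456, image_height=342)
print(faces)
```

With these two rows only the first survives the threshold, which matches the entries marked "WILL BE PRINTED!" in the log.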

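◆ Trying it with my own MobileNet-SSD

Now the real work begins.

I will take the repository below, which was boosted to blazing speed with five Neural Compute Sticks, customize it for the Neural Compute Stick 2, and convert the model for OpenVINO.

"Neural Compute Stick × 5" vs "Neural Compute Stick 2 × 1"

Github - PINTO0309 - MobileNet-SSD-RealSense

● Caffe model --> OpenVINO model conversion

Run the following on the work PC with the full OpenVINO installation to convert the caffemodel into an OpenVINO IR model, written to the lrmodel directory.

Only the runtime environment is installed on the RaspberryPi.

Note that model conversion cannot be run on the RaspberryPi.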

$ cd ~

$ git clone https://github.com/PINTO0309/MobileNet-SSD-RealSense.git

$ cd MobileNet-SSD-RealSense

$ cp caffemodel/MobileNetSSD/deploy.prototxt caffemodel/MobileNetSSD/MobileNetSSD_deploy.prototxt

$ sudo python3 /opt/intel/computer_vision_sdk/deployment_tools/model_optimizer/mo.py \

--input_model caffemodel/MobileNetSSD/MobileNetSSD_deploy.caffemodel \

--output_dir lrmodel/MobileNetSSD \

--data_type FP16

**[mo.py] Conversion options**

optional arguments:

-h, --help show this help message and exit

--framework {tf,caffe,mxnet,kaldi,onnx}

Name of the framework used to train the input model.

Framework-agnostic parameters:

--input_model INPUT_MODEL, -w INPUT_MODEL, -m INPUT_MODEL

Tensorflow*: a file with a pre-trained model (binary

or text .pb file after freezing). Caffe*: a model

proto file with model weights

--model_name MODEL_NAME, -n MODEL_NAME

Model_name parameter passed to the final create_ir

transform. This parameter is used to name a network in

a generated IR and output .xml/.bin files.

--output_dir OUTPUT_DIR, -o OUTPUT_DIR

Directory that stores the generated IR. By default, it

is the directory from where the Model Optimizer is

launched.

--input_shape INPUT_SHAPE

Input shape(s) that should be fed to an input node(s)

of the model. Shape is defined as a comma-separated

list of integer numbers enclosed in parentheses or

square brackets, for example [1,3,227,227] or

(1,227,227,3), where the order of dimensions depends

on the framework input layout of the model. For

example, [N,C,H,W] is used for Caffe* models and

[N,H,W,C] for TensorFlow* models. Model Optimizer

performs necessary transformations to convert the

shape to the layout required by Inference Engine

(N,C,H,W). The shape should not contain undefined

dimensions (? or -1) and should fit the dimensions

defined in the input operation of the graph. If there

are multiple inputs in the model, --input_shape should

contain definition of shape for each input separated

by a comma, for example: [1,3,227,227],[2,4] for a

model with two inputs with 4D and 2D shapes.

--scale SCALE, -s SCALE

All input values coming from original network inputs

will be divided by this value. When a list of inputs

is overridden by the --input parameter, this scale is

not applied for any input that does not match with the

original input of the model.

--reverse_input_channels

Switch the input channels order from RGB to BGR (or

vice versa). Applied to original inputs of the model

if and only if a number of channels equals 3. Applied

after application of --mean_values and --scale_values

options, so numbers in --mean_values and

--scale_values go in the order of channels used in the

original model.

--log_level {CRITICAL,ERROR,WARN,WARNING,INFO,DEBUG,NOTSET}

Logger level

--input INPUT The name of the input operation of the given model.

Usually this is a name of the input placeholder of the

model.

--output OUTPUT The name of the output operation of the model. For

TensorFlow*, do not add :0 to this name.

--mean_values MEAN_VALUES, -ms MEAN_VALUES

Mean values to be used for the input image per

channel. Values to be provided in the (R,G,B) or

[R,G,B] format. Can be defined for desired input of

the model, for example: "--mean_values

data[255,255,255],info[255,255,255]". The exact

meaning and order of channels depend on how the

original model was trained.

--scale_values SCALE_VALUES

Scale values to be used for the input image per

channel. Values are provided in the (R,G,B) or [R,G,B]

format. Can be defined for desired input of the model,

for example: "--scale_values

data[255,255,255],info[255,255,255]". The exact

meaning and order of channels depend on how the

original model was trained.

--data_type {FP16,FP32,half,float}

Data type for all intermediate tensors and weights. If

original model is in FP32 and --data_type=FP16 is

specified, all model weights and biases are quantized

to FP16.

--disable_fusing Turn off fusing of linear operations to Convolution

--disable_resnet_optimization

Turn off resnet optimization

--finegrain_fusing FINEGRAIN_FUSING

Regex for layers/operations that won't be fused.

Example: --finegrain_fusing Convolution1,.*Scale.*

--disable_gfusing Turn off fusing of grouped convolutions

--move_to_preprocess Move mean values to IR preprocess section

--extensions EXTENSIONS

Directory or a comma separated list of directories

with extensions. To disable all extensions including

those that are placed at the default location, pass an

empty string.

--batch BATCH, -b BATCH

Input batch size

--version Version of Model Optimizer

--silent Prevent any output messages except those that

correspond to log level equals ERROR, that can be set

with the following option: --log_level. By default,

log level is already ERROR.

--freeze_placeholder_with_value FREEZE_PLACEHOLDER_WITH_VALUE

Replaces input layer with constant node with provided

value, e.g.: "node_name->True"

--generate_deprecated_IR_V2

Force to generate legacy/deprecated IR V2 to work with

previous versions of the Inference Engine. The

resulting IR may or may not be correctly loaded by

Inference Engine API (including the most recent and

old versions of Inference Engine) and provided as a

partially-validated backup option for specific

deployment scenarios. Use it at your own discretion.

By default, without this option, the Model Optimizer

generates IR V3.

**Conversion log**

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: ~/git/MobileNet-SSD-RealSense/caffemodel/MobileNetSSD/MobileNetSSD_deploy.caffemodel

- Path for generated IR: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD

- IR output name: MobileNetSSD_deploy

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

Caffe specific parameters:

- Enable resnet optimization: True

- Path to the Input prototxt: ~/git/MobileNet-SSD-RealSense/caffemodel/MobileNetSSD/MobileNetSSD_deploy.prototxt

- Path to CustomLayersMapping.xml: Default

- Path to a mean file: Not specified

- Offsets for a mean file: Not specified

Model Optimizer version: 1.5.12.49d067a0

[ SUCCESS ] Generated IR model.

[ SUCCESS ] XML file: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml

[ SUCCESS ] BIN file: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin

[ SUCCESS ] Total execution time: 2.01 seconds.

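● Implementation

For this article I threw together a single-threaded, synchronous implementation.

Next time I will verify a multi-process, asynchronous implementation that makes lavish use of the NCS2.

Because it is single-threaded, the program is very simple.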

import time
import traceback

import cv2
import numpy as np

# Strings drawn on each frame, refreshed every 15 frames
fps = ""
detectfps = ""
framecount = 0
detectframecount = 0
time1 = 0
time2 = 0

# Detections at or below this score (percent) are not drawn
min_score_percent = 60

# PASCAL VOC class names, indexed by class_id
LABELS = ('background',
          'aeroplane', 'bicycle', 'bird', 'boat',
          'bottle', 'bus', 'car', 'cat', 'chair',
          'cow', 'diningtable', 'dog', 'horse',
          'motorbike', 'person', 'pottedplant',
          'sheep', 'sofa', 'train', 'tvmonitor')

camera_width = 320
camera_height = 240

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FPS, 30)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, camera_width)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, camera_height)

# Load the IR model through the OpenCV DNN module and run it on the NCS2
net = cv2.dnn.readNet('lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml', 'lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin')
net.setPreferableTarget(cv2.dnn.DNN_TARGET_MYRIAD)

try:
    while True:
        t1 = time.perf_counter()

        ret, color_image = cap.read()
        if not ret:
            break

        height = color_image.shape[0]
        width = color_image.shape[1]

        # Scale pixels to roughly [-1, 1]: (pixel - 127.5) * 0.007843
        blob = cv2.dnn.blobFromImage(color_image, 0.007843, size=(300, 300), mean=(127.5,127.5,127.5), swapRB=False, crop=False)
        net.setInput(blob)
        out = net.forward()

        # Each detection is 7 values:
        # [image_id, class_id, score, xmin, ymin, xmax, ymax]
        out = out.flatten()

        for box_index in range(min(100, out.size // 7)):
            base_index = box_index * 7
            detection = out[base_index:base_index + 7]

            # A class_id of 0.0 means there are no more valid detections.
            # (The original tested out[box_index + 1], which only reads the
            # class_id field for the first detection.)
            if detection[1] == 0.0:
                break

            # Skip rows that contain NaN or infinity
            if not np.all(np.isfinite(detection)):
                continue

            if box_index == 0:
                detectframecount += 1

            class_id = int(detection[1])
            percentage = int(detection[2] * 100)
            if percentage <= min_score_percent:
                continue

            # Convert normalized coordinates to pixels in the camera frame
            box_left = int(detection[3] * width)
            box_top = int(detection[4] * height)
            box_right = int(detection[5] * width)
            box_bottom = int(detection[6] * height)

            label_text = LABELS[class_id] + " (" + str(percentage) + "%)"

            box_color = (255, 128, 0)
            box_thickness = 1
            cv2.rectangle(color_image, (box_left, box_top), (box_right, box_bottom), box_color, box_thickness)

            # Draw the label on a filled background just above the box
            label_background_color = (125, 175, 75)
            label_text_color = (255, 255, 255)

            label_size = cv2.getTextSize(label_text, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)[0]
            label_left = box_left
            label_top = box_top - label_size[1]
            if label_top < 1:
                label_top = 1
            label_right = label_left + label_size[0]
            label_bottom = label_top + label_size[1]
            cv2.rectangle(color_image, (label_left - 1, label_top - 1), (label_right + 1, label_bottom + 1), label_background_color, -1)
            cv2.putText(color_image, label_text, (label_left, label_bottom), cv2.FONT_HERSHEY_SIMPLEX, 0.5, label_text_color, 1)

        cv2.putText(color_image, fps, (width-170,15), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)
        cv2.putText(color_image, detectfps, (width-170,30), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)

        cv2.namedWindow('USB Camera', cv2.WINDOW_AUTOSIZE)
        cv2.imshow('USB Camera', cv2.resize(color_image, (width, height)))

        if cv2.waitKey(1)&0xFF == ord('q'):
            break

        # FPS calculation
        framecount += 1
        if framecount >= 15:
            fps = "(Playback) {:.1f} FPS".format(time1/15)
            detectfps = "(Detection) {:.1f} FPS".format(detectframecount/time2)
            framecount = 0
            detectframecount = 0
            time1 = 0
            time2 = 0
        t2 = time.perf_counter()
        elapsedTime = t2-t1
        time1 += 1/elapsedTime
        time2 += elapsedTime

except:
    traceback.print_exc()

finally:
    # Release the camera and close the preview window
    cap.release()
    cv2.destroyAllWindows()
    print("\n\nFinished\n\n")
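The FPS bookkeeping at the end of the loop can be isolated into a small helper. The sketch below is one way to do that, not the code used for the measurements in this article. `FpsMeter` is a hypothetical class. It reports playback FPS as the mean of the per-frame rates and detection FPS as detected frames divided by elapsed time, following the program above.

```python
class FpsMeter:
    """Accumulate per-frame timings and report averages once per window."""

    def __init__(self, window: int = 15) -> None:
        self.window = window
        self._reset()

    def _reset(self) -> None:
        self.frames = 0
        self.detected_frames = 0
        self.rate_sum = 0.0     # sum of 1/elapsed over the window
        self.elapsed_sum = 0.0  # total seconds spent in the window

    def update(self, elapsed: float, detected: bool):
        """Record one frame; return (playback_fps, detection_fps) when the window is full, else None."""
        self.frames += 1
        self.detected_frames += int(detected)
        self.rate_sum += 1.0 / elapsed
        self.elapsed_sum += elapsed
        if self.frames < self.window:
            return None
        result = (self.rate_sum / self.frames,
                  self.detected_frames / self.elapsed_sum)
        self._reset()
        return result


meter = FpsMeter(window=15)
report = None
for _ in range(15):
    # Pretend every frame took 0.1 s and contained a detection
    report = meter.update(elapsed=0.1, detected=True)
print(report)
```

In the main loop, `elapsed` would be `t2 - t1` and `detected` would be true whenever the first output row is valid.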

2018/12/24: I improved the logic to call the OpenVINO Inference Engine API directly and obtained 21 FPS on a Core i7 machine, three times the RaspberryPi's performance, so I am adding that version here.

Note that this does not improve performance on the RaspberryPi3.

import sys

import numpy as np

import cv2

from os import system

import io, time

from os.path import isfile, join

import re

from openvino.inference_engine import IENetwork, IEPlugin

fps = ""

detectfps = ""

framecount = 0

detectframecount = 0

time1 = 0

time2 = 0

LABELS = ('background',

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

camera_width = 320

camera_height = 240

cap = cv2.VideoCapture(0)

cap.set(cv2.CAP_PROP_FPS, 30)

cap.set(cv2.CAP_PROP_FRAME_WIDTH, camera_width)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, camera_height)

plugin = IEPlugin(device="MYRIAD")

plugin.set_config({"VPU_FORCE_RESET": "NO"})

net = IENetwork("lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml", "lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin")

input_blob = next(iter(net.inputs))

exec_net = plugin.load(network=net)

try:
    while True:
        t1 = time.perf_counter()

        ret, color_image = cap.read()
        if not ret:
            break

        height = color_image.shape[0]
        width = color_image.shape[1]

        # Preprocess: resize, normalize to about [-1, 1], add a batch axis
        prepimg = cv2.resize(color_image, (300, 300))
        prepimg = prepimg - 127.5
        prepimg = prepimg * 0.007843
        prepimg = prepimg[np.newaxis, :, :, :]
        prepimg = prepimg.transpose((0, 3, 1, 2))  # NHWC to NCHW

        out = exec_net.infer(inputs={input_blob: prepimg})
        out = out["detection_out"].flatten()

        for box_index in range(100):
            base_index = box_index * 7
            # Stop at the first row whose class id is 0 (background / padding)
            if out[base_index + 1] == 0.0:
                break
            # Skip rows that contain NaN or infinity
            if not np.all(np.isfinite(out[base_index:base_index + 7])):
                continue
            if box_index == 0:
                detectframecount += 1

            object_info_overlay = out[base_index:base_index + 7]

            min_score_percent = 60
            source_image_width = width
            source_image_height = height

            base_index = 0
            class_id = object_info_overlay[base_index + 1]
            percentage = int(object_info_overlay[base_index + 2] * 100)
            if (percentage <= min_score_percent):
                continue

            # Box corners are normalized to 0..1, so scale them to pixels
            box_left = int(object_info_overlay[base_index + 3] * source_image_width)
            box_top = int(object_info_overlay[base_index + 4] * source_image_height)
            box_right = int(object_info_overlay[base_index + 5] * source_image_width)
            box_bottom = int(object_info_overlay[base_index + 6] * source_image_height)
            label_text = LABELS[int(class_id)] + " (" + str(percentage) + "%)"

            box_color = (255, 128, 0)
            box_thickness = 1
            cv2.rectangle(color_image, (box_left, box_top), (box_right, box_bottom), box_color, box_thickness)

            label_background_color = (125, 175, 75)
            label_text_color = (255, 255, 255)

            label_size = cv2.getTextSize(label_text, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)[0]
            label_left = box_left
            label_top = box_top - label_size[1]
            if (label_top < 1):
                label_top = 1
            label_right = label_left + label_size[0]
            label_bottom = label_top + label_size[1]
            cv2.rectangle(color_image, (label_left - 1, label_top - 1), (label_right + 1, label_bottom + 1), label_background_color, -1)
            cv2.putText(color_image, label_text, (label_left, label_bottom), cv2.FONT_HERSHEY_SIMPLEX, 0.5, label_text_color, 1)

        cv2.putText(color_image, fps, (width-170,15), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)
        cv2.putText(color_image, detectfps, (width-170,30), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)

        cv2.namedWindow('USB Camera', cv2.WINDOW_AUTOSIZE)
        cv2.imshow('USB Camera', cv2.resize(color_image, (width, height)))

        if cv2.waitKey(1)&0xFF == ord('q'):
            break

        # FPS calculation
        framecount += 1
        if framecount >= 15:
            fps = "(Playback) {:.1f} FPS".format(time1/15)
            detectfps = "(Detection) {:.1f} FPS".format(detectframecount/time2)
            framecount = 0
            detectframecount = 0
            time1 = 0
            time2 = 0
        t2 = time.perf_counter()
        elapsedTime = t2-t1
        time1 += 1/elapsedTime
        time2 += elapsedTime

except:
    import traceback
    traceback.print_exc()

finally:
    print("\n\nFinished\n\n")
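The preprocessing steps in the loop above (subtract 127.5, multiply by 0.007843, add a batch axis, reorder NHWC to NCHW) can be isolated as a small function. This is only a sketch: the name `preprocess` and the dummy all-white frame are illustrative assumptions, the frame is assumed to be already resized to 300x300, and the cast to float32 is my choice rather than something the script does.

```python
import numpy as np

def preprocess(image_hwc, mean=127.5, scale=0.007843):
    """Normalize an (H, W, 3) uint8 frame and return a (1, 3, H, W) float32 blob.

    Hypothetical helper: it mirrors the arithmetic of the script,
    but the resize to 300x300 is assumed to have happened already.
    """
    x = image_hwc.astype(np.float32)
    x = (x - mean) * scale             # 0..255 -> roughly -1..1
    x = x[np.newaxis, :, :, :]         # add batch axis: NHWC
    return x.transpose((0, 3, 1, 2))   # NHWC -> NCHW

# Dummy all-white frame standing in for a resized camera image
dummy = np.full((300, 300, 3), 255, dtype=np.uint8)
blob = preprocess(dummy)
print(blob.shape, blob.dtype)
```

With these constants a pixel value of 255 maps to roughly 1.0 and 0 maps to roughly -1.0, so the network receives inputs in about the range [-1, 1].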

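◆ Results

"Let's see what this NCS2 performance is all about!"

RaspberryPi3 + NCS2 x 1 + OpenVINO + SingleThread synchronous [9-10 FPS]

RaspberryPi3 + NCS x 5 + NCSDK + MultiProcess asynchronous [30 FPS+]

Core i7 + NCS2 x 1 + OpenVINO + SingleThread synchronous [21 FPS+]

Σ(゚Д`;) Wh... wh... whaaaaaaat?!!

A crushing defeat...

As expected, a single stick was no match for five of the older model.

Still, it delivers twice the performance of the older model.

Because processing is synchronous, the boxes never drift away from the objects.

The boxes are drawn very cleanly.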

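◆ Conclusion

- Perhaps because parts of my implementation are inefficient, performance was only twice that of the NCS.

- On the RaspberryPi3, I would like three times this performance.

- Implemented with MultiProcess as in the past article, two sticks give about 20 FPS.

- Next time, I plan to go overboard and also implement MultiStick + MultiProcess (planning on four NCS2 sticks).

- Intel exaggerates far too much.

- That said, running the improved logic on a Core i7 machine gives three times the performance, 21 FPS. The ARM CPU may simply be too weak.

- Seen another way, getting this much performance out of a RaspberryPi is arguably good enough for practical use.

- This will only make sense to people who have suffered through installing NCSDK, but installing OpenVINO takes a mere 15 minutes. Woo-hoo!!!

- Surprisingly many generous people show their support for this utterly boring verification repository, which is not even a library.

◆ Reference articles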

https://software.intel.com/en-us/articles/OpenVINO-Install-RaspberryPI

Boost RaspberryPi3 with Neural Compute Stick 2 (1 x NCS2) and feel the explosion performance of MobileNet-SSD (If it is Core i7, 21 FPS)

◆ Previous article

Forcing YoloV3 to run on the CPU alone with OpenVINO [4-5 FPS / CPU only] 【Part3】

◆ Introduction

For the first time in a while I had a solid block of free time, so this time I wrote the whole program properly before posting.

There is no official sample, so everything is implemented from scratch.

The title is quite an exaggeration. Sorry.

Neural Compute Stick 2 (NCS2) gained RaspberryPi support on December 19, 2018, so I reuse the logic from a past article and measure how much the performance improves.

[Past article] [Detection rate about 30 FPS] Achieving a MobileNet-SSD object detection rate "slightly slower than TX2" on RaspberryPi3 Model B (non-Plus) while supporting MultiModel (VOC + WIDER FACE)

Intel officially claims 8 times the performance of the original Neural Compute Stick. Is that really true?

◆ Environment

- Ubuntu 16.04 (Working PC)

- RaspberryPi 3 (Raspbian Stretch)

- Neural Compute Stick 2 (NCS2)

- MicroSDCard 32GB

- USB Camera (PlaystationEye)

- OpenVINO R5 2018.5.445

- OpenCV 4.0.1-openvino

◆ Installation procedure

● Installing OpenVINO on the working PC

Please refer to either my article "Installing Ubuntu 16.04 + OpenVINO on LattePanda Alpha 864 (OS not included) to enjoy blazing-fast Semantic Segmentation with Neural Compute Stick (NCS1) and Neural Compute Stick 2 (NCS2)" or the official guide "Install the Intel® Distribution of OpenVINO™ Toolkit for Raspbian OS".

OpenVINO must be fully installed on the working PC.

● Installing OpenVINO on the RaspberryPi3

$ sudo apt update

$ sudo apt upgrade

$ wget "https://drive.google.com/open?id=1rBl_3kU4gsx-x2NG2I5uIhvA3fPqm8uE" -O l_openvino_toolkit_ie_p_2018.5.445.tgz

$ tar -zxf l_openvino_toolkit_ie_p_2018.5.445.tgz

$ rm l_openvino_toolkit_ie_p_2018.5.445.tgz

$ sed -i "s|<INSTALLDIR>|$(pwd)/inference_engine_vpu_arm|" inference_engine_vpu_arm/bin/setupvars.sh

The sed command above rewrites the following line in setupvars.sh:

INSTALLDIR=<INSTALLDIR>

so that it becomes:

INSTALLDIR=/home/pi/inference_engine_vpu_arm

$ nano ~/.bashrc

### Append the following line

source /home/pi/inference_engine_vpu_arm/bin/setupvars.sh

$ source ~/.bashrc

### Success if the following is displayed

[setupvars.sh] OpenVINO environment initialized

$ sudo usermod -a -G users "$(whoami)"

$ sudo reboot

$ uname -a

Linux raspberrypi 4.14.79-v7+ #1159 SMP Sun Nov 4 17:50:20 GMT 2018 armv7l GNU/Linux

$ sh inference_engine_vpu_arm/install_dependencies/install_NCS_udev_rules.sh

### The following is displayed

Update udev rules so that the toolkit can communicate with your neural compute stick

[install_NCS_udev_rules.sh] udev rules installed

Note that by the time this procedure finishes, OpenCV 4.0.1 has been installed as well, without asking.

If you do not want this to affect your other environments, edit the environment variables after the installation is complete.

● OpenVINO operation check

Check the installation using the face detection network sample.

First, connect the Neural Compute Stick 2 to the RaspberryPi and run the following commands.

Replace <path_to_image> with the path to a still image file that contains a face.

$ cd inference_engine_vpu_arm/deployment_tools/inference_engine/samples

$ mkdir build && cd build

$ cmake .. -DCMAKE_BUILD_TYPE=Release -DCMAKE_CXX_FLAGS="-march=armv7-a"

$ make -j2 object_detection_sample_ssd

$ wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.bin

$ wget --no-check-certificate https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.xml

$ ./armv7l/Release/object_detection_sample_ssd -m face-detection-adas-0001.xml -d MYRIAD -i <path_to_image>

Scanning dependencies of target format_reader

Scanning dependencies of target ie_cpu_extension

[ 0%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_argmax.cpp.o

[ 0%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/MnistUbyte.cpp.o

[ 4%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/bmp.cpp.o

[ 8%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/format_reader.cpp.o

[ 12%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_base.cpp.o

[ 16%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/opencv_wraper.cpp.o

[ 20%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_ctc_greedy.cpp.o

[ 20%] Linking CXX shared library ../../armv7l/Release/lib/libformat_reader.so

[ 20%] Built target format_reader

[ 24%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_detectionoutput.cpp.o

[ 24%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_gather.cpp.o

[ 28%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_grn.cpp.o

[ 32%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_interp.cpp.o

[ 36%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_list.cpp.o

[ 36%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_mvn.cpp.o

[ 40%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_normalize.cpp.o

[ 44%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_pad.cpp.o

[ 44%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_powerfile.cpp.o

[ 48%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_priorbox.cpp.o

[ 52%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_priorbox_clustered.cpp.o

[ 56%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_proposal.cpp.o

[ 56%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_psroi.cpp.o

[ 60%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_region_yolo.cpp.o

[ 64%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_reorg_yolo.cpp.o

[ 68%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_resample.cpp.o

[ 68%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_simplernms.cpp.o

[ 72%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/ext_spatial_transformer.cpp.o

[ 76%] Building CXX object ie_cpu_extension/CMakeFiles/ie_cpu_extension.dir/simple_copy.cpp.o

Scanning dependencies of target gflags_nothreads_static

[ 80%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags.cc.o

[ 84%] Linking CXX shared library ../armv7l/Release/lib/libcpu_extension.so

[ 84%] Built target ie_cpu_extension

[ 88%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_reporting.cc.o

[ 88%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_completions.cc.o

[ 92%] Linking CXX static library ../../armv7l/Release/lib/libgflags_nothreads.a

[ 92%] Built target gflags_nothreads_static

Scanning dependencies of target object_detection_sample_ssd

[ 96%] Building CXX object object_detection_sample_ssd/CMakeFiles/object_detection_sample_ssd.dir/main.cpp.o

[100%] Linking CXX executable ../armv7l/Release/object_detection_sample_ssd

[100%] Built target object_detection_sample_ssd

● Sample operation result

**Runtime log**

[ INFO ] InferenceEngine:

API version ............ 1.4

Build .................. 19154

Parsing input parameters

[ INFO ] Files were added: 1

[ INFO ] 000003.jpg

[ INFO ] Loading plugin

API version ............ 1.5

Build .................. 19154

Description ....... myriadPlugin

[ INFO ] Loading network files:

face-detection-adas-0001.xml

face-detection-adas-0001.bin

[ INFO ] Preparing input blobs

[ INFO ] Batch size is 1

[ INFO ] Preparing output blobs

[ INFO ] Loading model to the plugin

[ WARNING ] Image is resized from (456, 342) to (672, 384)

[ INFO ] Batch size is 1

[ INFO ] Start inference (1 iterations)

[ INFO ] Processing output blobs

[0,1] element, prob = 1 (113.833,91.5117)-(170.777,176.511) batch id : 0 WILL BE PRINTED!

[1,1] element, prob = 1 (226.887,94.4341)-(283.887,173.672) batch id : 0 WILL BE PRINTED!

[2,1] element, prob = 0.0309601 (360.258,206.569)-(385.195,237.63) batch id : 0

[3,1] element, prob = 0.0292053 (408.129,129.419)-(420.152,148.957) batch id : 0

[4,1] element, prob = 0.0263367 (356.918,199.055)-(373.395,220.764) batch id : 0

[5,1] element, prob = 0.0258026 (366.492,213.583)-(382.523,232.286) batch id : 0

[6,1] element, prob = 0.0252533 (366.27,198.053)-(383.191,223.102) batch id : 0

[7,1] element, prob = 0.0243988 (402.34,131.84)-(417.926,161.064) batch id : 0

[8,1] element, prob = 0.0234528 (162.873,23.8799)-(408.797,283.052) batch id : 0

[9,1] element, prob = 0.0227356 (359.367,165.406)-(371.391,183.691) batch id : 0

[10,1] element, prob = 0.0223236 (404.344,119.399)-(425.719,159.311) batch id : 0

[11,1] element, prob = 0.0219727 (357.586,180.352)-(370.5,200.391) batch id : 0

[12,1] element, prob = 0.0216064 (350.684,193.043)-(387.645,241.805) batch id : 0

[13,1] element, prob = 0.021286 (353.578,176.344)-(375.398,209.742) batch id : 0

[14,1] element, prob = 0.0209961 (387.645,93.6826)-(400.559,113.388) batch id : 0

[15,1] element, prob = 0.0205231 (367.383,185.695)-(380.297,201.393) batch id : 0

[16,1] element, prob = 0.020462 (386.754,129.252)-(400.559,148.623) batch id : 0

[17,1] element, prob = 0.0201874 (418.371,74.4785)-(434.848,105.372) batch id : 0

[18,1] element, prob = 0.0201874 (181.576,128.667)-(232.898,181.854) batch id : 0

[19,1] element, prob = 0.0201263 (414.363,79.1543)-(426.832,98.5254) batch id : 0

[20,1] element, prob = 0.0201263 (414.586,114.139)-(427.055,132.675) batch id : 0

[21,1] element, prob = 0.0200195 (361.816,181.854)-(383.191,214.919) batch id : 0

[22,1] element, prob = 0.0199127 (403.676,128.5)-(416.145,147.371) batch id : 0

[23,1] element, prob = 0.0199127 (401.227,91.2612)-(418.148,121.153) batch id : 0

[24,1] element, prob = 0.0195007 (358.031,213.75)-(373.172,231.451) batch id : 0

[25,1] element, prob = 0.019455 (385.418,107.877)-(403.23,140.273) batch id : 0

[26,1] element, prob = 0.0193481 (401.004,108.461)-(417.035,140.023) batch id : 0

[27,1] element, prob = 0.0192871 (378.07,85.4165)-(390.984,105.79) batch id : 0

[28,1] element, prob = 0.0192566 (413.918,71.6396)-(426.832,91.5117) batch id : 0

[29,1] element, prob = 0.0190887 (390.094,86.9194)-(401.672,105.122) batch id : 0

[30,1] element, prob = 0.0190887 (347.344,172.503)-(382.078,225.272) batch id : 0

[31,1] element, prob = 0.0190887 (360.258,167.243)-(392.766,236.127) batch id : 0

[32,1] element, prob = 0.0189819 (402.117,98.1914)-(414.141,115.225) batch id : 0

[33,1] element, prob = 0.0189362 (389.648,113.137)-(401.227,131.005) batch id : 0

[34,1] element, prob = 0.0189362 (401.895,112.72)-(413.918,130.922) batch id : 0

[35,1] element, prob = 0.0187836 (8.01562,29.6411)-(237.574,287.561) batch id : 0

[36,1] element, prob = 0.0186768 (401.227,78.1523)-(419.484,108.879) batch id : 0

[37,1] element, prob = 0.0186005 (381.188,128.167)-(392.766,145.2) batch id : 0

[38,1] element, prob = 0.0184479 (346.23,206.235)-(375.621,235.292) batch id : 0

[39,1] element, prob = 0.0184021 (442.195,324.8)-(456,342) batch id : 0

[40,1] element, prob = 0.0183411 (355.137,92.7642)-(368.496,111.133) batch id : 0

[41,1] element, prob = 0.0183411 (367.828,222.1)-(382.078,240.803) batch id : 0

[42,1] element, prob = 0.0182953 (389.648,138.52)-(400.781,156.555) batch id : 0

[43,1] element, prob = 0.0182953 (346.008,197.385)-(362.039,218.426) batch id : 0

[44,1] element, prob = 0.0182495 (395.438,81.8262)-(423.047,130.922) batch id : 0

[45,1] element, prob = 0.0182037 (339.996,177.68)-(366.715,204.732) batch id : 0

[46,1] element, prob = 0.0181427 (402.117,150.794)-(417.703,180.686) batch id : 0

[47,1] element, prob = 0.0179901 (379.406,95.5195)-(391.43,114.724) batch id : 0

[48,1] element, prob = 0.0179901 (409.242,137.685)-(421.711,156.722) batch id : 0

[49,1] element, prob = 0.0178528 (419.262,119.399)-(435.293,152.464) batch id : 0

[50,1] element, prob = 0.0178528 (419.707,131.506)-(436.184,166.074) batch id : 0

[51,1] element, prob = 0.0177307 (403.23,138.52)-(414.809,155.72) batch id : 0

[52,1] element, prob = 0.0177307 (379.184,124.409)-(396.105,154.134) batch id : 0

[53,1] element, prob = 0.0177307 (343.113,191.54)-(368.051,225.606) batch id : 0

[54,1] element, prob = 0.0176849 (407.461,154.468)-(419.93,175.843) batch id : 0

[55,1] element, prob = 0.0174408 (407.016,102.951)-(430.617,146.035) batch id : 0

[56,1] element, prob = 0.0174408 (437.965,120.819)-(453.996,153.549) batch id : 0

[57,1] element, prob = 0.017395 (381.41,111.467)-(394.77,131.673) batch id : 0

[58,1] element, prob = 0.0173492 (379.406,182.188)-(391.875,198.554) batch id : 0

[59,1] element, prob = 0.0172577 (436.184,76.8164)-(453.996,108.378) batch id : 0

[60,1] element, prob = 0.0171967 (-2.49792,-2.47357)-(25.4106,22.9614) batch id : 0

[61,1] element, prob = 0.0171967 (409.02,90.3428)-(426.832,121.236) batch id : 0

[62,1] element, prob = 0.0171204 (421.266,139.021)-(433.734,159.394) batch id : 0

[63,1] element, prob = 0.0170746 (411.469,97.6904)-(423.492,115.559) batch id : 0

[64,1] element, prob = 0.0170746 (418.816,91.8457)-(434.402,123.24) batch id : 0

[65,1] element, prob = 0.0169373 (402.562,84.3728)-(415.031,104.203) batch id : 0

[66,1] element, prob = 0.0169373 (367.16,169.497)-(379.184,186.864) batch id : 0

[67,1] element, prob = 0.0169373 (360.035,162.483)-(380.074,189.87) batch id : 0

[68,1] element, prob = 0.0167999 (438.188,92.9312)-(454.664,124.326) batch id : 0

[69,1] element, prob = 0.0167999 (439.078,106.625)-(454.664,138.687) batch id : 0

[70,1] element, prob = 0.0166779 (347.566,183.19)-(359.145,200.558) batch id : 0

[71,1] element, prob = 0.0166779 (385.641,82.1602)-(403.008,110.716) batch id : 0

[72,1] element, prob = 0.0165405 (380.965,140.774)-(392.098,157.975) batch id : 0

[73,1] element, prob = 0.0164948 (332.426,207.905)-(347.121,230.616) batch id : 0

[74,1] element, prob = 0.0164948 (406.793,70.3037)-(431.73,115.058) batch id : 0

[75,1] element, prob = 0.0164948 (335.098,183.357)-(386.754,260.174) batch id : 0

[76,1] element, prob = 0.0164032 (349.125,88.7563)-(362.93,108.127) batch id : 0

[77,1] element, prob = 0.0164032 (358.699,158.893)-(370.277,176.845) batch id : 0

[78,1] element, prob = 0.0164032 (-6.20654,312.442)-(29.3071,348.012) batch id : 0

[79,1] element, prob = 0.0163574 (365.824,89.1738)-(383.637,120.568) batch id : 0

[80,1] element, prob = 0.0162811 (348.012,211.746)-(360.48,231.117) batch id : 0

[81,1] element, prob = 0.0162811 (377.18,89.3408)-(394.102,120.735) batch id : 0

[82,1] element, prob = 0.0162811 (396.773,101.281)-(422.156,146.703) batch id : 0

[83,1] element, prob = 0.0162811 (397.887,107.543)-(453.105,210.577) batch id : 0

[84,1] element, prob = 0.0162354 (357.586,224.271)-(372.281,241.304) batch id : 0

[85,1] element, prob = 0.0162354 (419.039,105.706)-(434.18,137.602) batch id : 0

[86,1] element, prob = 0.0161896 (403.453,157.14)-(415.477,174.006) batch id : 0

[87,1] element, prob = 0.0161896 (380.742,171.668)-(393.211,188.701) batch id : 0

[88,1] element, prob = 0.0161896 (346.008,221.933)-(361.594,243.308) batch id : 0

[89,1] element, prob = 0.0161896 (382.078,117.396)-(409.242,160.98) batch id : 0

[90,1] element, prob = 0.0161896 (385.863,130.922)-(403.676,161.648) batch id : 0

[91,1] element, prob = 0.016098 (371.391,86.168)-(398.555,130.588) batch id : 0

[92,1] element, prob = 0.0160522 (285,86.6689)-(342,169.163) batch id : 0

[93,1] element, prob = 0.015976 (381.188,152.38)-(393.656,170.917) batch id : 0

[94,1] element, prob = 0.015976 (429.059,124.493)-(453.105,170.583) batch id : 0

[95,1] element, prob = 0.0159302 (368.719,96.3545)-(381.188,115.726) batch id : 0

[96,1] element, prob = 0.0159302 (380.742,74.0193)-(408.352,120.067) batch id : 0

[97,1] element, prob = 0.0158539 (378.07,166.825)-(395.438,196.55) batch id : 0

[98,1] element, prob = 0.0158081 (347.789,154.217)-(359.812,171.835) batch id : 0

[99,1] element, prob = 0.0158081 (384.75,149.625)-(419.484,218.76) batch id : 0

[100,1] element, prob = 0.0155487 (387.199,149.792)-(405.012,180.686) batch id : 0

[101,1] element, prob = 0.0155487 (348.902,155.303)-(379.184,199.723) batch id : 0

[102,1] element, prob = 0.0155487 (343.336,215.086)-(367.828,247.816) batch id : 0

[103,1] element, prob = 0.0155487 (351.129,84.7485)-(411.246,172.67) batch id : 0

[104,1] element, prob = 0.0155182 (311.273,131.757)-(364.266,182.522) batch id : 0

[105,1] element, prob = 0.0154266 (368.719,112.97)-(380.742,130.337) batch id : 0

[106,1] element, prob = 0.0154266 (420.375,111.718)-(432.398,129.753) batch id : 0

[107,1] element, prob = 0.0154266 (368.496,141.442)-(380.074,158.643) batch id : 0

[108,1] element, prob = 0.0152969 (378.07,146.619)-(394.992,177.179) batch id : 0

[109,1] element, prob = 0.0152969 (377.848,101.03)-(426.387,155.303) batch id : 0

[110,1] element, prob = 0.015213 (412.582,57.6541)-(425.051,75.6892) batch id : 0

[111,1] element, prob = 0.015213 (356.695,126.664)-(399,189.035) batch id : 0

[112,1] element, prob = 0.0151367 (404.566,162.817)-(417.035,183.19) batch id : 0

[113,1] element, prob = 0.0150909 (402.117,69.26)-(414.586,88.1719) batch id : 0

[114,1] element, prob = 0.0150909 (435.738,134.763)-(449.543,156.138) batch id : 0

[115,1] element, prob = 0.0150909 (348.457,77.2756)-(374.285,117.229) batch id : 0

[ INFO ] Image out_0.bmp created!

total inference time: 155.659

Average running time of one iteration: 155.659 ms

Throughput: 6.42431 FPS

[ INFO ] Execution successful
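The log above lists every candidate box, but only the first two have a high probability. As a rough sketch, the lines can be filtered with a regular expression. The function name `parse_detections` and the 0.5 cutoff are my assumptions, not part of the sample. The last lines check that the reported throughput is simply 1000 divided by the average time per iteration in milliseconds.

```python
import re

# Matches lines such as:
# [0,1] element, prob = 1 (113.833,91.5117)-(170.777,176.511) batch id : 0 ...
LINE_RE = re.compile(
    r"\[(\d+),(\d+)\] element, prob = ([0-9.eE+-]+)\s+"
    r"\(([-0-9.]+),([-0-9.]+)\)-\(([-0-9.]+),([-0-9.]+)\)"
)

def parse_detections(log_text, min_prob=0.5):
    """Return (index, label, prob, (x1, y1, x2, y2)) for confident log lines.

    Hypothetical helper; min_prob=0.5 is an assumed cutoff.
    """
    detections = []
    for line in log_text.splitlines():
        match = LINE_RE.search(line)
        if match is None:
            continue
        prob = float(match.group(3))
        if prob < min_prob:
            continue
        box = tuple(float(match.group(i)) for i in range(4, 8))
        detections.append((int(match.group(1)), int(match.group(2)), prob, box))
    return detections

# Three lines copied from the runtime log
sample_log = """\
[0,1] element, prob = 1 (113.833,91.5117)-(170.777,176.511) batch id : 0 WILL BE PRINTED!
[1,1] element, prob = 1 (226.887,94.4341)-(283.887,173.672) batch id : 0 WILL BE PRINTED!
[2,1] element, prob = 0.0309601 (360.258,206.569)-(385.195,237.63) batch id : 0
"""

faces = parse_detections(sample_log)
print(len(faces), "confident detections")

# Throughput is the reciprocal of the average iteration time
throughput_fps = 1000.0 / 155.659
print("{:.2f} FPS".format(throughput_fps))
```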

OpenVINO appears to be integrated into OpenCV.

In fact, the short script below seems to do the same thing as the sample above.

This is how I noticed that the DNN module of this OpenCV build is backed by OpenVINO.

import cv2 as cv

# Load the model

net = cv.dnn.readNet('face-detection-adas-0001.xml', 'face-detection-adas-0001.bin')

# Specify target device

net.setPreferableTarget(cv.dnn.DNN_TARGET_MYRIAD)

# Read an image

frame = cv.imread('/path/to/image')

# Prepare input blob and perform an inference

blob = cv.dnn.blobFromImage(frame, size=(672, 384), ddepth=cv.CV_8U)

net.setInput(blob)

out = net.forward()

# Draw detected faces on the frame

for detection in out.reshape(-1, 7):
    confidence = float(detection[2])
    xmin = int(detection[3] * frame.shape[1])
    ymin = int(detection[4] * frame.shape[0])
    xmax = int(detection[5] * frame.shape[1])
    ymax = int(detection[6] * frame.shape[0])

    # Draw only confident detections (0.5 is an assumed threshold)
    if confidence > 0.5:
        cv.rectangle(frame, (xmin, ymin), (xmax, ymax), color=(0, 255, 0))

cv.imwrite('out.png', frame)
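Each row of the reshaped output holds seven values. Going by the indices used in the snippet above, index 2 is the confidence and indices 3 to 6 are the box corners normalized to 0..1. Treating index 0 as an image id and index 1 as a class id is my reading of the SSD output layout, so consider that an assumption. Below is a minimal pure-Python sketch of decoding such rows. The helper `decode_detections` is hypothetical, and the clamping is added because the runtime log earlier contains slightly negative coordinates.

```python
def decode_detections(flat, image_width, image_height, min_confidence=0.6):
    """Turn a flat list of 7-value rows into pixel-space boxes.

    Assumed row layout:
    [image_id, class_id, confidence, xmin, ymin, xmax, ymax]
    with the four coordinates normalized to 0..1.
    """
    boxes = []
    for base in range(0, len(flat) - 6, 7):
        _image_id, class_id, confidence, xmin, ymin, xmax, ymax = flat[base:base + 7]
        if confidence <= min_confidence:
            continue
        # Scale to pixels, then clamp to the image bounds
        left = min(max(int(xmin * image_width), 0), image_width)
        top = min(max(int(ymin * image_height), 0), image_height)
        right = min(max(int(xmax * image_width), 0), image_width)
        bottom = min(max(int(ymax * image_height), 0), image_height)
        boxes.append((int(class_id), confidence, left, top, right, bottom))
    return boxes

# Made-up rows for illustration: one confident box, one weak box,
# and one confident box that pokes outside the image
flat_output = [
    0, 15, 0.9, 0.25, 0.5, 0.75, 1.0,
    0, 7, 0.3, 0.0, 0.0, 1.0, 1.0,
    0, 15, 0.8, -0.01, -0.01, 0.125, 0.125,
]
decoded = decode_detections(flat_output, image_width=320, image_height=240)
for row in decoded:
    print(row)
```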

◆ Trying it with my own MobileNet-SSD

Now for the main event.

I customize the following repository for Neural Compute Stick 2 and convert its model to the OpenVINO format.

"Neural Compute Stick × 5" vs "Neural Compute Stick 2 × 1"

Github - PINTO0309 - MobileNet-SSD-RealSense

● Caffe model-->OpenVINO model conversion

Run the following on the working PC where the full OpenVINO is installed to convert the caffemodel to lrmodel.

Only the runtime environment is installed on the RaspberryPi.

Please note that the model conversion therefore cannot be run on the RaspberryPi.

$ cd ~

$ git clone https://github.com/PINTO0309/MobileNet-SSD-RealSense.git

$ cd MobileNet-SSD-RealSense

$ cp caffemodel/MobileNetSSD/deploy.prototxt caffemodel/MobileNetSSD/MobileNetSSD_deploy.prototxt

$ sudo python3 /opt/intel/computer_vision_sdk/deployment_tools/model_optimizer/mo.py \

--input_model caffemodel/MobileNetSSD/MobileNetSSD_deploy.caffemodel \

--output_dir lrmodel/MobileNetSSD \

--data_type FP16

**[mo.py] Conversion options**

optional arguments:

-h, --help show this help message and exit

--framework {tf,caffe,mxnet,kaldi,onnx}

Name of the framework used to train the input model.

Framework-agnostic parameters:

--input_model INPUT_MODEL, -w INPUT_MODEL, -m INPUT_MODEL

Tensorflow*: a file with a pre-trained model (binary

or text .pb file after freezing). Caffe*: a model

proto file with model weights

--model_name MODEL_NAME, -n MODEL_NAME

Model_name parameter passed to the final create_ir

transform. This parameter is used to name a network in

a generated IR and output .xml/.bin files.

--output_dir OUTPUT_DIR, -o OUTPUT_DIR

Directory that stores the generated IR. By default, it

is the directory from where the Model Optimizer is

launched.

--input_shape INPUT_SHAPE

Input shape(s) that should be fed to an input node(s)

of the model. Shape is defined as a comma-separated

list of integer numbers enclosed in parentheses or

square brackets, for example [1,3,227,227] or

(1,227,227,3), where the order of dimensions depends

on the framework input layout of the model. For

example, [N,C,H,W] is used for Caffe* models and

[N,H,W,C] for TensorFlow* models. Model Optimizer

performs necessary transformations to convert the

shape to the layout required by Inference Engine

(N,C,H,W). The shape should not contain undefined

dimensions (? or -1) and should fit the dimensions

defined in the input operation of the graph. If there

are multiple inputs in the model, --input_shape should

contain definition of shape for each input separated

by a comma, for example: [1,3,227,227],[2,4] for a

model with two inputs with 4D and 2D shapes.

--scale SCALE, -s SCALE

All input values coming from original network inputs

will be divided by this value. When a list of inputs

is overridden by the --input parameter, this scale is

not applied for any input that does not match with the

original input of the model.

--reverse_input_channels

Switch the input channels order from RGB to BGR (or

vice versa). Applied to original inputs of the model

if and only if a number of channels equals 3. Applied

after application of --mean_values and --scale_values

options, so numbers in --mean_values and

--scale_values go in the order of channels used in the

original model.

--log_level {CRITICAL,ERROR,WARN,WARNING,INFO,DEBUG,NOTSET}

Logger level

--input INPUT The name of the input operation of the given model.

Usually this is a name of the input placeholder of the

model.

--output OUTPUT The name of the output operation of the model. For

TensorFlow*, do not add :0 to this name.

--mean_values MEAN_VALUES, -ms MEAN_VALUES

Mean values to be used for the input image per

channel. Values to be provided in the (R,G,B) or

[R,G,B] format. Can be defined for desired input of

the model, for example: "--mean_values

data[255,255,255],info[255,255,255]". The exact

meaning and order of channels depend on how the

original model was trained.

--scale_values SCALE_VALUES

Scale values to be used for the input image per

channel. Values are provided in the (R,G,B) or [R,G,B]

format. Can be defined for desired input of the model,

for example: "--scale_values

data[255,255,255],info[255,255,255]". The exact

meaning and order of channels depend on how the

original model was trained.

--data_type {FP16,FP32,half,float}

Data type for all intermediate tensors and weights. If

original model is in FP32 and --data_type=FP16 is

specified, all model weights and biases are quantized

to FP16.

--disable_fusing Turn off fusing of linear operations to Convolution

--disable_resnet_optimization

Turn off resnet optimization

--finegrain_fusing FINEGRAIN_FUSING

Regex for layers/operations that won't be fused.

Example: --finegrain_fusing Convolution1,.*Scale.*

--disable_gfusing Turn off fusing of grouped convolutions

--move_to_preprocess Move mean values to IR preprocess section

--extensions EXTENSIONS

Directory or a comma separated list of directories

with extensions. To disable all extensions including

those that are placed at the default location, pass an

empty string.

--batch BATCH, -b BATCH

Input batch size

--version Version of Model Optimizer

--silent Prevent any output messages except those that

correspond to log level equals ERROR, that can be set

with the following option: --log_level. By default,

log level is already ERROR.

--freeze_placeholder_with_value FREEZE_PLACEHOLDER_WITH_VALUE

Replaces input layer with constant node with provided

value, e.g.: "node_name->True"

--generate_deprecated_IR_V2

Force to generate legacy/deprecated IR V2 to work with

previous versions of the Inference Engine. The

resulting IR may or may not be correctly loaded by

Inference Engine API (including the most recent and

old versions of Inference Engine) and provided as a

partially-validated backup option for specific

deployment scenarios. Use it at your own discretion.

By default, without this option, the Model Optimizer

generates IR V3.

**Conversion log**

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: ~/git/MobileNet-SSD-RealSense/caffemodel/MobileNetSSD/MobileNetSSD_deploy.caffemodel

- Path for generated IR: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD

- IR output name: MobileNetSSD_deploy

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

Caffe specific parameters:

- Enable resnet optimization: True

- Path to the Input prototxt: ~/git/MobileNet-SSD-RealSense/caffemodel/MobileNetSSD/MobileNetSSD_deploy.prototxt

- Path to CustomLayersMapping.xml: Default

- Path to a mean file: Not specified

- Offsets for a mean file: Not specified

Model Optimizer version: 1.5.12.49d067a0

[ SUCCESS ] Generated IR model.

[ SUCCESS ] XML file: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml

[ SUCCESS ] BIN file: ~/git/MobileNet-SSD-RealSense/lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin

[ SUCCESS ] Total execution time: 2.01 seconds.
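Judging from the log above, when `--model_name` is not given the generated IR takes the base name of the input model, and the prototxt is picked up from a file with the same base name next to the caffemodel (which would explain the `cp` step earlier). The sketch below only derives those paths so they can be checked before and after running mo.py. The helper name `expected_conversion_paths` is hypothetical, and the naming rule is inferred from this one log rather than from a specification.

```python
from pathlib import PurePosixPath

def expected_conversion_paths(input_model, output_dir):
    """Derive the prototxt mo.py should find and the IR files it should write.

    Assumption: all three files share the base name of the input model,
    as the conversion log in this article suggests.
    """
    model = PurePosixPath(input_model)
    out = PurePosixPath(output_dir)
    return {
        "prototxt": model.with_suffix(".prototxt"),
        "xml": out / (model.stem + ".xml"),
        "bin": out / (model.stem + ".bin"),
    }

paths = expected_conversion_paths(
    "caffemodel/MobileNetSSD/MobileNetSSD_deploy.caffemodel",
    "lrmodel/MobileNetSSD",
)
for kind, path in paths.items():
    print(kind, path)
```

The `xml` and `bin` entries correspond to the two files that the detection programs in this article load from `lrmodel/MobileNetSSD`.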

● Implementation

For this article I implemented everything as single-threaded and synchronous.

Next time I will splurge on several NCS2 sticks and test a multiprocess, asynchronous version.

Because it is single-threaded, the program is extremely simple.

import sys

import numpy as np

import cv2

from os import system

import io, time

from os.path import isfile, join

import re

fps = ""

detectfps = ""

framecount = 0

detectframecount = 0

time1 = 0

time2 = 0

LABELS = ('background',

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

camera_width = 320

camera_height = 240

cap = cv2.VideoCapture(0)

cap.set(cv2.CAP_PROP_FPS, 30)

cap.set(cv2.CAP_PROP_FRAME_WIDTH, camera_width)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, camera_height)

net = cv2.dnn.readNet('lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml', 'lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin')

net.setPreferableTarget(cv2.dnn.DNN_TARGET_MYRIAD)

try:
    while True:
        t1 = time.perf_counter()

        ret, color_image = cap.read()
        if not ret:
            break

        height = color_image.shape[0]
        width = color_image.shape[1]

        # Resize to 300x300, subtract the mean and scale to about [-1, 1]
        blob = cv2.dnn.blobFromImage(color_image, 0.007843, size=(300, 300), mean=(127.5,127.5,127.5), swapRB=False, crop=False)
        net.setInput(blob)
        out = net.forward()
        out = out.flatten()

        for box_index in range(100):
            base_index = box_index * 7
            # Stop at the first row whose class id is 0 (background / padding)
            if out[base_index + 1] == 0.0:
                break
            # Skip rows that contain NaN or infinity
            if not np.all(np.isfinite(out[base_index:base_index + 7])):
                continue
            if box_index == 0:
                detectframecount += 1

            object_info_overlay = out[base_index:base_index + 7]

            min_score_percent = 60
            source_image_width = width
            source_image_height = height

            base_index = 0
            class_id = object_info_overlay[base_index + 1]
            percentage = int(object_info_overlay[base_index + 2] * 100)
            if (percentage <= min_score_percent):
                continue

            # Box corners are normalized to 0..1, so scale them to pixels
            box_left = int(object_info_overlay[base_index + 3] * source_image_width)
            box_top = int(object_info_overlay[base_index + 4] * source_image_height)
            box_right = int(object_info_overlay[base_index + 5] * source_image_width)
            box_bottom = int(object_info_overlay[base_index + 6] * source_image_height)
            label_text = LABELS[int(class_id)] + " (" + str(percentage) + "%)"

            box_color = (255, 128, 0)
            box_thickness = 1
            cv2.rectangle(color_image, (box_left, box_top), (box_right, box_bottom), box_color, box_thickness)

            label_background_color = (125, 175, 75)
            label_text_color = (255, 255, 255)

            label_size = cv2.getTextSize(label_text, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)[0]
            label_left = box_left
            label_top = box_top - label_size[1]
            if (label_top < 1):
                label_top = 1
            label_right = label_left + label_size[0]
            label_bottom = label_top + label_size[1]
            cv2.rectangle(color_image, (label_left - 1, label_top - 1), (label_right + 1, label_bottom + 1), label_background_color, -1)
            cv2.putText(color_image, label_text, (label_left, label_bottom), cv2.FONT_HERSHEY_SIMPLEX, 0.5, label_text_color, 1)

        cv2.putText(color_image, fps, (width-170,15), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)
        cv2.putText(color_image, detectfps, (width-170,30), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)

        cv2.namedWindow('USB Camera', cv2.WINDOW_AUTOSIZE)
        cv2.imshow('USB Camera', cv2.resize(color_image, (width, height)))

        if cv2.waitKey(1)&0xFF == ord('q'):
            break

        # FPS calculation
        framecount += 1
        if framecount >= 15:
            fps = "(Playback) {:.1f} FPS".format(time1/15)
            detectfps = "(Detection) {:.1f} FPS".format(detectframecount/time2)
            framecount = 0
            detectframecount = 0
            time1 = 0
            time2 = 0
        t2 = time.perf_counter()
        elapsedTime = t2-t1
        time1 += 1/elapsedTime
        time2 += elapsedTime

except:
    import traceback
    traceback.print_exc()

finally:
    print("\n\nFinished\n\n")

import sys

import numpy as np

import cv2

from os import system

import io, time

from os.path import isfile, join

import re

from openvino.inference_engine import IENetwork, IEPlugin

fps = ""

detectfps = ""

framecount = 0

detectframecount = 0

time1 = 0

time2 = 0

LABELS = ('background',

'aeroplane', 'bicycle', 'bird', 'boat',

'bottle', 'bus', 'car', 'cat', 'chair',

'cow', 'diningtable', 'dog', 'horse',

'motorbike', 'person', 'pottedplant',

'sheep', 'sofa', 'train', 'tvmonitor')

camera_width = 320

camera_height = 240

cap = cv2.VideoCapture(0)

cap.set(cv2.CAP_PROP_FPS, 30)

cap.set(cv2.CAP_PROP_FRAME_WIDTH, camera_width)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, camera_height)

plugin = IEPlugin(device="MYRIAD")

plugin.set_config({"VPU_FORCE_RESET": "NO"})

net = IENetwork("lrmodel/MobileNetSSD/MobileNetSSD_deploy.xml", "lrmodel/MobileNetSSD/MobileNetSSD_deploy.bin")

input_blob = next(iter(net.inputs))

exec_net = plugin.load(network=net)

try:
    while True:
        t1 = time.perf_counter()

        ret, color_image = cap.read()
        if not ret:
            break

        height = color_image.shape[0]
        width = color_image.shape[1]

        # Preprocess: resize to 300x300, then scale pixels to roughly [-1, 1]
        prepimg = cv2.resize(color_image, (300, 300))
        prepimg = prepimg - 127.5
        prepimg = prepimg * 0.007843
        prepimg = prepimg[np.newaxis, :, :, :]
        prepimg = prepimg.transpose((0, 3, 1, 2))  #NHWC to NCHW

        # Synchronous inference on the NCS2
        out = exec_net.infer(inputs={input_blob: prepimg})
        out = out["detection_out"].flatten()

        # Each detection occupies 7 values:
        # [image_id, class_id, score, x_min, y_min, x_max, y_max]
        for box_index in range(min(100, out.size // 7)):
            base_index = box_index * 7

            # A class ID of 0 (background) marks the end of the valid detections
            if out[base_index + 1] == 0.0:
                break

            # Skip rows that contain NaN or Inf
            if not np.all(np.isfinite(out[base_index:base_index + 7])):
                continue

            # Count this frame as "detected" once, on its first valid row
            if box_index == 0:
                detectframecount += 1

            object_info_overlay = out[base_index:base_index + 7]

            min_score_percent = 60
            source_image_width = width
            source_image_height = height

            class_id = object_info_overlay[1]
            percentage = int(object_info_overlay[2] * 100)
            if (percentage <= min_score_percent):
                continue

            # Box coordinates are normalized to [0, 1]; convert them to pixels
            box_left = int(object_info_overlay[3] * source_image_width)
            box_top = int(object_info_overlay[4] * source_image_height)
            box_right = int(object_info_overlay[5] * source_image_width)
            box_bottom = int(object_info_overlay[6] * source_image_height)
            label_text = LABELS[int(class_id)] + " (" + str(percentage) + "%)"

            box_color = (255, 128, 0)
            box_thickness = 1
            cv2.rectangle(color_image, (box_left, box_top), (box_right, box_bottom), box_color, box_thickness)

            # Draw the label on a filled background just above the box
            label_background_color = (125, 175, 75)
            label_text_color = (255, 255, 255)
            label_size = cv2.getTextSize(label_text, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)[0]
            label_left = box_left
            label_top = box_top - label_size[1]
            if (label_top < 1):
                label_top = 1
            label_right = label_left + label_size[0]
            label_bottom = label_top + label_size[1]
            cv2.rectangle(color_image, (label_left - 1, label_top - 1), (label_right + 1, label_bottom + 1), label_background_color, -1)
            cv2.putText(color_image, label_text, (label_left, label_bottom), cv2.FONT_HERSHEY_SIMPLEX, 0.5, label_text_color, 1)

        cv2.putText(color_image, fps, (width-170,15), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)
        cv2.putText(color_image, detectfps, (width-170,30), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (38,0,255), 1, cv2.LINE_AA)

        cv2.namedWindow('USB Camera', cv2.WINDOW_AUTOSIZE)
        cv2.imshow('USB Camera', cv2.resize(color_image, (width, height)))

        if cv2.waitKey(1)&0xFF == ord('q'):
            break

        # FPS calculation (refreshed every 15 frames)
        framecount += 1
        if framecount >= 15:
            fps = "(Playback) {:.1f} FPS".format(time1/15)
            detectfps = "(Detection) {:.1f} FPS".format(detectframecount/time2)
            framecount = 0
            detectframecount = 0
            time1 = 0
            time2 = 0
        t2 = time.perf_counter()
        elapsedTime = t2-t1
        time1 += 1/elapsedTime
        time2 += elapsedTime

except:
    import traceback
    traceback.print_exc()

finally:
    print("\n\nFinished\n\n")
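The detection loop in the listings above walks a flattened SSD output in groups of seven values. As a minimal sketch of just that parsing step, separated from the drawing code, the helper below turns such a flat list into pixel-space boxes. The function name `parse_detections`, its parameters, and the clamping to the image bounds are assumptions made for illustration and are not part of the original program; the row layout `[image_id, class_id, score, x_min, y_min, x_max, y_max]` and the stop-on-class-0 rule follow the listings.

```python
import math


def parse_detections(flat, width, height, min_score=0.6, max_boxes=100):
    """Convert a flat SSD output into (class_id, score, left, top, right, bottom) tuples.

    Hypothetical helper: `flat` is assumed to hold rows of 7 values,
    [image_id, class_id, score, x_min, y_min, x_max, y_max], with box
    coordinates normalized to [0, 1].
    """
    boxes = []
    for i in range(min(max_boxes, len(flat) // 7)):
        row = flat[i * 7:(i + 1) * 7]
        _image_id, class_id, score, x_min, y_min, x_max, y_max = row

        # Class 0 (background) is treated as the end of the valid detections
        if class_id == 0:
            break
        # Skip rows containing NaN or Inf
        if not all(math.isfinite(v) for v in row):
            continue
        # Drop low-confidence detections
        if score <= min_score:
            continue

        # Scale to pixels and clamp to the image (clamping is an added assumption)
        left = max(0, int(x_min * width))
        top = max(0, int(y_min * height))
        right = min(width, int(x_max * width))
        bottom = min(height, int(y_max * height))
        boxes.append((int(class_id), score, left, top, right, bottom))
    return boxes


if __name__ == "__main__":
    sample = [
        0, 15, 0.9, 0.25, 0.25, 0.5, 0.75,   # person, 90%
        0, 7, 0.3, 0.0, 0.0, 1.0, 1.0,       # car, 30% (filtered out)
        0, 0, 0.0, 0.0, 0.0, 0.0, 0.0,       # end marker
    ]
    print(parse_detections(sample, width=320, height=240))
```

Keeping the parsing in a pure function like this makes it possible to check the indexing without a camera or an NCS2 attached.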

◆ Result

"Show me the performance of the NCS2!"

RaspberryPi3 + NCS2 x 1 + OpenVINO + single thread, synchronous [9-10 FPS]

RaspberryPi3 + NCS x 5 + NCSDK + multiprocess, asynchronous [30 FPS+]

Core i7 + NCS2 x 1 + OpenVINO + single thread, synchronous [21 FPS+]

As expected, a single NCS2 is no match for five of the original NCS sticks.

Still, it delivers twice the performance of the old model.

Because inference is synchronous, the bounding boxes never drift from the frame they belong to.

The frames are drawn cleanly.
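The FPS figures above are produced by the counters in the listing: the playback rate is the mean of the per-frame 1/elapsed values over a 15-frame window, and the detection rate is the number of frames with at least one valid detection divided by the total elapsed time in that window. The class below is a rough sketch of that bookkeeping; `FpsMeter` and its method names are hypothetical, and unlike the listing it adds the current frame's time before reporting, so the numbers can differ slightly at window boundaries.

```python
class FpsMeter:
    """Hypothetical helper that averages frame rates over a fixed window of frames."""

    def __init__(self, window=15):
        self.window = window
        self._reset()

    def _reset(self):
        self.frames = 0          # frames seen in the current window
        self.detect_frames = 0   # frames with at least one detection
        self.inv_sum = 0.0       # sum of instantaneous FPS (1 / elapsed)
        self.elapsed_sum = 0.0   # total seconds spent in the window

    def update(self, elapsed, detected):
        """Record one frame.

        Returns (playback_fps, detection_fps) when the window fills,
        otherwise None.
        """
        self.frames += 1
        if detected:
            self.detect_frames += 1
        self.inv_sum += 1.0 / elapsed
        self.elapsed_sum += elapsed

        if self.frames < self.window:
            return None

        result = (self.inv_sum / self.window,
                  self.detect_frames / self.elapsed_sum)
        self._reset()
        return result


if __name__ == "__main__":
    meter = FpsMeter(window=4)
    for detected in (True, False, True, False):
        rates = meter.update(elapsed=0.125, detected=detected)
    print(rates)
```

In a single-threaded synchronous loop each frame waits for its own inference, so the playback rate is bounded by the inference time, while the detection rate additionally drops whenever a frame contains no valid detection.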

◆ Reference article

https://software.intel.com/en-us/articles/OpenVINO-Install-RaspberryPI