In this post, I use the Speech framework to transcribe an audio file.

Environment: Xcode 12.4, Swift 5

Info.plist

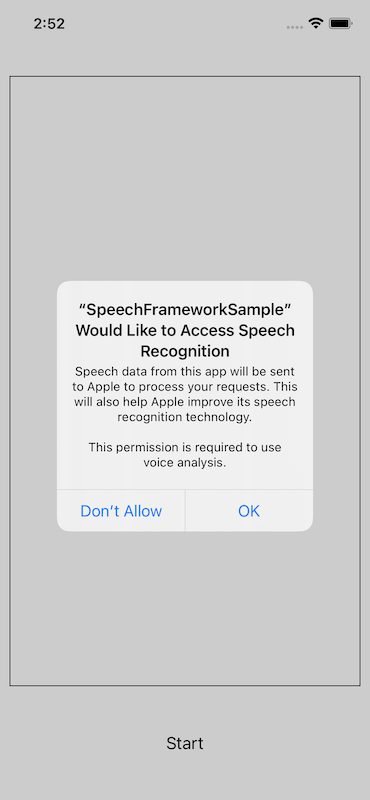

To get the user's permission to use the Speech framework, first add NSSpeechRecognitionUsageDescription to Info.plist.

<key>NSSpeechRecognitionUsageDescription</key>

<string>This permission is required to use voice analysis.</string>

Then call requestAuthorization on SFSpeechRecognizer, which displays a dialog asking the user to allow the use of the Speech framework.

SFSpeechRecognizer.requestAuthorization { (authStatus) in
    // authStatus reports whether the user allowed speech recognition
}
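The empty handler above ignores the result. A minimal sketch of acting on `authStatus` might look like the following; the helper name `requestSpeechAuthorization` and its Bool-based completion are assumptions made for illustration, not part of the sample program.

```swift
import Speech

/// Hypothetical helper (not in the sample program): asks for permission and
/// reports on the main queue whether speech recognition may be used.
func requestSpeechAuthorization(completion: @escaping (Bool) -> Void) {
    SFSpeechRecognizer.requestAuthorization { authStatus in
        // The handler is not guaranteed to run on the main queue,
        // so hop there before touching any UI.
        DispatchQueue.main.async {
            switch authStatus {
            case .authorized:
                completion(true)
            case .denied, .restricted, .notDetermined:
                completion(false)
            @unknown default:
                completion(false)
            }
        }
    }
}
```

A caller could, for example, enable the Start button only when the completion receives `true`.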

Splitting the audio file

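Before handing the audio file to the Speech framework, there is one thing to keep in mind.

As of this writing (March 28, 2021), the Speech framework limits each recognition request to about 60 seconds of audio. If you simply pass a longer file to the framework, only the first 60 seconds are transcribed.

If the audio file is longer than 60 seconds, you need to split it into 60-second segments beforehand and pass each segment to the Speech framework separately.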
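The splitting arithmetic described above can be sketched on its own, without AVFoundation. The function name `segmentRanges` and the 150.5-second duration are hypothetical, chosen only for illustration.

```swift
import Foundation

/// Hypothetical helper: splits a duration (in seconds) into consecutive
/// (start, end) ranges that are at most `chunk` seconds long.
func segmentRanges(duration: Double, chunk: Double = 60) -> [(start: Double, end: Double)] {
    guard duration > 0, chunk > 0 else { return [] }
    var ranges: [(start: Double, end: Double)] = []
    var start = 0.0
    while start < duration {
        // The last range is clamped to the end of the recording.
        let end = min(start + chunk, duration)
        ranges.append((start: start, end: end))
        start += chunk
    }
    return ranges
}

let ranges = segmentRanges(duration: 150.5)
for range in ranges {
    print(range.start, range.end)
}
```

For a 150.5-second recording this yields three ranges: two full 60-second segments and a final 30.5-second one.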

You can split the audio file with AVAssetExportSession.

private var avAudioFile: AVAudioFile!
private var croppedFileInfos: [FileInfo] = []
private var croppedFileCount: Int = 0

private struct FileInfo {
    var url: URL
    var startTime: Double
    var endTime: Double
}

private func cropFile() {
    // Split sample.m4a into 60-second files named croppedFile_<index>.m4a under the Library directory
    guard let audioPath = Bundle.main.path(forResource: "sample", ofType: "m4a") else {
        print("sample.m4a was not found in the bundle")
        return
    }
    let audioFileUrl = URL(fileURLWithPath: audioPath)
    do {
        self.avAudioFile = try AVAudioFile(forReading: audioFileUrl)
    } catch {
        // Without the file its length is unknown, so stop here
        print(error)
        return
    }
    // Discard state left over from a previous run
    self.croppedFileInfos.removeAll()
    self.croppedFileCount = 0

    // Total duration in seconds = frame count / sample rate
    let recordTime = Double(self.avAudioFile.length) / self.avAudioFile.fileFormat.sampleRate
    let oneFileTime: Double = 60
    var startTime: Double = 0
    while startTime < recordTime {
        let fullPath = NSHomeDirectory() + "/Library/croppedFile_" + String(self.croppedFileInfos.count) + ".m4a"
        // AVAssetExportSession fails if the output file already exists, so remove it first
        if FileManager.default.fileExists(atPath: fullPath) {
            do {
                try FileManager.default.removeItem(atPath: fullPath)
            } catch {
                print(error)
            }
        }
        let url = URL(fileURLWithPath: fullPath)
        // The last segment ends at the end of the recording
        let endTime = min(startTime + oneFileTime, recordTime)
        self.croppedFileInfos.append(FileInfo(url: url, startTime: startTime, endTime: endTime))
        startTime += oneFileTime
    }
    for croppedFileInfo in self.croppedFileInfos {
        self.exportAsynchronously(fileInfo: croppedFileInfo)
    }
}

private func exportAsynchronously(fileInfo: FileInfo) {
    // Use a fine timescale so a fractional end time (the last segment) is not truncated to whole seconds
    let startCMTime = CMTime(seconds: fileInfo.startTime, preferredTimescale: 600)
    let endCMTime = CMTime(seconds: fileInfo.endTime, preferredTimescale: 600)
    let exportTimeRange = CMTimeRangeFromTimeToTime(start: startCMTime, end: endCMTime)
    let asset = AVAsset(url: self.avAudioFile.url)
    guard let exporter = AVAssetExportSession(asset: asset, presetName: AVAssetExportPresetPassthrough) else {
        print("Could not create an export session")
        return
    }
    exporter.outputFileType = .m4a
    exporter.timeRange = exportTimeRange
    exporter.outputURL = fileInfo.url
    exporter.exportAsynchronously(completionHandler: {
        switch exporter.status {
        case .completed:
            // The handler may run on a background queue, so update shared state on the main queue
            DispatchQueue.main.async {
                self.croppedFileCount += 1
                if self.croppedFileInfos.count == self.croppedFileCount {
                    // All segments exported -> start transcription with the Speech framework
                    self.initalizeSpeechFramework()
                }
            }
        case .failed, .cancelled:
            if let error = exporter.error {
                print(error)
            }
        default:
            break
        }
    })
}
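The sample detects that every segment is ready by counting completed exports. One common alternative for waiting on several asynchronous jobs is DispatchGroup, sketched below. This is a different technique from the one the sample uses, and `fakeExport`, `ExportCounter`, and `exportAll` are hypothetical stand-ins so that the sketch runs without AVFoundation.

```swift
import Foundation
import Dispatch

/// Hypothetical thread-safe counter for successful exports.
final class ExportCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0
    func increment() { lock.lock(); value += 1; lock.unlock() }
    var count: Int { lock.lock(); defer { lock.unlock() }; return value }
}

/// Hypothetical stand-in for an asynchronous export: it completes on a
/// background queue and always reports success.
func fakeExport(index: Int, completion: @escaping @Sendable (Bool) -> Void) {
    DispatchQueue.global().async { completion(true) }
}

/// Starts `segmentCount` exports and returns how many succeeded.
func exportAll(segmentCount: Int) -> Int {
    let group = DispatchGroup()
    let counter = ExportCounter()
    for index in 0..<segmentCount {
        group.enter()                    // one enter per export started
        fakeExport(index: index) { succeeded in
            if succeeded { counter.increment() }
            group.leave()                // one leave per export finished
        }
    }
    // Blocking is acceptable in a command-line sketch. In an app,
    // group.notify(queue: .main) { ... } would avoid blocking the main thread.
    group.wait()
    return counter.count
}

let exported = exportAll(segmentCount: 3)
print("exported \(exported) of 3 segments")
```

Compared with a manual counter, the group also makes it easy to react when some exports fail, because the code after the wait runs once regardless of each export's outcome.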


Transcription with the Speech framework

Now let's actually transcribe the audio.

To transcribe from an audio file, pass the file to the framework with SFSpeechURLRecognitionRequest.

private let textView: UITextView = UITextView()
private var speechRecognizer: SFSpeechRecognizer?
private var recognitionRequest: SFSpeechURLRecognitionRequest?
private var recognitionTask: SFSpeechRecognitionTask?
private var speechText: String = ""
private var croppedFileInfos: [FileInfo] = []
private var currentIndex: Int = 0

private func initalizeSpeechFramework() {
    let request = SFSpeechURLRecognitionRequest(url: self.croppedFileInfos[self.currentIndex].url)
    self.recognitionRequest = request
    // Use the user's first preferred language; the initializer returns nil for unsupported locales
    let location = Locale.preferredLanguages
    guard let recognizer = SFSpeechRecognizer(locale: Locale(identifier: location[0])) else {
        print("Speech recognition is not supported for \(location[0])")
        return
    }
    self.speechRecognizer = recognizer
    self.recognitionTask = recognizer.recognitionTask(with: request, resultHandler: { (result: SFSpeechRecognitionResult?, error: Error?) -> Void in
        if let error = error {
            print(error)
        } else if let result = result {
            // Show the finished segments plus the current (possibly partial) result in the UITextView
            self.textView.text = self.speechText + result.bestTranscription.formattedString
            if result.isFinal {
                // Finished reading this audio file
                self.finishOrRestartSpeechFramework()
            }
        }
    })
}

private func finishOrRestartSpeechFramework() {
    // Stop the Speech framework task for the current file
    self.recognitionTask?.cancel()
    self.recognitionTask?.finish()
    // Keep the text transcribed so far; the next file's result is appended to it
    self.speechText = self.textView.text
    self.currentIndex += 1
    if self.currentIndex < self.croppedFileInfos.count {
        // Audio files remain, so start transcribing the next one
        self.initalizeSpeechFramework()
    }
}

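Each of the 60-second files is passed to the Speech framework one at a time.

When a file has been fully processed, result.isFinal becomes true, and the code moves on to the next file. Transcription ends once all files have been processed.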
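The control flow described above (one file at a time, advancing when the final result arrives) can be sketched without the Speech framework. The `Transcriber` closure type, the `SequentialTranscription` class, and the fake transcriber below are all hypothetical stand-ins for a recognition request and its result handler.

```swift
import Foundation

/// Hypothetical stand-in for one recognition request: it receives a file URL
/// and calls back with (text, isFinal), possibly several times.
typealias Transcriber = (URL, @escaping (String, Bool) -> Void) -> Void

final class SequentialTranscription {
    private let urls: [URL]
    private let transcriber: Transcriber
    private var currentIndex = 0
    private var finishedText = ""          // text of the files already completed
    private(set) var displayedText = ""    // what the sample writes into the text view

    init(urls: [URL], transcriber: @escaping Transcriber) {
        self.urls = urls
        self.transcriber = transcriber
    }

    func start(completion: @escaping (String) -> Void) {
        guard currentIndex < urls.count else {
            completion(displayedText)      // no files left
            return
        }
        transcriber(urls[currentIndex]) { text, isFinal in
            // A partial result replaces only the current file's text.
            self.displayedText = self.finishedText + text
            if isFinal {
                self.finishedText = self.displayedText
                self.currentIndex += 1
                self.start(completion: completion)   // move on to the next file
            }
        }
    }
}

// Usage with a fake transcriber that emits one partial and one final result.
let urls = ["a", "b"].map { URL(fileURLWithPath: "/tmp/\($0).m4a") }
let fake: Transcriber = { url, handler in
    let name = url.deletingPathExtension().lastPathComponent
    handler("[\(name) partial]", false)
    handler("[\(name) done]", true)
}
var finalText = ""
let session = SequentialTranscription(urls: urls, transcriber: fake)
session.start { finalText = $0 }
print(finalText)
```

Keeping the finished text separate from the current partial result is what prevents a later partial result from overwriting the text of earlier files.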

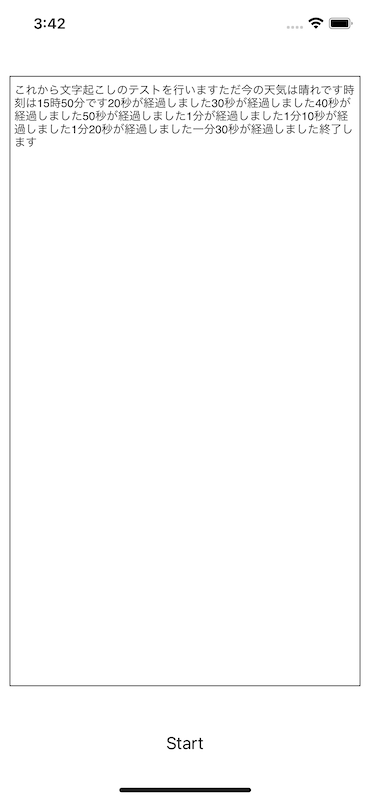

When I ran the app, the audio was transcribed as shown in the screenshot above.

Full sample program

This sample program is available on GitHub.

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

---------------(omitted)---------------

<key>NSSpeechRecognitionUsageDescription</key>

<string>This permission is required to use voice analysis.</string>

</dict>

</plist>

import UIKit
import AVFoundation
import Speech

class ViewController: UIViewController {

    private let textView: UITextView = UITextView()
    private let startButton: UIButton = UIButton()
    private var speechRecognizer: SFSpeechRecognizer?
    private var recognitionRequest: SFSpeechURLRecognitionRequest?
    private var recognitionTask: SFSpeechRecognitionTask?
    private var avAudioFile: AVAudioFile!
    private var speechText: String = ""
    private var croppedFileInfos: [FileInfo] = []
    private var croppedFileCount: Int = 0
    private var currentIndex: Int = 0

    private struct FileInfo {
        var url: URL
        var startTime: Double
        var endTime: Double
    }

    override func viewDidLoad() {
        super.viewDidLoad()

        self.textView.backgroundColor = UIColor.white
        self.textView.layer.borderWidth = 1
        self.textView.layer.borderColor = UIColor.black.cgColor
        self.view.addSubview(self.textView)

        self.startButton.setTitle("Start", for: .normal)
        self.startButton.setTitleColor(UIColor.black, for: .normal)
        self.startButton.addTarget(self, action: #selector(self.touchUpStartButton(_:)), for: .touchUpInside)
        self.view.addSubview(self.startButton)

        SFSpeechRecognizer.requestAuthorization { (authStatus) in
            // authStatus reports whether the user allowed speech recognition
        }
    }

    override func viewDidLayoutSubviews() {
        super.viewDidLayoutSubviews()

        let width: CGFloat = self.view.frame.width
        let height: CGFloat = self.view.frame.height
        self.textView.frame = CGRect(x: 10, y: 80, width: width - 20, height: height - 200)
        self.startButton.frame = CGRect(x: width / 2 - 40, y: height - 100, width: 80, height: 80)
    }

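    @objc private func touchUpStartButton(_ sender: UIButton) {
        self.textView.text = ""
        self.speechText = ""
        // Start again from the first segment when the button is tapped a second time
        self.currentIndex = 0
        cropFile()
    }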

    private func cropFile() {
        guard let audioPath = Bundle.main.path(forResource: "sample", ofType: "m4a") else {
            print("sample.m4a was not found in the bundle")
            return
        }
        let audioFileUrl = URL(fileURLWithPath: audioPath)
        do {
            self.avAudioFile = try AVAudioFile(forReading: audioFileUrl)
        } catch {
            // Without the file its length is unknown, so stop here
            print(error)
            return
        }
        // Discard state left over from a previous run
        self.croppedFileInfos.removeAll()
        self.croppedFileCount = 0

        // Total duration in seconds = frame count / sample rate
        let recordTime = Double(self.avAudioFile.length) / self.avAudioFile.fileFormat.sampleRate
        let oneFileTime: Double = 60
        var startTime: Double = 0
        while startTime < recordTime {
            let fullPath = NSHomeDirectory() + "/Library/croppedFile_" + String(self.croppedFileInfos.count) + ".m4a"
            // AVAssetExportSession fails if the output file already exists, so remove it first
            if FileManager.default.fileExists(atPath: fullPath) {
                do {
                    try FileManager.default.removeItem(atPath: fullPath)
                } catch {
                    print(error)
                }
            }
            let url = URL(fileURLWithPath: fullPath)
            // The last segment ends at the end of the recording
            let endTime = min(startTime + oneFileTime, recordTime)
            self.croppedFileInfos.append(FileInfo(url: url, startTime: startTime, endTime: endTime))
            startTime += oneFileTime
        }
        for croppedFileInfo in self.croppedFileInfos {
            self.exportAsynchronously(fileInfo: croppedFileInfo)
        }
    }

    private func exportAsynchronously(fileInfo: FileInfo) {
        // Use a fine timescale so a fractional end time (the last segment) is not truncated to whole seconds
        let startCMTime = CMTime(seconds: fileInfo.startTime, preferredTimescale: 600)
        let endCMTime = CMTime(seconds: fileInfo.endTime, preferredTimescale: 600)
        let exportTimeRange = CMTimeRangeFromTimeToTime(start: startCMTime, end: endCMTime)
        let asset = AVAsset(url: self.avAudioFile.url)
        guard let exporter = AVAssetExportSession(asset: asset, presetName: AVAssetExportPresetPassthrough) else {
            print("Could not create an export session")
            return
        }
        exporter.outputFileType = .m4a
        exporter.timeRange = exportTimeRange
        exporter.outputURL = fileInfo.url
        exporter.exportAsynchronously(completionHandler: {
            switch exporter.status {
            case .completed:
                // The handler may run on a background queue, so update shared state on the main queue
                DispatchQueue.main.async {
                    self.croppedFileCount += 1
                    if self.croppedFileInfos.count == self.croppedFileCount {
                        self.initalizeSpeechFramework()
                    }
                }
            case .failed, .cancelled:
                if let error = exporter.error {
                    print(error)
                }
            default:
                break
            }
        })
    }

    private func initalizeSpeechFramework() {
        let request = SFSpeechURLRecognitionRequest(url: self.croppedFileInfos[self.currentIndex].url)
        self.recognitionRequest = request
        // The initializer returns nil for unsupported locales
        let location = Locale.preferredLanguages
        guard let recognizer = SFSpeechRecognizer(locale: Locale(identifier: location[0])) else {
            print("Speech recognition is not supported for \(location[0])")
            return
        }
        self.speechRecognizer = recognizer
        self.recognitionTask = recognizer.recognitionTask(with: request, resultHandler: { (result: SFSpeechRecognitionResult?, error: Error?) -> Void in
            if let error = error {
                print(error)
            } else if let result = result {
                self.textView.text = self.speechText + result.bestTranscription.formattedString
                if result.isFinal {
                    self.finishOrRestartSpeechFramework()
                }
            }
        })
    }

    private func finishOrRestartSpeechFramework() {
        self.recognitionTask?.cancel()
        self.recognitionTask?.finish()
        self.speechText = self.textView.text
        self.currentIndex += 1
        if self.currentIndex < self.croppedFileInfos.count {
            self.initalizeSpeechFramework()
        }
    }

}
