AI Visual Inspection System for Developers

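Note: the development machine has no Japanese input method installed, so this document is mostly in English. I am leaving this note as a reminder.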

MMDetection 2.0.0 (it is listed as supporting PyTorch 2.0, and given what was already installed, I really wanted to use this version.)

Preface

Caution

- This document describes the procedure and the points developers should watch out for.

- This document is an internal release, for limited internal use only.

0. System information

This is the main system framework for object detection.

It provides a common platform for many object detection models.

Sample components

terminal

```
python tools/train.py configs/mask_rcnn/mask_cup.py --work-dir work_d
05/17 15:25:55 - mmengine - INFO -
------------------------------------------------------------
System environment:
    sys.platform: linux
    Python: 3.8.16 (default, Mar 2 2023, 03:21:46) [GCC 11.2.0]
    CUDA available: True
    numpy_random_seed: 63419852
    GPU 0: NVIDIA GeForce RTX 3090
    CUDA_HOME: /usr/local/cuda
    NVCC: Cuda compilation tools, release 11.8, V11.8.89
    GCC: gcc (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0
    PyTorch: 1.13.1+cu117
    PyTorch compiling details: PyTorch built with:
  - GCC 9.3
  - C++ Version: 201402
  - Intel(R) oneAPI Math Kernel Library Version 2023.1-Product Build 20230303 for Intel(R) 64 architecture applications
  - Intel(R) MKL-DNN v2.6.0 (Git Hash 52b5f107dd9cf10910aaa19cb47f3abf9b349815)
  - OpenMP 201511 (a.k.a. OpenMP 4.5)
  - LAPACK is enabled (usually provided by MKL)
  - NNPACK is enabled
  - CPU capability usage: AVX2
  - CUDA Runtime 11.7
  - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86
  - CuDNN 8.5
  - Magma 2.6.1
  - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.7, CUDNN_VERSION=8.5.0, CXX_COMPILER=/opt/rh/devtoolset-9/root/usr/bin/c++, CXX_FLAGS= -fabi-version=11 -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Werror=non-virtual-dtor -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wunused-local-typedefs -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.13.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF,
    TorchVision: 0.14.1+cu117
    OpenCV: 4.7.0
    MMEngine: 0.7.3

Runtime environment:
    cudnn_benchmark: False
    mp_cfg: {'mp_start_method': 'fork', 'opencv_num_threads': 0}
    dist_cfg: {'backend': 'nccl'}
    seed: None
    Distributed launcher: none
    Distributed training: False
    GPU number: 1
```
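Before training, it can help to confirm which package versions are actually installed, since the log above reports PyTorch 1.13.1 and MMEngine 0.7.3. The sketch below uses only the standard library (importlib.metadata, Python 3.8+). The distribution names it queries are assumptions; for example, OpenCV may be installed as opencv-python-headless rather than opencv-python.

```python
"""Print installed versions of the packages an MMDetection setup depends on."""
from importlib import metadata
from typing import Dict, Iterable, Optional

# PyPI distribution names (assumed; e.g. OpenCV may be opencv-python-headless).
PACKAGES = ("torch", "torchvision", "opencv-python", "mmengine", "mmcv", "mmdet")


def installed_versions(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Return {distribution name: version}, or None if it is not installed."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:15s} {version or 'NOT INSTALLED'}")
```

Running it inside the openmmlab environment should list the same PyTorch and MMEngine versions as the training log; a NOT INSTALLED line points at a missing dependency before any training starts.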

MMDetection folder tree

terminal

```
tree -d
.
├── checkpoints
├── configs
│   ├── albu_example
│   ├── atss
│   ├── autoassign
│   ├── _base_
│   │   ├── datasets
│   │   ├── models
│   │   └── schedules
│   ├── boxinst
│   ├── carafe
│   ├── cascade_rcnn
│   ├── cascade_rpn
│   ├── centernet
│   ├── centripetalnet
│   ├── cityscapes
│   ├── common
│   ├── condinst
│   ├── conditional_detr
│   ├── convnext
│   ├── cornernet
│   ├── crowddet
│   ├── dab_detr
│   ├── dcn
│   ├── dcnv2
│   ├── ddod
│   ├── deepfashion
│   ├── deformable_detr
│   ├── detectors
│   ├── detr
│   ├── dino
│   ├── double_heads
│   ├── dyhead
│   ├── dynamic_rcnn
│   ├── efficientnet
│   ├── empirical_attention
│   ├── faster_rcnn
│   ├── fast_rcnn
│   ├── fcos
│   ├── foveabox
│   ├── fpg
│   ├── free_anchor
│   ├── fsaf
│   ├── gcnet
│   ├── gfl
│   ├── ghm
│   ├── gn
│   ├── gn+ws
│   ├── grid_rcnn
│   ├── groie
│   ├── guided_anchoring
│   ├── hrnet
│   ├── htc
│   ├── instaboost
│   ├── lad
│   ├── ld
│   ├── legacy_1.x
│   ├── libra_rcnn
│   ├── lvis
│   ├── mask2former
│   ├── maskformer
│   ├── mask_rcnn
│   ├── misc
│   ├── ms_rcnn
│   ├── nas_fcos
│   ├── nas_fpn
│   ├── objects365
│   ├── openimages
│   ├── paa
│   ├── pafpn
│   ├── panoptic_fpn
│   ├── pascal_voc
│   ├── pisa
│   ├── point_rend
│   ├── pvt
│   ├── queryinst
│   ├── regnet
│   ├── reppoints
│   ├── res2net
│   ├── resnest
│   ├── resnet_strikes_back
│   ├── retinanet
│   ├── rpn
│   ├── rtmdet
│   │   └── classification
│   ├── sabl
│   ├── scnet
│   ├── scratch
│   ├── seesaw_loss
│   ├── selfsup_pretrain
│   ├── simple_copy_paste
│   ├── soft_teacher
│   ├── solo
│   ├── solov2
│   ├── sparse_rcnn
│   ├── ssd
│   ├── strong_baselines
│   ├── swin
│   ├── timm_example
│   ├── tood
│   ├── tridentnet
│   ├── vfnet
│   ├── wider_face
│   ├── yolact
│   ├── yolo
│   ├── yolof
│   └── yolox
├── data
│   └── cup
│       ├── test
│       ├── train
│       └── val
├── demo
├── docker
│   ├── serve
│   └── serve_cn
├── docs
│   ├── en
│   │   ├── advanced_guides
│   │   ├── migration
│   │   ├── notes
│   │   ├── _static
│   │   │   ├── css
│   │   │   └── image
│   │   └── user_guides
│   └── zh_cn
│       ├── advanced_guides
│       ├── migration
│       ├── notes
│       ├── _static
│       │   ├── css
│       │   └── image
│       └── user_guides
├── mmdet
│   ├── apis
│   │   └── __pycache__
│   ├── datasets
│   │   ├── api_wrappers
│   │   │   └── __pycache__
│   │   ├── __pycache__
│   │   ├── samplers
│   │   │   └── __pycache__
│   │   └── transforms
│   │       └── __pycache__
│   ├── engine
│   │   ├── hooks
│   │   ├── optimizers
│   │   ├── runner
│   │   └── schedulers
│   ├── evaluation
│   │   ├── functional
│   │   │   └── __pycache__
│   │   ├── metrics
│   │   │   └── __pycache__
│   │   └── __pycache__
│   ├── models
│   │   ├── backbones
│   │   ├── data_preprocessors
│   │   ├── dense_heads
│   │   ├── detectors
│   │   ├── layers
│   │   │   └── transformer
│   │   ├── losses
│   │   ├── necks
│   │   ├── roi_heads
│   │   │   ├── bbox_heads
│   │   │   ├── mask_heads
│   │   │   ├── roi_extractors
│   │   │   └── shared_heads
│   │   ├── seg_heads
│   │   │   └── panoptic_fusion_heads
│   │   ├── task_modules
│   │   │   ├── assigners
│   │   │   ├── coders
│   │   │   ├── prior_generators
│   │   │   └── samplers
│   │   ├── test_time_augs
│   │   └── utils
│   ├── __pycache__
│   ├── structures
│   │   ├── bbox
│   │   │   └── __pycache__
│   │   ├── mask
│   │   │   └── __pycache__
│   │   └── __pycache__
│   ├── testing
│   ├── utils
│   │   └── __pycache__
│   └── visualization
├── mmdet.egg-info
├── outputs
│   ├── preds
│   └── vis
├── projects
│   ├── ConvNeXt-V2
│   │   └── configs
│   ├── Detic
│   │   ├── configs
│   │   └── detic
│   ├── DiffusionDet
│   │   ├── configs
│   │   ├── diffusiondet
│   │   └── model_converters
│   ├── EfficientDet
│   │   ├── configs
│   │   │   └── tensorflow
│   │   └── efficientdet
│   │       └── tensorflow
│   │           └── api_wrappers
│   ├── example_project
│   │   ├── configs
│   │   └── dummy
│   ├── LabelStudio
│   │   └── backend_template
│   └── SparseInst
│       ├── configs
│       └── sparseinst
├── requirements
├── resources
├── tests
│   ├── data
│   │   ├── configs_mmtrack
│   │   ├── crowdhuman_dataset
│   │   ├── custom_dataset
│   │   │   └── images
│   │   ├── Objects365
│   │   ├── OpenImages
│   │   │   ├── annotations
│   │   │   └── challenge2019
│   │   ├── VOCdevkit
│   │   │   ├── VOC2007
│   │   │   │   ├── Annotations
│   │   │   │   ├── ImageSets
│   │   │   │   │   └── Main
│   │   │   │   └── JPEGImages
│   │   │   └── VOC2012
│   │   │       ├── Annotations
│   │   │       ├── ImageSets
│   │   │       │   └── Main
│   │   │       └── JPEGImages
│   │   └── WIDERFace
│   │       └── WIDER_train
│   │           ├── 0--Parade
│   │           └── Annotations
│   ├── test_apis
│   ├── test_datasets
│   │   ├── test_samplers
│   │   └── test_transforms
│   ├── test_engine
│   │   ├── test_hooks
│   │   ├── test_optimizers
│   │   ├── test_runner
│   │   └── test_schedulers
│   ├── test_evaluation
│   │   └── test_metrics
│   ├── test_models
│   │   ├── test_backbones
│   │   ├── test_data_preprocessors
│   │   ├── test_dense_heads
│   │   ├── test_detectors
│   │   ├── test_layers
│   │   ├── test_losses
│   │   ├── test_necks
│   │   ├── test_roi_heads
│   │   │   ├── test_bbox_heads
│   │   │   ├── test_mask_heads
│   │   │   └── test_roi_extractors
│   │   ├── test_seg_heads
│   │   ├── test_task_modules
│   │   │   ├── test_assigners
│   │   │   ├── test_coder
│   │   │   ├── test_prior_generators
│   │   │   └── test_samplers
│   │   ├── test_tta
│   │   └── test_utils
│   ├── test_structures
│   │   ├── test_bbox
│   │   └── test_mask
│   ├── test_utils
│   └── test_visualization
├── tools
│   ├── analysis_tools
│   ├── dataset_converters
│   ├── deployment
│   ├── misc
│   └── model_converters
├── tutorial_exps
└── work_d
    ├── 20230517_161312
    │   └── vis_data
    ├── 20230517_161820
    │   └── vis_data
    ├── 20230517_162327
    │   └── vis_data
    ├── 20230517_162445
    │   └── vis_data
    └── 20230517_162528
        └── vis_data

298 directories
(openmmlab) kanengi@askengi-MS-7B86:~/notebook/mmdetection$
```

1. Preparation

- The dataset was created with COCO Annotator.

- Creating the object detection data took about 5 hours.

- The dataset consists of good (valid) samples and defective samples at a ratio of 80 : 20.

- The platform is MMDetection, a PyTorch-based object detection toolbox that supports many detection models.
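A quick way to check what was exported from COCO Annotator is to count images and annotations in the JSON. This is a minimal sketch, not part of the original workflow: it assumes, hypothetically, that good samples are images without annotations and defective samples are images with at least one, so with_annotations : without_annotations should come out near 20 : 80 if that holds for this dataset.

```python
"""Summarize a COCO-format annotation file (sketch)."""
import json
from collections import Counter
from typing import Any, Dict


def load_coco(path: str) -> Dict[str, Any]:
    """Read a COCO-format JSON file, e.g. an export from COCO Annotator."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_coco(coco: Dict[str, Any]) -> Dict[str, int]:
    """Count images with and without at least one annotation."""
    annotated = {ann["image_id"] for ann in coco.get("annotations", [])}
    image_ids = [img["id"] for img in coco.get("images", [])]
    with_ann = sum(1 for image_id in image_ids if image_id in annotated)
    return {
        "images": len(image_ids),
        "with_annotations": with_ann,
        "without_annotations": len(image_ids) - with_ann,
    }


def annotations_per_category(coco: Dict[str, Any]) -> Dict[str, int]:
    """Count annotations per category name (falls back to the id if unnamed)."""
    names = {cat["id"]: cat["name"] for cat in coco.get("categories", [])}
    counts = Counter(ann["category_id"] for ann in coco.get("annotations", []))
    return {names.get(cid, str(cid)): n for cid, n in counts.items()}
```

Usage, with a placeholder path: summarize_coco(load_coco("data/cup/train/annotation_coco.json")). If good samples are labeled with their own category instead, annotations_per_category is the better check.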

2. Examples of failure

Referring to a non-existent folder and trying to train.

terminal

```
Traceback (most recent call last):
  File "tools/train.py", line 133, in <module>
    main()
  File "tools/train.py", line 122, in main
    runner = Runner.from_cfg(cfg)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/runner/runner.py", line 439, in from_cfg
    runner = cls(
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/runner/runner.py", line 406, in __init__
    self.model = self.build_model(model)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/runner/runner.py", line 813, in build_model
    model = MODELS.build(model)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/registry/registry.py", line 548, in build
    return self.build_func(cfg, *args, **kwargs, registry=self)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/registry/build_functions.py", line 250, in build_model_from_cfg
    return build_from_cfg(cfg, registry, default_args)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/registry/build_functions.py", line 144, in build_from_cfg
    raise type(e)(
TypeError: class `FasterRCNN` in mmdet/models/detectors/faster_rcnn.py: __init__() got an unexpected keyword argument 'bbox_head'
(openmmlab) kanengi@askengi-MS-7B86:~/notebook/mmdetection$ cd ..
```

- Cause: the actual data is segmentation data, but the program tried to train a bounding-box detector (FasterRCNN).

- Resolution: modify the code (config) for segmentation.
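The TypeError above comes from the model config rather than the data files: in MMDetection, two-stage detectors such as FasterRCNN and MaskRCNN are built from rpn_head and roi_head, and a top-level bbox_head is only accepted by single-stage detectors. The helper below is hypothetical (not an MMDetection API) and sketches a pre-flight check for that mismatch, and for mask annotations paired with a model that has no mask head.

```python
"""Pre-flight check of a detector `model=dict(...)` config (hypothetical helper)."""
from typing import Any, Dict, List

# Detector types that use the two-stage layout (rpn_head + roi_head).
# An assumed subset; extend it for the configs you use.
TWO_STAGE_TYPES = {"FasterRCNN", "MaskRCNN"}


def check_model_cfg(model: Dict[str, Any], needs_masks: bool) -> List[str]:
    """Return human-readable problems found in the model config."""
    problems: List[str] = []
    model_type = model.get("type")
    if model_type in TWO_STAGE_TYPES:
        # Two-stage detectors have no top-level bbox_head argument.
        if "bbox_head" in model:
            problems.append(
                f"{model_type} got a top-level 'bbox_head'; move it under 'roi_head'")
        if "roi_head" not in model:
            problems.append(f"{model_type} config has no 'roi_head'")
    # Segmentation (mask) annotations need a mask head to be trained.
    roi_head = model.get("roi_head") or {}
    if needs_masks and "mask_head" not in roi_head:
        problems.append("mask annotations but no 'roi_head.mask_head' (e.g. use MaskRCNN)")
    return problems
```

With MMEngine installed, a real config can be checked by passing cfg.model from mmengine.config.Config.fromfile("configs/mask_rcnn/mask_cup.py") with needs_masks=True; an empty list means neither problem was detected.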

Referring to out-of-scope folders

terminal

```
Traceback (most recent call last):
  File "tools/train.py", line 133, in <module>
    main()
  File "tools/train.py", line 129, in main
    runner.train()
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/runner/runner.py", line 1687, in train
    self._train_loop = self.build_train_loop(
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/runner/runner.py", line 1479, in build_train_loop
    loop = LOOPS.build(
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/registry/registry.py", line 548, in build
    return self.build_func(cfg, *args, **kwargs, registry=self)
  File "/home/kanengi/anaconda3/envs/openmmlab/lib/python3.8/site-packages/mmengine/registry/build_functions.py", line 144, in build_from_cfg
    raise type(e)(
FileNotFoundError: class `EpochBasedTrainLoop` in mmengine/runner/loops.py: class `CocoDataset` in mmdet/datasets/coco.py: [Errno 2] No such file or directory: 'data/coco/annotations/instances_train2017.json'
```

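Cause: the config appears to refer to old environment settings (the default COCO path data/coco/annotations/instances_train2017.json).

Resolution: replace the config with the one generated by train.py, save it to the config folder, and remove the extra folder specification.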
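A FileNotFoundError like the one above can be caught before the runner is built by checking the paths the config points at. A minimal sketch, assuming MMDetection 3.x behaviour where a relative ann_file and image prefix are joined onto data_root; the helper name is hypothetical and the data/cup file names in the usage note are placeholders.

```python
"""Check that the dataset paths a config refers to exist (hypothetical helper)."""
import os
from typing import List


def missing_paths(data_root: str, ann_file: str, img_prefix: str) -> List[str]:
    """Return the annotation file and/or image folder that do not exist.

    ann_file and img_prefix are joined onto data_root, mirroring how
    relative dataset paths are resolved in MMDetection 3.x configs.
    """
    ann_path = os.path.join(data_root, ann_file)
    img_path = os.path.join(data_root, img_prefix)
    missing = []
    if not os.path.isfile(ann_path):
        missing.append(ann_path)
    if not os.path.isdir(img_path):
        missing.append(img_path)
    return missing
```

For example, missing_paths("data/cup/", "train/annotation_coco.json", "train/") returns an empty list only when both the annotation file and the image folder exist; running it for train, val and test covers every dataloader.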

The author dismissed it outright as "just a syntax error!", so I made a few quick edits to the config.

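Points to note

- The JSON file must be in COCO format; otherwise even COCO Annotator reports that it cannot read it.

- Label Studio creates it perfectly, but it has its quirks and does not seem to suit me.

- COCO Annotator numbers its IDs continuously (!?), so I prepared a converter.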

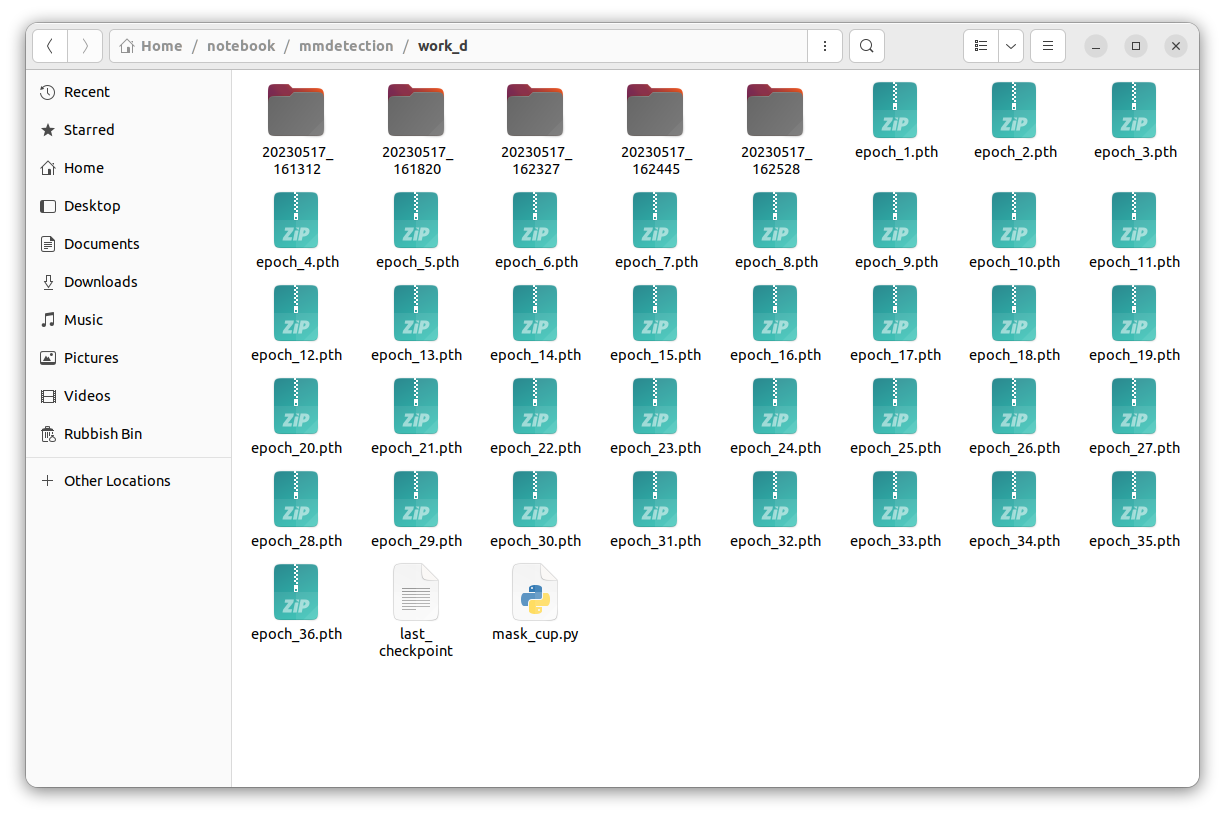
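The converter in the screenshot is not reproduced here. As a minimal sketch of the same idea, assuming the problem is IDs that continue from earlier exports instead of starting at 1, the code below checks for the top-level COCO keys, then renumbers image and annotation IDs consecutively and updates each annotation's image_id so it still points at the right image. Category IDs are left unchanged.

```python
"""Renumber image/annotation IDs in a COCO instance JSON (sketch)."""
import json
from typing import Any, Dict

# Top-level keys a COCO instance-annotation file is expected to have.
REQUIRED_KEYS = ("images", "annotations", "categories")


def check_coco(coco: Dict[str, Any]) -> None:
    """Raise ValueError if the dict does not look like a COCO instance file."""
    missing = [key for key in REQUIRED_KEYS if key not in coco]
    if missing:
        raise ValueError(f"not a COCO instance file, missing keys: {missing}")


def renumber_ids(coco: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy with image and annotation IDs renumbered from 1."""
    # Old image id -> new consecutive id, in file order.
    image_map = {img["id"]: new_id for new_id, img in enumerate(coco["images"], start=1)}
    images = [dict(img, id=image_map[img["id"]]) for img in coco["images"]]
    annotations = []
    for new_id, ann in enumerate(coco["annotations"], start=1):
        if ann["image_id"] not in image_map:
            raise ValueError(f"annotation {ann['id']} refers to unknown image {ann['image_id']}")
        # Every other field (segmentation, bbox, area, ...) is kept as-is.
        annotations.append(dict(ann, id=new_id, image_id=image_map[ann["image_id"]]))
    return dict(coco, images=images, annotations=annotations)


def convert_file(src: str, dst: str) -> None:
    """Read src, validate and renumber it, and write the result to dst."""
    with open(src, encoding="utf-8") as f:
        coco = json.load(f)
    check_coco(coco)
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(renumber_ids(coco), f, ensure_ascii=False, indent=2)
```

The input dict is not modified; renumber_ids builds new image and annotation dicts, so the original export can be kept for comparison.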

3. Output

- Generated Mask R-CNN model (.pth) files.

- Training runs for around 30-50 epochs.

- For inference and inspection, use the .pth file with the best detection score.

- After more than 10 epochs, accuracy reached 99.78%.
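To pick the best .pth file, the validation scores can be read back from the training log. A sketch under assumptions: MMEngine's local visualizer backend writes work_d/<timestamp>/vis_data/scalars.json with one JSON object per line, and validation entries carry a metric key such as coco/segm_mAP together with step (the epoch in epoch-based training). Check the exact key names in your own file; the function name is hypothetical.

```python
"""Find the best validation epoch in an MMEngine scalars.json log (sketch)."""
import json
from typing import Optional, Tuple


def best_epoch(path: str, metric: str = "coco/segm_mAP") -> Optional[Tuple[int, float]]:
    """Return (step, value) of the highest `metric`, or None if it never appears."""
    best: Optional[Tuple[int, float]] = None
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            record = json.loads(line)
            # Training-loss lines lack the metric key and are skipped.
            if metric in record:
                value = float(record[metric])
                if best is None or value > best[1]:
                    best = (int(record["step"]), value)
    return best
```

If the returned step is N, the matching checkpoint is work_d/epoch_N.pth, provided it was not removed by a max_keep_ckpts setting. Alternatively, MMEngine's CheckpointHook accepts a save_best option (for example save_best='coco/segm_mAP') so that the best checkpoint is kept automatically during training.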

Information

Due to the language settings, this page is written in English.

To be continued!

Thx