ビックデータ解析において分散処理フレームワークのSparkがある。SparkをPythonから使用する為のAPIとしてpysparkというものがある。今回はVMware上のUbuntuにpysparkを入れるまでの導入メモである。

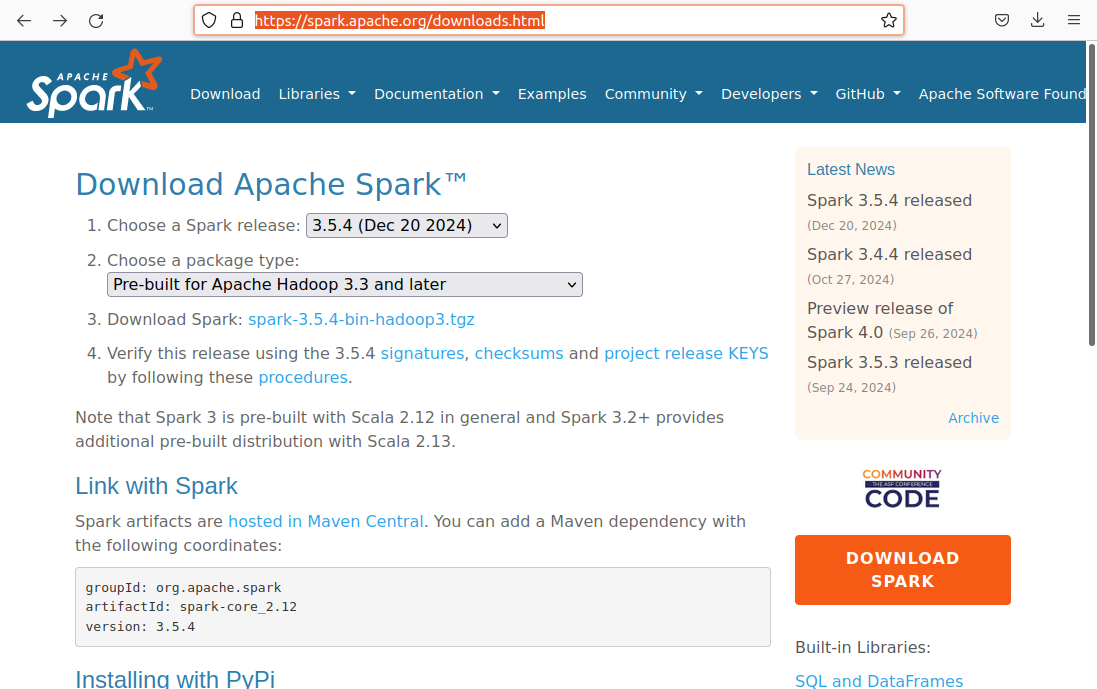

Sparkのダウンロード

Downloadからtgzファイルをローカルにダウンロードして取得する。

gunzipで解凍をして、tarも解凍する。

Javaのダウンロード

下記からJDKをdebをダウンロードしたのだが、下記の通りpysparkが起動しない。

https://www.oracle.com/java/technologies/downloads/#jdk23-linux

takuma@takuma-virtual-machine:~/Downloads/spark-3.5.4-bin-hadoop3/bin$ ./pyspark

Python 3.10.12 (main, Jan 17 2025, 14:35:34) [GCC 11.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

25/02/16 17:27:59 WARN Utils: Your hostname, takuma-virtual-machine resolves to a loopback address: 127.0.1.1; using 192.168.190.30 instead (on interface ens33)

25/02/16 17:28:00 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/02/16 17:28:00 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

25/02/16 17:28:00 WARN SparkContext: Another SparkContext is being constructed (or threw an exception in its constructor). This may indicate an error, since only one SparkContext should be running in this JVM (see SPARK-2243). The other SparkContext was created at:

org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

java.base/jdk.internal.reflect.DirectConstructorHandleAccessor.newInstance(DirectConstructorHandleAccessor.java:62)

java.base/java.lang.reflect.Constructor.newInstanceWithCaller(Constructor.java:501)

java.base/java.lang.reflect.Constructor.newInstance(Constructor.java:485)

py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:374)

py4j.Gateway.invoke(Gateway.java:238)

py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

py4j.ClientServerConnection.run(ClientServerConnection.java:106)

java.base/java.lang.Thread.run(Thread.java:1575)

/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/shell.py:74: UserWarning: Failed to initialize Spark session.

warnings.warn("Failed to initialize Spark session.")

Traceback (most recent call last):

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/shell.py", line 69, in <module>

spark = SparkSession._create_shell_session()

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/sql/session.py", line 1145, in _create_shell_session

return SparkSession._getActiveSessionOrCreate()

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/sql/session.py", line 1161, in _getActiveSessionOrCreate

spark = builder.getOrCreate()

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/sql/session.py", line 497, in getOrCreate

sc = SparkContext.getOrCreate(sparkConf)

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/context.py", line 515, in getOrCreate

SparkContext(conf=conf or SparkConf())

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/context.py", line 203, in __init__

self._do_init(

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/context.py", line 296, in _do_init

self._jsc = jsc or self._initialize_context(self._conf._jconf)

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/pyspark/context.py", line 421, in _initialize_context

return self._jvm.JavaSparkContext(jconf)

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/lib/py4j-0.10.9.7-src.zip/py4j/java_gateway.py", line 1587, in __call__

return_value = get_return_value(

File "/home/takuma/Downloads/spark-3.5.4-bin-hadoop3/python/lib/py4j-0.10.9.7-src.zip/py4j/protocol.py", line 326, in get_return_value

raise Py4JJavaError(

py4j.protocol.Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

: java.lang.UnsupportedOperationException: getSubject is supported only if a security manager is allowed

at java.base/javax.security.auth.Subject.getSubject(Subject.java:347)

at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:577)

at org.apache.spark.util.Utils$.$anonfun$getCurrentUserName$1(Utils.scala:2416)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2416)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:329)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at java.base/jdk.internal.reflect.DirectConstructorHandleAccessor.newInstance(DirectConstructorHandleAccessor.java:62)

at java.base/java.lang.reflect.Constructor.newInstanceWithCaller(Constructor.java:501)

at java.base/java.lang.reflect.Constructor.newInstance(Constructor.java:485)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:374)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.base/java.lang.Thread.run(Thread.java:1575)

JavaのJDKが新しすぎたようで、Sparkのバージョンにあるjdkを再度インストール。

takuma@takuma-virtual-machine:sudo apt install openjdk-17-jdk

javaのバージョンを確認する

takuma@takuma-virtual-machine:/usr/local$ java -version

openjdk version "17.0.14" 2025-01-21

OpenJDK Runtime Environment (build 17.0.14+7-Ubuntu-122.04.1)

OpenJDK 64-Bit Server VM (build 17.0.14+7-Ubuntu-122.04.1, mixed mode, sharing)

環境変数の諸々の設定

pysparkを起動する為に、環境変数$JAVA_HOMEの設定が必要なので、.bashrcに入れておく

echo 'export JAVA_HOME=/usr/lib/jvm/java-17-openjdk-amd64' >> ~/.bashrc

echo 'export PATH=$JAVA_HOME/bin:$PATH' >> ~/.bashrc

source ~/.bashrc

pysparkも/opt/spark配下にどうしておく。PATHも設定しておく

export JAVA_HOME=/usr/lib/jvm/java-17-openjdk-amd64

export PATH=$JAVA_HOME/bin:$PATH

export SPARK_HOME=/opt/spark

export PATH=$SPARK_HOME/bin:$PATH

export PYSPARK_PYTHON=python3

これでSparkを起動することができました。

takuma@takuma-virtual-machine:/usr/local$

takuma@takuma-virtual-machine:/usr/local$ pyspark

Python 3.10.12 (main, Jan 17 2025, 14:35:34) [GCC 11.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

25/02/16 18:19:45 WARN Utils: Your hostname, takuma-virtual-machine resolves to a loopback address: 127.0.1.1; using 192.168.190.30 instead (on interface ens33)

25/02/16 18:19:45 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/02/16 18:19:46 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.5.4

/_/

Using Python version 3.10.12 (main, Jan 17 2025 14:35:34)

Spark context Web UI available at http://192.168.190.30:4040

Spark context available as 'sc' (master = local[*], app id = local-1739697586660).

SparkSession available as 'spark'.

>>>

>>>