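Overview

I had the chance to work with a GPU server, so these are my notes from trying it out for deep learning.

This article only goes as far as running a test program.

- Checking the state of the GPU server

- Installing TensorFlow

- Testing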

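Why GPU?

Compared with a CPU, a GPU has many more cores, each of which is individually slower. That makes GPUs well suited to parallel computation.

Machine learning refers to algorithms that learn from analyzing large amounts of data in order to make judgments or predictions.

Deep learning is one approach to implementing machine learning.

Also known as hierarchical learning, it performs machine learning using a multi-layered structure loosely modeled on human neurons. Because the same simple calculation is repeated across many nodes, the work parallelizes easily, which makes GPUs a better fit than CPUs.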
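To make the parallelism concrete, here is a minimal pure-Python sketch of one fully connected layer. The names `node_output` and `dense_layer`, and the sample weights, are illustrative only and do not come from any library. The point is that each node computes a weighted sum of the same inputs and depends on no other node in the layer, so the per-node work could in principle be handed to separate cores.

```python
def node_output(weights_row, inputs, bias):
    """Weighted sum for a single node, followed by a ReLU activation."""
    total = sum(w * x for w, x in zip(weights_row, inputs)) + bias
    return max(0.0, total)


def dense_layer(weights, inputs, biases):
    """Compute every node of the layer.

    Each call to node_output is independent of the others, which is the
    property that lets a GPU evaluate many nodes at the same time.
    """
    return [node_output(row, inputs, b) for row, b in zip(weights, biases)]


# Illustrative values: 2 inputs feeding 3 nodes.
inputs = [1.0, 2.0]
weights = [[0.5, 0.5], [1.0, -1.0], [-1.0, 0.0]]
biases = [0.0, 0.0, 0.5]

outputs = dense_layer(weights, inputs, biases)
print(outputs)
```

This loop runs the nodes one after another; a GPU framework such as TensorFlow expresses the same computation as a matrix operation so the hardware can run the nodes concurrently.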

Checking the state of the GPU server

Environment

OS: Ubuntu 16.04.2 LTS (Xenial Xerus)

GPU: NVIDIA Corporation GK210GL [Tesla K80] (rev a1)

Driver: NVIDIA UNIX x86_64 Kernel Module 375.74

How to check

# cat /etc/os-release

NAME="Ubuntu"

VERSION="16.04.2 LTS (Xenial Xerus)"

# lspci | grep -i nvidia

07:00.0 3D controller: NVIDIA Corporation GK210GL [Tesla K80] (rev a1)

08:00.0 3D controller: NVIDIA Corporation GK210GL [Tesla K80] (rev a1)

# cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 375.74 Wed Jun 14 01:39:39 PDT 2017

GCC version: gcc version 5.4.0 20160609 (Ubuntu 5.4.0-6ubuntu1~16.04.4)
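If you want to pick the driver version out of that file from a script, a small sketch like the following may help. The function name `parse_driver_version` is hypothetical, and the regular expression assumes the line format shown above (`Kernel Module` followed by the version); other driver releases may format the line differently.

```python
import re


def parse_driver_version(text):
    """Return the NVIDIA kernel module version string, or None if absent."""
    match = re.search(r"Kernel Module\s+(\d+(?:\.\d+)+)", text)
    return match.group(1) if match else None


# Sample line copied from /proc/driver/nvidia/version on this server.
sample = (
    "NVRM version: NVIDIA UNIX x86_64 Kernel Module 375.74 "
    "Wed Jun 14 01:39:39 PDT 2017"
)
print(parse_driver_version(sample))
```

On a real machine you would pass in the contents of `/proc/driver/nvidia/version` instead of the sample string.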

Installing TensorFlow

I installed it following the official instructions, using the latest version (1.3).

Prerequisites

Using 1.3 requires the following environment.

- CUDA Toolkit 8.0

- The NVIDIA drivers associated with CUDA Toolkit 8.0

- cuDNN v6

- GPU card with CUDA Compute Capability 3.0 or higher

- The libcupti-dev library
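The checklist above can be sketched as a small comparison function. Everything here is illustrative: `check_prerequisites` and the constant names are made up for this example, and the sample values mirror what this server reports later in the article (CUDA 8.0.61, cuDNN major version 5, compute capability 3.7).

```python
# Requirements for the TensorFlow 1.3 GPU build, as listed above.
REQUIRED_CUDA = (8, 0)
REQUIRED_CUDNN_MAJOR = 6
MIN_COMPUTE_CAPABILITY = (3, 0)


def check_prerequisites(cuda_version, cudnn_major, compute_capability):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if tuple(cuda_version[:2]) != REQUIRED_CUDA:
        problems.append(
            "CUDA Toolkit %d.%d found, 8.0 required" % tuple(cuda_version[:2])
        )
    if cudnn_major != REQUIRED_CUDNN_MAJOR:
        problems.append("cuDNN v%d found, v6 required" % cudnn_major)
    if tuple(compute_capability) < MIN_COMPUTE_CAPABILITY:
        problems.append(
            "compute capability %d.%d is below 3.0" % tuple(compute_capability)
        )
    return problems


# Values as found on this server before any changes.
print(check_prerequisites((8, 0, 61), 5, (3, 7)))
```

The driver and libcupti-dev checks are left out because the list above does not give a concrete version number to compare against.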

CUDA and NVIDIA driver

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model for GPUs, developed by NVIDIA.

To check the CUDA Toolkit version:

# cat /usr/local/cuda/version.txt

CUDA Version 8.0.61

# /usr/local/cuda-8.0/bin/nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2016 NVIDIA Corporation

Built on Tue_Jan_10_13:22:03_CST_2017

Cuda compilation tools, release 8.0, V8.0.61

To check the NVIDIA driver version:

# cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 375.74 Wed Jun 14 01:39:39 PDT 2017

GCC version: gcc version 5.4.0 20160609 (Ubuntu 5.4.0-6ubuntu1~16.04.4)

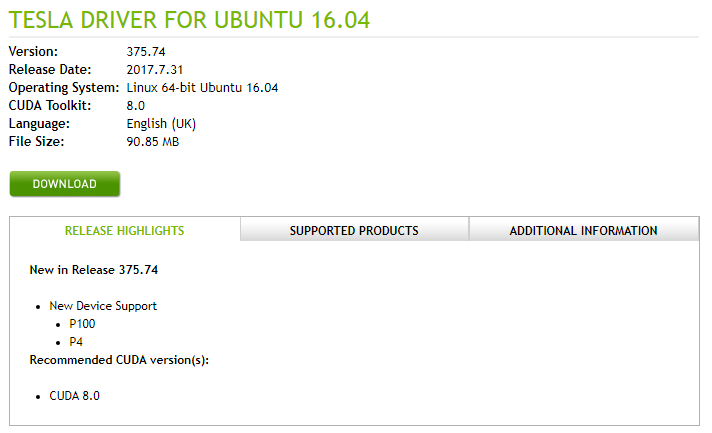

このバージョンが、Toolkit 8.0に関連づいたものかどうかの確認方法はよくわからないが、NVIDIAの公式に以下のような記載があるのでよしとする。

cuDNN

cuDNN (CUDA Deep Neural Network library) is a GPU-oriented library that provides the basic building blocks of deep neural networks.

To check the version:

# ls /usr/local/cuda/lib64/libcudnn*

/usr/local/cuda/lib64/libcudnn.so /usr/local/cuda/lib64/libcudnn.so.5.1.10

/usr/local/cuda/lib64/libcudnn.so.5 /usr/local/cuda/lib64/libcudnn_static.a

# cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR

# define CUDNN_MAJOR 5

# define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

# cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MINOR

# define CUDNN_MINOR 1

# define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

# cat /usr/local/cuda/include/cudnn.h | grep CUDNN_PATCH

# define CUDNN_PATCHLEVEL 10

# define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
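The three `grep` calls can be folded into one step. The sketch below reads the `#define` lines and applies the same formula that `cudnn.h` uses for `CUDNN_VERSION`. The function name `cudnn_version_from_header` is hypothetical, and the sample text is a trimmed stand-in for the real header.

```python
import re


def cudnn_version_from_header(header_text):
    """Extract (major, minor, patch) from the #define lines in cudnn.h."""
    parts = {}
    for name in ("MAJOR", "MINOR", "PATCHLEVEL"):
        pattern = r"#\s*define\s+CUDNN_%s\s+(\d+)" % name
        match = re.search(pattern, header_text)
        if match is None:
            raise ValueError("CUDNN_%s not found" % name)
        parts[name] = int(match.group(1))
    return parts["MAJOR"], parts["MINOR"], parts["PATCHLEVEL"]


# Trimmed stand-in for /usr/local/cuda/include/cudnn.h on this server.
sample_header = """
#define CUDNN_MAJOR 5
#define CUDNN_MINOR 1
#define CUDNN_PATCHLEVEL 10
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""

major, minor, patch = cudnn_version_from_header(sample_header)
# Same formula as the CUDNN_VERSION macro in the header.
version_number = major * 1000 + minor * 100 + patch
print(major, minor, patch, version_number)
```

For this server that gives cuDNN 5.1.10, which is below the v6 that TensorFlow 1.3 asks for.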

TensorFlow 1.3 requires v6, so I updated it.

Getting v6

https://developer.nvidia.com/rdp/cudnn-download

libcudnn6_6.0.21-1+cuda8.0_amd64.deb

libcudnn6-dev_6.0.21-1+cuda8.0_amd64.deb

libcudnn6-doc_6.0.21-1+cuda8.0_amd64.deb

Uninstalling v5.1

# cd /usr/local/cuda-8.0/lib64/

# ls libcudnn*

libcudnn.so libcudnn.so.5 libcudnn.so.5.1.10 libcudnn_static.a

# mkdir ~/backup

# mv libcudnn* ~/backup/

# cd /usr/local/cuda-8.0/include/

# ls cudnn*

cudnn.h

# mv cudnn* ~/backup/

# ldconfig

Installing v6

# dpkg -i libcudnn6_6.0.21-1+cuda8.0_amd64.deb

Selecting previously unselected package libcudnn6.

(Reading database ... 215725 files and directories currently installed.)

Preparing to unpack libcudnn6_6.0.21-1+cuda8.0_amd64.deb ...

Unpacking libcudnn6 (6.0.21-1+cuda8.0) ...

Setting up libcudnn6 (6.0.21-1+cuda8.0) ...

Processing triggers for libc-bin (2.23-0ubuntu9) ...

# dpkg -i libcudnn6-dev_6.0.21-1+cuda8.0_amd64.deb

Selecting previously unselected package libcudnn6-dev.

(Reading database ... 215731 files and directories currently installed.)

Preparing to unpack libcudnn6-dev_6.0.21-1+cuda8.0_amd64.deb ...

Unpacking libcudnn6-dev (6.0.21-1+cuda8.0) ...

Setting up libcudnn6-dev (6.0.21-1+cuda8.0) ...

update-alternatives: error: unable to read link '/etc/alternatives/libcudnn': Invalid argument

update-alternatives: error: no alternatives for libcudnn

dpkg: error processing package libcudnn6-dev (--install):

subprocess installed post-installation script returned error exit status 2

Errors were encountered while processing:

libcudnn6-dev

As shown above, the first attempt failed. Checking /etc/alternatives/libcudnn shows that it is an empty regular file, not a symlink to anything.

# ls -l /etc/alternatives/libcudnn

-rw-r--r-- 1 root root 0 Apr 5 11:22 /etc/alternatives/libcudnn

# cat /etc/alternatives/libcudnn

#
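The same diagnosis can be done from Python. This is a minimal sketch with a hypothetical helper, `describe_path`. It is demonstrated on throwaway files in a temporary directory rather than on the real `/etc/alternatives`, so the file names below are stand-ins.

```python
import os
import tempfile


def describe_path(path):
    """Classify a path the way `ls -l` would reveal it."""
    if os.path.islink(path):
        return "symlink -> " + os.readlink(path)
    if os.path.isdir(path):
        return "directory"
    if os.path.isfile(path):
        return "empty file" if os.path.getsize(path) == 0 else "file"
    return "missing"


# Throwaway directory standing in for /etc/alternatives.
workdir = tempfile.mkdtemp()

# The broken state: a zero-byte regular file where a symlink is expected.
broken = os.path.join(workdir, "libcudnn_broken")
open(broken, "w").close()

# The healthy state: a symlink pointing at a header file.
target = os.path.join(workdir, "cudnn_v6.h")
with open(target, "w") as handle:
    handle.write("// placeholder\n")
fixed = os.path.join(workdir, "libcudnn_fixed")
os.symlink(target, fixed)

print(describe_path(broken))
print(describe_path(fixed))
```

`update-alternatives` expects the entry to be a symlink, which is why the empty regular file made the post-installation script fail.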

Following a reference I found, I replaced the empty file with a symlink and retried.

# rm /etc/alternatives/libcudnn

# ln -s /usr/include/x86_64-linux-gnu/cudnn_v6.h /etc/alternatives/libcudnn

# ls -l /etc/alternatives/libcudnn

lrwxrwxrwx 1 root root 40 Sep 21 07:16 /etc/alternatives/libcudnn -> /usr/include/x86_64-linux-gnu/cudnn_v6.h

# dpkg -i libcudnn6-dev_6.0.21-1+cuda8.0_amd64.deb

(Reading database ... 215737 files and directories currently installed.)

Preparing to unpack libcudnn6-dev_6.0.21-1+cuda8.0_amd64.deb ...

Unpacking libcudnn6-dev (6.0.21-1+cuda8.0) over (6.0.21-1+cuda8.0) ...

Setting up libcudnn6-dev (6.0.21-1+cuda8.0) ...

update-alternatives: warning: /etc/alternatives/libcudnn has been changed (manually or by a script); switching to manual updates only

update-alternatives: warning: forcing reinstallation of alternative /usr/include/x86_64-linux-gnu/cudnn_v6.h because link group libcudnn is broken

# dpkg -l | grep cudnn

ii libcudnn5 5.1.10-1+cuda8.0 amd64 cuDNN runtime libraries

ii libcudnn6 6.0.21-1+cuda8.0 amd64 cuDNN runtime libraries

ii libcudnn6-dev 6.0.21-1+cuda8.0 amd64 cuDNN development libraries and headers

That looks fine, so on to the next package.

# dpkg -i libcudnn6-doc_6.0.21-1+cuda8.0_amd64.deb

Selecting previously unselected package libcudnn6-doc.

(Reading database ... 215737 files and directories currently installed.)

Preparing to unpack libcudnn6-doc_6.0.21-1+cuda8.0_amd64.deb ...

Unpacking libcudnn6-doc (6.0.21-1+cuda8.0) ...

Setting up libcudnn6-doc (6.0.21-1+cuda8.0) ...

# dpkg -l | grep cudnn

ii libcudnn5 5.1.10-1+cuda8.0 amd64 cuDNN runtime libraries

ii libcudnn6 6.0.21-1+cuda8.0 amd64 cuDNN runtime libraries

ii libcudnn6-dev 6.0.21-1+cuda8.0 amd64 cuDNN development libraries and headers

ii libcudnn6-doc 6.0.21-1+cuda8.0 amd64 cuDNN documents and samples

#
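If you want to check the installed cuDNN packages from a script, the `dpkg -l` rows can be parsed with a few lines of Python. The helper name `parse_dpkg_list` is hypothetical, and the sample text is the output shown above. Note that the `libcudnn5` package is still registered even though its files under `/usr/local/cuda` were moved aside by hand.

```python
def parse_dpkg_list(output):
    """Map package name -> version for installed ('ii') rows of `dpkg -l`."""
    packages = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[0] == "ii":
            packages[fields[1]] = fields[2]
    return packages


# Output of `dpkg -l | grep cudnn` on this server after the install.
sample = """\
ii libcudnn5 5.1.10-1+cuda8.0 amd64 cuDNN runtime libraries
ii libcudnn6 6.0.21-1+cuda8.0 amd64 cuDNN runtime libraries
ii libcudnn6-dev 6.0.21-1+cuda8.0 amd64 cuDNN development libraries and headers
ii libcudnn6-doc 6.0.21-1+cuda8.0 amd64 cuDNN documents and samples
"""

installed = parse_dpkg_list(sample)
for name in sorted(installed):
    print(name, installed[name])
```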

libcupti-dev library

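This was apparently already at the newest version, so I skipped it. apt-get also offered to remove a batch of old kernel images, so I answered n and aborted.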

root@R720GPUnode1:/home/kento# apt-get install libcupti-dev

Reading package lists... Done

Building dependency tree

Reading state information... Done

libcupti-dev is already the newest version (7.5.18-0ubuntu1).

The following packages will be REMOVED:

linux-image-4.4.0-72-generic linux-image-4.4.0-75-generic linux-image-4.4.0-78-generic linux-image-4.4.0-79-generic linux-image-4.4.0-81-generic

linux-image-4.4.0-83-generic linux-image-4.4.0-87-generic linux-image-extra-4.4.0-72-generic linux-image-extra-4.4.0-75-generic

linux-image-extra-4.4.0-78-generic linux-image-extra-4.4.0-79-generic linux-image-extra-4.4.0-81-generic linux-image-extra-4.4.0-83-generic

linux-image-extra-4.4.0-87-generic

0 upgraded, 0 newly installed, 14 to remove and 177 not upgraded.

19 not fully installed or removed.

After this operation, 1,533 MB disk space will be freed.

Do you want to continue? [Y/n] n

Abort.

Installing TensorFlow

Following the official instructions, I installed it inside a virtualenv.

# mkdir tensorflow

# virtualenv --system-site-packages tensorflow

New python executable in /root/tensorflow/bin/python

Installing setuptools, pip, wheel...done.

# source ~/tensorflow/bin/activate

(tensorflow) #

(tensorflow) # easy_install -U pip

Searching for pip

Reading https://pypi.python.org/simple/pip/

Downloading https://pypi.python.org/packages/11/b6/abcb525026a4be042b486df43905d6893fb04f05aac21c32c638e939e447/pip-9.0.1.tar.gz#md5=35f01da33009719497f01a4ba69d63c9

Best match: pip 9.0.1

(snip)

Finished processing dependencies for pip

(tensorflow) # pip install --upgrade tensorflow-gpu

Collecting tensorflow-gpu

(snip)

Successfully installed backports.weakref-1.0.post1 bleach-1.5.0 html5lib-0.9999999 markdown-2.6.9 mock-2.0.0 numpy-1.13.1 pbr-3.1.1 protobuf-3.4.0 six-1.11.0 tensorflow-gpu-1.3.0 tensorflow-tensorboard-0.1.6 werkzeug-0.12.2

Hello World

(tensorflow) # python

Python 2.7.12 (default, Nov 19 2016, 06:48:10)

[GCC 5.4.0 20160609] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>>

>>> # Python

... import tensorflow as tf

Traceback (most recent call last):

File "<stdin>", line 2, in <module>

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/__init__.py", line 24, in <module>

from tensorflow.python import *

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/python/__init__.py", line 49, in <module>

from tensorflow.python import pywrap_tensorflow

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/python/pywrap_tensorflow.py", line 52, in <module>

raise ImportError(msg)

ImportError: Traceback (most recent call last):

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/python/pywrap_tensorflow.py", line 41, in <module>

from tensorflow.python.pywrap_tensorflow_internal import *

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 28, in <module>

_pywrap_tensorflow_internal = swig_import_helper()

File "/root/tensorflow/lib/python2.7/site-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 24, in swig_import_helper

_mod = imp.load_module('_pywrap_tensorflow_internal', fp, pathname, description)

ImportError: libcusolver.so.8.0: cannot open shared object file: No such file or directory

Failed to load the native TensorFlow runtime.

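Following a reference I found, I confirmed that libcusolver.so.8.0 exists under /usr/local/cuda/lib64/ and added that directory to LD_LIBRARY_PATH in .bashrc.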

(tensorflow) # ls /usr/local/cuda/lib64/libcusolver

libcusolver.so libcusolver.so.8.0 libcusolver.so.8.0.61 libcusolver_static.a

(tensorflow) # diff .bashrc .bashrc.orig

103,106d102

<

< ### Add

< export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64/

<
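The effect of that change can be illustrated with a simplified model of the search. The helper `find_library` is hypothetical and only walks a colon-separated path string. The real dynamic loader also consults the ldconfig cache and default system directories, so treat this as a sketch of the `LD_LIBRARY_PATH` part only. A temporary directory stands in for `/usr/local/cuda/lib64`.

```python
import os
import tempfile


def find_library(filename, search_path):
    """Return the first directory in a colon-separated path holding filename."""
    for directory in search_path.split(":"):
        if directory and os.path.isfile(os.path.join(directory, filename)):
            return directory
    return None


# Throwaway directory standing in for /usr/local/cuda/lib64.
fake_cuda_lib = tempfile.mkdtemp()
open(os.path.join(fake_cuda_lib, "libcusolver.so.8.0"), "w").close()

# Before the .bashrc change the CUDA directory is not on the path.
path_before = "/nonexistent/lib"
# After the change it is appended, as in the export line above.
path_after = path_before + ":" + fake_cuda_lib

print(find_library("libcusolver.so.8.0", path_before))
print(find_library("libcusolver.so.8.0", path_after))
```

The first lookup finds nothing, matching the `cannot open shared object file` error, and the second succeeds once the directory is on the path.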

Trying once more, with the new LD_LIBRARY_PATH in effect:

(tensorflow) root@R720GPUnode1:~# python

Python 2.7.12 (default, Nov 19 2016, 06:48:10)

[GCC 5.4.0 20160609] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

>>> hello = tf.constant('Hello, TensorFlow!')

>>> sess = tf.Session()

2017-09-21 07:49:55.316516: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-21 07:49:55.317927: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-21 07:49:55.318219: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-09-21 07:50:08.701313: I tensorflow/core/common_runtime/gpu/gpu_device.cc:955] Found device 0 with properties:

name: Tesla K80

major: 3 minor: 7 memoryClockRate (GHz) 0.8235

pciBusID 0000:07:00.0

Total memory: 11.17GiB

Free memory: 11.11GiB

2017-09-21 07:50:09.471663: W tensorflow/stream_executor/cuda/cuda_driver.cc:523] A non-primary context 0x3f17010 exists before initializing the StreamExecutor. We haven't verified StreamExecutor works with that.

2017-09-21 07:50:09.473081: I tensorflow/core/common_runtime/gpu/gpu_device.cc:955] Found device 1 with properties:

name: Tesla K80

major: 3 minor: 7 memoryClockRate (GHz) 0.8235

pciBusID 0000:08:00.0

Total memory: 11.17GiB

Free memory: 11.11GiB

2017-09-21 07:50:09.474019: I tensorflow/core/common_runtime/gpu/gpu_device.cc:976] DMA: 0 1

2017-09-21 07:50:09.474053: I tensorflow/core/common_runtime/gpu/gpu_device.cc:986] 0: Y Y

2017-09-21 07:50:09.474064: I tensorflow/core/common_runtime/gpu/gpu_device.cc:986] 1: Y Y

2017-09-21 07:50:09.474093: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1045] Creating TensorFlow device (/gpu:0) -> (device: 0, name: Tesla K80, pci bus id: 0000:07:00.0)

2017-09-21 07:50:09.474110: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1045] Creating TensorFlow device (/gpu:1) -> (device: 1, name: Tesla K80, pci bus id: 0000:08:00.0)

>>> print(sess.run(hello))

Hello, TensorFlow!

This server has two GPUs, and the log above shows that TensorFlow recognizes both of them as devices (/gpu:0 and /gpu:1).
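To confirm this from a saved log instead of reading it by eye, the `Creating TensorFlow device` lines can be extracted with a regular expression. This is a sketch that assumes the log format of TensorFlow 1.3 shown above; `list_created_devices` is a hypothetical helper, and other TensorFlow versions may word these lines differently.

```python
import re

# Matches lines such as:
#   Creating TensorFlow device (/gpu:0) -> (device: 0, name: Tesla K80, pci bus id: 0000:07:00.0)
DEVICE_LINE = re.compile(
    r"Creating TensorFlow device \((/gpu:\d+)\) -> "
    r"\(device: (\d+), name: ([^,]+), pci bus id: ([0-9a-fA-F:.]+)\)"
)


def list_created_devices(log_text):
    """Return (tf_name, index, gpu_name, pci_bus_id) tuples found in the log."""
    return [match.groups() for match in DEVICE_LINE.finditer(log_text)]


# The two relevant lines from the session log above.
sample_log = """\
2017-09-21 07:50:09.474093: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1045] Creating TensorFlow device (/gpu:0) -> (device: 0, name: Tesla K80, pci bus id: 0000:07:00.0)
2017-09-21 07:50:09.474110: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1045] Creating TensorFlow device (/gpu:1) -> (device: 1, name: Tesla K80, pci bus id: 0000:08:00.0)
"""

devices = list_created_devices(sample_log)
for tf_name, index, gpu_name, bus_id in devices:
    print(tf_name, gpu_name, bus_id)
```

The PCI bus IDs match the two entries reported by `lspci` earlier (07:00.0 and 08:00.0).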