Introduction

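I wondered how SwiftUI and AVFoundation could be combined.

In particular, I suspected that turning AVFoundation into an ObservableObject might make it possible to do things like face detection without ever displaying the camera image.

So, as a first step, I built one.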

Required files

Create the following files with File > New > File... and add them to the project.

| Type | File name | Description |
|---|---|---|
| SwiftUI View | CALayerView | View that hosts a CALayer |
| Swift File | AVFoundationVM | Wraps AVFoundation as an ObservableObject |

How to build it

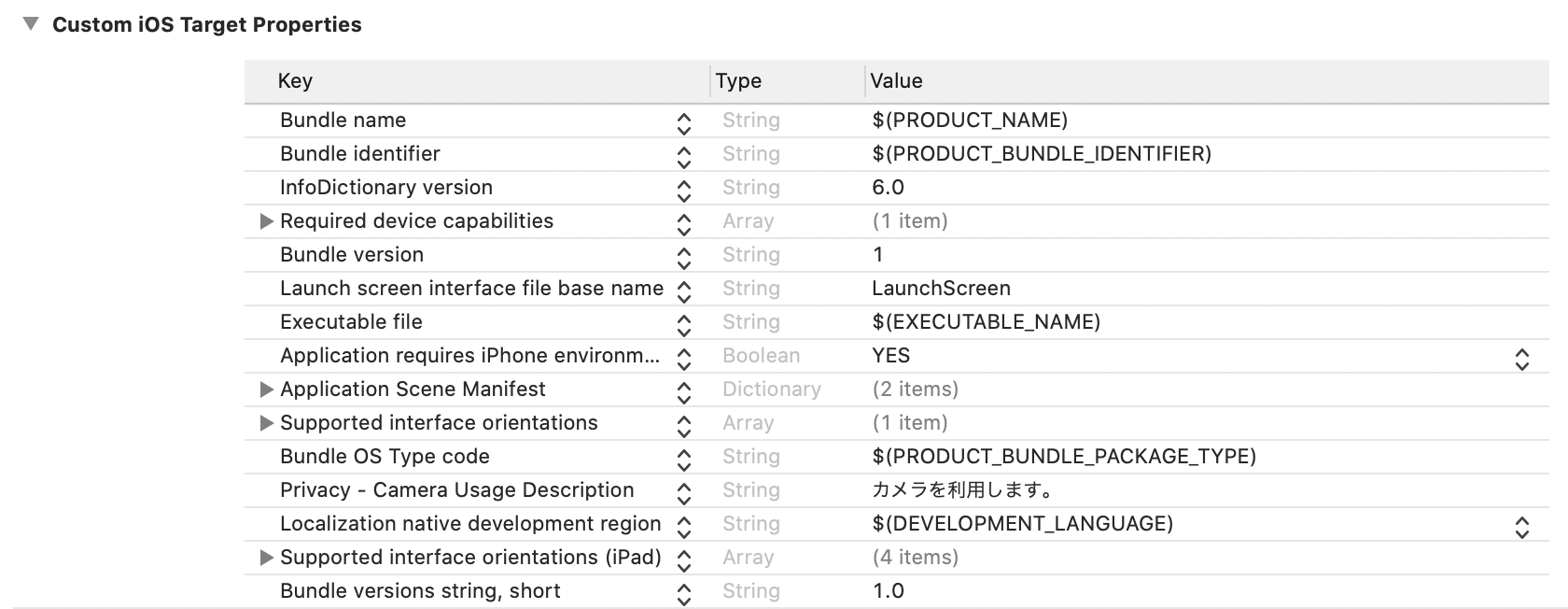

Step 1. Camera access permission

Add the Privacy - Camera Usage Description key to Info.plist and describe what the camera is used for.

In this example, I set it to "This app uses the camera."
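"Privacy - Camera Usage Description" is how Xcode's property-list editor displays the raw key `NSCameraUsageDescription`. On iOS, an app that accesses the camera without this key is terminated by the system. As a minimal sketch, the hypothetical helper below (`hasCameraUsageDescription` is my own name, not an Apple API) checks an Info.plist dictionary for a non-empty description. In the app you would pass `Bundle.main.infoDictionary`.

```swift
import Foundation

/// Raw Info.plist key behind Xcode's "Privacy - Camera Usage Description" label.
let cameraUsageKey = "NSCameraUsageDescription"

/// Hypothetical debug helper: true when the dictionary contains a
/// camera usage description that is not empty or whitespace-only.
func hasCameraUsageDescription(_ info: [String: Any]?) -> Bool {
    guard let text = info?[cameraUsageKey] as? String else { return false }
    return !text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty
}

// In the app: hasCameraUsageDescription(Bundle.main.infoDictionary)
let sample: [String: Any] = [cameraUsageKey: "This app uses the camera."]
print(hasCameraUsageDescription(sample))  // true
print(hasCameraUsageDescription([:]))     // false
```

A check like this could run in a debug build at launch, so a missing key surfaces as a clear message rather than a termination on first camera access.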

Step 2. Turn AVFoundation into an ObservableObject

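Creating an instance initializes AVFoundation. Calling startSession() and endSession() starts and stops the camera, and calling takePhoto() stores the next captured frame in image.

Because image is marked @Published, any SwiftUI view observing this object is updated when it is set, so it can be used directly to display the photo.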
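takePhoto() does not capture anything by itself. It only sets `_takePhoto`, and the next `captureOutput(_:didOutput:from:)` call on the capture queue consumes the flag and converts that frame. Because the flag is written on the main thread and read on the capture queue, it should ideally be synchronized. Here is a minimal sketch of a lock-protected one-shot flag. `OneShotFlag`, `raise()`, and `consume()` are hypothetical names of my own, not AVFoundation APIs.

```swift
import Foundation

/// Hypothetical lock-protected one-shot flag: raise() may be called from any
/// thread, and consume() returns true exactly once per raise.
final class OneShotFlag {
    private let lock = NSLock()
    private var raised = false

    /// Called from the UI side, e.g. inside takePhoto().
    func raise() {
        lock.lock()
        raised = true
        lock.unlock()
    }

    /// Called on the capture queue for every frame; reads and clears atomically.
    func consume() -> Bool {
        lock.lock()
        defer { lock.unlock() }
        let wasRaised = raised
        raised = false
        return wasRaised
    }
}

let flag = OneShotFlag()
flag.raise()
print(flag.consume())  // true: this frame would be converted
print(flag.consume())  // false: later frames are ignored
```

To use it, takePhoto() would call `raise()`, and captureOutput would start with `guard flag.consume() else { return }`.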

```swift
import UIKit
import Combine
import CoreImage
import AVFoundation

class AVFoundationVM: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate, ObservableObject {
    /// Captured photo
    @Published var image: UIImage?
    /// Layer used for the live preview
    var previewLayer: CALayer!
    /// Set by takePhoto(); the next video frame is converted, then the flag is cleared
    private var _takePhoto: Bool = false
    /// Capture session
    private let captureSession = AVCaptureSession()
    /// Capture device (nil when no back camera is available, e.g. in the Simulator)
    private var captureDevice: AVCaptureDevice?
    /// Serial queue for startRunning()/stopRunning(), which block the calling thread
    private let sessionQueue = DispatchQueue(label: "FromF.github.com.AVFoundationSwiftUI.session")
    /// Reused for every conversion instead of creating a new context per frame
    private let ciContext = CIContext()

    override init() {
        super.init()
        prepareCamera()
        beginSession()
    }

    func takePhoto() {
        _takePhoto = true
    }

    private func prepareCamera() {
        captureSession.sessionPreset = .photo
        // Pick the first back-facing wide-angle camera, if there is one
        captureDevice = AVCaptureDevice.DiscoverySession(deviceTypes: [.builtInWideAngleCamera],
                                                         mediaType: .video,
                                                         position: .back).devices.first
    }

    private func beginSession() {
        // Batch all configuration changes; commitConfiguration() needs a matching begin
        captureSession.beginConfiguration()

        if let device = captureDevice {
            do {
                let captureDeviceInput = try AVCaptureDeviceInput(device: device)
                if captureSession.canAddInput(captureDeviceInput) {
                    captureSession.addInput(captureDeviceInput)
                }
            } catch {
                print(error.localizedDescription)
            }
        }

        let dataOutput = AVCaptureVideoDataOutput()
        dataOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
        if captureSession.canAddOutput(dataOutput) {
            captureSession.addOutput(dataOutput)
        }
        captureSession.commitConfiguration()

        previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)

        // Frames are delivered to captureOutput(_:didOutput:from:) on this serial queue
        let queue = DispatchQueue(label: "FromF.github.com.AVFoundationSwiftUI.AVFoundation")
        dataOutput.setSampleBufferDelegate(self, queue: queue)
    }

    func startSession() {
        sessionQueue.async {
            if self.captureSession.isRunning { return }
            self.captureSession.startRunning()
        }
    }

    func endSession() {
        sessionQueue.async {
            if !self.captureSession.isRunning { return }
            self.captureSession.stopRunning()
        }
    }

    // MARK: - AVCaptureVideoDataOutputSampleBufferDelegate

    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        // Only convert the frame that follows a takePhoto() call
        guard _takePhoto else { return }
        _takePhoto = false
        if let image = getImageFromSampleBuffer(buffer: sampleBuffer) {
            // Publish on the main thread so SwiftUI updates safely
            DispatchQueue.main.async {
                self.image = image
            }
        }
    }

    private func getImageFromSampleBuffer(buffer: CMSampleBuffer) -> UIImage? {
        guard let pixelBuffer = CMSampleBufferGetImageBuffer(buffer) else { return nil }
        let ciImage = CIImage(cvPixelBuffer: pixelBuffer)
        let imageRect = CGRect(x: 0, y: 0,
                               width: CVPixelBufferGetWidth(pixelBuffer),
                               height: CVPixelBufferGetHeight(pixelBuffer))
        guard let cgImage = ciContext.createCGImage(ciImage, from: imageRect) else { return nil }
        // Back-camera frames arrive in landscape; .right shows them upright in portrait
        return UIImage(cgImage: cgImage, scale: UIScreen.main.scale, orientation: .right)
    }
}
```
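getImageFromSampleBuffer wraps the CGImage with `orientation: .right` because, by default, the back camera delivers frames in landscape. `.right` tells UIKit to rotate the pixels a quarter turn for display. As a result, the UIImage's `size` has width and height swapped and is divided by `scale`. The pure-Swift sketch below reproduces that arithmetic. `displayedPointSize` is a hypothetical helper, not a UIKit API, and the 1920x1080 input is just an example value.

```swift
import Foundation

/// Hypothetical helper mirroring UIImage's size rule: for a quarter-turn
/// orientation such as .right, width and height swap, and the pixel
/// dimensions are divided by the image scale to give points.
func displayedPointSize(pixelWidth: Int, pixelHeight: Int,
                        scale: Double, quarterTurn: Bool) -> (width: Double, height: Double) {
    let w = Double(pixelWidth) / scale
    let h = Double(pixelHeight) / scale
    return quarterTurn ? (width: h, height: w) : (width: w, height: h)
}

// Example: a 1920x1080 landscape buffer shown with .right on a 3x screen
let size = displayedPointSize(pixelWidth: 1920, pixelHeight: 1080, scale: 3, quarterTurn: true)
print(size.width, size.height)  // 360.0 640.0
```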

Step 3. Make a CALayer usable from SwiftUI

Prepare a view that can display AVFoundation's live camera preview.

```swift
import SwiftUI

struct CALayerView: UIViewControllerRepresentable {
    var caLayer: CALayer

    func makeUIViewController(context: UIViewControllerRepresentableContext<CALayerView>) -> UIViewController {
        let viewController = UIViewController()
        viewController.view.layer.addSublayer(caLayer)
        // A sublayer's frame is in its superlayer's coordinates, so use bounds, not frame
        caLayer.frame = viewController.view.bounds
        return viewController
    }

    func updateUIViewController(_ uiViewController: UIViewController, context: UIViewControllerRepresentableContext<CALayerView>) {
        // Resize the layer to the hosting view whenever SwiftUI updates it
        caLayer.frame = uiViewController.view.bounds
    }
}
```
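A sublayer's `frame` is expressed in its superlayer's coordinate space. The preview layer should therefore be sized from the host view's `bounds`, whose origin is normally zero, rather than its `frame`. The `frame` is in the parent view's coordinates and can have a non-zero origin, for example when the view sits below a safe-area inset. The sketch below shows the geometry with plain `CGRect` values. `sublayerFrame(filling:)` is a hypothetical helper, and the rectangle values are made up.

```swift
import Foundation

/// Hypothetical helper: the frame that makes a sublayer exactly cover its host,
/// i.e. the host's bounds, assuming the usual zero bounds origin.
func sublayerFrame(filling hostFrame: CGRect) -> CGRect {
    CGRect(origin: .zero, size: hostFrame.size)
}

// A host view placed 47 pt below the top of its parent
let hostFrame = CGRect(x: 0, y: 47, width: 390, height: 700)
let fill = sublayerFrame(filling: hostFrame)
print(fill.origin.y, fill.size.height)  // 0.0 700.0
```

Using the host frame directly would push the preview 47 pt down inside its own view. Also, updateUIViewController is not guaranteed to run on every layout change. A common alternative is a UIView subclass that resizes the layer in `layoutSubviews()`.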

Step 4. Finally, build the SwiftUI view

In this example, pressing the shutter button shows the captured photo.

```swift
import SwiftUI

struct ContentView: View {
    // @StateObject (iOS 14+) keeps a single AVFoundationVM for the view's lifetime
    @StateObject private var avFoundationVM = AVFoundationVM()

    var body: some View {
        VStack {
            if avFoundationVM.image == nil {
                // Live preview with a shutter button
                Spacer()
                ZStack(alignment: .bottom) {
                    CALayerView(caLayer: avFoundationVM.previewLayer)
                    Button(action: {
                        self.avFoundationVM.takePhoto()
                    }) {
                        Image(systemName: "camera.circle.fill")
                            .renderingMode(.original)
                            .resizable()
                            .frame(width: 80, height: 80, alignment: .center)
                    }
                    .padding(.bottom, 100.0)
                }.onAppear {
                    self.avFoundationVM.startSession()
                }.onDisappear {
                    self.avFoundationVM.endSession()
                }
                Spacer()
            } else {
                // Captured photo with a close button that returns to the preview
                ZStack(alignment: .topLeading) {
                    VStack {
                        Spacer()
                        Image(uiImage: avFoundationVM.image!)
                            .resizable()
                            .aspectRatio(contentMode: .fit)
                        Spacer()
                    }
                    Button(action: {
                        self.avFoundationVM.image = nil
                    }) {
                        Image(systemName: "xmark.circle.fill")
                            .renderingMode(.original)
                            .resizable()
                            .frame(width: 30, height: 30, alignment: .center)
                            .foregroundColor(.white)
                            .background(Color.gray)
                    }
                    .frame(width: 80, height: 80, alignment: .center)
                }
            }
        }
    }
}

struct ContentView_Previews: PreviewProvider {
    static var previews: some View {
        Group {
            ContentView()
        }
    }
}
```