Introduction

ML Kit for Firebase is a machine learning toolkit made by Google for Android and iOS (still in beta at the time of writing). It provides the following pre-trained on-device APIs:

- Text recognition

- Face detection

- Barcode scanning

- Image labeling

- Object detection & tracking

- Language identification

- Translation

- Smart reply generator (English only)

and the following cloud-based APIs:

- Text recognition

- Image labeling

- Landmark recognition

Android dependency

In app/build.gradle, add:

android {
    // ...
    // Keep TensorFlow Lite model files (.tflite) uncompressed in the APK
    aaptOptions {
        noCompress "tflite"
    }
}

dependencies {
    // ...
    // ML Kit Vision (base library)
    implementation 'com.google.firebase:firebase-ml-vision:24.0.1'
    // Face detection (contours)
    implementation 'com.google.firebase:firebase-ml-vision-face-model:19.0.0'
    // Barcode scanning
    implementation 'com.google.firebase:firebase-ml-vision-barcode-model:16.0.1'
    // Image labeling
    implementation 'com.google.firebase:firebase-ml-vision-image-label-model:19.0.0'
    // Object detection
    implementation 'com.google.firebase:firebase-ml-vision-object-detection-model:19.0.3'
    // ML Kit Natural Language (base library)
    implementation 'com.google.firebase:firebase-ml-natural-language:22.0.0'
    // Language identification
    implementation 'com.google.firebase:firebase-ml-natural-language-language-id-model:20.0.7'
    // Translation
    implementation 'com.google.firebase:firebase-ml-natural-language-translate-model:20.0.7'
    // Smart replies
    implementation 'com.google.firebase:firebase-ml-natural-language-smart-reply-model:20.0.7'
}

apply plugin: 'com.google.gms.google-services'

To load your own custom model trained with AutoML Vision Edge, you also need to add:

implementation 'com.google.firebase:firebase-ml-vision-automl:18.0.3'

Text recognition

// Create a FirebaseVisionImage object (here from a file URI).
// fromFilePath() throws IOException, so call it inside a try/catch.
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Create an instance of FirebaseVisionTextRecognizer with the on-device model
FirebaseVisionTextRecognizer detector = FirebaseVision.getInstance().getOnDeviceTextRecognizer();

// Or with the cloud model
// FirebaseVisionTextRecognizer detector = FirebaseVision.getInstance().getCloudTextRecognizer();

// Process the image
Task<FirebaseVisionText> result =
        detector.processImage(image)
                .addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {
                    @Override
                    public void onSuccess(FirebaseVisionText firebaseVisionText) {
                        // Task completed successfully
                        // ...
                    }
                })
                .addOnFailureListener(
                        new OnFailureListener() {
                            @Override
                            public void onFailure(@NonNull Exception e) {
                                // Task failed with an exception
                                // ...
                            }
                        });

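FirebaseVisionText contains the recognized text, organized into text blocks (roughly paragraphs), lines and elements; each of these exposes its text, bounding box, recognized languages and confidence score.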
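On Android, the result is walked with getTextBlocks(), then getLines() on each block and getElements() on each line. To show that traversal in a form that runs on a plain JVM, the sketch below mirrors the hierarchy with hypothetical records (TextElement, TextLine and TextBlock are illustrative stand-ins, not ML Kit classes) and flattens it into one string per line, plus an element count.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for FirebaseVisionText.Element / Line / TextBlock,
// so the traversal can run without Android or Firebase on the classpath.
record TextElement(String text) {}
record TextLine(String text, List<TextElement> elements) {}
record TextBlock(String text, List<TextLine> lines) {}

final class TextWalker {
    private TextWalker() {}

    // Returns every recognized line, in block order, then line order.
    static List<String> lines(List<TextBlock> blocks) {
        List<String> out = new ArrayList<>();
        for (TextBlock block : blocks) {
            for (TextLine line : block.lines()) {
                out.add(line.text());
            }
        }
        return out;
    }

    // Counts elements (roughly words) across all blocks and lines.
    static int elementCount(List<TextBlock> blocks) {
        int count = 0;
        for (TextBlock block : blocks) {
            for (TextLine line : block.lines()) {
                count += line.elements().size();
            }
        }
        return count;
    }
}
```

With the real API, the same loops would iterate over firebaseVisionText.getTextBlocks() inside onSuccess().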

Face detection

Face detection runs on-device only. Optionally, it can also return facial contours.

// Create a FirebaseVisionImage object (here from a file URI)
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Set the options
FirebaseVisionFaceDetectorOptions options =
        new FirebaseVisionFaceDetectorOptions.Builder()
                .setPerformanceMode(FirebaseVisionFaceDetectorOptions.ACCURATE)
                .setClassificationMode(FirebaseVisionFaceDetectorOptions.ALL_CLASSIFICATIONS)
                .setLandmarkMode(FirebaseVisionFaceDetectorOptions.ALL_LANDMARKS)
                .setContourMode(FirebaseVisionFaceDetectorOptions.ALL_CONTOURS)
                .build();

// Create an instance of FirebaseVisionFaceDetector
FirebaseVisionFaceDetector detector = FirebaseVision.getInstance().getVisionFaceDetector(options);

// Process the image
Task<List<FirebaseVisionFace>> result =
        detector.detectInImage(image)
                .addOnSuccessListener(
                        new OnSuccessListener<List<FirebaseVisionFace>>() {
                            @Override
                            public void onSuccess(List<FirebaseVisionFace> faces) {
                                // Task completed successfully
                                // ...
                            }
                        })
                .addOnFailureListener(
                        new OnFailureListener() {
                            @Override
                            public void onFailure(@NonNull Exception e) {
                                // Task failed with an exception
                                // ...
                            }
                        });

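Each FirebaseVisionFace in the result contains the face bounds, the head rotation, the coordinates of facial landmarks (eyes, ears, mouth, nose...) and classification probabilities (smiling, left eye open, right eye open).

When processing a video stream, you can also get a tracking ID for each face, provided tracking is enabled in the options (enableTracking()).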
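The classification probabilities are plain floats between 0 and 1, so turning them into decisions is up to your app. A minimal sketch, assuming you read the values with getSmilingProbability(), getLeftEyeOpenProbability() and getRightEyeOpenProbability(), and that a probability that was not computed is reported as FirebaseVisionFace.UNCOMPUTED_PROBABILITY (mirrored here as -1, an assumption). The FaceSummary class and its 0.7 threshold are hypothetical choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;

final class FaceSummary {
    // Mirrors FirebaseVisionFace.UNCOMPUTED_PROBABILITY; the value -1 is assumed here.
    static final float UNCOMPUTED = -1f;
    // Illustrative decision threshold; tune it for your own use case.
    static final float THRESHOLD = 0.7f;

    private FaceSummary() {}

    // Turns the three classification probabilities into readable tags,
    // ignoring any probability that was not computed.
    static List<String> tags(float smiling, float leftEyeOpen, float rightEyeOpen) {
        List<String> tags = new ArrayList<>();
        if (smiling != UNCOMPUTED && smiling >= THRESHOLD) {
            tags.add("smiling");
        }
        // Both eyes must have a computed, low "open" probability.
        if (leftEyeOpen != UNCOMPUTED && rightEyeOpen != UNCOMPUTED
                && leftEyeOpen < 1 - THRESHOLD && rightEyeOpen < 1 - THRESHOLD) {
            tags.add("eyes closed");
        }
        return tags;
    }
}
```

These probabilities are only filled in when the detector is built with ALL_CLASSIFICATIONS, as in the options above.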

Barcode scanning

Many formats are supported:

Code 128, Code 39, Code 93, Codabar, EAN-13, EAN-8, ITF, UPC-A, UPC-E, QR Code, PDF417, Aztec, Data Matrix.

// Create a FirebaseVisionImage object (here from a file URI)
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Set the options: only look for QR codes and Aztec codes
FirebaseVisionBarcodeDetectorOptions options =
        new FirebaseVisionBarcodeDetectorOptions.Builder()
                .setBarcodeFormats(
                        FirebaseVisionBarcode.FORMAT_QR_CODE,
                        FirebaseVisionBarcode.FORMAT_AZTEC)
                .build();

// Create an instance of FirebaseVisionBarcodeDetector that uses these options
FirebaseVisionBarcodeDetector detector = FirebaseVision.getInstance().getVisionBarcodeDetector(options);

// Process the image
Task<List<FirebaseVisionBarcode>> result = detector.detectInImage(image)
        .addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionBarcode>>() {
            @Override
            public void onSuccess(List<FirebaseVisionBarcode> barcodes) {
                // Task completed successfully
                // ...
            }
        })
        .addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                // Task failed with an exception
                // ...
            }
        });

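The result is a list of FirebaseVisionBarcode objects. Each one exposes its raw value and bounding box; the structured data available (URL, Wi-Fi credentials, contact details...) depends on the barcode's value type.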
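On Android you branch on barcode.getValueType() and then read the matching typed accessor, for example barcode.getUrl().getUrl() for a link or barcode.getWifi().getSsid() for Wi-Fi credentials. The sketch below reproduces that dispatch on a plain JVM; BarcodeValueType, ScannedBarcode and BarcodeHandler are hypothetical stand-ins, and only three value types are modelled.

```java
// Hypothetical subset of the value types ML Kit reports (TYPE_TEXT, TYPE_URL, TYPE_WIFI, ...).
enum BarcodeValueType { TEXT, URL, WIFI }

// Hypothetical stand-in for FirebaseVisionBarcode: url and ssid are only
// set for the matching value type, like getUrl() and getWifi() on Android.
record ScannedBarcode(BarcodeValueType type, String rawValue, String url, String ssid) {}

final class BarcodeHandler {
    private BarcodeHandler() {}

    // Chooses what to show the user depending on the decoded value type.
    static String describe(ScannedBarcode barcode) {
        return switch (barcode.type()) {
            case URL -> "Open link: " + barcode.url();
            case WIFI -> "Join Wi-Fi network: " + barcode.ssid();
            case TEXT -> "Text: " + barcode.rawValue();
        };
    }
}
```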

Image labeling

Image labeling can run on-device with about 400 labels or in the cloud with about 10,000 labels.


// Create a FirebaseVisionImage object (here from a file URI)
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Create an instance of FirebaseVisionImageLabeler with the on-device model
FirebaseVisionImageLabeler labeler = FirebaseVision.getInstance().getOnDeviceImageLabeler();

// Or with the cloud model
// FirebaseVisionCloudImageLabelerOptions options = new FirebaseVisionCloudImageLabelerOptions.Builder().setConfidenceThreshold(0.7f).build();
// FirebaseVisionImageLabeler labeler = FirebaseVision.getInstance().getCloudImageLabeler(options);

// Process the image
labeler.processImage(image)
        .addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionImageLabel>>() {
            @Override
            public void onSuccess(List<FirebaseVisionImageLabel> labels) {
                // Task completed successfully
                // ...
            }
        })
        .addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                // Task failed with an exception
                // ...
            }
        });

The result is simply a list of FirebaseVisionImageLabel objects, each containing a label text and a confidence score.
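The cloud labeler options above filter results with setConfidenceThreshold(0.7f). You can also apply a cut-off and a ranking yourself when post-processing the labels, for example before displaying them. A minimal sketch, in which ImageLabel is a hypothetical stand-in for FirebaseVisionImageLabel (mirroring its getText() and getConfidence()) and LabelFilter is an illustrative helper.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for FirebaseVisionImageLabel.
record ImageLabel(String text, float confidence) {}

final class LabelFilter {
    private LabelFilter() {}

    // Keeps labels whose confidence is at least minConfidence,
    // sorts them from most to least confident and returns their text.
    static List<String> topLabels(List<ImageLabel> labels, float minConfidence) {
        return labels.stream()
                .filter(l -> l.confidence() >= minConfidence)
                .sorted(Comparator.comparingDouble(ImageLabel::confidence).reversed())
                .map(ImageLabel::text)
                .toList();
    }
}
```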

You can also use AutoML Vision Edge to train and run your own classification model.

Object detection & tracking

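This API identifies the most prominent object in an image (or several objects, when multiple-object detection is enabled) and can track it across frames when streaming.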


// Create a FirebaseVisionImage object (here from a file URI)
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Multiple object detection in static images
FirebaseVisionObjectDetectorOptions options =
        new FirebaseVisionObjectDetectorOptions.Builder()
                .setDetectorMode(FirebaseVisionObjectDetectorOptions.SINGLE_IMAGE_MODE)
                .enableMultipleObjects()
                .enableClassification() // Optional
                .build();

// Create an instance of FirebaseVisionObjectDetector
FirebaseVisionObjectDetector objectDetector = FirebaseVision.getInstance().getOnDeviceObjectDetector(options);

// Process the image
objectDetector.processImage(image)
        .addOnSuccessListener(
                new OnSuccessListener<List<FirebaseVisionObject>>() {
                    @Override
                    public void onSuccess(List<FirebaseVisionObject> detectedObjects) {
                        // Task completed successfully
                        // ...
                    }
                })
        .addOnFailureListener(
                new OnFailureListener() {
                    @Override
                    public void onFailure(@NonNull Exception e) {
                        // Task failed with an exception
                        // ...
                    }
                });

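The result is a list of FirebaseVisionObject objects, each containing a tracking ID, bounding box, category and confidence score (the category and its confidence are only available when classification is enabled).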
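Tracking IDs are what make streaming useful: in STREAM_MODE (instead of the SINGLE_IMAGE_MODE used above), an object keeps the same getTrackingId() value from frame to frame, so you can attach state to it. The hypothetical ObjectTracker below is one way to use that, assuming you feed it the tracking IDs detected in each frame: it counts in how many consecutive frames each object has been seen, for example to wait until an object is stable before acting on it.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class ObjectTracker {
    // trackingId -> number of consecutive frames the object has been seen in
    private final Map<Integer, Integer> streaks = new HashMap<>();

    // Call once per processed frame with the tracking IDs detected in it.
    // IDs absent from this frame are dropped, so their streak restarts at 1
    // if they are detected again later.
    void onFrame(List<Integer> trackingIds) {
        Map<Integer, Integer> next = new HashMap<>();
        for (Integer id : trackingIds) {
            next.put(id, streaks.getOrDefault(id, 0) + 1);
        }
        streaks.clear();
        streaks.putAll(next);
    }

    // True once the object has been seen in at least minFrames consecutive frames.
    boolean isStable(int trackingId, int minFrames) {
        return streaks.getOrDefault(trackingId, 0) >= minFrames;
    }
}
```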

Landmark recognition

You can recognize well-known landmarks in an image. This API is available in the cloud only.


// Create a FirebaseVisionImage object (here from a file URI)
FirebaseVisionImage image = FirebaseVisionImage.fromFilePath(context, uri);

// Set the options
FirebaseVisionCloudDetectorOptions options =
        new FirebaseVisionCloudDetectorOptions.Builder()
                .setModelType(FirebaseVisionCloudDetectorOptions.LATEST_MODEL)
                .setMaxResults(15)
                .build();

// Create an instance of FirebaseVisionCloudLandmarkDetector
FirebaseVisionCloudLandmarkDetector detector = FirebaseVision.getInstance().getVisionCloudLandmarkDetector(options);

// Process the image
Task<List<FirebaseVisionCloudLandmark>> result = detector.detectInImage(image)
        .addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionCloudLandmark>>() {
            @Override
            public void onSuccess(List<FirebaseVisionCloudLandmark> firebaseVisionCloudLandmarks) {
                // Task completed successfully
                // ...
            }
        })
        .addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                // Task failed with an exception
                // ...
            }
        });

The result is a list of FirebaseVisionCloudLandmark objects, each containing the landmark name, bounding box, location (latitude and longitude) and confidence score.
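Several candidates can be returned, so a common next step is to keep the most confident one. A minimal sketch, in which Landmark is a hypothetical stand-in holding the values you would read with getLandmark(), getConfidence() and the first entry of getLocations() (getLatitude(), getLongitude()); LandmarkPicker is an illustrative helper.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Locale;
import java.util.Optional;

// Hypothetical stand-in for FirebaseVisionCloudLandmark.
record Landmark(String name, float confidence, double latitude, double longitude) {}

final class LandmarkPicker {
    private LandmarkPicker() {}

    // Returns the most confident landmark formatted as "Name (lat, lng)",
    // or an empty Optional if nothing was recognized.
    static Optional<String> best(List<Landmark> landmarks) {
        return landmarks.stream()
                .max(Comparator.comparingDouble(Landmark::confidence))
                .map(l -> String.format(Locale.ROOT, "%s (%.4f, %.4f)",
                        l.name(), l.latitude(), l.longitude()));
    }
}
```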

Language identification

This API works on a String rather than on an image.

// text is the String to analyze; TAG is your log tag.
FirebaseLanguageIdentification languageIdentifier =
        FirebaseNaturalLanguage.getInstance().getLanguageIdentification();
languageIdentifier.identifyAllLanguages(text)
        .addOnSuccessListener(
                new OnSuccessListener<List<IdentifiedLanguage>>() {
                    @Override
                    public void onSuccess(List<IdentifiedLanguage> identifiedLanguages) {
                        for (IdentifiedLanguage identifiedLanguage : identifiedLanguages) {
                            String language = identifiedLanguage.getLanguageCode();
                            float confidence = identifiedLanguage.getConfidence();
                            Log.i(TAG, language + " (" + confidence + ")");
                        }
                    }
                })
        .addOnFailureListener(
                new OnFailureListener() {
                    @Override
                    public void onFailure(@NonNull Exception e) {
                        // Model couldn’t be loaded or other internal error.
                        // ...
                    }
                });

The result is a list of IdentifiedLanguage objects, each containing a language code and a confidence score.
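identifyAllLanguages() returns every candidate; often you only want the best one, falling back to "und" (the code ML Kit uses for an undetermined language) when nothing is confident enough. ML Kit also offers identifyLanguage() for the single most likely code; the sketch below instead shows the selection logic on a list, with LanguageGuess as a hypothetical stand-in for IdentifiedLanguage and the threshold left to the caller.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for IdentifiedLanguage (getLanguageCode(), getConfidence()).
record LanguageGuess(String languageCode, float confidence) {}

final class LanguagePicker {
    // Code used by ML Kit when no language can be determined.
    static final String UNDETERMINED = "und";

    private LanguagePicker() {}

    // Returns the most confident language code if it reaches minConfidence,
    // otherwise "und".
    static String pick(List<LanguageGuess> guesses, float minConfidence) {
        return guesses.stream()
                .max(Comparator.comparingDouble(LanguageGuess::confidence))
                .filter(g -> g.confidence() >= minConfidence)
                .map(LanguageGuess::languageCode)
                .orElse(UNDETERMINED);
    }
}
```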

Translation

Translation runs with an on-device API, but it is intended only for casual, simple translations across 59 languages (Japanese included). The models are trained to translate to and from English, so when you translate between two non-English languages, English is used as an intermediate step, which can affect quality.

// Create an English-Japanese translator
FirebaseTranslatorOptions options =
        new FirebaseTranslatorOptions.Builder()
                .setSourceLanguage(FirebaseTranslateLanguage.EN)
                .setTargetLanguage(FirebaseTranslateLanguage.JA)
                .build();
final FirebaseTranslator englishJapaneseTranslator =
        FirebaseNaturalLanguage.getInstance().getTranslator(options);
final String text = "Merry Christmas";

// The model must be downloaded first.
// Each model is around 30 MB and is stored locally for reuse.
FirebaseModelDownloadConditions conditions = new FirebaseModelDownloadConditions.Builder()
        .requireWifi()
        .build();
englishJapaneseTranslator.downloadModelIfNeeded(conditions)
        .addOnSuccessListener(
                new OnSuccessListener<Void>() {
                    @Override
                    public void onSuccess(Void v) {
                        // Model downloaded successfully: translation can start
                        englishJapaneseTranslator.translate(text)
                                .addOnSuccessListener(
                                        new OnSuccessListener<String>() {
                                            @Override
                                            public void onSuccess(@NonNull String translatedText) {
                                                // Translation successful.
                                                // translatedText <- "メリークリスマス"
                                            }
                                        })
                                .addOnFailureListener(
                                        new OnFailureListener() {
                                            @Override
                                            public void onFailure(@NonNull Exception e) {
                                                // Error during the translation.
                                            }
                                        });
                    }
                })
        .addOnFailureListener(
                new OnFailureListener() {
                    @Override
                    public void onFailure(@NonNull Exception e) {
                        // Model couldn’t be downloaded or other internal error.
                    }
                });

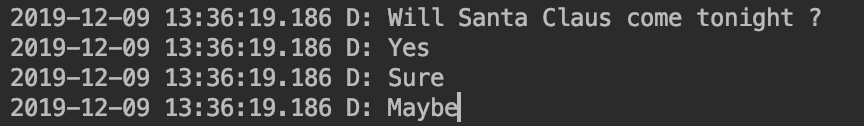
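The English pivot can be made concrete: the sketch below computes the hops a translation request goes through when the models only translate to and from English, which is why a French-to-Japanese request takes two translation steps instead of one. TranslationRoute is a hypothetical helper working on plain language codes, not part of ML Kit.

```java
import java.util.List;

final class TranslationRoute {
    private static final String ENGLISH = "en";

    private TranslationRoute() {}

    // Returns the sequence of languages a translation passes through,
    // assuming every model translates to or from English only.
    static List<String> hops(String source, String target) {
        if (source.equals(target)) {
            return List.of(source); // nothing to translate
        }
        if (source.equals(ENGLISH) || target.equals(ENGLISH)) {
            return List.of(source, target); // direct translation
        }
        return List.of(source, ENGLISH, target); // pivot through English
    }
}
```

Each extra hop is another lossy step, which is where the quality loss mentioned above comes from.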

Smart reply generator (English only)

The model works with the 10 most recent messages of a conversation and suggests at most 3 replies.

// Define a conversation history
// The local user is talking to a remote user;
// Smart Reply suggests what the local user might answer.
List<FirebaseTextMessage> conversation = new ArrayList<>();
conversation.add(FirebaseTextMessage.createForRemoteUser("It's Christmas time", System.currentTimeMillis(), "userId1"));
conversation.add(FirebaseTextMessage.createForLocalUser("Kids are happy", System.currentTimeMillis()));
conversation.add(FirebaseTextMessage.createForRemoteUser("Will Santa Claus come tonight?", System.currentTimeMillis(), "userId1"));

FirebaseSmartReply smartReply = FirebaseNaturalLanguage.getInstance().getSmartReply();
smartReply.suggestReplies(conversation)
        .addOnSuccessListener(new OnSuccessListener<SmartReplySuggestionResult>() {
            @Override
            public void onSuccess(SmartReplySuggestionResult result) {
                if (result.getStatus() == SmartReplySuggestionResult.STATUS_NOT_SUPPORTED_LANGUAGE) {
                    // The conversation's language isn't supported, so
                    // the result doesn't contain any suggestions.
                } else if (result.getStatus() == SmartReplySuggestionResult.STATUS_SUCCESS) {
                    // Task completed successfully
                    for (SmartReplySuggestion suggestion : result.getSuggestions()) {
                        String replyText = suggestion.getText();
                    }
                }
            }
        })
        .addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                // Task failed with an exception
            }
        });

The result holds a list of SmartReplySuggestion objects (returned by getSuggestions()); each one contains only the suggested text.
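Since the model only looks at the 10 most recent messages, there is no need to build a FirebaseTextMessage for a whole chat history. A minimal sketch, assuming your app keeps its own messages in a hypothetical ChatMessage record: the hypothetical ConversationWindow helper orders them chronologically (oldest first, as in the snippet above) and keeps only the last 10, which you would then convert with createForLocalUser() or createForRemoteUser().

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical app-side chat message, later converted to a FirebaseTextMessage.
record ChatMessage(String text, long timestampMillis, boolean fromLocalUser) {}

final class ConversationWindow {
    // Smart Reply only considers the 10 most recent messages.
    static final int MAX_MESSAGES = 10;

    private ConversationWindow() {}

    // Orders messages oldest-first and keeps only the most recent MAX_MESSAGES.
    static List<ChatMessage> recent(List<ChatMessage> history) {
        List<ChatMessage> sorted = history.stream()
                .sorted(Comparator.comparingLong(ChatMessage::timestampMillis))
                .toList();
        int from = Math.max(0, sorted.size() - MAX_MESSAGES);
        return sorted.subList(from, sorted.size());
    }
}
```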