![]()

Huggingface Transformerは、バージョンアップが次々とされていて、メソッドや学習済みモデル(Pretrained model)の名前がバージョンごとに変わっているらしい。。

この記事では、__version.3.5.1__から、__東北大学が公開している*'cl-tohoku/bert-base-japanese-char-whole-word-masking'*__の呼び出しに__成功__したコードを紹介します。

実行環境

% python --version

Python 3.6.3

% python

動かしたもの

・ @ichiroexさん 「huggingface/transformersのBertModelで日本語文章ベクトルを作成」

エラー

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ModuleNotFoundError: No module named 'transformers.tokenization_bert_japanese'

>>> quit()

こんなことが書いてあるサイトが・・・

・Python Error: No module named transformers.tokenization_bert_japanese

Python Error: No module named transformers.tokenization_bert_japanese

Install specific version:

pip install "transformers==2.5.1"

・teratail 最近まで使えていたはずのモジュールがインポートできなくなった

!pip install transformers

from transformers.modeling_bert import BertModel

from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

ModuleNotFoundError: No module named 'transformers.modeling_bert'

ModuleNotFoundError: No module named 'transformers.tokenization_bert_japanese'数日前にtransformersのバージョンが更新されていたようです。transformers==3.5.1 でうまくいきました。

とりあえず2.5.1を入れてみる

% pip install "transformers==2.5.1"

Collecting transformers==2.5.1

Downloading transformers-2.5.1-py3-none-any.whl (499 kB)

|████████████████████████████████| 499 kB 8.4 MB/s

Successfully installed boto3-1.16.33 botocore-1.19.33 jmespath-0.10.0 python-dateutil-2.8.1 s3transfer-0.3.3 sentencepiece-0.1.94 tokenizers-0.5.2 transformers-2.5.1

%

実行成功

>>> import torch

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

>>> from transformers.modeling_bert import BertModel

以下はうまくいかない

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('bert-base-japanese')

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils.py", line 390, in from_pretrained

return cls._from_pretrained(*inputs, **kwargs)

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils.py", line 493, in _from_pretrained

list(cls.vocab_files_names.values()),

OSError: Model name 'bert-base-japanese' was not found in tokenizers model name list (bert-base-japanese, bert-base-japanese-whole-word-masking, bert-base-japanese-char, bert-base-japanese-char-whole-word-masking). We assumed 'bert-base-japanese' was a path, a model identifier, or url to a directory containing vocabulary files named ['vocab.txt'] but couldn't find such vocabulary files at this path or url.

>>>

3.5.1を入れてみる

% pip install "transformers==3.5.1"

Collecting transformers==3.5.1

Downloading transformers-3.5.1-py3-none-any.whl (1.3 MB)

|████████████████████████████████| 1.3 MB 9.2 MB/s

( ・・・省略・・・ )

% pip install "transformers==3.5.1"

Collecting transformers==3.5.1

Downloading transformers-3.5.1-py3-none-any.whl (1.3 MB)

|████████████████████████████████| 1.3 MB 9.2 MB/s

やっぱりだめ

% python

Python 3.6.3 (default, Dec 10 2020, 22:43:16)

[GCC Apple LLVM 12.0.0 (clang-1200.0.32.27)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>>

>>> import torch

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

>>> from transformers.modeling_bert import BertModel

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('bert-base-japanese')

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils_base.py", line 1644, in from_pretrained

raise EnvironmentError(msg)

OSError: Can't load tokenizer for 'bert-base-japanese'. Make sure that:

- 'bert-base-japanese' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'bert-base-japanese' is the correct path to a directory containing relevant tokenizer files

>>>

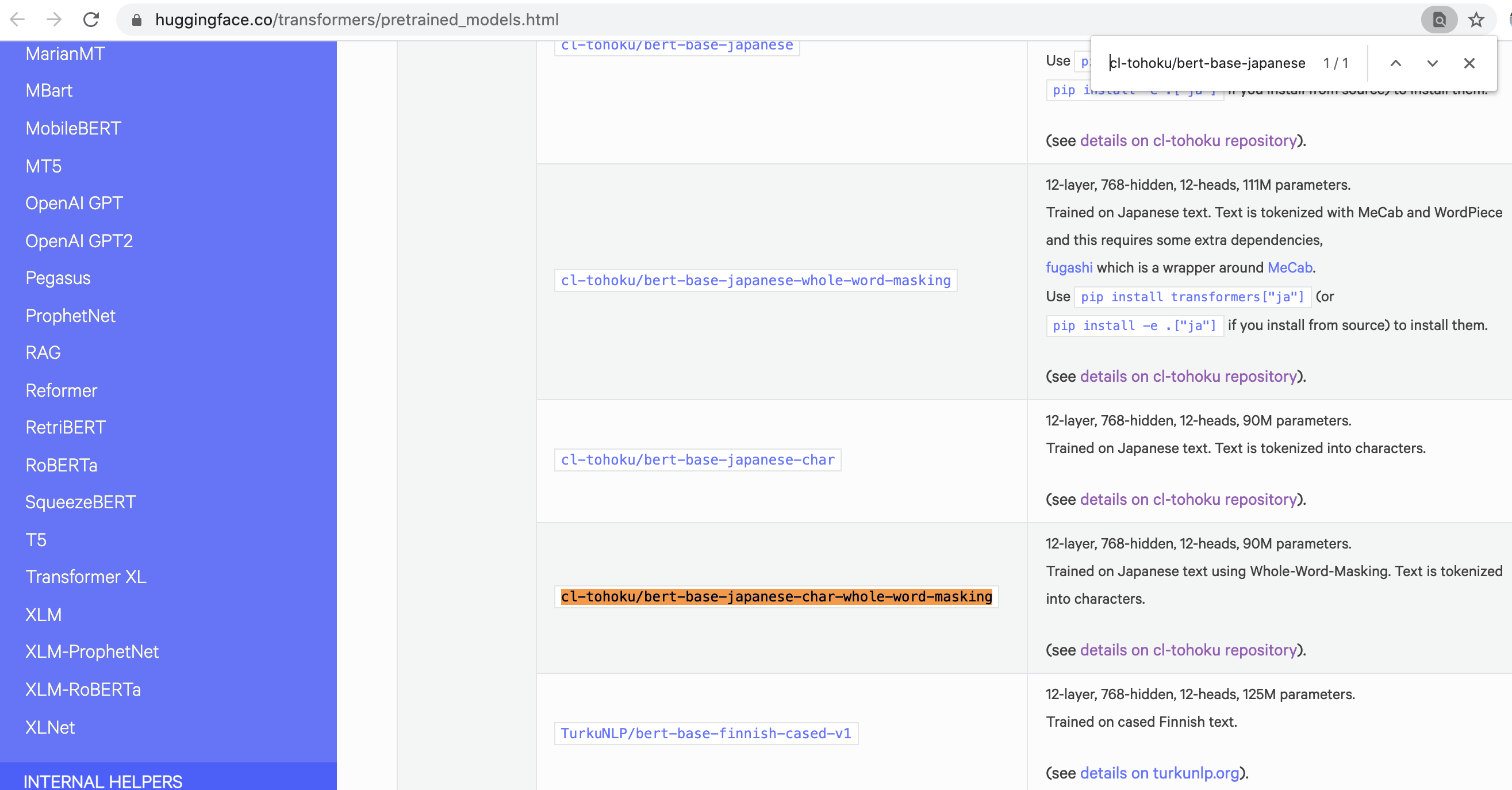

Transformer 3.5.1バージョンで使える日本語の学習済みモデルの名前は、'cl-tohoku/bert-base-japanese-char-whole-word-masking'だったみたい

・ https://huggingface.co/models?search=japanese

・ https://huggingface.co/cl-tohoku/bert-base-japanese-char-whole-word-masking

・ https://huggingface.co/cl-tohoku

・ https://huggingface.co/transformers/pretrained_models.html

fugashiを入れればうまくいきそう

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('cl-tohoku/bert-base-japanese-char-whole-word-masking')

Downloading: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 15.7k/15.7k [00:00<00:00, 91.5kB/s]

Traceback (most recent call last):

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 230, in __init__

import fugashi

ModuleNotFoundError: No module named 'fugashi'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils_base.py", line 1653, in from_pretrained

resolved_vocab_files, pretrained_model_name_or_path, init_configuration, *init_inputs, **kwargs

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils_base.py", line 1725, in _from_pretrained

tokenizer = cls(*init_inputs, **init_kwargs)

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 151, in __init__

do_lower_case=do_lower_case, never_split=never_split, **(mecab_kwargs or {})

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 233, in __init__

"You need to install fugashi to use MecabTokenizer."

ModuleNotFoundError: You need to install fugashi to use MecabTokenizer.See https://pypi.org/project/fugashi/ for installation.

>>>

fugashiを入れる

% pip install fugashi

Collecting fugashi

Downloading fugashi-1.0.5-cp36-cp36m-macosx_10_14_x86_64.whl (283 kB)

|████████████████████████████████| 283 kB 8.7 MB/s

Installing collected packages: fugashi

Successfully installed fugashi-1.0.5

ipadicを入れればうまくいきそう

>>> import torch

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

>>> from transformers.modeling_bert import BertModel

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('cl-tohoku/bert-base-japanese-char-whole-word-masking')

Traceback (most recent call last):

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 242, in __init__

import ipadic

ModuleNotFoundError: No module named 'ipadic'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils_base.py", line 1653, in from_pretrained

resolved_vocab_files, pretrained_model_name_or_path, init_configuration, *init_inputs, **kwargs

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_utils_base.py", line 1725, in _from_pretrained

tokenizer = cls(*init_inputs, **init_kwargs)

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 151, in __init__

do_lower_case=do_lower_case, never_split=never_split, **(mecab_kwargs or {})

File "/Users/ocean/.pyenv/versions/3.6.3/lib/python3.6/site-packages/transformers/tokenization_bert_japanese.py", line 245, in __init__

"The ipadic dictionary is not installed. "

ModuleNotFoundError: The ipadic dictionary is not installed. See https://github.com/polm/ipadic-py for installation.

>>>

ipadicを入れる

% pip install ipadic

Collecting ipadic

Downloading ipadic-1.0.0.tar.gz (13.4 MB)

|████████████████████████████████| 13.4 MB 8.5 MB/s

Building wheels for collected packages: ipadic

Building wheel for ipadic (setup.py) ... done

Created wheel for ipadic: filename=ipadic-1.0.0-py3-none-any.whl size=13556723 sha256=7065d9649eb32a0b0d6dab0b266857ca6825322bc66a5982813185f8b9799c5b

Stored in directory: /Users/ocean/Library/Caches/pip/wheels/99/39/4c/e723d99fed7aad240a3bea84ef21430209f58b313a9e70f7d6

Successfully built ipadic

Installing collected packages: ipadic

Successfully installed ipadic-1.0.0

%

成功!

% python

Python 3.6.3 (default, Dec 10 2020, 22:43:16)

[GCC Apple LLVM 12.0.0 (clang-1200.0.32.27)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

>>> from transformers.modeling_bert import BertModel

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('cl-tohoku/bert-base-japanese-char-whole-word-masking')

>>>

ここからは、__@kenta1984さんのQiitaの記事__から、コードを写経して実行してみる

今度は、'cl-tohoku/bert-base-japanese-whole-word-masking'を使う。

実行成功

Python 3.6.3 (default, Dec 10 2020, 22:43:16)

[GCC Apple LLVM 12.0.0 (clang-1200.0.32.27)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> from transformers import BertJapaneseTokenizer, BertForMaskedLM

>>> from transformers.tokenization_bert_japanese import BertJapaneseTokenizer

>>> from transformers.modeling_bert import BertModel

>>> tokenizer = BertJapaneseTokenizer.from_pretrained('cl-tohoku/bert-base-japanese-whole-word-masking')

Downloading: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 258k/258k [00:00<00:00, 362kB/s]

>>>

>>> text = '新型コロナウイルス対策について厚生労働省に助言する専門家の会合が開かれ、感染状況について引き続き最大限の警戒が必要な状況だと評価しました。そのうえで、これまでの対策について「全体として必ずしも新規感染者数を減少させることに成功しているとは言い難い」と評価し、今月中旬をめどに感染拡大が沈静化に向かうかどうか評価してさらなる対策について早急に検討する必要があるとしています。'

>>>

>>> tokenized_text = tokenizer.tokenize(text)

>>> print(tokenized_text)

['新型', 'コロナ', 'ウイルス', '対策', 'について', '厚生', '労働省', 'に', '助言', 'する', '専門', '家', 'の', '会合', 'が', '開か', 'れ', '、', '感染', '状況', 'について', '引き続き', '最大限', 'の', '警戒', 'が', '必要', 'な', '状況', 'だ', 'と', '評価', 'し', 'まし', 'た', '。', 'その', '##う', '##え', '##で', '、', 'これ', 'まで', 'の', '対策', 'について', '「', '全体', 'として', '必ずしも', '新規', '感染', '者', '数', 'を', '減少', 'さ', 'せる', 'こと', 'に', '成功', 'し', 'て', 'いる', 'と', 'は', '言い', '難い', '」', 'と', '評価', 'し', '、', '今', '##月', '中旬', 'を', 'め', '##ど', 'に', '感染', '拡大', 'が', '沈', '##静', '化', 'に', '向かう', 'か', 'どう', 'か', '評価', 'し', 'て', 'さらなる', '対策', 'について', '早', '##急', 'に', '検討', 'する', '必要', 'が', 'ある', 'と', 'し', 'て', 'い', 'ます', '。']

>>>

>>> masked_index = 2

>>> tokenized_text[masked_index] = '[MASK]'

>>> print(tokenized_text[masked_index])

[MASK]

>>>

>>> indexed_tokens = tokenizer.convert_tokens_to_ids(tokenized_text)

>>> tokens_tensor = torch.tensor([indexed_tokens])

>>> model = BertForMaskedLM.from_pretrained('cl-tohoku/bert-base-japanese-whole-word-masking')

Downloading: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 479/479 [00:00<00:00, 305kB/s]

Downloading: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 445M/445M [00:26<00:00, 16.6MB/s]

Some weights of the model checkpoint at cl-tohoku/bert-base-japanese-whole-word-masking were not used when initializing BertForMaskedLM: ['cls.seq_relationship.weight', 'cls.seq_relationship.bias']

- This IS expected if you are initializing BertForMaskedLM from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing BertForMaskedLM from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

>>>

>>> model.eval()

BertForMaskedLM(

(bert): BertModel(

(embeddings): BertEmbeddings(

(word_embeddings): Embedding(32000, 768, padding_idx=0)

(position_embeddings): Embedding(512, 768)

(token_type_embeddings): Embedding(2, 768)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

(encoder): BertEncoder(

(layer): ModuleList(

(0): BertLayer(

(attention): BertAttention(

(self): BertSelfAttention(

(query): Linear(in_features=768, out_features=768, bias=True)

(key): Linear(in_features=768, out_features=768, bias=True)

(value): Linear(in_features=768, out_features=768, bias=True)

(dropout): Dropout(p=0.1, inplace=False)

( ・・・省略・・・ )

(intermediate): BertIntermediate(

(dense): Linear(in_features=768, out_features=3072, bias=True)

)

(output): BertOutput(

(dense): Linear(in_features=3072, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

)

)

)

)

(cls): BertOnlyMLMHead(

(predictions): BertLMPredictionHead(

(transform): BertPredictionHeadTransform(

(dense): Linear(in_features=768, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

)

(decoder): Linear(in_features=768, out_features=32000, bias=True)

)

)

)

>>>

>>> with torch.no_grad():

... outputs = model(tokens_tensor)

... predictions = outputs[0][0, masked_index].topk(5) # 予測結果の上位5件を抽出

...

>>>

>>> for i, index_t in enumerate(predictions.indices):

... index = index_t.item()

... token = tokenizer.convert_ids_to_tokens([index])[0]

... print(i, token)

...

0 の

1 感染

2 、

3 ##病

4 は

>>>