Introduction to the paper

This work, Combating Noisy Labels by Agreement: A Joint Training Method with Co-Regularization [CVPR 2020], builds on co-teaching [NIPS 2018]. To explain what the paper is about, we first need a basic understanding of co-teaching.

co-teaching

Co-teaching is a framework for robust training against label noise: it aims to mitigate the negative impact of noisy labels on model training.

The method works as follows:

- Train two models at the same time.

- In the later stage of training, let each model judge on its own whether the label of each input is reliable.

- Have each model pass the list of samples it considers reliable to the other.

- Train each model on the list it received from the other.
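The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the original implementation: the function names, the `forget_rate` parameter, and the loss values are assumptions made for the example, and "reliable" is taken to mean "small loss".

```python
def small_loss_indices(losses, forget_rate):
    """Indices of the samples a model trusts: those with the smallest losses."""
    num_keep = int((1.0 - forget_rate) * len(losses))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return order[:num_keep]


def co_teaching_exchange(losses_a, losses_b, forget_rate):
    """Each model picks its small-loss samples and hands the list to its peer."""
    trusted_by_a = small_loss_indices(losses_a, forget_rate)
    trusted_by_b = small_loss_indices(losses_b, forget_rate)
    # The lists are swapped: A updates on B's picks, B updates on A's picks.
    return {"train_a_on": trusted_by_b, "train_b_on": trusted_by_a}


# Hypothetical per-sample losses of models A and B on one mini-batch of six samples.
losses_a = [0.2, 2.5, 0.4, 0.1, 1.9, 0.3]
losses_b = [0.3, 0.5, 2.2, 0.2, 2.0, 1.5]
plan = co_teaching_exchange(losses_a, losses_b, forget_rate=0.5)
print(plan)
```

With these made-up numbers, the two models agree on samples 0 and 3 but disagree on the third pick, so each one is trained on a slightly different subset than it would have chosen itself.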

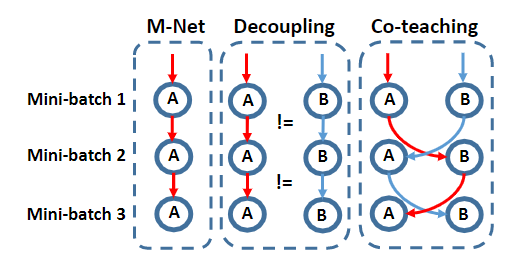

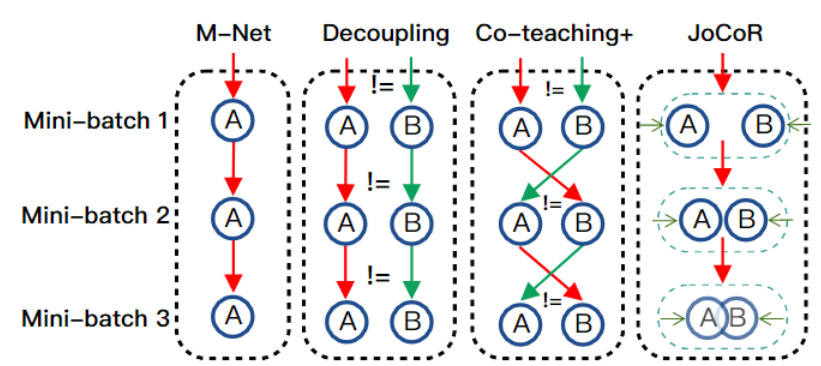

The figures above illustrate the concept of co-teaching. A and B denote the two models.

JoCoR-Loss

JoCoR follows most of the settings of co-teaching, such as:

- Training two models at the same time

- Judging which labels in a mini-batch are reliable by the value of their losses

- Using the same training schedule and optimizer parameters as co-teaching

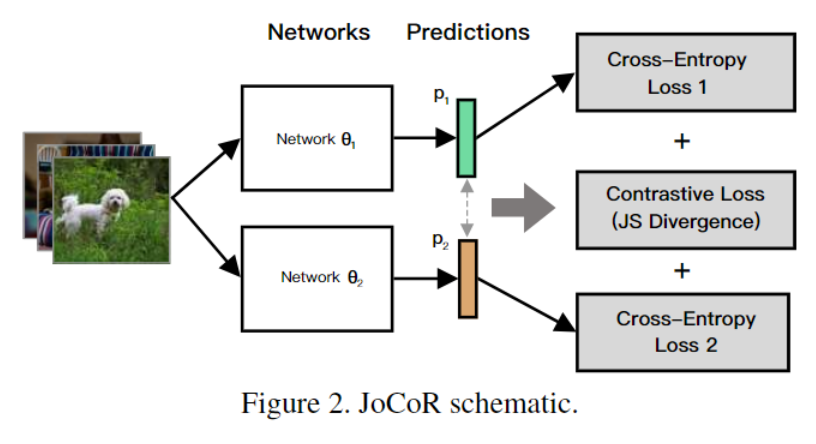

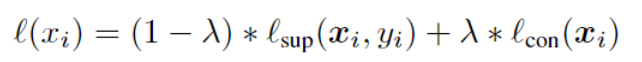

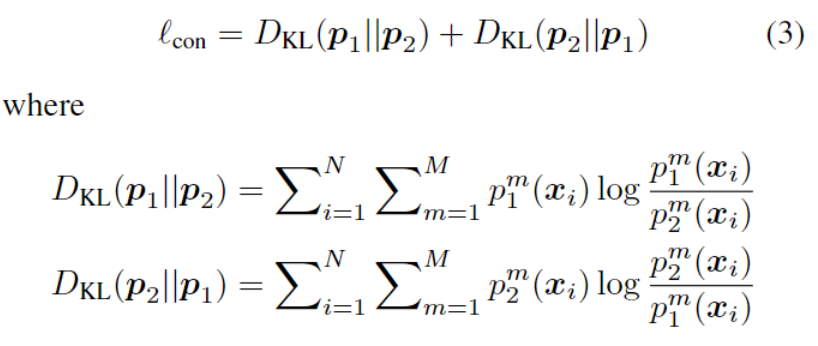

The core contribution of the paper is a novel loss, named the JoCoR loss.

This new loss function pushes the two models to give the same prediction. According to the authors, this contrastive loss should be lower when the label is clean, so the model can use it to tell whether a label is clean.
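As a rough sketch of how such a loss can be computed for a single sample: the function below adds the two models' cross-entropy losses to a symmetric KL divergence between their predictions, with a weighting coefficient. This follows the formulation as I understand it from the paper; the function names and the `co_lambda` default are illustrative assumptions, and plain Python is used instead of PyTorch to keep the example self-contained.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two strictly positive probability vectors."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def jocor_loss(logits_1, logits_2, label, co_lambda=0.5):
    """Joint loss for one sample: supervised part plus agreement part."""
    p1, p2 = softmax(logits_1), softmax(logits_2)
    # Supervised part: cross-entropy of each model against the given label.
    supervised = -math.log(p1[label]) - math.log(p2[label])
    # Agreement part: symmetric KL divergence between the two predictions.
    agreement = kl_divergence(p1, p2) + kl_divergence(p2, p1)
    return (1.0 - co_lambda) * supervised + co_lambda * agreement


# Same label, but the models agree in the first call and disagree in the second.
loss_agree = jocor_loss([2, 0, 0], [2, 0, 0], label=0)
loss_disagree = jocor_loss([2, 0, 0], [0, 2, 0], label=0)
print(loss_agree < loss_disagree)
```

When the two models output the same prediction, the agreement part is zero and only the cross-entropy part remains.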

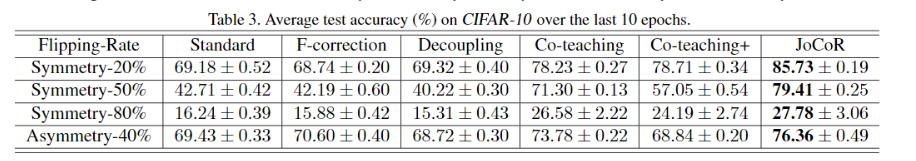

Above are the experimental results on the noisy CIFAR-10 dataset. In these results, JoCoR outperforms the previous methods.

My opinion about JoCoR loss

This loss compares the two prediction vectors, not the two cross-entropy losses, so the KL divergence has nothing to do with the labels. I therefore don't think this loss helps in selecting samples with clean labels. I think the predictions of the two models will be similar for images with salient features that are easy to distinguish. Still, this novel loss did help the model achieve better performance. I think the reason is:

- Optimizing the two models to fit each other is like two students who study separately and then share what they have learned. This gives the models a better chance of escaping a local minimum or saddle point. Furthermore, if the common pattern learned from noisy data is more stable than randomly memorized noise, optimizing the two models towards each other may force them to forget that noise.

To support my opinion, I ran a simple experiment: I let the models select clean samples without the JoCoR loss (the original way) and then backpropagated the JoCoR loss. I got a similar test accuracy.
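The idea of this experiment can be sketched as follows. The sketch assumes that "the original way" means ranking samples by the cross-entropy part only; the exact selection rule, the function names, the `co_lambda` value, and the toy logits are assumptions for illustration, not the code that was actually run.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def per_sample_terms(logits_1, logits_2, label):
    """Return (cross-entropy part, agreement part) for one sample."""
    p1, p2 = softmax(logits_1), softmax(logits_2)
    supervised = -math.log(p1[label]) - math.log(p2[label])
    # Symmetric KL: computed from the two predictions only, never from the label.
    agreement = sum(a * math.log(a / b) + b * math.log(b / a) for a, b in zip(p1, p2))
    return supervised, agreement


def ablation_step(batch, forget_rate, co_lambda=0.5):
    terms = [per_sample_terms(*sample) for sample in batch]
    num_keep = max(1, int((1.0 - forget_rate) * len(batch)))
    # Selection: rank by the cross-entropy part only (assumed "original way").
    kept = sorted(range(len(batch)), key=lambda i: terms[i][0])[:num_keep]
    # Objective: the full joint loss, averaged over the kept samples.
    joint = [(1.0 - co_lambda) * terms[i][0] + co_lambda * terms[i][1] for i in kept]
    return kept, sum(joint) / len(joint)


# Toy batch of (logits of model 1, logits of model 2, given label).
batch = [
    ([3, 0, 0], [3, 0, 0], 0),  # both models agree with the label
    ([3, 0, 0], [3, 0, 0], 1),  # same predictions, but a different label
    ([1, 0, 0], [0, 1, 0], 0),  # the models disagree with each other
    ([2, 0, 0], [2, 0, 0], 0),
]
kept, loss = ablation_step(batch, forget_rate=0.5)
print(kept, loss)
```

In the toy batch, samples 0 and 1 have identical predictions but different labels, so their agreement term is the same while their cross-entropy differs: only the cross-entropy part sees the label.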

So for me, the significance of this paper is in showing that training two models and forcing them to make the same predictions is useful. I think this idea can be applied to other tasks.

What I have done

- I reproduced co-teaching and the JoCoR loss with Python 3.7 and PyTorch 1.3.1, since the original source code is written in Python 2.7 and PyTorch 0.3.0 (both of which are old).

- The main structure of the co-teaching code is similar to the original; my work there was to make it run in a relatively new environment. I wrote the JoCoR loss code myself.

- I ran a simple experiment to confirm that the JoCoR loss doesn't contribute to the process of selecting clean samples.

- Here is my code.