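# What this is

This is a record of working through the exercises in 「OpenStackクラウドインテグレーション オープンソースクラウドによるサービス構築入門」 on a free SoftLayer bare-metal server.

Chapter 13: Building an autonomous cluster with Serf/Consul

In this chapter, Serf automatically scales the web servers and Consul handles automatic failover of dbs.

The support files for this chapter are available here (https://github.com/josug-book1-materials/chapter13).

13.1 Overview of Serf/Consul

An overview of Serf and Consul.

13.2 Automatic scaling of web servers with Serf

13.2.1 Overview of cluster management with Serf

Serf can run commands in response to events: the ones it issues automatically when members join or leave, and custom events issued by users.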
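As a rough illustration of that event model, here is a minimal handler sketch. It is not the book's script. It relies on Serf's documented behavior of setting `SERF_EVENT` (and `SERF_USER_EVENT` for custom events) and passing one member per line on stdin for member events; the function name `handle_event` and the messages it prints are made up for this example.

```shell
#!/bin/sh
# Hypothetical Serf event handler sketch (not the book's serf_lbs.sh).
# For member events Serf writes one member per line to stdin:
#   name<TAB>address<TAB>role<TAB>tags

handle_event() {
    case "${SERF_EVENT:-}" in
        member-join)
            while read -r name addr role rest; do
                echo "join: $name ($addr) role=${role:-none}"
            done
            ;;
        member-leave|member-failed)
            while read -r name addr role rest; do
                echo "gone: $name ($addr) via $SERF_EVENT"
            done
            ;;
        user)
            # Custom events carry their name in SERF_USER_EVENT.
            echo "user event: ${SERF_USER_EVENT:-unknown}"
            ;;
        *)
            echo "ignored: ${SERF_EVENT:-none}"
            ;;
    esac
}
```

A script passed to `serf agent -event-handler=...` would end with a plain call to `handle_event`, and the echo lines would be replaced with the real action.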

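13.2.2 Preparing the load balancer and the Serf environment

Create a dedicated network for this chapter, serf-consul-net, with the subnet 10.20.0.0/24, and configure its security group.

The commands executed are as follows.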

user01@test:~$ source openrc

user01@test:~$ neutron net-create serf-consul-net

Created a new network:

+-----------------+--------------------------------------+

| Field | Value |

+-----------------+--------------------------------------+

| admin_state_up | True |

| id | 76a1f690-f9d0-4e1f-afda-c55935b8985d |

| name | serf-consul-net |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

+-----------------+--------------------------------------+

user01@test:~$ neutron subnet-create --ip-version 4 --gateway 10.20.0.254 \

> --name serf-consul-subnet serf-consul-net 10.20.0.0/24

Created a new subnet:

+-------------------+----------------------------------------------+

| Field | Value |

+-------------------+----------------------------------------------+

| allocation_pools | {"start": "10.20.0.1", "end": "10.20.0.253"} |

| cidr | 10.20.0.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 10.20.0.254 |

| host_routes | |

| id | c8a123c6-7f28-4dde-b6bc-2b63fcb6c6ab |

| ip_version | 4 |

| ipv6_address_mode | |

| ipv6_ra_mode | |

| name | serf-consul-subnet |

| network_id | 76a1f690-f9d0-4e1f-afda-c55935b8985d |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

+-------------------+----------------------------------------------+

user01@test:~$ neutron router-interface-add Ext-Router serf-consul-subnet

Added interface d803143b-e2ed-4b16-af4c-8e4a773981da to router Ext-Router.

user01@test:~$ neutron security-group-create sg-for-chap13

Created a new security_group:

+----------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+----------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| description | |

| id | 4579f92e-03c6-404a-9cd1-f3094cfb8f4e |

| name | sg-for-chap13 |

| security_group_rules | {"remote_group_id": null, "direction": "egress", "remote_ip_prefix": null, "protocol": null, "tenant_id": "106e169743964758bcad1f06cc69c472", "port_range_max": null, "security_group_id": "4579f92e-03c6-404a-9cd1-f3094cfb8f4e", "port_range_min": null, "ethertype": "IPv4", "id": "3ce0dc6a-7b86-49ab-ada3-b7c4f709a5eb"} |

| | {"remote_group_id": null, "direction": "egress", "remote_ip_prefix": null, "protocol": null, "tenant_id": "106e169743964758bcad1f06cc69c472", "port_range_max": null, "security_group_id": "4579f92e-03c6-404a-9cd1-f3094cfb8f4e", "port_range_min": null, "ethertype": "IPv6", "id": "f00aebe3-c7e5-49ca-b282-62b9a9acccfa"} |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

+----------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

[root@serf-step-server ~]# neutron security-group-rule-create --ethertype IPv4 \

> --remote-ip-prefix 10.20.0.0/24 sg-for-chap13

Created a new security_group_rule:

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| direction | ingress |

| ethertype | IPv4 |

| id | d9a1fc5b-6236-499f-887c-f90a89cc910b |

| port_range_max | |

| port_range_min | |

| protocol | |

| remote_group_id | |

| remote_ip_prefix | 10.20.0.0/24 |

| security_group_id | 4579f92e-03c6-404a-9cd1-f3094cfb8f4e |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

+-------------------+--------------------------------------+

user01@test:~$ neutron security-group-rule-create --ethertype IPv4 --protocol tcp \

> --port-range-min 22 --port-range-max 22 --remote-ip-prefix 0.0.0.0/0 sg-for-chap13

Created a new security_group_rule:

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| direction | ingress |

| ethertype | IPv4 |

| id | 34541cd1-dcef-471f-ad84-edba6110f19c |

| port_range_max | 22 |

| port_range_min | 22 |

| protocol | tcp |

| remote_group_id | |

| remote_ip_prefix | 0.0.0.0/0 |

| security_group_id | 4579f92e-03c6-404a-9cd1-f3094cfb8f4e |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

+-------------------+--------------------------------------+

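Boot the step server (bastion host) for Serf.

The userdata file is userdata_serf-step-server.txt. After boot, install_serf.sh installs Serf automatically. The boot command is as follows.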

user01@test:~$ function get_uuid () { cat - | grep " id " | awk '{print $4}'; }

user01@test:~$ export MY_C13_NET=`neutron net-show serf-consul-net | get_uuid`

user01@test:~$ echo $MY_C13_NET

76a1f690-f9d0-4e1f-afda-c55935b8985d

user01@test:~$ nova boot --flavor standard.xsmall \

> --image "centos-base" \

> --key-name key-for-step-server \

> --user-data userdata_serf-step-server.txt \

> --security-groups sg-for-chap13 \

> --availability-zone az1 \

> --nic net-id=${MY_C13_NET} \

> serf-step-server

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | 6H9uoQGP2ACv |

| config_drive | |

| created | 2015-04-01T04:34:49Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | c996f567-247c-412b-80a2-17dcf1ca67d7 |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-step-server |

| metadata | {} |

| name | serf-step-server |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T04:34:50Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+
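The `get_uuid` helper used above picks the `id` row out of a CLI table with `grep` and `awk`. The following is a sketch of an awk-only variant that matches the field name exactly, assuming the usual `| Field | Value |` table layout. It is offered as an illustration, not as a replacement for the helper in the book's scripts.

```shell
#!/bin/sh
# Sketch: extract the value of the "id" row from a neutron/nova/cinder
# style table read on stdin. Splits on "|" and compares the trimmed
# field name, so rows such as network_id or tenant_id never match.
get_uuid() {
    awk -F'|' '
        { gsub(/ /, "", $2) }
        $2 == "id" { gsub(/ /, "", $3); print $3; exit }
    '
}
```

It is used the same way as the original, for example `neutron net-show serf-consul-net | get_uuid`.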

Attach a floating IP and log in. Before logging in, copy key-for-internal.pem to the step server.

user01@test:~$ nova floating-ip-create Ext-Net

+-----------------+-----------+----------+---------+

| Ip | Server Id | Fixed Ip | Pool |

+-----------------+-----------+----------+---------+

| 192.168.100.137 | | - | Ext-Net |

+-----------------+-----------+----------+---------+

user01@test:~$ nova floating-ip-associate serf-step-server 192.168.100.137

user01@test:~$ scp -i key-for-step-server.pem key-for-internal.pem root@192.168.100.137:/root/key-for-internal.pem

key-for-internal.pem 100% 1680 1.6KB/s 00:00

user01@test:~$ ssh -i key-for-step-server.pem root@192.168.100.137

Boot the load balancer.

The userdata file is userdata_serf-lbs.txt. The boot command is as follows.

[root@serf-step-server ~]# source openrc

[root@serf-step-server ~]# function get_uuid () { cat - | grep " id " | awk '{print $4}'; }

[root@serf-step-server ~]# export MY_C13_NET=`neutron net-show serf-consul-net | get_uuid`

[root@serf-step-server ~]# nova boot --flavor standard.xsmall \

> --image "centos-base" \

> --key-name key-for-internal \

> --user-data userdata_serf-lbs.txt \

> --security-groups sg-for-chap13 \

> --availability-zone az1 \

> --nic net-id=${MY_C13_NET} \

> serf-nginx

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | bXxxbgtvpJ4A |

| config_drive | |

| created | 2015-04-01T04:49:07Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | 5849ae47-d713-426e-8488-11bf828f8e7c |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {} |

| name | serf-nginx |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T04:49:07Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+

Get the IP address of the load balancer serf-nginx and keep it in an environment variable.

[root@serf-step-server ~]# export MY_SERF_LBS_IP=`nova show serf-nginx | grep "serf-consul-net" | awk '{print $5}'`

[root@serf-step-server ~]# echo $MY_SERF_LBS_IP

10.20.0.3

Start Serf agents on the step server and the load balancer to form a cluster.

Log in to the load balancer and start serf agent.

[root@serf-step-server ~]# ssh -i key-for-internal.pem root@$MY_SERF_LBS_IP

[root@serf-nginx ~]# serf agent &

[1] 1640

[root@serf-nginx ~]# ==> Starting Serf agent...

==> Starting Serf agent RPC...

==> Serf agent running!

Node name: 'serf-nginx'

Bind addr: '0.0.0.0:7946'

RPC addr: '127.0.0.1:7373'

Encrypted: false

Snapshot: false

Profile: lan

==> Log data will now stream in as it occurs:

2015/04/01 14:23:52 [INFO] agent: Serf agent starting

2015/04/01 14:23:52 [INFO] serf: EventMemberJoin: serf-nginx 10.20.0.3

2015/04/01 14:23:53 [INFO] agent: Received event: member-join

Join the Serf cluster from the step server.

[root@serf-step-server ~]# serf agent -join=${MY_SERF_LBS_IP} &

[1] 26064

[root@serf-step-server ~]# ==> Starting Serf agent...

==> Starting Serf agent RPC...

==> Serf agent running!

Node name: 'serf-step-server'

Bind addr: '0.0.0.0:7946'

RPC addr: '127.0.0.1:7373'

Encrypted: false

Snapshot: false

Profile: lan

==> Joining cluster...(replay: false)

Join completed. Synced with 1 initial agents

==> Log data will now stream in as it occurs:

2015/04/01 14:27:12 [INFO] agent: Serf agent starting

2015/04/01 14:27:12 [INFO] serf: EventMemberJoin: serf-step-server 10.20.0.1

2015/04/01 14:27:12 [INFO] agent: joining: [10.20.0.3] replay: false

2015/04/01 14:27:12 [INFO] serf: EventMemberJoin: serf-nginx 10.20.0.3

2015/04/01 14:27:12 [INFO] agent: joined: 1 nodes

2015/04/01 14:27:13 [INFO] agent: Received event: member-join

serf members lists the members.

[root@serf-step-server ~]# serf members

2015/04/01 14:28:10 [INFO] agent.ipc: Accepted client: 127.0.0.1:39197

serf-step-server 10.20.0.1:7946 alive

serf-nginx 10.20.0.3:7946 alive

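13.2.3 Overview of automating the load-balancing configuration

When a web server is booted with nova boot, it runs serf agent -join. The resulting join event triggers adding the server to the load balancer's targets. When the server is removed with nova delete, the event that Serf raises automatically for the lost member takes it out of the targets.

The load balancer uses the following files:

/etc/nginx/conf.d/lbs.conf - forwards requests on port 80 to the web servers

/etc/nginx/conf.d/lbs_upstream.conf - lists the web servers that requests are forwarded to

/opt/serf/serf_lbs.sh - run on Serf events; calls lbs_members.pl

/opt/serf/lbs_members.pl - registers the IPs in lbs_upstream.conf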
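To make the role of the last file more concrete, here is a shell sketch of the same idea: turn `serf members` output into an nginx `upstream` block. It is not the book's lbs_members.pl, whose actual logic may differ. The function name `render_upstream`, the `role=web` filter, and the choice to mark non-alive members as `down` are assumptions based on the outputs shown later in this record.

```shell
#!/bin/sh
# Hypothetical sketch: render an nginx upstream block from the output of
# `serf members` (name, addr:port, status, tags) read on stdin.
render_upstream() {
    echo "upstream web-server {"
    awk '$4 ~ /(^|,)role=web(,|$)/ {
        split($2, a, ":")              # drop the Serf port (7946)
        if ($3 == "alive") printf "    server %s:80;\n", a[1]
        else               printf "    server %s:80 down;\n", a[1]
    }'
    echo "}"
}

# Possible use inside an event handler (paths as listed above):
#   serf members | render_upstream > /etc/nginx/conf.d/lbs_upstream.conf
#   service nginx reload
```

Regenerating the whole file from the current member list on every event keeps the handler stateless, at the cost of rewriting the file even when nothing relevant changed.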

Once the contents are understood, copy the support files into place.

[root@serf-nginx ~]# git clone https://github.com/josug-book1-materials/chapter13.git

Initialized empty Git repository in /root/chapter13/.git/

remote: Counting objects: 53, done.

remote: Total 53 (delta 0), reused 0 (delta 0), pack-reused 53

Unpacking objects: 100% (53/53), done.

[root@serf-nginx ~]# cp chapter13/list13/list-13-2-4_lbs.conf /etc/nginx/conf.d/lbs.conf

[root@serf-nginx ~]# mkdir -p /opt/serf

[root@serf-nginx ~]# cp chapter13/list13/list-13-2-5_serf_lbs.sh /opt/serf/serf_lbs.sh

[root@serf-nginx ~]# chmod 700 /opt/serf/serf_lbs.sh

[root@serf-nginx ~]# cp chapter13/list13/list-13-2-6_lbs_members.pl /opt/serf/lbs_members.pl

[root@serf-nginx ~]# chmod 700 /opt/serf/lbs_members.pl

Stop serf agent on serf-nginx.

[root@serf-nginx serf]# jobs -l

[1]+ 1640 Running serf agent & (wd: ~)

[root@serf-nginx serf]# fg

serf agent (wd: ~)

^C==> Caught signal: interrupt

==> Gracefully shutting down agent...

2015/04/01 16:41:41 [INFO] agent: requesting graceful leave from Serf

2015/04/01 16:41:41 [INFO] serf: EventMemberLeave: serf-nginx 10.20.0.3

2015/04/01 16:41:42 [INFO] agent: requesting serf shutdown

2015/04/01 16:41:42 [INFO] agent: shutdown complete

Restart serf agent with -event-handler=/opt/serf/serf_lbs.sh. The join target is the step server, which is still in the cluster.

[root@serf-nginx serf]# serf agent -join=10.20.0.1 -event-handler=/opt/serf/serf_lbs.sh &

[1] 25770

[root@serf-nginx serf]# ==> Starting Serf agent...

==> Starting Serf agent RPC...

==> Serf agent running!

Node name: 'serf-nginx'

Bind addr: '0.0.0.0:7946'

RPC addr: '127.0.0.1:7373'

Encrypted: false

Snapshot: false

Profile: lan

==> Joining cluster...(replay: false)

Join completed. Synced with 1 initial agents

==> Log data will now stream in as it occurs:

2015/04/01 16:45:48 [INFO] agent: Serf agent starting

2015/04/01 16:45:48 [INFO] serf: EventMemberJoin: serf-nginx 10.20.0.3

2015/04/01 16:45:48 [INFO] agent: joining: [10.20.0.1] replay: false

2015/04/01 16:45:48 [INFO] serf: EventMemberJoin: serf-step-server 10.20.0.1

2015/04/01 16:45:48 [INFO] agent: joined: 1 nodes

2015/04/01 16:45:49 [INFO] agent: Received event: member-join

2015/04/01 16:45:49 [INFO] agent.ipc: Accepted client: 127.0.0.1:49170

2015/04/01 16:45:50 [WARN] memberlist: Refuting a suspect message (from: serf-step-server)

13.2.4 Deploying the SNS application and balancing the web tier

Boot app and dbs. The commands executed are as follows. userdata_app.txt and userdata_dbs.txt are reused from Chapter 6.

[root@serf-step-server ~]# function get_uuid () { cat - | grep " id " | awk '{print $4}'; }

[root@serf-step-server ~]# export MY_C13_NET=`neutron net-show serf-consul-net | get_uuid`

[root@serf-step-server ~]# nova boot --flavor standard.xsmall --image "centos-base" \

> --key-name key-for-internal --user-data userdata_app.txt \

> --availability-zone az1 \

> --security-groups sg-for-chap13 --nic net-id=${MY_C13_NET} \

> serf-app01

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | A5siDDYaouSr |

| config_drive | |

| created | 2015-04-01T07:00:32Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | 121dc11d-2f3a-4677-8e99-665a52a08109 |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {} |

| name | serf-app01 |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T07:00:33Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+

[root@serf-step-server ~]# nova boot --flavor standard.xsmall --image "centos-base" \

> --key-name key-for-internal --user-data userdata_dbs.txt \

> --availability-zone az1 \

> --security-groups sg-for-chap13 --nic net-id=${MY_C13_NET} \

> serf-dbs01

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | FisTkYgGU4qn |

| config_drive | |

| created | 2015-04-01T07:00:41Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | 6bd05b63-ea34-44ac-a329-7225f854471d |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {} |

| name | serf-dbs01 |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T07:00:42Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+

Set the dbs server's IP in endpoint.conf on the app server, then start the service.

[root@serf-step-server ~]# ssh -i key-for-internal.pem root@10.20.0.4

The authenticity of host '10.20.0.4 (10.20.0.4)' can't be established.

RSA key fingerprint is d2:4b:5c:9d:b5:14:15:4b:b0:f4:a4:a5:b0:99:26:16.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.20.0.4' (RSA) to the list of known hosts.

[root@serf-app01 ~]# vi /root/sample-app/endpoint.conf

[root@serf-app01 ~]# cat /root/sample-app/endpoint.conf

[rest-server]

rest_host = 127.0.0.1

rest_endpoint = http://%(rest_host)s:5555/bbs

[db-server]

db_host = 10.20.0.5

db_endpoint = mysql://user:password@%(db_host)s/sample_bbs?charset=utf8

[root@serf-app01 ~]# sh /root/sample-app/server-setup/rest.init.sh start

Starting rest.py [ OK ]

Save the app server's IP in an environment variable.

[root@serf-step-server ~]# export MY_SERF_APP_IP=`nova show serf-app01 | grep "serf-consul-net" | awk '{print $5}'`

Boot the web servers.

The userdata file is userdata_serf-web.txt.

Boot the first one as web01. The boot command is as follows.

[root@serf-step-server ~]# env | grep MY_

MY_C13_NET=76a1f690-f9d0-4e1f-afda-c55935b8985d

MY_SERF_APP_IP=10.20.0.4

MY_SERF_LBS_IP=10.20.0.3

[root@serf-step-server ~]# nova boot --flavor standard.xsmall \

> --image "centos-base" \

> --key-name key-for-internal \

> --user-data userdata_serf-web.txt \

> --availability-zone az1 \

> --security-groups sg-for-chap13 --nic net-id=${MY_C13_NET} \

> --meta LBS=${MY_SERF_LBS_IP} \

> --meta APP=${MY_SERF_APP_IP} \

> serf-web01

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | aZB9bWwZSueW |

| config_drive | |

| created | 2015-04-01T07:35:11Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | e738b360-0b10-4f68-85e0-024d81f05454 |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {"LBS": "10.20.0.3", "APP": "10.20.0.4"} |

| name | serf-web01 |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T07:35:12Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+
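The `--meta LBS=...` and `--meta APP=...` options above hand the load balancer and app server addresses to the instance as metadata. As a sketch of how a boot script could read them back, the helper below pulls one value out of the JSON served by the OpenStack metadata service, where user metadata appears under the `meta` key. The function name `meta_value` is made up, the sed-based parsing assumes single-line JSON with simple string values, and the actual contents of userdata_serf-web.txt may differ.

```shell
#!/bin/sh
# Hypothetical helper: print the string value of one metadata key from
# a single-line JSON document on stdin. A real JSON parser is more
# robust; sed is used only to keep the sketch dependency-free.
meta_value() {
    # $1 = metadata key, e.g. LBS
    sed -n 's/.*"'"$1"'": *"\([^"]*\)".*/\1/p'
}

# Possible use from userdata (assumed, not taken from the book's file):
#   LBS=$(curl -s http://169.254.169.254/openstack/latest/meta_data.json | meta_value LBS)
#   serf agent -join="$LBS" -tag role=web &
```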

After a while, the event appears in the log.

2015/04/01 16:36:17 [INFO] serf: EventMemberJoin: serf-web01 10.20.0.6

2015/04/01 16:36:18 [INFO] agent: Received event: member-join

lbs_upstream.conf has been created and contains web01's address.

[root@serf-nginx serf]# cat /etc/nginx/conf.d/lbs_upstream.conf

upstream web-server {

server 10.20.0.7:80;

}

Boot one more, [under the name web02](https://github.com/josug-book1-materials/chapter13/blob/master/scripts/06_boot_web02.sh).

[root@serf-step-server ~]# nova boot --flavor standard.xsmall \

> --image "centos-base" \

> --key-name key-for-internal \

> --user-data userdata_serf-web.txt \

> --availability-zone az1 \

> --security-groups sg-for-chap13 --nic net-id=${MY_C13_NET} \

> --meta LBS=${MY_SERF_LBS_IP} \

> --meta APP=${MY_SERF_APP_IP} \

> serf-web02

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | H7mzDxk9UQpn |

| config_drive | |

| created | 2015-04-01T07:52:19Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | bb287f3b-76ad-4350-a55d-70835547ef69 |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {"LBS": "10.20.0.3", "APP": "10.20.0.4"} |

| name | serf-web02 |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-01T07:52:20Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+

There are now four members.

[root@serf-step-server ~]# serf members

2015/04/01 16:54:03 [INFO] agent.ipc: Accepted client: 127.0.0.1:39515

serf-step-server 10.20.0.1:7946 alive

serf-nginx 10.20.0.3:7946 alive

serf-web01 10.20.0.7:7946 alive role=web

serf-web02 10.20.0.8:7946 alive role=web

It has been added to lbs_upstream.conf as well.

[root@serf-nginx serf]# cat /etc/nginx/conf.d/lbs_upstream.conf

upstream web-server {

server 10.20.0.7:80;

server 10.20.0.8:80;

}

Delete serf-web01 with nova delete. A member-failed event is raised.

[root@serf-step-server ~]# nova delete serf-web01

[root@serf-step-server ~]# 2015/04/01 16:55:29 [INFO] memberlist: Suspect serf-web01 has failed, no acks received

2015/04/01 16:55:31 [INFO] memberlist: Suspect serf-web01 has failed, no acks received

2015/04/01 16:55:33 [INFO] memberlist: Marking serf-web01 as failed, suspect timeout reached

2015/04/01 16:55:33 [INFO] serf: EventMemberFailed: serf-web01 10.20.0.7

2015/04/01 16:55:34 [INFO] agent: Received event: member-failed

2015/04/01 16:55:37 [INFO] serf: attempting reconnect to serf-web01 10.20.0.7:7946

The entry for serf-web01, 10.20.0.7:80, is now marked down.

[root@serf-nginx serf]# cat /etc/nginx/conf.d/lbs_upstream.conf

upstream web-server {

server 10.20.0.7:80 down;

server 10.20.0.8:80;

}

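13.2.5 Applications and open issues

Serf can only react to nodes joining or leaving and to arbitrary user events. To automate based on service monitoring, use Consul, covered in the next section.

13.3 Improving DB server availability with Consul

13.3.1 Service-level monitoring and automation with Consul

A comparison of Serf and Consul. Consul uses a client/server model and can monitor services.

13.3.2 Monitoring MySQL with Consul

An explanation of the monitoring and failover flow.

13.3.3 Building the DB server environment

The servers from the Serf environment are reused. As in Chapter 9, assume that the MySQL data area on dbs lives on a Cinder volume and is managed with snapshots.

First, create a volume for MySQL and attach it to serf-dbs01. The procedure is as follows.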

[root@serf-step-server ~]# function get_uuid () { cat - | grep " id " | awk '{print $4}'; }

[root@serf-step-server ~]# cinder create --availability-zone az1 --display-name consul_db01 1

+---------------------+--------------------------------------+

| Property | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | az1 |

| bootable | false |

| created_at | 2015-04-01T11:52:31.009404 |

| display_description | None |

| display_name | consul_db01 |

| encrypted | False |

| id | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

| metadata | {} |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| volume_type | None |

+---------------------+--------------------------------------+

[root@serf-step-server ~]# export MY_CONSUL_DB01=`cinder show consul_db01 |get_uuid`

[root@serf-step-server ~]# nova volume-attach serf-dbs01 $MY_CONSUL_DB01

+----------+--------------------------------------+

| Property | Value |

+----------+--------------------------------------+

| device | /dev/vdc |

| id | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

| serverId | 6bd05b63-ea34-44ac-a329-7225f854471d |

| volumeId | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

+----------+--------------------------------------+

Log in to serf-dbs01, create a partition on /dev/vdc, and format it.

[root@serf-step-server ~]# ssh -i key-for-internal.pem root@10.20.0.5

The authenticity of host '10.20.0.5 (10.20.0.5)' can't be established.

RSA key fingerprint is 5d:f9:dd:c2:da:91:37:d8:8d:c9:2e:d7:29:7d:b5:93.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.20.0.5' (RSA) to the list of known hosts.

[root@serf-dbs01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 422K 0 rom

vda 252:0 0 10G 0 disk

└─vda1 252:1 0 10G 0 part /

vdb 252:16 0 10G 0 disk /mnt

vdc 252:32 0 1G 0 disk

[root@serf-dbs01 ~]# fdisk /dev/vdc

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xd336e85f.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-2080, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-2080, default 2080):

Using default value 2080

Command (m for help): p

Disk /dev/vdc: 1073 MB, 1073741824 bytes

16 heads, 63 sectors/track, 2080 cylinders

Units = cylinders of 1008 * 512 = 516096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xd336e85f

Device Boot Start End Blocks Id System

/dev/vdc1 1 2080 1048288+ 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@serf-dbs01 ~]# mkfs.ext4 -L mysql_data /dev/vdc1

mke2fs 1.41.12 (17-May-2010)

Filesystem label=mysql_data

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

65536 inodes, 262072 blocks

13103 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=268435456

8 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Writing inode tables: done

Creating journal (4096 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 23 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@serf-dbs01 ~]# tune2fs -c 0 -i 0 -r 0 /dev/vdc1

tune2fs 1.41.12 (17-May-2010)

Setting maximal mount count to -1

Setting interval between checks to 0 seconds

Setting reserved blocks count to 0

Move the MySQL data onto the volume and make it available to MySQL.

[root@serf-dbs01 ~]# mkdir /tmp/data

[root@serf-dbs01 ~]# mount LABEL=mysql_data /tmp/data

[root@serf-dbs01 ~]# service mysqld stop

Stopping mysqld: [ OK ]

[root@serf-dbs01 ~]# mv /var/lib/mysql/* /tmp/data

[root@serf-dbs01 ~]# chown -R mysql:mysql /tmp/data

[root@serf-dbs01 ~]# umount /tmp/data

[root@serf-dbs01 ~]# echo "LABEL=mysql_data /var/lib/mysql ext4 defaults,noatime 0 2" >> /etc/fstab

[root@serf-dbs01 ~]# mount /var/lib/mysql

[root@serf-dbs01 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/vda1 10189112 1326504 8338372 14% /

tmpfs 510172 0 510172 0% /dev/shm

/dev/vdb 10190136 23028 9642820 1% /mnt

/dev/vdc1 1015416 22780 992636 3% /var/lib/mysql

[root@serf-dbs01 ~]# service mysqld start

Starting mysqld: [ OK ]

Because MySQL was stopped temporarily, restart the service on the app server as well.

[root@serf-app01 ~]# sh /root/sample-app/server-setup/rest.init.sh restart

Starting rest.py [ OK ]

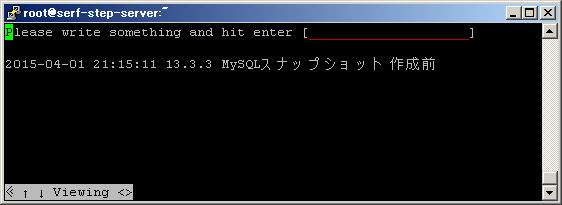

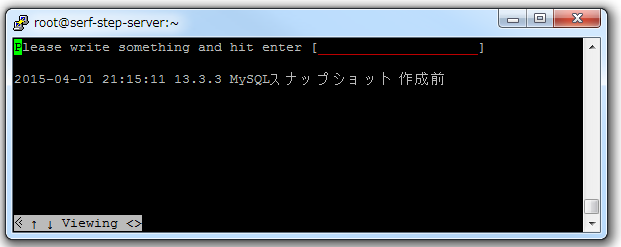

Post a message, which also serves to verify that the application works.

Create a snapshot of the volume.

First, stop MySQL and unmount the volume.

[root@serf-dbs01 ~]# service mysqld stop

Stopping mysqld: [ OK ]

[root@serf-dbs01 ~]# umount /var/lib/mysql/

Detach the volume, then wait until its status becomes available.

[root@serf-step-server ~]# nova volume-detach serf-dbs01 $MY_CONSUL_DB01

[root@serf-step-server ~]# cinder show $MY_CONSUL_DB01

+---------------------------------------+--------------------------------------+

| Property | Value |

+---------------------------------------+--------------------------------------+

| attachments | [] |

| availability_zone | az1 |

| bootable | false |

| created_at | 2015-04-01T11:52:31.000000 |

| display_description | None |

| display_name | consul_db01 |

| encrypted | False |

| id | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

| metadata | {u'readonly': u'False'} |

| os-vol-tenant-attr:tenant_id | 106e169743964758bcad1f06cc69c472 |

| os-volume-replication:driver_data | None |

| os-volume-replication:extended_status | None |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | available |

| volume_type | None |

+---------------------------------------+--------------------------------------+
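Waiting for the `available` status can also be scripted instead of re-running `cinder show` by hand. The sketch below polls any command that prints a `| status | ... |` table row until the expected value appears or a retry limit is hit. The function name `wait_for_status` and the `POLL_INTERVAL` variable are inventions for this example.

```shell
#!/bin/sh
# Hypothetical polling helper.
#   $1 = wanted status, $2 = max attempts, rest = command to run
# The command's output must contain a table row like "| status | available |".
wait_for_status() {
    want=$1
    tries=$2
    shift 2
    i=0
    while [ "$i" -lt "$tries" ]; do
        status=$("$@" | awk '$2 == "status" { print $4; exit }')
        if [ "$status" = "$want" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "${POLL_INTERVAL:-5}"
    done
    return 1
}

# Possible use with the variable defined earlier in this section:
#   wait_for_status available 30 cinder show "$MY_CONSUL_DB01"
```

Taking the status command as arguments keeps the helper usable for `nova show` or `cinder snapshot-show` as well, since their tables use the same row layout.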

Create the snapshot.

[root@serf-step-server ~]# cinder snapshot-create --display-name consul_db01_snap consul_db01

+---------------------+--------------------------------------+

| Property | Value |

+---------------------+--------------------------------------+

| created_at | 2015-04-01T12:29:26.003305 |

| display_description | None |

| display_name | consul_db01_snap |

| id | e7010900-4285-4b14-aa52-c7ea92af15a9 |

| metadata | {} |

| size | 1 |

| status | creating |

| volume_id | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

+---------------------+--------------------------------------+

Save the snapshot's UUID in an environment variable.

[root@serf-step-server ~]# export MY_CONSUL_SNAP=`cinder snapshot-show consul_db01_snap | get_uuid`

[root@serf-step-server ~]# echo $MY_CONSUL_SNAP

e7010900-4285-4b14-aa52-c7ea92af15a9

13.3.4 Building the Consul server environment

Install Consul on the step server using the installation script install_consul.sh.

[root@serf-step-server ~]# cd chapter13/install_chap13/

[root@serf-step-server install_chap13]# sh install_consul.sh

--2015-04-01 21:46:38-- https://dl.bintray.com/mitchellh/consul/0.4.1_linux_amd64.zip

Resolving dl.bintray.com... 5.153.24.114

~~~~

100%[=================================================================>] 4,073,679 11.0M/s in 0.4s

2015-04-01 21:46:38 (11.0 MB/s) - “0.4.1_linux_amd64.zip” saved [4073679/4073679]

Archive: ./0.4.1_linux_amd64.zip

inflating: consul

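Configure the step server as a DNS server. Consul has a built-in DNS server, but it listens on port 8600, so it cannot be used as an ordinary resolver as-is.

Run dnsmasq, a lightweight DNS forwarder, so that Consul's DNS service can be used through normal name resolution.

Install dnsmasq and configure it to forward queries for the consul domain to port 8600.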

[root@serf-step-server ~]# yum install -y dnsmasq

~~~~~~~

Installed:

dnsmasq.x86_64 0:2.48-14.el6

Complete!

[root@serf-step-server ~]# echo "server=/consul/127.0.0.1#8600" >> /etc/dnsmasq.conf

[root@serf-step-server ~]# echo "strict-order" >> /etc/dnsmasq.conf
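Appending with `>>` adds a duplicate line every time the step is repeated. A small guard makes the step safe to re-run. The sketch below adds a line only if it is not already present; `ensure_line` is a made-up helper name, and the file path in the usage comment is the one from the commands above.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if an identical
# line is not already there (exact, whole-line, fixed-string match).
ensure_line() {
    # $1 = file, $2 = line
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Possible use:
#   ensure_line /etc/dnsmasq.conf "server=/consul/127.0.0.1#8600"
#   ensure_line /etc/dnsmasq.conf "strict-order"
```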

Point the resolver at localhost and start dnsmasq.

[root@serf-step-server ~]# vi /etc/resolv.conf

[root@serf-step-server ~]# cat /etc/resolv.conf

nameserver 127.0.0.1

nameserver 8.8.8.8

nameserver 8.8.4.4

[root@serf-step-server ~]# service dnsmasq start

dnsdomainname: Unknown host

Starting dnsmasq: [ OK ]

Set up consul watch so that the failover script runs automatically.

Start the Consul server. The commands run are here.

[root@serf-step-server ~]# mkdir -p /opt/consul/conf /opt/consul/dat

[root@serf-step-server ~]# consul agent \

> -bootstrap \

> -server \

> -node=consul-server \

> -dc=openstack \

> -data-dir=/opt/consul/dat \

> -config-file=/opt/consul/conf

==> WARNING: Bootstrap mode enabled! Do not enable unless necessary

==> WARNING: It is highly recommended to set GOMAXPROCS higher than 1

==> Starting Consul agent...

==> Starting Consul agent RPC...

==> Consul agent running!

Node name: 'consul-server'

Datacenter: 'openstack'

Server: true (bootstrap: true)

Client Addr: 127.0.0.1 (HTTP: 8500, DNS: 8600, RPC: 8400)

Cluster Addr: 10.20.0.1 (LAN: 8301, WAN: 8302)

Gossip encrypt: false, RPC-TLS: false, TLS-Incoming: false

==> Log data will now stream in as it occurs:

2015/04/02 09:54:10 [INFO] raft: Node at 10.20.0.1:8300 [Follower] entering Follower state

2015/04/02 09:54:10 [INFO] serf: EventMemberJoin: consul-server 10.20.0.1

2015/04/02 09:54:10 [INFO] serf: EventMemberJoin: consul-server.openstack 10.20.0.1

2015/04/02 09:54:10 [INFO] consul: adding server consul-server (Addr: 10.20.0.1:8300) (DC: openstack)

2015/04/02 09:54:10 [INFO] consul: adding server consul-server.openstack (Addr: 10.20.0.1:8300) (DC: openstack)

2015/04/02 09:54:10 [ERR] agent: failed to sync remote state: No cluster leader

2015/04/02 09:54:11 [WARN] raft: Heartbeat timeout reached, starting election

2015/04/02 09:54:11 [INFO] raft: Node at 10.20.0.1:8300 [Candidate] entering Candidate state

2015/04/02 09:54:11 [INFO] raft: Election won. Tally: 1

2015/04/02 09:54:11 [INFO] raft: Node at 10.20.0.1:8300 [Leader] entering Leader state

2015/04/02 09:54:11 [INFO] consul: cluster leadership acquired

2015/04/02 09:54:11 [INFO] consul: New leader elected: consul-server

2015/04/02 09:54:11 [INFO] raft: Disabling EnableSingleNode (bootstrap)

2015/04/02 09:54:11 [INFO] consul: member 'consul-server' joined, marking health alive

2015/04/02 09:54:14 [INFO] agent: Synced service 'consul'

==> Newer Consul version available: 0.5.0

I forgot the trailing "&", so the agent is running in the foreground. Check the members from another terminal.

[root@serf-step-server ~]# consul members

Node Address Status Type Build Protocol

consul-server 10.20.0.1:8301 alive server 0.4.1 2
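Since the agent above ended up in the foreground, here is a minimal sketch of one way to start a long-running command detached from the terminal, with its output kept in a log file. The helper name run_detached and the log path /var/log/consul.log are assumptions for illustration. The demonstration uses echo so that it runs anywhere:

```shell
#!/bin/sh
# run_detached LOGFILE COMMAND [ARGS...]
# Start COMMAND in the background, immune to hangups, with stdout and stderr
# written to LOGFILE. The PID is left in DETACHED_PID.
run_detached () {
    log=$1
    shift
    nohup "$@" > "$log" 2>&1 < /dev/null &
    DETACHED_PID=$!
}

# Intended use on the step server (not executed here; the log path is hypothetical):
#   run_detached /var/log/consul.log consul agent -bootstrap -server \
#       -node=consul-server -dc=openstack \
#       -data-dir=/opt/consul/dat -config-file=/opt/consul/conf

# Harmless demonstration with echo instead of consul.
demo_log=$(mktemp)
run_detached "$demo_log" echo "agent started"
wait "$DETACHED_PID"
cat "$demo_log"
```

With the agent detached this way, the startup messages and the raft log lines shown above would go to the log file instead of the terminal.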

Attach the volume consul_db01 to serf-dbs01.

[root@serf-step-server ~]# function get_uuid () { cat - | grep " id " | awk '{print $4}'; }

[root@serf-step-server ~]# export MY_CONSUL_DB01=`cinder show consul_db01 |get_uuid`

[root@serf-step-server ~]# nova volume-attach serf-dbs01 $MY_CONSUL_DB01

+----------+--------------------------------------+

| Property | Value |

+----------+--------------------------------------+

| device | /dev/vdc |

| id | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

| serverId | 6bd05b63-ea34-44ac-a329-7225f854471d |

| volumeId | db295dfc-810c-4d73-aaaf-d2cf2d3dd5c6 |

+----------+--------------------------------------+

serf-dbs01 needs to know the step server's address when it joins the Consul cluster. Store the address in the variable MY_CONSUL_SERVER and set it in the metadata of serf-dbs01.

[root@serf-step-server ~]# export MY_CONSUL_SERVER=`nova show serf-step-server | grep "serf-consul-net" | awk '{print $5}' | sed -e "s/,//g"`

[root@serf-step-server ~]# echo $MY_CONSUL_SERVER

10.20.0.1

[root@serf-step-server ~]# nova meta serf-dbs01 set CONSUL_SERVER=${MY_CONSUL_SERVER}
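The grep/awk/sed pipeline above is easier to follow on a single sample row. The sketch below assumes the network row of `nova show` has the shape shown. The second address is a made-up placeholder, and extract_ip is a name introduced only for this example:

```shell
#!/bin/sh
# extract_ip: same pipeline as above, reading the table from stdin.
extract_ip () { grep "serf-consul-net" | awk '{print $5}' | sed -e "s/,//g"; }

# Assumed shape of the relevant row of `nova show serf-step-server`;
# 192.0.2.10 is a placeholder address for illustration only.
row='| serf-consul-net network              | 10.20.0.1, 192.0.2.10                |'

# awk splits on whitespace:
#   $1="|"  $2="serf-consul-net"  $3="network"  $4="|"  $5="10.20.0.1,"
# sed then strips the comma that follows the first address.
printf '%s\n' "$row" | extract_ip
```

The pipeline always takes the first address in the row, so it relies on the serf-consul-net address being listed first.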

On serf-dbs01, check the mount and start mysql.

[root@serf-dbs01 ~]# mount -a

[root@serf-dbs01 ~]# df -h /var/lib/mysql/

Filesystem Size Used Avail Use% Mounted on

/dev/vdc1 992M 23M 970M 3% /var/lib/mysql

[root@serf-dbs01 ~]# service mysqld start

Starting mysqld: [ OK ]

Restart the application on serf-app01.

[root@serf-app01 ~]# sh /root/sample-app/server-setup/rest.init.sh restart

Starting rest.py [ OK ]

Set up the Consul agent on serf-dbs01.

First, install Consul.

[root@serf-dbs01 ~]# git clone -q https://github.com/josug-book1-materials/chapter13.git

Unpacking objects: 100% (53/53), done.

[root@serf-dbs01 ~]# cd chapter13/install_chap13

[root@serf-dbs01 install_chap13]# sh install_consul.sh

~~~~~~~

Archive: ./0.4.1_linux_amd64.zip

inflating: consul

Prepare the monitoring definition file for MySQL. Its contents are here.

[root@serf-dbs01 ~]# mkdir -p /opt/consul/conf

[root@serf-dbs01 ~]# cp chapter13/list13/chap13-3-4-consul_conf_mysql.json /opt/consul/conf/_mysql.json
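The contents of the copied file are not reproduced in this log. For orientation only, the sketch below writes a Consul service definition of the general kind such a file would contain. The service name "mysql" matches the agent log further down. The port, the check command (mysqladmin ping) and the 10s interval are assumptions, and CONF_DIR is a hypothetical variable that defaults to a scratch directory:

```shell
#!/bin/sh
# CONF_DIR is a hypothetical variable; it defaults to a scratch directory so
# the sketch does not overwrite the real /opt/consul/conf.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

# Illustrative service definition, NOT the contents of the repository file.
# Port, check script and interval are assumptions.
cat > "$CONF_DIR/_mysql.json" <<'EOF'
{
  "service": {
    "name": "mysql",
    "port": 3306,
    "check": {
      "script": "mysqladmin ping",
      "interval": "10s"
    }
  }
}
EOF

cat "$CONF_DIR/_mysql.json"
```

With a script check, Consul treats exit code 0 as passing, 1 as warning and anything else as critical, which is consistent with the "is now warning" messages that appear later when mysqld is stopped.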

Start the Consul agent. The procedure is here.

[root@serf-dbs01 ~]# yum install -y jq

~~~~~~~

Installed:

jq.x86_64 0:1.3-2.el6

Complete!

[root@serf-dbs01 ~]# CONSUL_SERVER=`curl -s http://169.254.169.254/openstack/latest/meta_data.json | jq -r '.meta["CONSUL_SERVER"]'`

[root@serf-dbs01 ~]# echo $CONSUL_SERVER

10.20.0.1
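If the metadata key is missing, `jq -r` prints the literal string null, and a failed curl leaves the variable empty. A minimal sketch of a guard that could be run before passing the value to -join follows. The function name valid_consul_server is an assumption for this example:

```shell
#!/bin/sh
# valid_consul_server VALUE: succeed only if VALUE looks like a dotted IPv4
# address. Rejects the empty string and the literal "null" printed by jq -r
# when the metadata key does not exist.
valid_consul_server () {
    case $1 in
        ''|null) return 1 ;;
    esac
    printf '%s\n' "$1" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
}

for candidate in "10.20.0.1" "null" ""; do
    if valid_consul_server "$candidate"; then
        echo "ok: '$candidate'"
    else
        echo "refusing to join with CONSUL_SERVER='$candidate'"
    fi
done
```

The pattern only checks the shape of the address, not whether each octet is in range or whether the host is reachable.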

[root@serf-dbs01 ~]# /usr/local/bin/consul agent \

> -dc=openstack \

> -data-dir=/opt/consul/dat \

> -config-file=/opt/consul/conf \

> -join=${CONSUL_SERVER} &

[1] 27516

[root@serf-dbs01 ~]# ==> WARNING: It is highly recommended to set GOMAXPROCS higher than 1

==> Starting Consul agent...

==> Starting Consul agent RPC...

==> Joining cluster...

Join completed. Synced with 1 initial agents

==> Consul agent running!

Node name: 'serf-dbs01'

Datacenter: 'openstack'

Server: false (bootstrap: false)

Client Addr: 127.0.0.1 (HTTP: 8500, DNS: 8600, RPC: 8400)

Cluster Addr: 10.20.0.5 (LAN: 8301, WAN: 8302)

Gossip encrypt: false, RPC-TLS: false, TLS-Incoming: false

==> Log data will now stream in as it occurs:

2015/04/02 10:30:37 [INFO] serf: EventMemberJoin: serf-dbs01 10.20.0.5

2015/04/02 10:30:37 [INFO] agent: (LAN) joining: [10.20.0.1]

2015/04/02 10:30:37 [INFO] serf: EventMemberJoin: consul-server 10.20.0.1

2015/04/02 10:30:37 [INFO] agent: (LAN) joined: 1 Err: <nil>

2015/04/02 10:30:37 [ERR] agent: failed to sync remote state: No known Consul servers

2015/04/02 10:30:37 [INFO] consul: adding server consul-server (Addr: 10.20.0.1:8300) (DC: openstack)

2015/04/02 10:30:37 [INFO] agent: Synced service 'mysql'

2015/04/02 10:30:37 [INFO] agent: Synced check 'service:mysql'

==> Newer Consul version available: 0.5.0

serf-dbs01 now appears in the member list.

[root@serf-step-server ~]# consul members

Node Address Status Type Build Protocol

consul-server 10.20.0.1:8301 alive server 0.4.1 2

serf-dbs01 10.20.0.5:8301 alive client 0.4.1 2

The IP address of serf-dbs01 can be looked up under the name "mysql.service.openstack.consul".

[root@serf-step-server ~]# dig mysql.service.openstack.consul a

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.30.rc1.el6_6.2 <<>> mysql.service.openstack.consul a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 20213

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;mysql.service.openstack.consul. IN A

;; ANSWER SECTION:

mysql.service.openstack.consul. 0 IN A 10.20.0.5

;; Query time: 1 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Thu Apr 2 10:32:58 2015

;; MSG SIZE rcvd: 94

On the app server, change the dbs reference from a hard-coded IP address to the host name.

[root@serf-app01 ~]# cat /root/sample-app/endpoint.conf

[rest-server]

rest_host = 127.0.0.1

rest_endpoint = http://%(rest_host)s:5555/bbs

[db-server]

db_host = mysql.service.openstack.consul

db_endpoint = mysql://user:password@%(db_host)s/sample_bbs?charset=utf8

[root@serf-app01 ~]# cat /etc/resolv.conf

nameserver 10.20.0.1

nameserver 8.8.8.8

nameserver 8.8.4.4

Name resolution through 10.20.0.1 succeeds!

[root@serf-app01 ~]# dig mysql.service.openstack.consul a @10.20.0.1

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.30.rc1.el6_6.2 <<>> mysql.service.openstack.consul a @10.20.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 50721

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;mysql.service.openstack.consul. IN A

;; ANSWER SECTION:

mysql.service.openstack.consul. 0 IN A 10.20.0.5

;; Query time: 1 msec

;; SERVER: 10.20.0.1#53(10.20.0.1)

;; WHEN: Thu Apr 2 11:05:32 2015

;; MSG SIZE rcvd: 94

MySQL can also be reached by host name.

[root@serf-app01 ~]# mysql -h mysql.service.openstack.consul -uuser -ppassword

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 336

Server version: 5.1.73 Source distribution

Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> exit

Bye

Restart the service.

[root@serf-app01 ~]# sh /root/sample-app/server-setup/rest.init.sh restart

Starting rest.py [ OK ]

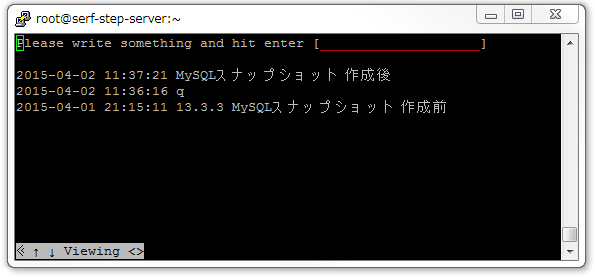

Post a message in advance.

13.3.5 Running the failover and verifying its behavior

Prepare the userdata for booting the dbs. Its contents are here.

[root@serf-step-server ~]# cp chapter13/list13/list-13-3-4_userdata_consul-dbs.txt userdata_consul-dbs.txt

Prepare /opt/consul/consul_mysql_recovery.sh, a script that automatically boots a dbs for failover. Its contents are here.

[root@serf-step-server ~]# cp chapter13/list13/list-13-3-5_consul_mysql_recovery.sh /opt/consul/consul_mysql_recovery.sh

[root@serf-step-server ~]# chmod 700 /opt/consul/consul_mysql_recovery.sh

Failover is configured on the Consul server with consul watch.

Prepare /opt/consul/consul_watch.sh, which checks the state of mysql through Consul. Its contents are here.

[root@serf-step-server ~]# cp chapter13/list13/list-13-3-6_consul_watch.sh /opt/consul/consul_watch.sh

[root@serf-step-server ~]# chmod 700 /opt/consul/consul_watch.sh

Start monitoring with consul watch. The commands run are here.
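consul watch invokes its handler whenever the watched data changes, so a handler can run more than once during a single outage. The contents of consul_watch.sh are not shown in this log, so the following is only a sketch of one way to make sure a recovery action starts a single time. The names LOCK_DIR and run_recovery_once are assumptions, and echo stands in for the recovery script:

```shell
#!/bin/sh
# LOCK_DIR and run_recovery_once are hypothetical names for this sketch.
# The lock lives in a scratch directory by default.
LOCK_DIR=${LOCK_DIR:-$(mktemp -d)/mysql_recovery.lock}

# run_recovery_once COMMAND [ARGS...]
# mkdir either creates the directory or fails, atomically, so only the first
# caller obtains the lock and runs COMMAND.
run_recovery_once () {
    if mkdir "$LOCK_DIR" 2>/dev/null; then
        "$@"
    else
        echo "recovery already started, skipping"
    fi
}

# Demonstration with echo in place of /opt/consul/consul_mysql_recovery.sh.
run_recovery_once echo "starting recovery"
run_recovery_once echo "starting recovery"
```

The lock directory has to be removed by hand (or by the recovery script on completion) before the next failover can be triggered.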

Stopping MySQL raises a warning.

[root@serf-dbs01 ~]# service mysqld stop

Stopping mysqld: [ OK ]

2015/04/02 13:38:43 [WARN] Check 'service:mysql' is now warning

2015/04/02 13:38:43 [INFO] agent: Synced check 'service:mysql'

2015/04/02 13:38:53 [WARN] Check 'service:mysql' is now warning

Kill the consul process on the dbs with killall.

[root@serf-dbs01 ~]# killall -9 consul

[1]+ Killed /usr/local/bin/consul agent -dc=openstack -data-dir=/opt/consul/dat -config-file=/opt/consul/conf -join=${CONSUL_SERVER}

The volume consul_db01_copy is created from the snapshot, and serf-dbs02 is started with nova boot.

[root@serf-step-server ~]# consul watch -http-addr=127.0.0.1:8500 -type=service -service=mysql /opt/consul/consul_watch.sh

+---------------------+--------------------------------------+

| Property | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | az1 |

| bootable | false |

| created_at | 2015-04-02T05:05:42.147738 |

| display_description | None |

| display_name | consul_db01_copy |

| encrypted | False |

| id | 8cad16b1-5dbe-44a9-8e17-446013f0c434 |

| metadata | {} |

| size | 1 |

| snapshot_id | e7010900-4285-4b14-aa52-c7ea92af15a9 |

| source_volid | None |

| status | creating |

| volume_type | None |

+---------------------+--------------------------------------+

+--------------------------------------+----------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | a75eMnmWCayn |

| config_drive | |

| created | 2015-04-02T05:05:45Z |

| flavor | standard.xsmall (100) |

| hostId | |

| id | 1b22ecb8-11d2-4e6e-b388-c81c9939a566 |

| image | centos-base (098f948e-e80b-4b1a-8a46-f8d2dd57e149) |

| key_name | key-for-internal |

| metadata | {"CONSUL_SERVER": "10.20.0.1"} |

| name | serf-dbs02 |

| os-extended-volumes:volumes_attached | [{"id": "8cad16b1-5dbe-44a9-8e17-446013f0c434"}] |

| progress | 0 |

| security_groups | sg-for-chap13 |

| status | BUILD |

| tenant_id | 106e169743964758bcad1f06cc69c472 |

| updated | 2015-04-02T05:05:45Z |

| user_id | 98dd78b670884b64b879568215777c53 |

+--------------------------------------+----------------------------------------------------+

serf-dbs02 has indeed been created.

[root@serf-step-server ~]# nova list --field name,networks --name serf-dbs

+--------------------------------------+------------+---------------------------+

| ID | Name | Networks |

+--------------------------------------+------------+---------------------------+

| 6bd05b63-ea34-44ac-a329-7225f854471d | serf-dbs01 | serf-consul-net=10.20.0.5 |

| 1b22ecb8-11d2-4e6e-b388-c81c9939a566 | serf-dbs02 | serf-consul-net=10.20.0.9 |

+--------------------------------------+------------+---------------------------+

The address of mysql.service.openstack.consul is now 10.20.0.9, the address of serf-dbs02.

[root@serf-step-server ~]# dig mysql.service.openstack.consul a

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.30.rc1.el6_6.2 <<>> mysql.service.openstack.consul a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 52430

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;mysql.service.openstack.consul. IN A

;; ANSWER SECTION:

mysql.service.openstack.consul. 0 IN A 10.20.0.9

This step is not automated here, so restart the service on the app server by hand.

[root@serf-app01 ~]# sh /root/sample-app/server-setup/rest.init.sh restart

Starting rest.py [ OK ]

Connecting to the lbs shows that the data has reverted to its state at the time the snapshot was taken.

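How to restart the service on the app server automatically when the dbs fails over is described here. However, I suspect that the Consul approach restarts the service immediately at failover, without waiting for the failover-target dbs to come up. The Serf approach restarts on member-join, so the restart runs after the dbs has booted...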
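One way to address the concern about restarting before the new dbs is ready would be to poll until MySQL answers and only then restart the application. The following is a minimal sketch under that assumption, not the method from the book. The helper name wait_for and the retry counts are made up for illustration:

```shell
#!/bin/sh
# wait_for TRIES DELAY COMMAND [ARGS...]
# Run COMMAND up to TRIES times, sleeping DELAY seconds after each failure.
# Returns 0 as soon as COMMAND succeeds, 1 if it never does.
wait_for () {
    tries=$1
    delay=$2
    shift 2
    i=0
    while [ "$i" -lt "$tries" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Intended use on the app server (not executed here; credentials as in this log):
#   wait_for 30 10 mysqladmin -h mysql.service.openstack.consul -uuser -ppassword ping \
#       && sh /root/sample-app/server-setup/rest.init.sh restart

# Harmless demonstration: `true` succeeds at once, `false` never does.
wait_for 3 0 true  && echo "ready"
wait_for 3 0 false || echo "gave up"
```

Polling by host name also picks up the DNS change, since each attempt resolves mysql.service.openstack.consul again.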

Chapter 13 is complete.