Introduction

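- So far I have been doing so-called "supervised learning" with CNNs, RNNs, and the like.

- This time, with the famous AlphaGo and its "reinforcement learning" in mind, I tried building a simple game AI. (Strictly speaking, the code in this post is still supervised: it trains a classifier that predicts the result of a game from a board position.)

- That said, I only made a few small changes to code that someone else had already written.

- Apparently the formal Japanese name for the ○× game (tic-tac-toe) is 三目並べ. (I didn't know that.)

- The finer details are the same as in my previous posts, so I won't repeat them here.

- The code is not complicated, so I haven't used slim or pulled values out into constants.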

Reference

第8回 TensorFlow で○×ゲームの AI を作ってみよう (Part 8: Building a tic-tac-toe AI with TensorFlow)

Environment

| # | OS / Software / Library | Version |
|---|---|---|
| 1 | Mac OS X | El Capitan |
| 2 | Python | 2.7.x |
| 3 | TensorFlow | 1.2.x |

Details

Training data

I use the data published here as is.

https://github.com/sfujiwara/tictactoe-tensorflow/tree/master/data
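In the script below, every column of a row except the last is fed to the network as the nine squares, and the last column is turned into a 3-class one-hot label. Here is a minimal pure-Python sketch of that mapping for a single row. `split_row` and the example row are hypothetical, and the cell encoding (1, -1, 0) is my assumption rather than something checked against data.csv.

```python
# Hypothetical helper: split one data row into board features and a one-hot label.
def split_row(row):
    squares = [float(v) for v in row[:-1]]  # everything except the last value: the board
    result = row[-1]                        # last value: the game result
    label = [0.0, 0.0, 0.0]
    if result == 1:
        label[0] = 1.0   # x wins
    elif result == -1:
        label[1] = 1.0   # o wins
    else:
        label[2] = 1.0   # draw
    return squares, label


# Made-up example row (NOT taken from data.csv); cell values 1 / -1 / 0 are assumed.
example_row = [1, -1, 1, -1, 1, -1, 0, 0, 1, 1]
squares, label = split_row(example_row)
print(squares)
print(label)
```

The script does the same thing for all rows at once with NumPy slicing (`mat[:, :-1]` and `mat[:, -1]`).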

Source code

ticktacktoo.py

#!/usr/local/bin/python
# -*- coding: utf-8 -*-
import os
import shutil
import numpy as np
import tensorflow as tf

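def inference(squares_placeholder):
    # First hidden layer
    with tf.name_scope('hidden1') as scope:
        hidden1 = tf.layers.dense(squares_placeholder, 32, activation=tf.nn.relu)
    # Second hidden layer
    with tf.name_scope('hidden2') as scope:
        hidden2 = tf.layers.dense(hidden1, 32, activation=tf.nn.relu)
    # Output (dense) layer: one unit per class (x wins / o wins / draw)
    with tf.name_scope('logits') as scope:
        logits = tf.layers.dense(hidden2, 3)
    # Normalize with the softmax function
    # NOTE: loss() passes this output to tf.losses.softmax_cross_entropy, which
    # applies softmax again, so the loss cannot fall much below about 0.55.
    # Returning the raw logits here would avoid that; the code is left as it was run.
    with tf.name_scope('softmax') as scope:
        logits = tf.nn.softmax(logits)
    return logits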

# Compute the cross-entropy loss between the labels and the model output
def loss(labels_placeholder, logits):
    cross_entropy = tf.losses.softmax_cross_entropy(
        onehot_labels=labels_placeholder,
        logits=logits,
        label_smoothing=1e-5
    )
    # Record the value for TensorBoard
    tf.summary.scalar("cross_entropy", cross_entropy)
    return cross_entropy

# Train the model by backpropagation, based on the loss
def training(learning_rate, loss):
    # minimize() seems to take care of all of that
    # (computing the gradients and updating the parameters)
    train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss)
    return train_step

# Compute the accuracy of the predictions produced by inference()
def accuracy(logits, labels):
    # Compare the predicted and true labels; True where they match
    correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(labels, 1))
    # Cast the booleans to float and average them to get the accuracy
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    # Record the value for TensorBoard
    tf.summary.scalar("accuracy", accuracy)
    return accuracy

if __name__ == '__main__':
    np.random.seed(1)
    mat = np.loadtxt('/workspace/tictactoe/data.csv', skiprows=1, delimiter=",")
    # Randomly pick 4000 of the 5890 rows for training; the rest are used for testing
    ind_train = np.random.choice(5890, 4000, replace=False)
    ind_test = np.array([i for i in range(5890) if i not in ind_train])
    # Every column except the last holds the 9 squares of the board
    train_square = mat[ind_train, :-1]
    test_square = mat[ind_test, :-1]
    # One-hot encode the last column (the result of the game)
    all_label = np.zeros([len(mat), 3])
    for i, j in enumerate(mat[:, -1]):
        if j == 1:
            # x win
            all_label[i][0] = 1.
        elif j == -1:
            # o win
            all_label[i][1] = 1.
        else:
            # draw
            all_label[i][2] = 1.
    train_label = all_label[ind_train]
    test_label = all_label[ind_test]

    with tf.Graph().as_default() as graph:
        tf.set_random_seed(0)
        # Tensor for the boards (any number (None) of boards with 9 squares each)
        squares_placeholder = tf.placeholder(tf.float32, [None, 9])
        # Tensor for the labels (any number (None) of 3-class one-hot labels)
        labels_placeholder = tf.placeholder(tf.float32, [None, 3])
        # Build the model
        logits = inference(squares_placeholder)
        # Call loss() to compute the loss
        # (named loss_op so that it does not shadow the loss() function)
        loss_op = loss(labels_placeholder, logits)
        # Call training() to build the op that adjusts the model parameters
        train_step = training(0.01, loss_op)
        # Accuracy
        accuracy_op = accuracy(logits, labels_placeholder)
        # Prepare to save the model
        saver = tf.train.Saver()
        # Create the Session (TensorFlow computations have to run inside a Session)
        sess = tf.Session()
        # Initialize the variables (the first thing to do once the Session exists)
        sess.run(tf.global_variables_initializer())
        # TensorBoard setup: merge all summaries into a single op
        summary_step = tf.summary.merge_all()
        # Directory where the TensorBoard logs are written
        summary_writer = tf.summary.FileWriter('/workspace/tictactoe/data', sess.graph)

        for step in range(10000):
            # Sample a mini-batch of 1000 training rows (with replacement)
            ind = np.random.choice(len(train_label), 1000)
            sess.run(
                train_step,
                feed_dict={squares_placeholder: train_square[ind], labels_placeholder: train_label[ind]}
            )
            # Every 100 steps, evaluate on the full training and test sets
            if step % 100 == 0:
                train_loss = sess.run(
                    loss_op,
                    feed_dict={squares_placeholder: train_square, labels_placeholder: train_label}
                )
                train_accuracy, labels_pred = sess.run(
                    [accuracy_op, logits],
                    feed_dict={squares_placeholder: train_square, labels_placeholder: train_label}
                )
                test_accuracy = sess.run(
                    accuracy_op,
                    feed_dict={squares_placeholder: test_square, labels_placeholder: test_label}
                )
                summary = sess.run(
                    summary_step,
                    feed_dict={squares_placeholder: train_square, labels_placeholder: train_label}
                )
                summary_writer.add_summary(summary, step)
                print("Iteration: {0} Loss: {1} Train Accuracy: {2} Test Accuracy{3}".format(
                    step, train_loss, train_accuracy, test_accuracy
                ))

        # Save the trained model
        save_path = saver.save(sess, 'tictactoe.ckpt')
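One thing worth checking in the listing above: `inference()` returns the output of `tf.nn.softmax`, and `tf.losses.softmax_cross_entropy` applies softmax to its `logits` argument once more. The following is a minimal pure-Python sketch (no TensorFlow; `softmax` and `cross_entropy` here are hypothetical helpers, not the TensorFlow functions) of what that second softmax does to the lowest loss the model can reach. It ignores the tiny `label_smoothing` term.

```python
import math


def softmax(values):
    # Numerically stable softmax over a plain list of floats
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(onehot, probs):
    # Cross-entropy between a one-hot label and a probability vector
    return -sum(t * math.log(p) for t, p in zip(onehot, probs))


label = [1.0, 0.0, 0.0]
# The most confident correct output the first softmax could ever produce
perfect = [1.0, 0.0, 0.0]

# Softmax applied a second time, as the loss function does to its `logits` argument
loss_floor = cross_entropy(label, softmax(perfect))
print(round(loss_floor, 4))  # about 0.5514
```

Even a perfect prediction is squashed to roughly (0.58, 0.21, 0.21) by the second softmax, so the loss cannot drop below about 0.55. Passing the raw output of the last dense layer to the loss, and applying softmax only when probabilities are needed, is the usual way to avoid this.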

Running the training

2017-07-04 14:19:57.084696: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-07-04 14:19:57.084722: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-07-04 14:19:57.084728: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2017-07-04 14:19:57.084733: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

Iteration: 0 Loss: 1.08315229416 Train Accuracy: 0.425999999046 Test Accuracy0.426455020905

Iteration: 100 Loss: 0.748883843422 Train Accuracy: 0.814249992371 Test Accuracy0.785185158253

Iteration: 200 Loss: 0.629662871361 Train Accuracy: 0.934000015259 Test Accuracy0.894179880619

Iteration: 300 Loss: 0.600810408592 Train Accuracy: 0.960250020027 Test Accuracy0.914285719395

Iteration: 400 Loss: 0.594145357609 Train Accuracy: 0.964749991894 Test Accuracy0.913227498531

Iteration: 500 Loss: 0.582351207733 Train Accuracy: 0.975499987602 Test Accuracy0.925396800041

Iteration: 600 Loss: 0.575868725777 Train Accuracy: 0.981500029564 Test Accuracy0.920634925365

Iteration: 700 Loss: 0.571496605873 Train Accuracy: 0.98425000906 Test Accuracy0.924867749214

Iteration: 800 Loss: 0.571447372437 Train Accuracy: 0.98474997282 Test Accuracy0.919576704502

Iteration: 900 Loss: 0.567611455917 Train Accuracy: 0.98575001955 Test Accuracy0.925396800041

Iteration: 1000 Loss: 0.567007541656 Train Accuracy: 0.98575001955 Test Accuracy0.925396800041

Iteration: 1100 Loss: 0.566512107849 Train Accuracy: 0.986000001431 Test Accuracy0.928571403027

Iteration: 1200 Loss: 0.566121637821 Train Accuracy: 0.986000001431 Test Accuracy0.925925910473

Iteration: 1300 Loss: 0.565603733063 Train Accuracy: 0.986500024796 Test Accuracy0.924338638783

Iteration: 1400 Loss: 0.56520396471 Train Accuracy: 0.986750006676 Test Accuracy0.925396800041

Iteration: 1500 Loss: 0.564830541611 Train Accuracy: 0.986999988556 Test Accuracy0.926455020905

Iteration: 1600 Loss: 0.564735352993 Train Accuracy: 0.986999988556 Test Accuracy0.926455020905

Iteration: 1700 Loss: 0.564707398415 Train Accuracy: 0.986999988556 Test Accuracy0.926984131336

Iteration: 1800 Loss: 0.56460750103 Train Accuracy: 0.986999988556 Test Accuracy0.9280423522

Iteration: 1900 Loss: 0.564545154572 Train Accuracy: 0.986999988556 Test Accuracy0.926455020905

Iteration: 2000 Loss: 0.564533174038 Train Accuracy: 0.986999988556 Test Accuracy0.928571403027

Iteration: 2100 Loss: 0.564481317997 Train Accuracy: 0.986999988556 Test Accuracy0.927513241768

Iteration: 2200 Loss: 0.564553022385 Train Accuracy: 0.986999988556 Test Accuracy0.92962962389

Iteration: 2300 Loss: 0.583365738392 Train Accuracy: 0.96850001812 Test Accuracy0.919576704502

Iteration: 2400 Loss: 0.566257119179 Train Accuracy: 0.986249983311 Test Accuracy0.926455020905

Iteration: 2500 Loss: 0.563695311546 Train Accuracy: 0.987999975681 Test Accuracy0.926455020905

Iteration: 2600 Loss: 0.563434004784 Train Accuracy: 0.987999975681 Test Accuracy0.92962962389

Iteration: 2700 Loss: 0.563206732273 Train Accuracy: 0.988250017166 Test Accuracy0.9280423522

Iteration: 2800 Loss: 0.563172519207 Train Accuracy: 0.988250017166 Test Accuracy0.931746006012

Iteration: 2900 Loss: 0.563154757023 Train Accuracy: 0.988250017166 Test Accuracy0.931746006012

Iteration: 3000 Loss: 0.563151359558 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 3100 Loss: 0.563149094582 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 3200 Loss: 0.563141226768 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 3300 Loss: 0.563139140606 Train Accuracy: 0.988250017166 Test Accuracy0.931216955185

Iteration: 3400 Loss: 0.563138246536 Train Accuracy: 0.988250017166 Test Accuracy0.931216955185

Iteration: 3500 Loss: 0.563154280186 Train Accuracy: 0.988250017166 Test Accuracy0.931746006012

Iteration: 3600 Loss: 0.563149809837 Train Accuracy: 0.988250017166 Test Accuracy0.931216955185

Iteration: 3700 Loss: 0.563176214695 Train Accuracy: 0.988250017166 Test Accuracy0.929100513458

Iteration: 3800 Loss: 0.563181519508 Train Accuracy: 0.988250017166 Test Accuracy0.931216955185

Iteration: 3900 Loss: 0.563153684139 Train Accuracy: 0.988250017166 Test Accuracy0.929100513458

Iteration: 4000 Loss: 0.563127815723 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 4100 Loss: 0.563163101673 Train Accuracy: 0.988250017166 Test Accuracy0.9280423522

Iteration: 4200 Loss: 0.563137412071 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 4300 Loss: 0.563160598278 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 4400 Loss: 0.563147187233 Train Accuracy: 0.988250017166 Test Accuracy0.926984131336

Iteration: 4500 Loss: 0.563141047955 Train Accuracy: 0.988250017166 Test Accuracy0.9280423522

Iteration: 4600 Loss: 0.563162863255 Train Accuracy: 0.988250017166 Test Accuracy0.930158734322

Iteration: 4700 Loss: 0.563196718693 Train Accuracy: 0.988250017166 Test Accuracy0.929100513458

Iteration: 4800 Loss: 0.563158690929 Train Accuracy: 0.988250017166 Test Accuracy0.92962962389

Iteration: 4900 Loss: 0.563124537468 Train Accuracy: 0.988250017166 Test Accuracy0.926984131336

Iteration: 5000 Loss: 0.563167691231 Train Accuracy: 0.988250017166 Test Accuracy0.930158734322

Iteration: 5100 Loss: 0.563187777996 Train Accuracy: 0.988250017166 Test Accuracy0.930687844753

Iteration: 5200 Loss: 0.56315112114 Train Accuracy: 0.988250017166 Test Accuracy0.9280423522

Iteration: 5300 Loss: 0.570619702339 Train Accuracy: 0.981000006199 Test Accuracy0.924867749214

Iteration: 5400 Loss: 0.576466858387 Train Accuracy: 0.976249992847 Test Accuracy0.927513241768

Iteration: 5500 Loss: 0.563514411449 Train Accuracy: 0.988250017166 Test Accuracy0.928571403027

Iteration: 5600 Loss: 0.562488973141 Train Accuracy: 0.989000022411 Test Accuracy0.93439155817

Iteration: 5700 Loss: 0.56244301796 Train Accuracy: 0.989000022411 Test Accuracy0.933333337307

Iteration: 5800 Loss: 0.562356352806 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 5900 Loss: 0.562411308289 Train Accuracy: 0.989000022411 Test Accuracy0.935978829861

Iteration: 6000 Loss: 0.562405765057 Train Accuracy: 0.989000022411 Test Accuracy0.933862447739

Iteration: 6100 Loss: 0.562349438667 Train Accuracy: 0.989000022411 Test Accuracy0.935449719429

Iteration: 6200 Loss: 0.562380075455 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 6300 Loss: 0.562388420105 Train Accuracy: 0.989000022411 Test Accuracy0.935449719429

Iteration: 6400 Loss: 0.562395453453 Train Accuracy: 0.989000022411 Test Accuracy0.935449719429

Iteration: 6500 Loss: 0.562419772148 Train Accuracy: 0.989000022411 Test Accuracy0.933862447739

Iteration: 6600 Loss: 0.562360167503 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 6700 Loss: 0.562407493591 Train Accuracy: 0.989000022411 Test Accuracy0.93439155817

Iteration: 6800 Loss: 0.562382221222 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 6900 Loss: 0.562420666218 Train Accuracy: 0.989000022411 Test Accuracy0.932804226875

Iteration: 7000 Loss: 0.562407851219 Train Accuracy: 0.989000022411 Test Accuracy0.933862447739

Iteration: 7100 Loss: 0.562392890453 Train Accuracy: 0.989000022411 Test Accuracy0.932804226875

Iteration: 7200 Loss: 0.562432050705 Train Accuracy: 0.989000022411 Test Accuracy0.932804226875

Iteration: 7300 Loss: 0.562389314175 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 7400 Loss: 0.562418997288 Train Accuracy: 0.989000022411 Test Accuracy0.933333337307

Iteration: 7500 Loss: 0.562441766262 Train Accuracy: 0.989000022411 Test Accuracy0.935449719429

Iteration: 7600 Loss: 0.562380254269 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 7700 Loss: 0.562415003777 Train Accuracy: 0.989000022411 Test Accuracy0.935449719429

Iteration: 7800 Loss: 0.562358081341 Train Accuracy: 0.989000022411 Test Accuracy0.93439155817

Iteration: 7900 Loss: 0.562432765961 Train Accuracy: 0.989000022411 Test Accuracy0.935978829861

Iteration: 8000 Loss: 0.562436521053 Train Accuracy: 0.989000022411 Test Accuracy0.936507940292

Iteration: 8100 Loss: 0.562419176102 Train Accuracy: 0.989000022411 Test Accuracy0.93439155817

Iteration: 8200 Loss: 0.562465846539 Train Accuracy: 0.989000022411 Test Accuracy0.931746006012

Iteration: 8300 Loss: 0.562432646751 Train Accuracy: 0.989000022411 Test Accuracy0.934920608997

Iteration: 8400 Loss: 0.562426924706 Train Accuracy: 0.989000022411 Test Accuracy0.933333337307

Iteration: 8500 Loss: 0.562418758869 Train Accuracy: 0.989000022411 Test Accuracy0.933862447739

Iteration: 8600 Loss: 0.562417984009 Train Accuracy: 0.989000022411 Test Accuracy0.935978829861

Iteration: 8700 Loss: 0.562437176704 Train Accuracy: 0.989000022411 Test Accuracy0.935978829861

Iteration: 8800 Loss: 0.578755617142 Train Accuracy: 0.972500026226 Test Accuracy0.927513241768

Iteration: 8900 Loss: 0.565938591957 Train Accuracy: 0.986000001431 Test Accuracy0.935449719429

Iteration: 9000 Loss: 0.562196016312 Train Accuracy: 0.989250004292 Test Accuracy0.935978829861

Iteration: 9100 Loss: 0.561437726021 Train Accuracy: 0.990000009537 Test Accuracy0.938624322414

Iteration: 9200 Loss: 0.561364352703 Train Accuracy: 0.990000009537 Test Accuracy0.938624322414

Iteration: 9300 Loss: 0.561371803284 Train Accuracy: 0.990000009537 Test Accuracy0.938624322414

Iteration: 9400 Loss: 0.561358273029 Train Accuracy: 0.990000009537 Test Accuracy0.939682543278

Iteration: 9500 Loss: 0.561344504356 Train Accuracy: 0.990000009537 Test Accuracy0.939153432846

Iteration: 9600 Loss: 0.561368823051 Train Accuracy: 0.990000009537 Test Accuracy0.939682543278

Iteration: 9700 Loss: 0.56139343977 Train Accuracy: 0.990000009537 Test Accuracy0.937566161156

Iteration: 9800 Loss: 0.561371207237 Train Accuracy: 0.990000009537 Test Accuracy0.939682543278

Iteration: 9900 Loss: 0.561351060867 Train Accuracy: 0.990000009537 Test Accuracy0.939682543278
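To compare training and test accuracy in the log above without reading it by eye, the lines can be parsed with a regular expression. This is a rough sketch using only three lines copied from the log; `parse_line` is a hypothetical helper, and the pattern tolerates the missing separator after "Test Accuracy".

```python
import re

# Three lines copied from the log above
log_lines = [
    "Iteration: 0 Loss: 1.08315229416 Train Accuracy: 0.425999999046 Test Accuracy0.426455020905",
    "Iteration: 5000 Loss: 0.563167691231 Train Accuracy: 0.988250017166 Test Accuracy0.930158734322",
    "Iteration: 9900 Loss: 0.561351060867 Train Accuracy: 0.990000009537 Test Accuracy0.939682543278",
]

# "Test Accuracy" is followed directly by the number in the log,
# so the colon and the space are optional in the pattern.
pattern = re.compile(
    r"Iteration: (\d+) Loss: ([\d.]+) Train Accuracy: ([\d.]+) Test Accuracy:?\s*([\d.]+)"
)


def parse_line(line):
    # Return (step, loss, train_accuracy, test_accuracy), or None if the line does not match
    m = pattern.match(line)
    if m is None:
        return None
    return int(m.group(1)), float(m.group(2)), float(m.group(3)), float(m.group(4))


records = [r for r in (parse_line(line) for line in log_lines) if r is not None]
for step, loss_value, train_acc, test_acc in records:
    # Gap between training and test accuracy at this step
    print(step, round(train_acc - test_acc, 4))
```

A gap of about five points that persists while training accuracy sits near 99% is one common sign of overfitting, although this log alone is not enough to say for sure.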

About the generated files

Training results

Trained model

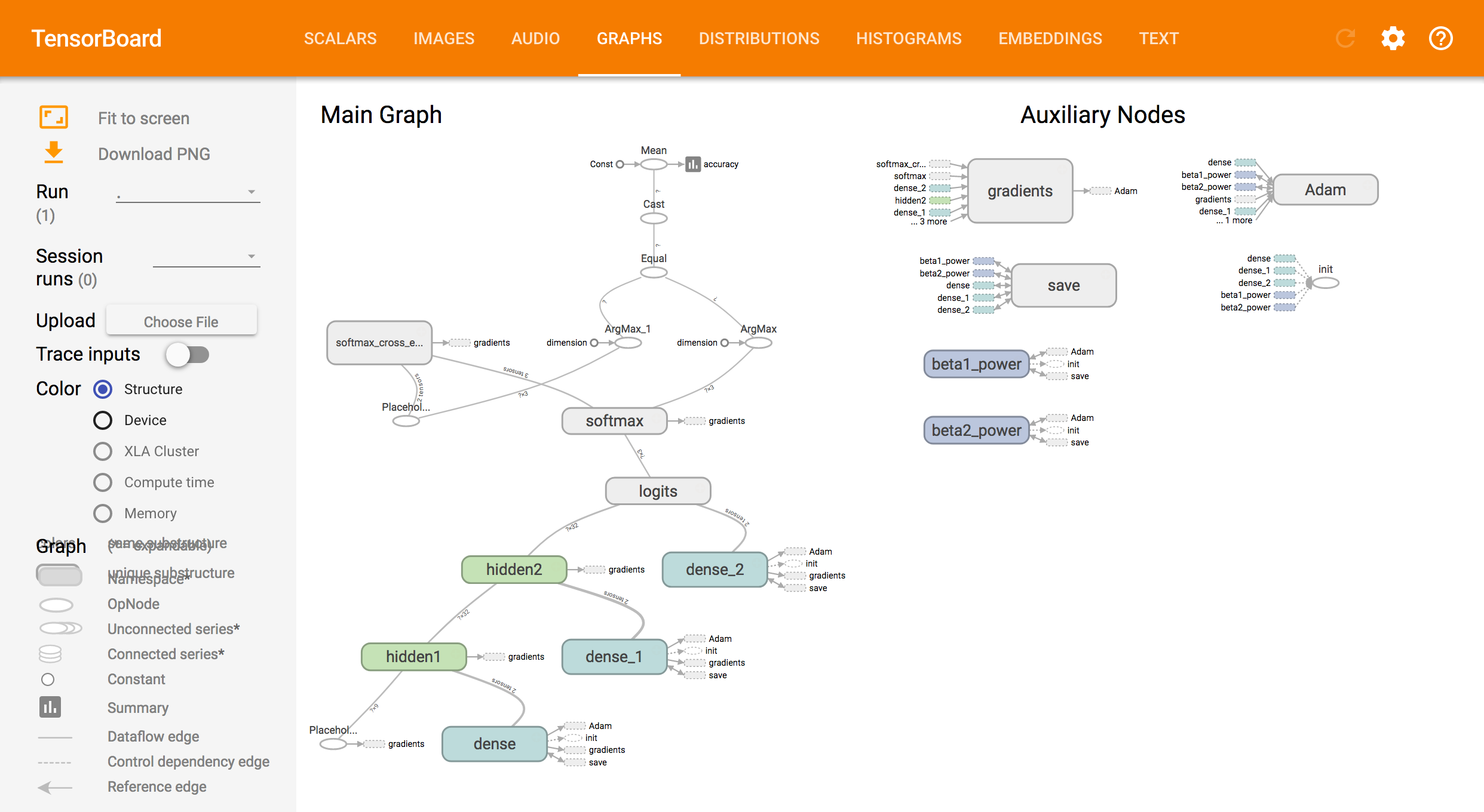

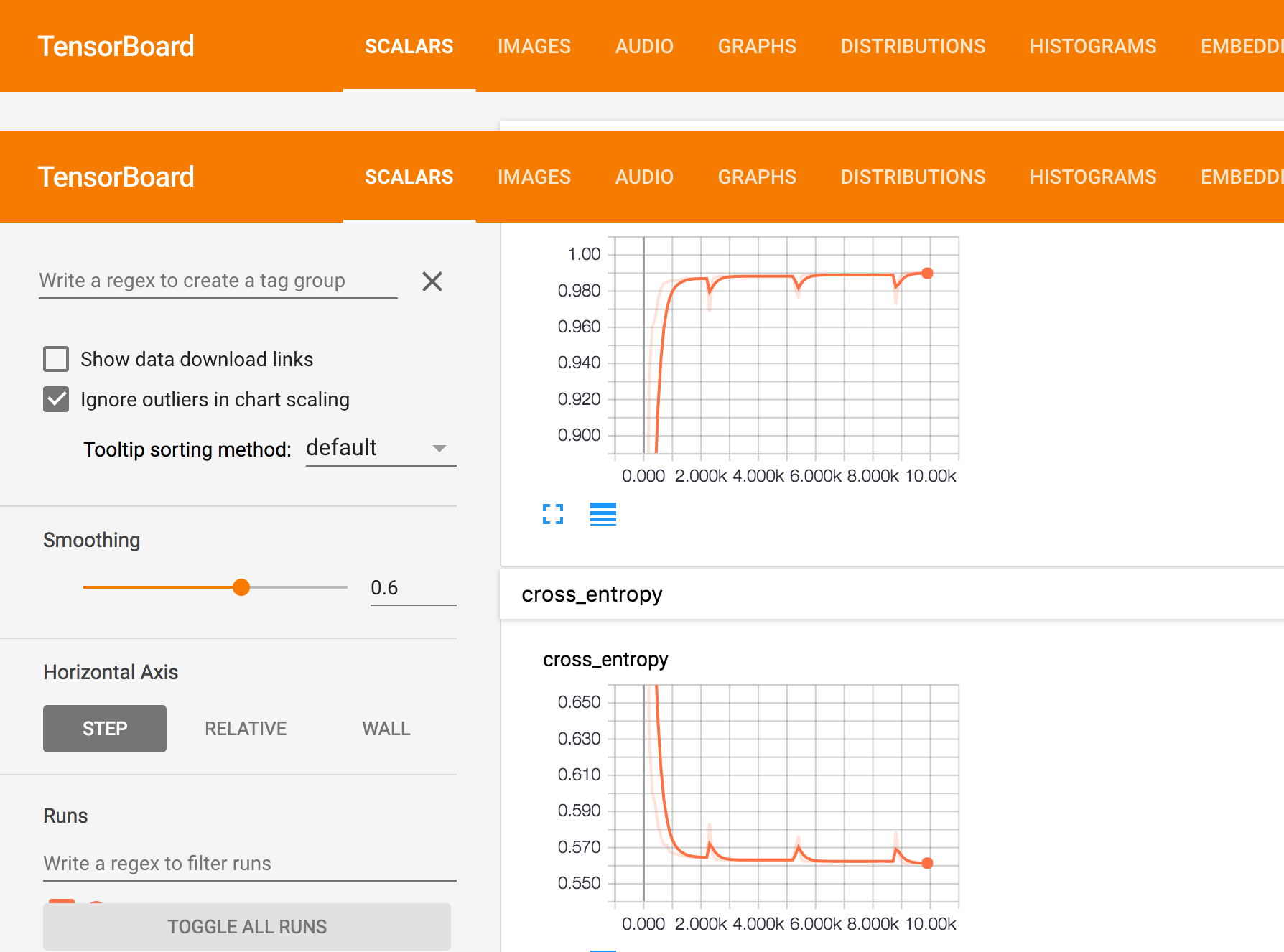

About TensorBoard

Training graph

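Summary

- This may be the easiest model structure to understand so far.

- The curves wobble up and down slightly. Could that be caused by overfitting?

- Creating the training data looks like hard work.

- Next time I would like to actually play against it.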