GeForce GTX 1070 (8GB)

ASRock Z170M Pro4S [Intel Z170 chipset]

Ubuntu 14.04 LTS desktop amd64

TensorFlow v0.11

cuDNN v5.1 for Linux

CUDA v8.0

Python 2.7.6

IPython 5.1.0 -- An enhanced Interactive Python.

Related: http://qiita.com/7of9/items/b364d897b95476a30754

I had TensorFlow learn a sine curve and got the loss down to about 0.2.

http://qiita.com/7of9/items/8cb8db458d78d313c6cf

Now I'm trying to plot the learned result.

I decided to print the prediction.

Generating input.csv
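This post does not show how input.csv was made, so here is a hypothetical stand-in (not the original data). It writes rows in the two-column `x,y` format with no header, which is what both `tf.decode_csv` and `np.loadtxt(..., delimiter=',')` expect. The target y = sin(2πx), the range [0, 1), the 100 rows, and the names `make_rows` / `write_input_csv` are all assumptions for illustration.

```python
import math


def make_rows(n=100):
    """Hypothetical data: n "x,y" rows, x evenly spaced in [0, 1), y = sin(2*pi*x).

    The target function and row count are assumptions, not the original input.csv.
    """
    rows = []
    for i in range(n):
        x = float(i) / n
        y = math.sin(2.0 * math.pi * x)
        rows.append("%f,%f" % (x, y))
    return rows


def write_input_csv(path="input.csv", n=100):
    """Write the rows without a header, one "x,y" pair per line."""
    with open(path, "w") as f:
        f.write("\n".join(make_rows(n)) + "\n")
```

Calling `write_input_csv()` produces a file the training scripts can read as-is.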

code v0.1 (hidden: sigmoid, output: sigmoid)

I added the part from `# output trained curve` onward.

I figured printing `prediction` would be enough.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

import sys

import tensorflow as tf
import tensorflow.contrib.slim as slim
import numpy as np

filename_queue = tf.train.string_input_producer(["input.csv"])

# parse CSV
reader = tf.TextLineReader()
key, value = reader.read(filename_queue)
input1, output = tf.decode_csv(value, record_defaults=[[0.], [0.]])
inputs = tf.pack([input1])
output = tf.pack([output])

batch_size = 4  # [4]
inputs_batch, output_batch = tf.train.shuffle_batch(
    [inputs, output], batch_size, capacity=40, min_after_dequeue=batch_size)

input_ph = tf.placeholder("float", [None, 1])
output_ph = tf.placeholder("float", [None, 1])

## network
hiddens = slim.stack(input_ph, slim.fully_connected, [7, 7, 7],
                     activation_fn=tf.nn.sigmoid, scope="hidden")
prediction = slim.fully_connected(hiddens, 1, activation_fn=tf.nn.sigmoid, scope="output")
loss = tf.contrib.losses.mean_squared_error(prediction, output_ph)
train_op = slim.learning.create_train_op(loss, tf.train.AdamOptimizer(0.001))

init_op = tf.initialize_all_variables()

with tf.Session() as sess:
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    try:
        sess.run(init_op)
        for i in range(30000):  # [10000]
            inpbt, outbt = sess.run([inputs_batch, output_batch])
            _, t_loss = sess.run([train_op, loss], feed_dict={input_ph: inpbt, output_ph: outbt})
            if (i+1) % 100 == 0:
                print("%d,%f" % (i+1, t_loss))

        # output to npy
        model_variables = slim.get_model_variables()
        res = sess.run(model_variables)
        np.save('model_variables.npy', res)

        # output trained curve
        # Kept inside try: after coord.request_stop() the queue runners stop
        # refilling the batch queue, so later dequeues can run out of data.
        print('output')  # used to separate from above lines (grep -A 200 output [outfile])
        for loop in range(10):
            inpbt, outbt = sess.run([inputs_batch, output_batch])
            pred = sess.run([prediction], feed_dict={input_ph: inpbt, output_ph: outbt})
            for din, dout in zip(inpbt, pred[0]):
                # din and dout are 1-element arrays; [0] takes the float value
                print('%.5f,%.5f' % (din[0], dout[0]))
    finally:
        coord.request_stop()
    coord.join(threads)
```
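The `for loop in range(10)` section prints 40 points drawn from the shuffled input queue, so they come in random order and are only a sample of the data. One alternative (a suggestion, not what this post does) is to feed an evenly spaced grid of x values straight into `input_ph`, since `prediction` only depends on that placeholder. The helper `dump_curve` and its `predict` argument are hypothetical names.

```python
import numpy as np


def dump_curve(predict, n=50, lo=0.0, hi=1.0):
    """Evaluate `predict` on n evenly spaced x values in [lo, hi].

    `predict` is a hypothetical callable mapping an [n, 1] float array to
    [n, 1] predictions. Returns "x,y" lines in the format the script prints.
    """
    xs = np.linspace(lo, hi, n).reshape(-1, 1)  # shape [n, 1], like input_ph
    ys = np.asarray(predict(xs)).reshape(-1, 1)
    return ["%.5f,%.5f" % (x, y) for x, y in zip(xs[:, 0], ys[:, 0])]
```

Inside the `with tf.Session() as sess:` block this could be used as `dump_curve(lambda xs: sess.run(prediction, feed_dict={input_ph: xs}))`. Because the x values come out sorted, the result can also be drawn with `plot` as a line instead of `scatter`.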

```bash
$ python output_learnedSine.py > res.161210_1930.org
$ grep -A 200 output res.161210_1930.org > res.161210_1930.cut
```

I deleted the first line (`output`) of res.161210_1930.cut in vi.
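The grep plus manual vi step can also be done in Python. This is a minimal sketch assuming the log layout the script produces (training lines `step,loss`, then one line that is exactly `output`, then `x,y` rows); `split_after_marker` is a hypothetical helper. Unlike `grep -A 200`, it keeps every line after the marker and already drops the `output` line itself.

```python
def split_after_marker(lines, marker="output"):
    """Return the non-empty lines that follow the first line equal to `marker`.

    Assumes the marker is printed on its own line, as print('output') does.
    Raises ValueError if the marker is missing.
    """
    stripped = [line.rstrip("\n") for line in lines]
    idx = stripped.index(marker)
    return [line for line in stripped[idx + 1:] if line]


# Usage with the file names from the run above:
# with open("res.161210_1930.org") as src:
#     rows = split_after_marker(src)
# with open("res.161210_1930.cut", "w") as dst:
#     dst.write("\n".join(rows) + "\n")
```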

Graph

I used Jupyter.

```python
%matplotlib inline
# sine curve learning
# Dec. 10, 2016

import numpy as np
import matplotlib.pyplot as plt

data1 = np.loadtxt('input.csv', delimiter=',')
data2 = np.loadtxt('res.161210_1930.cut', delimiter=',')

input1 = data1[:, 0]
output1 = data1[:, 1]
input2 = data2[:, 0]
output2 = data2[:, 1]

fig = plt.figure()
ax1 = fig.add_subplot(2, 1, 1)
ax2 = fig.add_subplot(2, 1, 2)

ax1.scatter(input1, output1)
ax2.scatter(input2, output2)

ax1.set_xlabel('x')
ax1.set_ylabel('sin(x)')
ax1.grid(True)

ax2.set_xlabel('x')
ax2.set_ylabel('sin(x)')
ax2.grid(True)

fig.show()
```

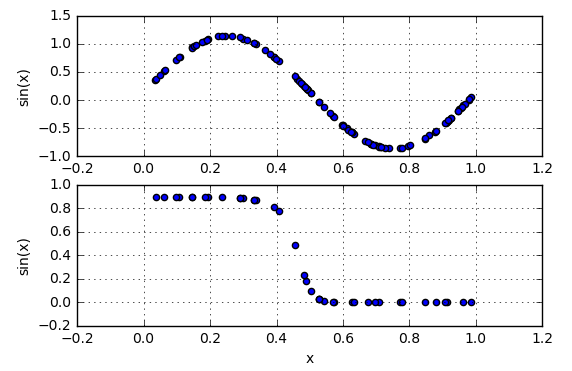

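The upper plot is input.csv (the training data).

The lower plot is the learned result.

Even though the loss is about 0.2, the result does not look like a sine curve.

It looks more like a sigmoid function flipped left to right.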
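One possible explanation (an interpretation, not something measured in this post): a sigmoid output unit can only produce values in (0, 1). If input.csv covers a full sine period with negative targets, the closest the network can get on the negative half is an output near 0, which puts a floor under the MSE however long it trains. The sketch assumes, purely for illustration, that the target is y = sin(2πx) on [0, 1); under that assumption the floor is 0.25, because the mean of sin² over a period is 1/2 and the negative half contributes half of it. Whether this applies depends on what input.csv actually contains.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Assumed target: one full period of sin(2*pi*x) on [0, 1).
x = np.linspace(0.0, 1.0, 1000, endpoint=False)
target = np.sin(2.0 * np.pi * x)

# Best case for an output restricted to (0, 1): match the positive half
# exactly, and output ~0 where the target is negative.
best_sigmoid_output = np.clip(target, 0.0, 1.0)

floor_mse = np.mean((best_sigmoid_output - target) ** 2)
print("sigmoid(-10), sigmoid(10): %.5f, %.5f" % (sigmoid(-10.0), sigmoid(10.0)))
print("MSE floor with a sigmoid output layer: %.3f" % floor_mse)
```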

code v0.2 (hidden: sigmoid, output: linear)

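@ 「深層学習」 (Deep Learning) by Takayuki Okatani (岡谷貴之)

p11. In neural networks it is essential that each unit's activation function is nonlinear, but linear mappings are sometimes used for parts of the network.

For now, I dropped the sigmoid on the output layer and made it linear.

The hidden layers stay sigmoid.

(When I made the hidden layers linear as well, the result was "just a straight line", so that is no good.)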
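The straight line is expected: without a nonlinearity, a stack of fully connected layers is a composition of affine maps, which is itself a single affine map y = xW + b, so with a 1-D input the network can only draw a line. This NumPy sketch uses random weights (not trained ones) with the same [7, 7, 7] hidden sizes as the script, and checks that the stacked linear layers match one collapsed layer and that the output has zero curvature in x.

```python
import numpy as np

rng = np.random.RandomState(0)
sizes = [1, 7, 7, 7, 1]  # input, three hidden layers of 7 (as in the script), output

# Random weight matrix and bias for each fully connected layer, no activation.
layers = [(rng.randn(m, n), rng.randn(n)) for m, n in zip(sizes[:-1], sizes[1:])]


def forward_linear(x):
    """Apply every layer as h = h W + b with no activation function."""
    h = x
    for W, b in layers:
        h = h.dot(W) + b
    return h


# Collapse all layers into one affine map: y = x W_total + b_total.
W_total = np.eye(1)
b_total = np.zeros(1)
for W, b in layers:
    W_total = W_total.dot(W)
    b_total = b_total.dot(W) + b

x = np.linspace(0.0, 1.0, 5).reshape(-1, 1)
print(np.allclose(forward_linear(x), x.dot(W_total) + b_total))
```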

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

import sys

import tensorflow as tf
import tensorflow.contrib.slim as slim
import numpy as np

filename_queue = tf.train.string_input_producer(["input.csv"])

# parse CSV
reader = tf.TextLineReader()
key, value = reader.read(filename_queue)
input1, output = tf.decode_csv(value, record_defaults=[[0.], [0.]])
inputs = tf.pack([input1])
output = tf.pack([output])

batch_size = 4  # [4]
inputs_batch, output_batch = tf.train.shuffle_batch(
    [inputs, output], batch_size, capacity=40, min_after_dequeue=batch_size)

input_ph = tf.placeholder("float", [None, 1])
output_ph = tf.placeholder("float", [None, 1])

## network
hiddens = slim.stack(input_ph, slim.fully_connected, [7, 7, 7],
                     activation_fn=tf.nn.sigmoid, scope="hidden")
# prediction = slim.fully_connected(hiddens, 1, activation_fn=tf.nn.sigmoid, scope="output")
# linear output layer: activation_fn=None applies no activation function
prediction = slim.fully_connected(hiddens, 1, activation_fn=None, scope="output")
loss = tf.contrib.losses.mean_squared_error(prediction, output_ph)
train_op = slim.learning.create_train_op(loss, tf.train.AdamOptimizer(0.001))

init_op = tf.initialize_all_variables()

with tf.Session() as sess:
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    try:
        sess.run(init_op)
        for i in range(30000):  # [10000]
            inpbt, outbt = sess.run([inputs_batch, output_batch])
            _, t_loss = sess.run([train_op, loss], feed_dict={input_ph: inpbt, output_ph: outbt})
            if (i+1) % 100 == 0:
                print("%d,%f" % (i+1, t_loss))

        # output to npy
        model_variables = slim.get_model_variables()
        res = sess.run(model_variables)
        np.save('model_variables.npy', res)

        # output trained curve
        # Kept inside try: after coord.request_stop() the queue runners stop
        # refilling the batch queue, so later dequeues can run out of data.
        print('output')  # used to separate from above lines (grep -A 200 output [outfile])
        for loop in range(10):
            inpbt, outbt = sess.run([inputs_batch, output_batch])
            pred = sess.run([prediction], feed_dict={input_ph: inpbt, output_ph: outbt})
            for din, dout in zip(inpbt, pred[0]):
                # din and dout are 1-element arrays; [0] takes the float value
                print('%.5f,%.5f' % (din[0], dout[0]))
    finally:
        coord.request_stop()
    coord.join(threads)
```

```bash
$ python sigmoid_onlyHidden.py > res.161210_1958.org
$ grep -A 200 output res.161210_1958.org > res.161210_1958.cut
$ vi res.161210_1958.cut  # (delete the first line)
```

Graph of the learned result

```python
%matplotlib inline
# sine curve learning
# Dec. 10, 2016

import numpy as np
import matplotlib.pyplot as plt

data1 = np.loadtxt('input.csv', delimiter=',')
data2 = np.loadtxt('res.161210_1958.cut', delimiter=',')

input1 = data1[:, 0]
output1 = data1[:, 1]
input2 = data2[:, 0]
output2 = data2[:, 1]

fig = plt.figure()
ax1 = fig.add_subplot(2, 1, 1)
ax2 = fig.add_subplot(2, 1, 2)

ax1.scatter(input1, output1)
ax2.scatter(input2, output2)

ax1.set_xlabel('x')
ax1.set_ylabel('sin(x)')
ax1.set_xlim([0, 1.0])
ax1.grid(True)

ax2.set_xlabel('x')
ax2.set_ylabel('sin(x)')
ax2.set_xlim([0, 1.0])
ax2.grid(True)

fig.show()
```

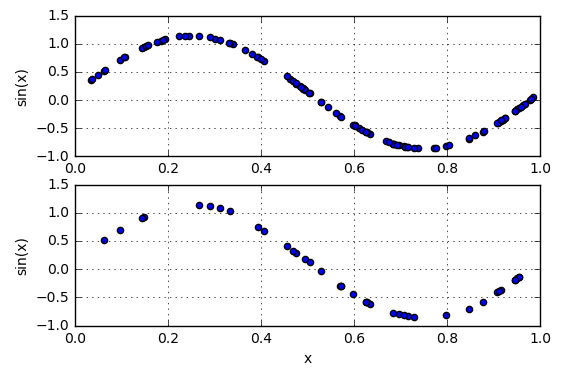

The upper plot is input.csv (the training data).

The lower plot is the learned result. This time it is close to the training data.

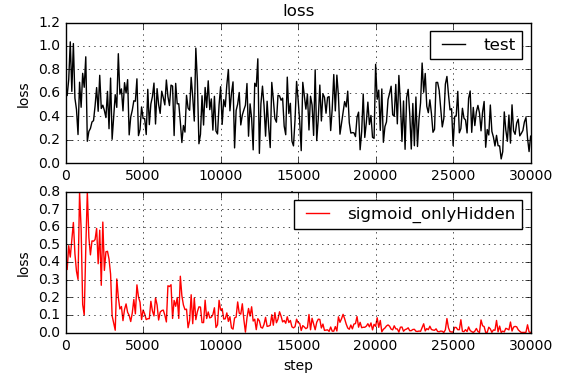

Loss

With hidden: sigmoid and output: linear, I measured how the loss changes during training again.

```
...
29100,0.032129
29200,0.015477
29300,0.006879
29400,0.000079
29500,0.000537
29600,0.000318
29700,0.001543
29800,0.043803
```

The loss dropped far below 0.2. Lucky.
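Each logged value is the MSE of a single batch of 4 samples, so it swings a lot between log points (0.000079 at step 29400, 0.043803 at step 29800). Averaging over several log points gives a steadier figure. This is a small sketch that parses the `step,loss` lines the script prints and averages the last k of them; `mean_recent_loss` and the window k=8 are illustrative choices, and the sample input is the excerpt shown above.

```python
def mean_recent_loss(lines, k=8):
    """Average the loss over the last k "step,loss" lines of a training log.

    Lines that do not parse as "int,float" (such as "..." or the "x,y"
    prediction rows) are skipped.
    """
    losses = []
    for line in lines:
        parts = line.strip().split(",")
        if len(parts) != 2:
            continue
        try:
            int(parts[0])  # training lines start with an integer step
            losses.append(float(parts[1]))
        except ValueError:
            continue
    recent = losses[-k:]
    return sum(recent) / len(recent) if recent else float("nan")


log = """...
29100,0.032129
29200,0.015477
29300,0.006879
29400,0.000079
29500,0.000537
29600,0.000318
29700,0.001543
29800,0.043803""".splitlines()
print("mean of last 8 logged losses: %.6f" % mean_recent_loss(log, k=8))
```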