Key points

- Implemented gradient clipping (by norm) on an LSTM-based model and evaluated its effect.

- No clear effect was observed; further verification is planned.
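For reference, clipping "by norm" with threshold c (`clip_norm` in the sample code) rescales a gradient tensor g only when its L2 norm exceeds c. This is the behavior documented for TensorFlow's `tf.clip_by_norm`. The direction of g is unchanged, and only its length is capped:

```latex
g \leftarrow g \cdot \frac{c}{\max\left(\lVert g \rVert_2,\ c\right)}
```

When the norm of g is at most c, the factor is 1 and g passes through unchanged.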

References

1. Recurrent Batch Normalization

2. Zoneout: Regularizing RNNs by Randomly Preserving Hidden Activations

3. Notes on Zoneout

検証方法

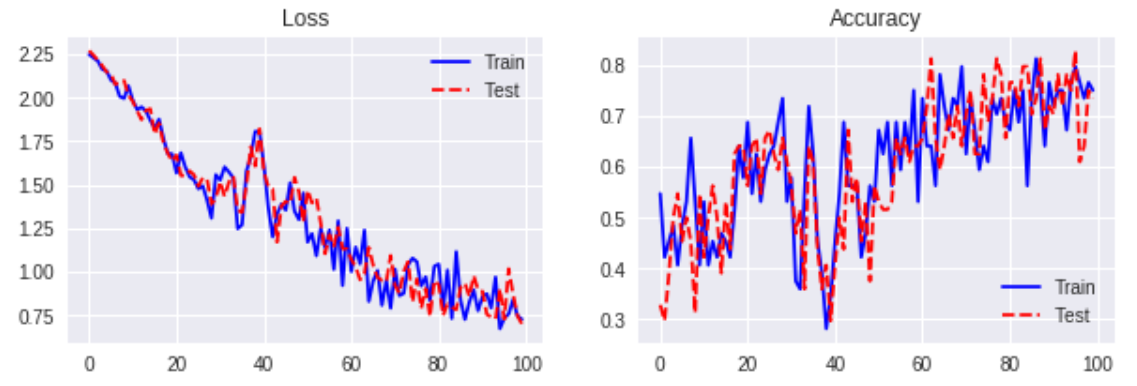

- Base (no regularization)、Base + Recurrent Batch Normalization、Base + Zoneout に適用し、効果を比較。

Data

MNIST handwritten digits

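from tensorflow.examples.tutorials.mnist import input_data  # TensorFlow 1.x only

# Download (if needed) and load MNIST; one_hot=True encodes each label as a length-10 vector.
mnist = input_data.read_data_sets('***/mnist', one_hot=True)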
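With `one_hot = True`, each label becomes a length-10 vector with a single 1. The post does not show how the flat 784-pixel images are fed to the LSTM. One common choice, assumed here and not confirmed by the post, is to read each 28×28 image row by row as 28 time steps of 28 features. The pure-Python sketch below illustrates both conversions; the helper names `to_one_hot` and `to_row_sequence` are hypothetical:

```python
def to_one_hot(label, num_classes=10):
    """Return a one-hot list for an integer class label."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def to_row_sequence(flat_pixels, height=28, width=28):
    """Split a flat image into `height` time steps of `width` features each.

    Assumption: row-by-row sequencing; the original post does not state its input layout.
    """
    if len(flat_pixels) != height * width:
        raise ValueError("expected %d pixels, got %d" % (height * width, len(flat_pixels)))
    return [flat_pixels[r * width:(r + 1) * width] for r in range(height)]


if __name__ == "__main__":
    image = [i / 783.0 for i in range(784)]  # dummy stand-in for one MNIST image
    seq = to_row_sequence(image)
    print(len(seq), len(seq[0]))  # 28 28
    print(to_one_hot(3))
```

A pixel-by-pixel layout (784 steps of 1 feature) is another common option for sequential MNIST. It makes the sequences much longer and would likely change how much gradient clipping matters.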

Results

Example settings:

- n_units = 100

- learning_rate = 0.01

- batch_size = 64

- zoneout_prob = 0.2
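`zoneout_prob` is the probability that a hidden unit keeps its previous value instead of taking the newly computed one. The sketch below follows the update rule described in the Zoneout paper (reference 2). During training, each unit independently keeps the previous state with probability `zoneout_prob`; at inference, the expected value is used. For an LSTM, the paper applies this to both the cell and hidden states. The post's actual implementation is not shown, so the function `zoneout` and its arguments are hypothetical:

```python
import random


def zoneout(h_prev, h_new, zoneout_prob, training, rng=random):
    """Mix previous and candidate states unit by unit (hypothetical helper).

    Training: each unit keeps h_prev with probability zoneout_prob, else takes h_new.
    Inference: expected value zoneout_prob * h_prev + (1 - zoneout_prob) * h_new.
    """
    if len(h_prev) != len(h_new):
        raise ValueError("state vectors must have the same length")
    if training:
        return [p if rng.random() < zoneout_prob else n for p, n in zip(h_prev, h_new)]
    return [zoneout_prob * p + (1.0 - zoneout_prob) * n for p, n in zip(h_prev, h_new)]


if __name__ == "__main__":
    rng = random.Random(0)
    h_prev = [1.0, 1.0, 1.0, 1.0]
    h_new = [0.0, 0.0, 0.0, 0.0]
    print(zoneout(h_prev, h_new, 0.2, training=True, rng=rng))  # random mix of 1.0 and 0.0
    print(zoneout(h_prev, h_new, 0.2, training=False))  # [0.2, 0.2, 0.2, 0.2]
```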

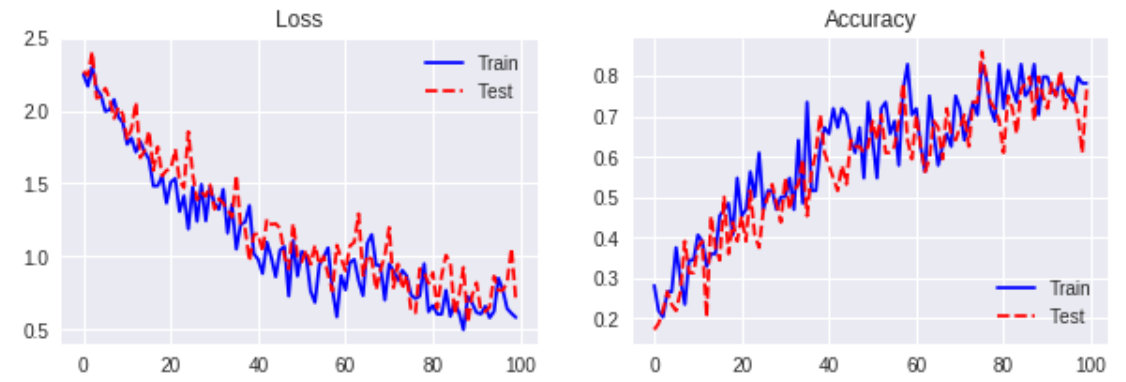

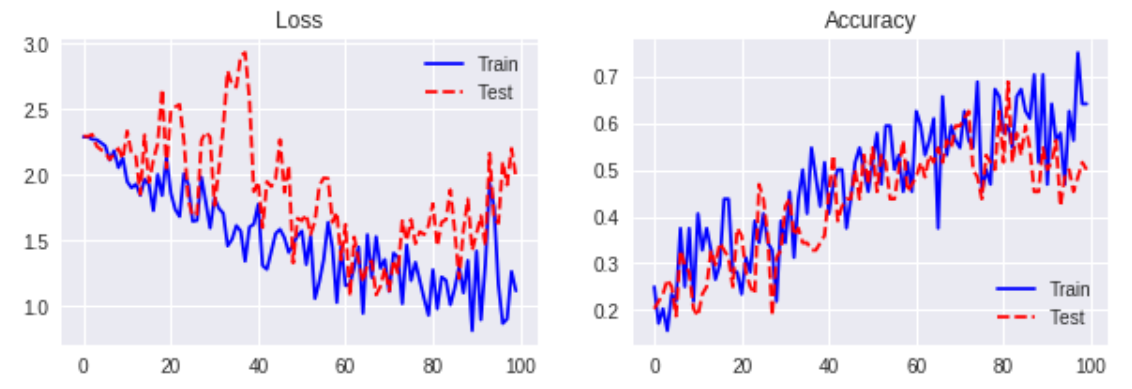

Batch Normalization (no clipping)

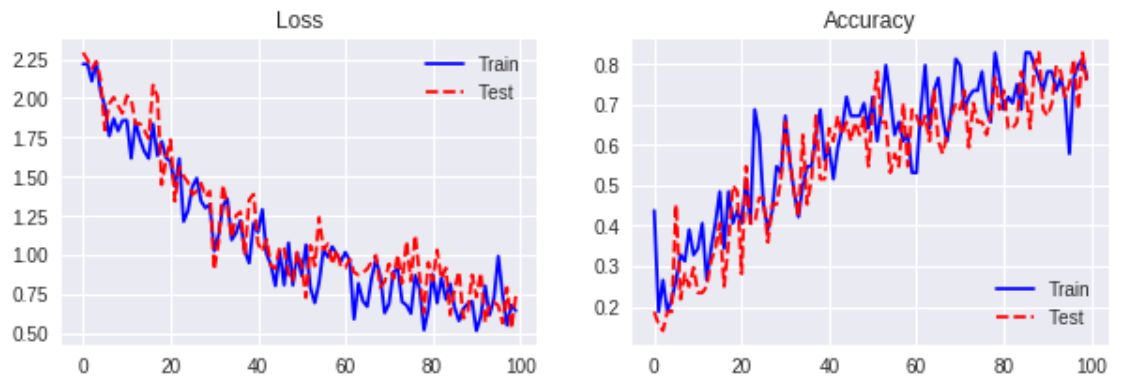

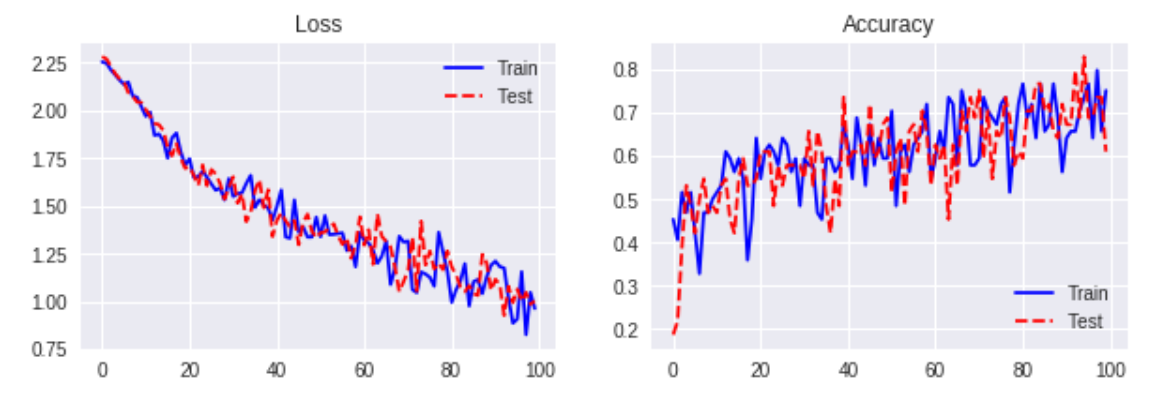

Batch Normalization (clip_norm = 0.5)

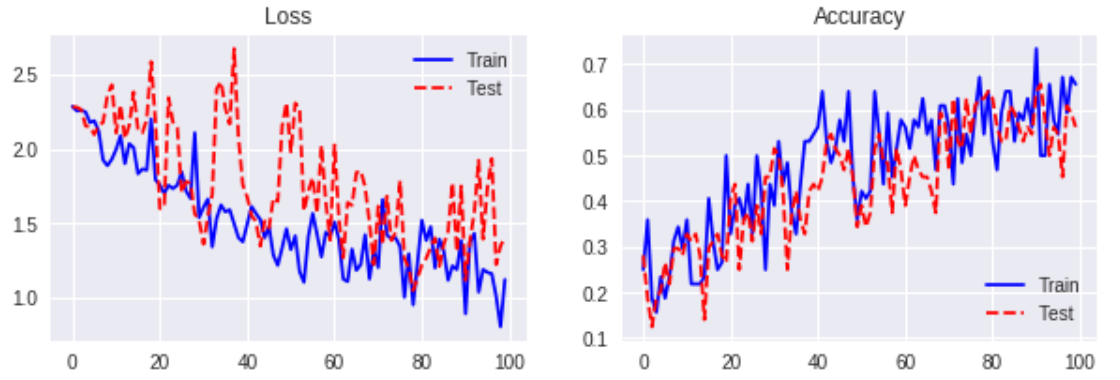

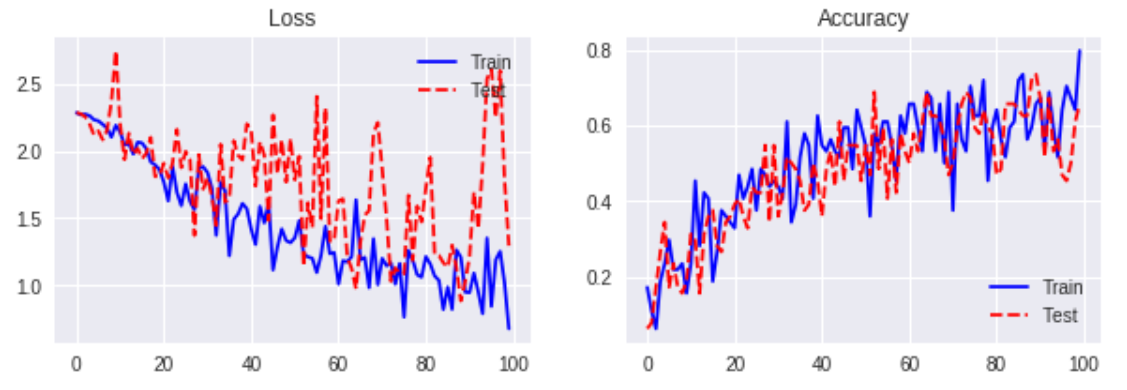

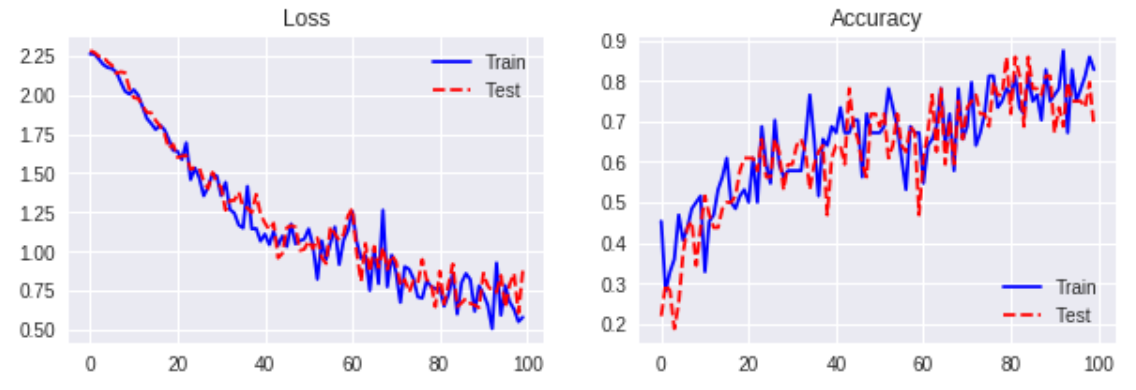

Batch Normalization (clip_norm = 1.0)

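Sample code

import tensorflow as tf  # TensorFlow 1.x API (tf.train.AdamOptimizer)


def training(self, loss, learning_rate, clip_norm):
    """Build an Adam training op that clips each gradient by its own L2 norm."""
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
    grads_and_vars = optimizer.compute_gradients(loss)
    # Rescale each gradient tensor independently so its L2 norm is at most clip_norm.
    # Variables that do not affect the loss have grad None; skip them, since
    # tf.clip_by_norm cannot take None.
    clipped_grads_and_vars = [
        (tf.clip_by_norm(grad, clip_norm=clip_norm), var)
        for grad, var in grads_and_vars
        if grad is not None
    ]
    train_step = optimizer.apply_gradients(clipped_grads_and_vars)
    return train_step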
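The list comprehension in `training` clips each gradient tensor against its own norm. When only some tensors are rescaled, the overall update direction can change. TensorFlow also provides `tf.clip_by_global_norm`, which scales all gradients by one common factor and returns the clipped list together with the global norm. The pure-Python sketch below contrasts the two on plain lists standing in for gradient tensors. It only illustrates the arithmetic and is not TensorFlow code; the helper names are hypothetical:

```python
import math


def l2_norm(values):
    """L2 norm of a flat list of numbers."""
    return math.sqrt(sum(v * v for v in values))


def clip_by_norm(grad, clip_norm):
    """Per-tensor clipping: g * clip_norm / max(||g||, clip_norm)."""
    scale = clip_norm / max(l2_norm(grad), clip_norm)
    return [v * scale for v in grad]


def clip_by_global_norm(grads, clip_norm):
    """Global clipping: all tensors share the factor clip_norm / max(global_norm, clip_norm)."""
    global_norm = math.sqrt(sum(l2_norm(g) ** 2 for g in grads))
    scale = clip_norm / max(global_norm, clip_norm)
    return [[v * scale for v in g] for g in grads], global_norm


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4]]  # norms 5.0 and 0.5
    per_tensor = [clip_by_norm(g, 1.0) for g in grads]
    clipped, global_norm = clip_by_global_norm(grads, 1.0)
    print(per_tensor)  # first tensor shrunk to norm 1, second untouched
    print(clipped, global_norm)  # both tensors shrunk by the same factor
```

With per-tensor clipping, the small gradient keeps its size while the large one is cut by a factor of 5. Global clipping preserves their ratio. Which variant suits this LSTM setup was not tested in the post.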