Purpose

Verify that Hive on Tez works with the latest releases at the time of writing (Hive 0.14 + Tez 0.5.2 + Hadoop 2.6.0).

Prerequisites

Hadoop 2.6.0 and Tez 0.5.2 are already installed.

Installing Hive

Download the source tarball of the latest Hive release

$ wget http://www.us.apache.org/dist/hive/hive-0.14.0/apache-hive-0.14.0-src.tar.gz
$ tar xf apache-hive-0.14.0-src.tar.gz
$ cd apache-hive-0.14.0-src

Build

Since we are building from source anyway, configure the build to use Hadoop 2.6.0 and Tez 0.5.2.

pom.xml

diff --git a/apache-hive-0.14.0-src.org/pom.xml b/apache-hive-0.14.0-src/pom.xml
index 501d547..d9a9fd1 100644
--- a/apache-hive-0.14.0-src.org/pom.xml
+++ b/apache-hive-0.14.0-src/pom.xml
@@ -115,7 +115,7 @@
     <groovy.version>2.1.6</groovy.version>
     <hadoop-20.version>0.20.2</hadoop-20.version>
     <hadoop-20S.version>1.2.1</hadoop-20S.version>
-    <hadoop-23.version>2.5.0</hadoop-23.version>
+    <hadoop-23.version>2.6.0</hadoop-23.version>
     <hadoop.bin.path>${basedir}/${hive.path.to.root}/testutils/hadoop</hadoop.bin.path>
     <hbase.hadoop1.version>0.98.3-hadoop1</hbase.hadoop1.version>
     <hbase.hadoop2.version>0.98.3-hadoop2</hbase.hadoop2.version>
@@ -152,7 +152,7 @@
     <stax.version>1.0.1</stax.version>
     <slf4j.version>1.7.5</slf4j.version>
     <ST4.version>4.0.4</ST4.version>
-    <tez.version>0.5.2-SNAPSHOT</tez.version>
+    <tez.version>0.5.2</tez.version>
     <super-csv.version>2.2.0</super-csv.version>
     <tempus-fugit.version>1.1</tempus-fugit.version>
     <snappy.version>0.2</snappy.version>
@@ -209,8 +209,19 @@
         <enabled>false</enabled>
       </snapshots>
     </repository>
+    <repository>
+      <id>org.apache.hadoop</id>
+      <url>https://repository.apache.org/content/repositories/orgapachehadoop-1012</url>
+    </repository>
   </repositories>
+  <pluginRepositories>
+    <pluginRepository>
+      <id>org.apache.hadoop</id>
+      <url>https://repository.apache.org/content/repositories/orgapachehadoop-1012</url>
+    </pluginRepository>
+  </pluginRepositories>
+
   <!-- Hadoop dependency management is done at the bottom under profiles -->
   <dependencyManagement>
     <dependencies>

- Once Hadoop 2.6.0 is officially released, the repository and pluginRepository entries (which point at a staging repository) are no longer needed.
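If you would rather not edit pom.xml by hand, the two version bumps in the diff above can be applied with sed. This is a minimal sketch that is not part of the original procedure. The function name bump_hive_pom is hypothetical. It rewrites only the hadoop-23.version and tez.version properties, so the repository entries still have to be added by hand.

```shell
# bump_hive_pom (hypothetical helper): apply the two version changes from
# the pom.xml diff to a Hive 0.14.0 pom.xml, keeping a .bak backup copy.
# The repository/pluginRepository additions are NOT handled here.
bump_hive_pom() {
  pom="$1"
  # "-i.bak" (suffix attached) is accepted by both GNU sed and BSD sed
  sed -i.bak \
    -e 's|<hadoop-23.version>2.5.0</hadoop-23.version>|<hadoop-23.version>2.6.0</hadoop-23.version>|' \
    -e 's|<tez.version>0.5.2-SNAPSHOT</tez.version>|<tez.version>0.5.2</tez.version>|' \
    "$pom"
}
```

Run it from apache-hive-0.14.0-src as bump_hive_pom pom.xml, then compare the result against the diff before building.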

Build with Maven

$ mvn clean install -DskipTests -Phadoop-2,dist

Configuring Hive

$ cp -r packaging/target/apache-hive-0.14.0-bin/apache-hive-0.14.0-bin /usr/local/
# Putting these export lines in ~/.bashrc or similar is recommended
$ export HIVE_HOME=/usr/local/apache-hive-0.14.0-bin
$ export PATH=$PATH:$HIVE_HOME/bin
$ cd $HIVE_HOME/conf
$ cp hive-default.xml.template hive-default.xml
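To follow the recommendation of keeping the exports in ~/.bashrc without adding duplicate lines on repeated runs, a small idempotent helper can append them. This is a sketch under the assumption of a bash-style rc file. The function name add_hive_env is hypothetical, and the paths are the ones used above.

```shell
# add_hive_env (hypothetical helper): append the HIVE_HOME/PATH exports to
# an rc file (default ~/.bashrc), skipping any line that is already there.
add_hive_env() {
  rc="${1:-$HOME/.bashrc}"
  touch "$rc"
  # Single quotes keep $PATH and $HIVE_HOME literal, so they are expanded
  # when the rc file is sourced, not when this function runs.
  for line in \
    'export HIVE_HOME=/usr/local/apache-hive-0.14.0-bin' \
    'export PATH=$PATH:$HIVE_HOME/bin'
  do
    # -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
  done
}
```

Calling add_hive_env twice leaves exactly one copy of each line. Open a new shell (or source the file) for the variables to take effect.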

Initial HDFS setup for Hive

Create the required directories

$ hdfs dfs -mkdir /tmp
$ hdfs dfs -mkdir -p /user/hive/warehouse
$ hdfs dfs -chmod g+w /tmp
$ hdfs dfs -chmod g+w /user/hive/warehouse
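The four commands above can be wrapped in a re-runnable function. Unlike a plain "hdfs dfs -mkdir /tmp", "-mkdir -p" does not fail when the directory already exists. This is a minimal sketch. The function name init_hive_hdfs_dirs is hypothetical, and the HDFS variable is an assumption added so the commands can be replaced by a stub (for example echo) for a dry run.

```shell
# HDFS: command used to reach the cluster; defaults to the real "hdfs"
# client, can be overridden (e.g. HDFS=echo) to print the commands instead.
HDFS="${HDFS:-hdfs}"

# init_hive_hdfs_dirs (hypothetical helper): create the directories Hive
# needs and make them group-writable; stops at the first failing command.
init_hive_hdfs_dirs() {
  for dir in /tmp /user/hive/warehouse; do
    $HDFS dfs -mkdir -p "$dir" || return 1
    $HDFS dfs -chmod g+w "$dir" || return 1
  done
}
```

Running HDFS=echo first and then calling init_hive_hdfs_dirs prints the four hdfs dfs invocations without touching the cluster.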

Running Hive

I will port the data and query from sinchii's blog post "とりあえずPig on Tez を動かしてみた" ("Trying out Pig on Tez for now") to Hive.

Loading the data

hive> CREATE TABLE flight (number STRING, dept INT, dest INT, equip STRING)
    > ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE;
OK
Time taken: 0.916 seconds
hive> CREATE TABLE airport (id INT, name STRING)
    > ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE;
OK
Time taken: 0.093 seconds
hive> LOAD DATA LOCAL INPATH './flight.txt' OVERWRITE INTO TABLE flight;
Loading data to table default.flight
Table default.flight stats: [numFiles=1, numRows=0, totalSize=284, rawDataSize=0]
OK
Time taken: 0.903 seconds
hive> LOAD DATA LOCAL INPATH './airport.txt' OVERWRITE INTO TABLE airport;
Loading data to table default.airport
Table default.airport stats: [numFiles=1, numRows=0, totalSize=30, rawDataSize=0]
OK
Time taken: 0.595 seconds

- In the Pig version the columns were named from and to, but because from is a reserved word in Hive, I renamed them to dept and dest respectively.

Writing the query

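Personally, I had a hard time converting the query.

- In particular, having to write SUBSTRING(number, 0, 2) twice was painful (I wish the AS alias from the SELECT list could have been used in GROUP BY).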
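Hive does not let GROUP BY refer to an alias defined in the SELECT list, which is why the expression had to be repeated. One common workaround is to compute the prefix once in a subquery and group by its alias in the outer query. The sketch below wraps such a variant in a shell function. FLIGHT_QUERY_SUBQUERY and run_subquery_variant are hypothetical names, HIVE can be overridden with a stub for a dry run, and this variant has not been run against the data used here.

```shell
# Hypothetical variant of the flight/airport query: the prefix is computed
# once in a subquery, so GROUP BY can use the alias "prefix" instead of
# repeating SUBSTRING(number, 0, 2). Hive requires an alias ("t") on the
# subquery in FROM.
FLIGHT_QUERY_SUBQUERY="
SELECT name, prefix, count(*)
FROM (
  SELECT airport.name AS name, SUBSTRING(flight.number, 0, 2) AS prefix
  FROM flight JOIN airport ON flight.dept = airport.id
  WHERE flight.equip != '777-300'
) t
GROUP BY name, prefix;
"

# HIVE: the Hive CLI command; override it (e.g. with a stub) for a dry run.
HIVE="${HIVE:-hive}"

# run_subquery_variant (hypothetical helper): run the variant on Tez.
run_subquery_variant() {
  $HIVE --hiveconf hive.execution.engine=tez -e "$FLIGHT_QUERY_SUBQUERY"
}
```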

# tezで実行する設定

hive> set hive.execution.engine=tez;

hive> SELECT name, SUBSTRING(number, 0, 2), count(*) FROM flight

> JOIN airport ON flight.dept = airport.id WHERE equip != '777-300'

> GROUP BY SUBSTRING(number, 0, 2), name;

Query ID = root_20141113002626_5b2ab149-c279-4ba8-8089-f51aedf76e76

Total jobs = 1

Launching Job 1 out of 1

Status: Running (Executing on YARN cluster with App id application_1415780973920_0008)

--------------------------------------------------------------------------------

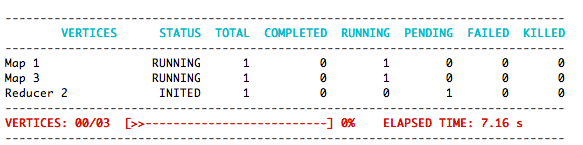

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

Map 1 .......... SUCCEEDED 1 1 0 0 0 0

Map 3 .......... SUCCEEDED 1 1 0 0 0 0

Reducer 2 ...... SUCCEEDED 1 1 0 0 0 0

--------------------------------------------------------------------------------

VERTICES: 03/03 [==========================>>] 100% ELAPSED TIME: 19.70 s

--------------------------------------------------------------------------------

OK

hnd 7G 2

nrt AP 1

nrt GK 1

hnd JL 7

nrt JL 1

hnd NH 5

Time taken: 20.202 seconds, Fetched: 6 row(s)

While the query is running, a progress bar like the one in the image is displayed and updated in real time.

- This feature was added in Hive 0.14! (HIVE-8495)

MapReduce version

# Setting to run on MapReduce
hive> set hive.execution.engine=mr;
# If you have configured MapReduce on Tez (mapreduce.framework.name=yarn-tez), switch it back
hive> set mapreduce.framework.name=yarn;
hive> SELECT name, SUBSTRING(number, 0, 2), count(*) FROM flight
    > JOIN airport ON flight.dept = airport.id WHERE equip != '777-300'
    > GROUP BY SUBSTRING(number, 0, 2), name;
Query ID = root_20141113004545_9de60583-4809-4d0f-b139-efbec88a007b
Total jobs = 1
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/apache-hive-0.14.0-bin/lib/hive-jdbc-0.14.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/11/13 00:45:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Execution log at: /tmp/root/root_20141113004545_9de60583-4809-4d0f-b139-efbec88a007b.log
2014-11-13 12:45:51 Starting to launch local task to process map join; maximum memory = 477102080
2014-11-13 12:45:53 Dump the side-table for tag: 1 with group count: 5 into file: file:/tmp/root/d0dab1cf-a125-4722-b6c2-dd7214e13c9f/hive_2014-11-13_00-45-43_180_2968392168506954651-1/-local-10004/HashTable-Stage-2/MapJoin-mapfile61--.hashtable
2014-11-13 12:45:53 Uploaded 1 File to: file:/tmp/root/d0dab1cf-a125-4722-b6c2-dd7214e13c9f/hive_2014-11-13_00-45-43_180_2968392168506954651-1/-local-10004/HashTable-Stage-2/MapJoin-mapfile61--.hashtable (375 bytes)
2014-11-13 12:45:53 End of local task; Time Taken: 2.277 sec.
Execution completed successfully
MapredLocal task succeeded
Launching Job 1 out of 1
Number of reduce tasks not specified. Estimated from input data size: 1
In order to change the average load for a reducer (in bytes):
  set hive.exec.reducers.bytes.per.reducer=<number>
In order to limit the maximum number of reducers:
  set hive.exec.reducers.max=<number>
In order to set a constant number of reducers:
  set mapreduce.job.reduces=<number>
Starting Job = job_1415780973920_0010, Tracking URL = http://localhost:8088/proxy/application_1415780973920_0010/
Kill Command = /usr/local/hadoop-2.6.0/bin/hadoop job -kill job_1415780973920_0010
Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1
2014-11-13 00:46:07,539 Stage-2 map = 0%, reduce = 0%
2014-11-13 00:46:20,779 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 4.08 sec
2014-11-13 00:46:34,223 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 7.86 sec
MapReduce Total cumulative CPU time: 7 seconds 860 msec
Ended Job = job_1415780973920_0010
MapReduce Jobs Launched:
Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 7.86 sec HDFS Read: 497 HDFS Write: 54 SUCCESS
Total MapReduce CPU Time Spent: 7 seconds 860 msec
OK
hnd 7G 2
nrt AP 1
nrt GK 1
hnd JL 7
nrt JL 1
hnd NH 5
Time taken: 53.31 seconds, Fetched: 6 row(s)
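Both runs above were done interactively with set commands. To repeat the comparison, the same settings can be passed with --hiveconf to a non-interactive hive -e call. This is a minimal sketch. FLIGHT_QUERY and run_flight_query are hypothetical names, and HIVE can be overridden with a stub for a dry run.

```shell
# HIVE: the Hive CLI command; override it (e.g. with a stub) for a dry run.
HIVE="${HIVE:-hive}"

# The same query as in both sessions above.
FLIGHT_QUERY="SELECT name, SUBSTRING(number, 0, 2), count(*) FROM flight
JOIN airport ON flight.dept = airport.id WHERE equip != '777-300'
GROUP BY SUBSTRING(number, 0, 2), name;"

# run_flight_query (hypothetical helper): run the query on "tez" or "mr".
# For "mr" it also forces mapreduce.framework.name=yarn, mirroring the
# "set" commands used in the MapReduce session.
run_flight_query() {
  engine="$1"
  case "$engine" in
    tez) $HIVE --hiveconf hive.execution.engine=tez -e "$FLIGHT_QUERY" ;;
    mr)  $HIVE --hiveconf hive.execution.engine=mr \
               --hiveconf mapreduce.framework.name=yarn -e "$FLIGHT_QUERY" ;;
    *)   echo "unknown engine: $engine" >&2; return 1 ;;
  esac
}
```

For example, in bash, run: for e in tez mr; do run_flight_query "$e"; done. Then compare the "Time taken" line Hive prints for each engine.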

Performance comparison

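Without any particular tuning, the times were:

- Tez: 20 seconds (Time taken: 20.202 seconds)

- MapReduce: 53 seconds (Time taken: 53.31 seconds)

So on this tiny dataset, Tez finished in well under half the time of MapReduce. Hive on Tez looks pretty good to me, especially the progress bar.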
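The gap can be expressed as a single ratio of the two "Time taken" values (one run each, no tuning). This is a small sketch in which the function name speedup is hypothetical. awk does the floating-point division that POSIX shell arithmetic cannot.

```shell
# speedup (hypothetical helper): ratio slow/fast, rounded to two decimals
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}

# MapReduce 53.31 s vs. Tez 20.202 s
speedup 53.31 20.202   # prints 2.64
```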