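Background

GAN, YOLO, VSLAM, and other techniques used at companies such as Google and Facebook have been catching my attention lately, so I started by running YOLO on my own machine. In short: it ran slowly because my PC is low-spec, but I confirmed that the program itself works correctly. If I get the chance, I would like to rerun the code on a higher-spec PC.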

Development environment

Windows 10

x201

Step 1: Start the Anaconda virtual environment with administrator privileges

First, open the Windows Command Prompt as an administrator.

activate yolo_v3

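This command enters the Anaconda virtual environment you created. Replace yolo_v3 with the name you gave the environment in Anaconda Navigator.

Step 2: Install the libraries

Run the following three commands to install the required libraries.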

conda install pandas opencv

conda install pytorch torchvision -c pytorch

pip install matplotlib cython
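
Before moving on, it can help to confirm that the packages are visible from the activated environment. The following is a minimal sketch, not part of the original repository: the helper name `check_modules` is hypothetical, and the list uses the usual import names for the packages installed above (`cv2` for opencv, `torch` for pytorch, `Cython` for cython).

```python
import importlib.util


def check_modules(names):
    """Return a dict mapping each top-level module name to True if it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Import names assumed for the packages installed in this step.
    required = ["pandas", "cv2", "torch", "torchvision", "matplotlib", "Cython"]
    for name, found in check_modules(required).items():
        print(f"{name:12s} {'OK' if found else 'MISSING'}")
```

Any entry reported as MISSING suggests that the corresponding install command did not complete inside this environment.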

Step 3: Download YOLOv3 and set it up

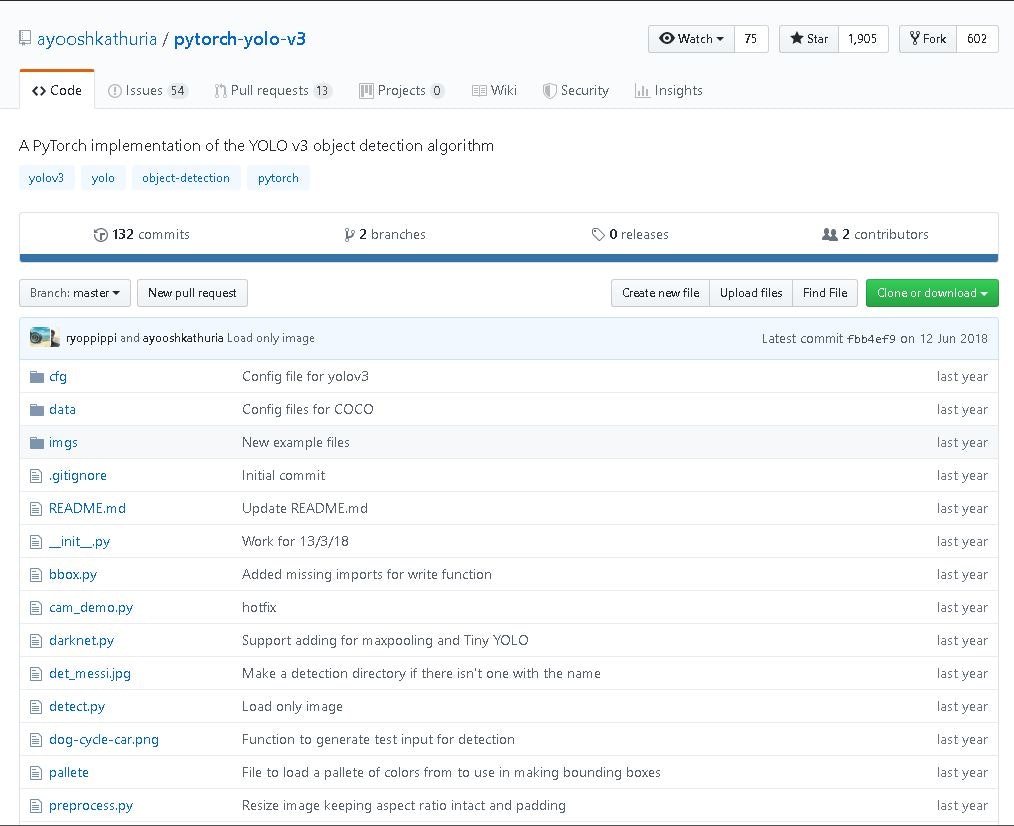

Open the GitHub repository below, click the green button, and download the files to your desktop.

https://github.com/ayooshkathuria/pytorch-yolo-v3.git

Once that download finishes, there is one more file to get. Download the yolov3.weights file from the site below to your desktop.

https://pjreddie.com/media/files/yolov3.weights

Once yolov3.weights has finished downloading, place it inside the pytorch-yolo-v3-master folder.

Step 4: Add the video file

Save the sample video you want to run detection on as samplemovie.mp4 inside the pytorch-yolo-v3-master folder.
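
Since the script depends on several files being in the right place, a quick layout check can save a failed run. This is a minimal sketch under stated assumptions: the helper `missing_files` is hypothetical, the folder name is the one used in this article, and the relative paths are the ones named in the steps above plus the defaults that appear in the video_demo.py source in this article.

```python
from pathlib import Path


def missing_files(repo_dir, required):
    """Return the entries of `required` that do not exist as files under `repo_dir`."""
    root = Path(repo_dir)
    return [rel for rel in required if not (root / rel).is_file()]


if __name__ == "__main__":
    required = [
        "video_demo.py",
        "yolov3.weights",   # downloaded in Step 3
        "cfg/yolov3.cfg",   # default of --cfg in video_demo.py
        "data/coco.names",  # class names loaded by the script
        "pallete",          # pickled color list loaded by the script
        "samplemovie.mp4",  # the video saved in Step 4
    ]
    # Adjust the folder name if you extracted the repository elsewhere.
    missing = missing_files("pytorch-yolo-v3-master", required)
    print("All files found" if not missing else f"Missing: {missing}")
```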

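Step 5: Run YOLOv3

Start YOLO with the following command. Run it from the folder that contains video_demo.py; the prompt below shows C:\github\pytorch-yolo-v3, so adjust the path to wherever you placed the folder.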

C:\github\pytorch-yolo-v3>python video_demo.py --video samplemovie.mp4

The actual source (video_demo.py) is as follows.

from __future__ import division
import time
import torch
import torch.nn as nn
from torch.autograd import Variable
import numpy as np
import cv2
from util import *
from darknet import Darknet
from preprocess import prep_image, inp_to_image, letterbox_image
import pandas as pd
import random
import pickle as pkl
import argparse

def get_test_input(input_dim, CUDA):
    img = cv2.imread("dog-cycle-car.png")
    img = cv2.resize(img, (input_dim, input_dim))
    img_ = img[:,:,::-1].transpose((2,0,1))
    img_ = img_[np.newaxis,:,:,:]/255.0
    img_ = torch.from_numpy(img_).float()
    img_ = Variable(img_)

    if CUDA:
        img_ = img_.cuda()

    return img_

def prep_image(img, inp_dim):
    """
    Prepare image for inputting to the neural network.

    Returns the input tensor, the original image, and its (width, height).
    """
    orig_im = img
    dim = orig_im.shape[1], orig_im.shape[0]
    # Resize with unchanged aspect ratio, padding to inp_dim x inp_dim
    img = (letterbox_image(orig_im, (inp_dim, inp_dim)))
    # BGR -> RGB, HWC -> CHW
    img_ = img[:,:,::-1].transpose((2,0,1)).copy()
    img_ = torch.from_numpy(img_).float().div(255.0).unsqueeze(0)
    return img_, orig_im, dim

def write(x, img):
    # x holds one detection: corners in x[1:5], class index in x[-1]
    c1 = tuple(x[1:3].int())
    c2 = tuple(x[3:5].int())
    cls = int(x[-1])
    label = "{0}".format(classes[cls])
    color = random.choice(colors)
    # Bounding box
    cv2.rectangle(img, c1, c2,color, 1)
    # Filled label background and label text
    t_size = cv2.getTextSize(label, cv2.FONT_HERSHEY_PLAIN, 1 , 1)[0]
    c2 = c1[0] + t_size[0] + 3, c1[1] + t_size[1] + 4
    cv2.rectangle(img, c1, c2,color, -1)
    cv2.putText(img, label, (c1[0], c1[1] + t_size[1] + 4), cv2.FONT_HERSHEY_PLAIN, 1, [225,255,255], 1)
    return img

def arg_parse():
    """
    Parse arguments to the detect module
    """
    parser = argparse.ArgumentParser(description='YOLO v3 Video Detection Module')

    parser.add_argument("--video", dest = 'video', help =
                        "Video to run detection upon",
                        default = "video.avi", type = str)
    parser.add_argument("--dataset", dest = "dataset", help = "Dataset on which the network has been trained", default = "pascal")
    parser.add_argument("--confidence", dest = "confidence", help = "Object Confidence to filter predictions", default = 0.5)
    parser.add_argument("--nms_thresh", dest = "nms_thresh", help = "NMS Threshold", default = 0.4)
    parser.add_argument("--cfg", dest = 'cfgfile', help =
                        "Config file",
                        default = "cfg/yolov3.cfg", type = str)
    parser.add_argument("--weights", dest = 'weightsfile', help =
                        "weightsfile",
                        default = "yolov3.weights", type = str)
    parser.add_argument("--reso", dest = 'reso', help =
                        "Input resolution of the network. Increase to increase accuracy. Decrease to increase speed",
                        default = "416", type = str)
    return parser.parse_args()

if __name__ == '__main__':
    args = arg_parse()
    confidence = float(args.confidence)
    nms_thesh = float(args.nms_thresh)
    start = 0

    CUDA = torch.cuda.is_available()

    num_classes = 80
    bbox_attrs = 5 + num_classes

    print("Loading network.....")
    model = Darknet(args.cfgfile)
    model.load_weights(args.weightsfile)
    print("Network successfully loaded")

    model.net_info["height"] = args.reso
    inp_dim = int(model.net_info["height"])
    assert inp_dim % 32 == 0
    assert inp_dim > 32

    if CUDA:
        model.cuda()

    # Run one forward pass on a test image
    model(get_test_input(inp_dim, CUDA), CUDA)

    model.eval()

    videofile = args.video

    cap = cv2.VideoCapture(videofile)

    assert cap.isOpened(), 'Cannot capture source'

    frames = 0
    start = time.time()
    while cap.isOpened():

        ret, frame = cap.read()
        if ret:

            img, orig_im, dim = prep_image(frame, inp_dim)

            im_dim = torch.FloatTensor(dim).repeat(1,2)

            if CUDA:
                im_dim = im_dim.cuda()
                img = img.cuda()

            with torch.no_grad():
                output = model(Variable(img), CUDA)
            output = write_results(output, confidence, num_classes, nms = True, nms_conf = nms_thesh)

            # An int return value is handled as "no detections": show the raw frame
            if type(output) == int:
                frames += 1
                print("FPS of the video is {:5.2f}".format( frames / (time.time() - start)))
                cv2.imshow("frame", orig_im)
                key = cv2.waitKey(1)
                if key & 0xFF == ord('q'):
                    break
                continue

            # Map boxes from the letterboxed input back to frame coordinates
            im_dim = im_dim.repeat(output.size(0), 1)
            scaling_factor = torch.min(inp_dim/im_dim,1)[0].view(-1,1)

            output[:,[1,3]] -= (inp_dim - scaling_factor*im_dim[:,0].view(-1,1))/2
            output[:,[2,4]] -= (inp_dim - scaling_factor*im_dim[:,1].view(-1,1))/2

            output[:,1:5] /= scaling_factor

            # Clamp boxes to the frame boundaries
            for i in range(output.shape[0]):
                output[i, [1,3]] = torch.clamp(output[i, [1,3]], 0.0, im_dim[i,0])
                output[i, [2,4]] = torch.clamp(output[i, [2,4]], 0.0, im_dim[i,1])

            classes = load_classes('data/coco.names')
            colors = pkl.load(open("pallete", "rb"))

            list(map(lambda x: write(x, orig_im), output))

            cv2.imshow("frame", orig_im)
            key = cv2.waitKey(1)
            if key & 0xFF == ord('q'):
                break
            frames += 1
            print("FPS of the video is {:5.2f}".format( frames / (time.time() - start)))

        else:
            break
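
The least obvious part of the main loop is the arithmetic that maps boxes from the square, letterboxed network input back to pixel coordinates in the original frame. The sketch below restates that arithmetic for a single box in plain Python so it can be followed without tensors. The function name `unletterbox_box` and the sample numbers are illustrative assumptions, not part of the repository.

```python
def unletterbox_box(box, inp_dim, im_w, im_h):
    """Map (x1, y1, x2, y2) from the letterboxed square input to frame pixels."""
    x1, y1, x2, y2 = box
    # Scale used by the letterbox: the tighter of the two ratios.
    scale = min(inp_dim / im_w, inp_dim / im_h)
    # Padding on each side that centers the resized frame in the square.
    pad_x = (inp_dim - scale * im_w) / 2
    pad_y = (inp_dim - scale * im_h) / 2

    def to_frame(value, pad, limit):
        # Remove the padding, undo the scaling, then clamp to the frame.
        return min(max((value - pad) / scale, 0.0), float(limit))

    return (
        to_frame(x1, pad_x, im_w),
        to_frame(y1, pad_y, im_h),
        to_frame(x2, pad_x, im_w),
        to_frame(y2, pad_y, im_h),
    )


if __name__ == "__main__":
    # A 1280x720 frame inside a 416x416 input: about 91 rows of padding
    # sit above and below the resized image.
    print(unletterbox_box((104, 91, 312, 325), 416, 1280, 720))
```

The script performs the same subtraction, division, and clamping on all detections at once through tensor indexing.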

Supplement: using a camera instead of a video

Running cam_demo.py as-is produces the following error.

python cam_demo.py
Traceback (most recent call last):
  File "cam_demo.py", line 123, in <module>
    im_dim = im_dim.cuda()
NameError: name 'im_dim' is not defined

Line 119 of cam_demo.py is commented out. Uncommenting it defines im_dim, which resolves the error and lets the camera input run.

The line in question is:

im_dim = torch.FloatTensor(dim).repeat(1,2)
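
To see why the commented-out line matters, note that in the cam_demo.py source shown in this article `im_dim` is only used inside the `if CUDA:` branch, so the error should appear only when `torch.cuda.is_available()` returns True. The following plain-Python sketch mimics that order of operations with lists standing in for tensors. `process_frame` and its parameters are hypothetical names for illustration, and the nested list stands in for the 1x4 result of `torch.FloatTensor(dim).repeat(1,2)`.

```python
def process_frame(dim, cuda_available, line_enabled):
    """Mimic the assignment order in cam_demo.py's loop using plain lists."""
    if line_enabled:
        # Stand-in for: im_dim = torch.FloatTensor(dim).repeat(1,2)
        im_dim = [list(dim) * 2]
    if cuda_available:
        # Stand-in for: im_dim = im_dim.cuda()
        # Fails here if im_dim was never assigned.
        im_dim = list(im_dim)
        return im_dim
    return None


if __name__ == "__main__":
    print(process_frame((640, 480), cuda_available=True, line_enabled=True))
    try:
        process_frame((640, 480), cuda_available=True, line_enabled=False)
    except NameError as exc:
        # Inside a function this surfaces as UnboundLocalError,
        # which is a subclass of NameError.
        print("error:", exc)
```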

For reference, cam_demo.py is shown below.

from __future__ import division
import time
import torch
import torch.nn as nn
from torch.autograd import Variable
import numpy as np
import cv2
from util import *
from darknet import Darknet
from preprocess import prep_image, inp_to_image
import pandas as pd
import random
import argparse
import pickle as pkl

def get_test_input(input_dim, CUDA):
    img = cv2.imread("imgs/messi.jpg")
    img = cv2.resize(img, (input_dim, input_dim))
    img_ = img[:,:,::-1].transpose((2,0,1))
    img_ = img_[np.newaxis,:,:,:]/255.0
    img_ = torch.from_numpy(img_).float()
    img_ = Variable(img_)

    if CUDA:
        img_ = img_.cuda()

    return img_

def prep_image(img, inp_dim):
    """
    Prepare image for inputting to the neural network.

    Returns the input tensor, the original image, and its (width, height).
    """
    orig_im = img
    dim = orig_im.shape[1], orig_im.shape[0]
    # Plain resize to inp_dim x inp_dim (no letterboxing in the camera demo)
    img = cv2.resize(orig_im, (inp_dim, inp_dim))
    # BGR -> RGB, HWC -> CHW
    img_ = img[:,:,::-1].transpose((2,0,1)).copy()
    img_ = torch.from_numpy(img_).float().div(255.0).unsqueeze(0)
    return img_, orig_im, dim

def write(x, img):
    c1 = tuple(x[1:3].int())
    c2 = tuple(x[3:5].int())
    cls = int(x[-1])
    label = "{0}".format(classes[cls])
    color = random.choice(colors)
    cv2.rectangle(img, c1, c2,color, 1)
    t_size = cv2.getTextSize(label, cv2.FONT_HERSHEY_PLAIN, 1 , 1)[0]
    c2 = c1[0] + t_size[0] + 3, c1[1] + t_size[1] + 4
    cv2.rectangle(img, c1, c2,color, -1)
    cv2.putText(img, label, (c1[0], c1[1] + t_size[1] + 4), cv2.FONT_HERSHEY_PLAIN, 1, [225,255,255], 1)
    return img

def arg_parse():
    """
    Parse arguments to the detect module
    """
    parser = argparse.ArgumentParser(description='YOLO v3 Cam Demo')
    parser.add_argument("--confidence", dest = "confidence", help = "Object Confidence to filter predictions", default = 0.25)
    parser.add_argument("--nms_thresh", dest = "nms_thresh", help = "NMS Threshold", default = 0.4)
    parser.add_argument("--reso", dest = 'reso', help =
                        "Input resolution of the network. Increase to increase accuracy. Decrease to increase speed",
                        default = "160", type = str)
    return parser.parse_args()

if __name__ == '__main__':
    cfgfile = "cfg/yolov3.cfg"
    weightsfile = "yolov3.weights"
    num_classes = 80

    args = arg_parse()
    confidence = float(args.confidence)
    nms_thesh = float(args.nms_thresh)
    start = 0
    CUDA = torch.cuda.is_available()

    bbox_attrs = 5 + num_classes

    model = Darknet(cfgfile)
    model.load_weights(weightsfile)

    model.net_info["height"] = args.reso
    inp_dim = int(model.net_info["height"])

    assert inp_dim % 32 == 0
    assert inp_dim > 32

    if CUDA:
        model.cuda()

    model.eval()

    videofile = 'video.avi'

    # Device index 0 selects the default camera
    cap = cv2.VideoCapture(0)

    assert cap.isOpened(), 'Cannot capture source'

    frames = 0
    start = time.time()
    while cap.isOpened():

        ret, frame = cap.read()
        if ret:

            img, orig_im, dim = prep_image(frame, inp_dim)

            # Commented out in the original file; uncomment to avoid the NameError
#            im_dim = torch.FloatTensor(dim).repeat(1,2)

            if CUDA:
                im_dim = im_dim.cuda()
                img = img.cuda()

            output = model(Variable(img), CUDA)
            output = write_results(output, confidence, num_classes, nms = True, nms_conf = nms_thesh)

            if type(output) == int:
                frames += 1
                print("FPS of the video is {:5.2f}".format( frames / (time.time() - start)))
                cv2.imshow("frame", orig_im)
                key = cv2.waitKey(1)
                if key & 0xFF == ord('q'):
                    break
                continue

            # Normalize box coordinates to [0, 1], then scale to the frame size
            output[:,1:5] = torch.clamp(output[:,1:5], 0.0, float(inp_dim))/inp_dim

#            im_dim = im_dim.repeat(output.size(0), 1)
            output[:,[1,3]] *= frame.shape[1]
            output[:,[2,4]] *= frame.shape[0]

            classes = load_classes('data/coco.names')
            colors = pkl.load(open("pallete", "rb"))

            list(map(lambda x: write(x, orig_im), output))

            cv2.imshow("frame", orig_im)
            key = cv2.waitKey(1)
            if key & 0xFF == ord('q'):
                break
            frames += 1
            print("FPS of the video is {:5.2f}".format( frames / (time.time() - start)))

        else:
            break
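
Both scripts report speed as `frames / (time.time() - start)`, which is the average frame rate since the loop began rather than the instantaneous rate. The sketch below isolates that calculation in a small helper so the behavior is easier to see. The class `FpsCounter` is a hypothetical name, and it uses `time.perf_counter` instead of `time.time` as an assumption about what suits interval timing.

```python
import time


class FpsCounter:
    """Running-average FPS: frames processed divided by elapsed seconds."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._start = clock()
        self.frames = 0

    def tick(self):
        """Count one frame and return the average FPS since construction."""
        self.frames += 1
        elapsed = self._clock() - self._start
        return self.frames / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    counter = FpsCounter()
    for _ in range(3):
        time.sleep(0.05)  # stand-in for processing one frame
        print("FPS of the video is {:5.2f}".format(counter.tick()))
```

Because the figure is an average, a slow first frame keeps pulling the reported value down for a while even after later frames speed up.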

References

https://qiita.com/daiarg/items/03c55c82fdc6e623bf07

https://qiita.com/goodboy_max/items/b75bb9eea52831fcdf15