Environment

GeForce GTX 1070 (8GB)

ASRock Z170M Pro4S [Intel Z170 chipset]

Ubuntu 16.04 LTS desktop amd64

TensorFlow v1.1.0

cuDNN v5.1 for Linux

CUDA v8.0

Python 3.5.2

IPython 6.0.0 -- An enhanced Interactive Python.

gcc (Ubuntu 5.4.0-6ubuntu1~16.04.4) 5.4.0 20160609

GNU bash, version 4.3.48(1)-release (x86_64-pc-linux-gnu)

Training code v0.1: http://qiita.com/7of9/items/5819f36e78cc4290614e

This is a continuation of http://qiita.com/7of9/items/49d073f964e5689d9b96.

The batch size is set to 2.

The learning rate is left at the Adam default.
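For reference, tf.train.AdamOptimizer() called with no arguments uses learning_rate=0.001, beta1=0.9, beta2=0.999 and epsilon=1e-08. The block below is a minimal pure-Python sketch of one Adam update on a single scalar, written only to show what that default step size means. It is not the TensorFlow implementation, and adam_step and the toy objective are hypothetical names introduced for illustration.

```python
import math


def adam_step(param, grad, m, v, t,
              lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter (t starts at 1).

    Hypothetical helper for illustration; the defaults mirror those of
    tf.train.AdamOptimizer().
    """
    m = beta1 * m + (1.0 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad  # second-moment estimate
    # Step size with bias correction folded in
    lr_t = lr * math.sqrt(1.0 - beta2 ** t) / (1.0 - beta1 ** t)
    param = param - lr_t * m / (math.sqrt(v) + eps)
    return param, m, v


# Toy objective (an assumption, not from the article): f(w) = (w - 3)^2
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2.0 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t)
    print(t, w)
```

On the first step the parameter moves by roughly lr, almost independently of the gradient magnitude, which is why the default of 0.001 sets the scale of the early updates.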

What was compared

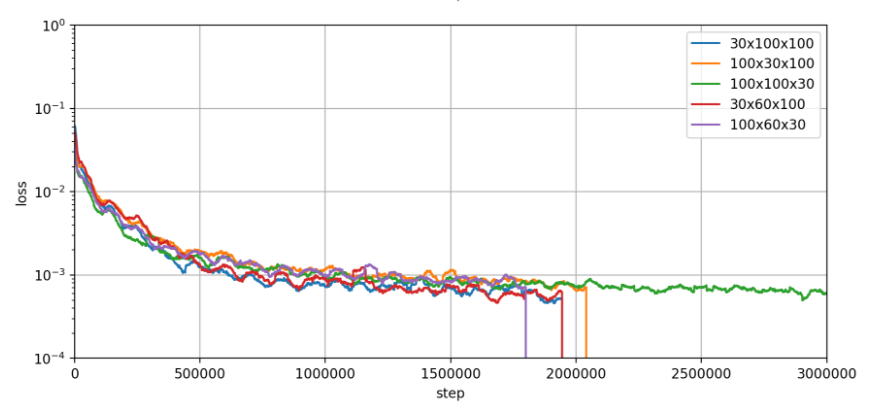

I varied the number of nodes in each of the three hidden layers and watched how the loss evolved.
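To get a feel for how different these configurations are in size, the sketch below counts the trainable parameters of a fully connected network with 5 inputs and 6 outputs (the dimensions used by the training script), assuming one weight matrix and one bias vector per layer. count_parameters is a hypothetical helper, and the list of configurations is taken from the version history and the results of this article.

```python
def count_parameters(n_inputs, hidden_sizes, n_outputs):
    """Count weights and biases of a fully connected network.

    Hypothetical helper; assumes every layer has a bias term.
    """
    sizes = [n_inputs] + list(hidden_sizes) + [n_outputs]
    total = 0
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        total += fan_in * fan_out + fan_out  # weight matrix + bias vector
    return total


for hidden in ([7, 7, 7], [30, 60, 100], [30, 100, 100], [100, 100, 100]):
    print(hidden, count_parameters(5, hidden, 6))
```

The parameter count alone does not predict which configuration reaches the lowest loss; it only gives a rough scale for the comparison.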

code

Edit the hiddens = line below to change the hidden layer configuration.

learn_mr_mi_170722.py

import numpy as np
import tensorflow as tf
import tensorflow.contrib.slim as slim

"""
v0.10 Jul. 31, 2017
  - batch size=2, learning rate=0.001, 30x100x100
  - increase step from [3,000,000] to [30,000,000]
v0.9 Jul. 29, 2017
  - change network from [30x100x100] to [100x100x100]
v0.8 Jul. 28, 2017
  - change batch size from [4] to [2]
  - change learning rate from [0.0001] to [0.001]
v0.7 Jul. 28, 2017
  - change learning rate from [0.001] to [0.0001]
v0.6 Jul. 27, 2017
  - increase step from [1,000,000] to [3,000,000]
v0.5 Jul. 25, 2017
  - change network to [30,100,100]
  - increase step to [1,000,000]
v0.4 Jul. 23, 2017
  - increase step from [90000] to [270000]
v0.3 Jul. 22, 2017
  - output model variables
v0.2 Jul. 22, 2017
  - increase step from [30000] to [90000]
  - change [capacity]
v0.1 Jul. 22, 2017
  - increase network structure from [7,7,7] to [100,100,100]
  - increase dimension of [input_ph], [output_ph]
  - alter read_and_decode() to treat 5 input-, 6 output- nodes
  - alter [IN_FILE] to the symbolic linked file

:reference: [learnExr_170504.py] to expand dimensions to [input:3,output:6]

=== branched from [learn_sineCurve_170708.py] ===
v0.6 Jul. 09, 2017
  - modify for PEP8
  - print prediction after learning
v0.5 Jul. 09, 2017
  - fix bug > [Attempting to use uninitialized value hidden/hidden_1/weights]
v0.4 Jul. 09, 2017
  - fix bug > stops only for one epoch
    + set [num_epochs=None] for string_input_producer()
  - change parameters for shuffle_batch()
  - implement training
v0.3 Jul. 09, 2017
  - fix warning > use tf.local_variables_initializer() instead of
       initialize_local_variables()
  - fix warning > use tf.global_variables_initializer() instead of
       initialize_all_variables()
v0.2 Jul. 08, 2017
  - fix bug > OutOfRangeError (current size 0)
    + use [tf.initialize_local_variables()]
v0.1 Jul. 08, 2017
  - only read [.tfrecords]
    + add inputs_xy()
    + add read_and_decode()
"""

# codingrule: PEP8

IN_FILE = 'LN-IntField-Y_170722.tfrecords'

def read_and_decode(filename_queue):
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    features = tf.parse_single_example(
        serialized_example,
        features={
            'xpos_raw': tf.FixedLenFeature([], tf.string),
            'ypos_raw': tf.FixedLenFeature([], tf.string),
            'zpos_raw': tf.FixedLenFeature([], tf.string),
            'mr_raw': tf.FixedLenFeature([], tf.string),
            'mi_raw': tf.FixedLenFeature([], tf.string),
            'exr_raw': tf.FixedLenFeature([], tf.string),
            'exi_raw': tf.FixedLenFeature([], tf.string),
            'eyr_raw': tf.FixedLenFeature([], tf.string),
            'eyi_raw': tf.FixedLenFeature([], tf.string),
            'ezr_raw': tf.FixedLenFeature([], tf.string),
            'ezi_raw': tf.FixedLenFeature([], tf.string),
        })
    xpos_raw = tf.decode_raw(features['xpos_raw'], tf.float32)
    ypos_raw = tf.decode_raw(features['ypos_raw'], tf.float32)
    zpos_raw = tf.decode_raw(features['zpos_raw'], tf.float32)
    mr_raw = tf.decode_raw(features['mr_raw'], tf.float32)
    mi_raw = tf.decode_raw(features['mi_raw'], tf.float32)
    exr_raw = tf.decode_raw(features['exr_raw'], tf.float32)
    exi_raw = tf.decode_raw(features['exi_raw'], tf.float32)
    eyr_raw = tf.decode_raw(features['eyr_raw'], tf.float32)
    eyi_raw = tf.decode_raw(features['eyi_raw'], tf.float32)
    ezr_raw = tf.decode_raw(features['ezr_raw'], tf.float32)
    ezi_raw = tf.decode_raw(features['ezi_raw'], tf.float32)
    xpos_org = tf.reshape(xpos_raw, [1])
    ypos_org = tf.reshape(ypos_raw, [1])
    zpos_org = tf.reshape(zpos_raw, [1])
    mr_org = tf.reshape(mr_raw, [1])
    mi_org = tf.reshape(mi_raw, [1])
    exr_org = tf.reshape(exr_raw, [1])
    exi_org = tf.reshape(exi_raw, [1])
    eyr_org = tf.reshape(eyr_raw, [1])
    eyi_org = tf.reshape(eyi_raw, [1])
    ezr_org = tf.reshape(ezr_raw, [1])
    ezi_org = tf.reshape(ezi_raw, [1])
    # input
    wrk = [xpos_org[0], ypos_org[0], zpos_org[0], mr_org[0], mi_org[0]]
    inputs = tf.stack(wrk)
    # output
    wrk = [exr_org[0], exi_org[0],
           eyr_org[0], eyi_org[0],
           ezr_org[0], ezi_org[0]]
    outputs = tf.stack(wrk)
    return inputs, outputs

def inputs_xy():
    filename = IN_FILE
    filequeue = tf.train.string_input_producer(
        [filename], num_epochs=None)
    in_org, out_org = read_and_decode(filequeue)
    return in_org, out_org

in_orgs, out_orgs = inputs_xy()
batch_size = 2  # [4]
# Ref: cifar10_input.py
min_fraction_of_examples_in_queue = 0.2  # 0.4
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 223872  # 223872 or 9328
min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                         min_fraction_of_examples_in_queue)
cpcty = min_queue_examples + 3 * batch_size
in_batch, out_batch = tf.train.shuffle_batch([in_orgs, out_orgs],
                                             batch_size,
                                             capacity=cpcty,
                                             min_after_dequeue=batch_size)

input_ph = tf.placeholder("float", [None, 5])
output_ph = tf.placeholder("float", [None, 6])  # [6]

# network
hiddens = slim.stack(input_ph, slim.fully_connected, [30, 100, 100],
                     activation_fn=tf.nn.sigmoid, scope="hidden")
prediction = slim.fully_connected(hiddens, 6,
                                  activation_fn=None, scope="output")
loss = tf.contrib.losses.mean_squared_error(prediction, output_ph)

train_op = slim.learning.create_train_op(loss, tf.train.AdamOptimizer())

init_op = [tf.global_variables_initializer(), tf.local_variables_initializer()]

with tf.Session() as sess:
    sess.run(init_op)
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    try:
        for idx in range(30000000):  # 3000000
            inpbt, outbt = sess.run([in_batch, out_batch])
            _, t_loss = sess.run([train_op, loss],
                                 feed_dict={input_ph: inpbt, output_ph: outbt})
            if (idx + 1) % 100 == 0:
                print("%d,%f" % (idx+1, t_loss))
    finally:
        coord.request_stop()

    # output the model
    model_variables = slim.get_model_variables()
    res = sess.run(model_variables)
    np.save('model_variables_170722.npy', res)

    coord.join(threads)
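The queue sizing and the amount of data seen during training follow from the constants in the script. The short calculation below reproduces them in plain Python. The variable names n_steps and passes_over_data are introduced here for illustration, and the estimate of passes over the data assumes that 223872 really is the number of records in the .tfrecords file.

```python
# Constants copied from the training script
batch_size = 2
min_fraction_of_examples_in_queue = 0.2
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 223872
n_steps = 30000000  # loop count in the training script

# Same arithmetic as the script uses for shuffle_batch()
min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                         min_fraction_of_examples_in_queue)
capacity = min_queue_examples + 3 * batch_size

# Rough number of passes over the data (assumes the file holds
# NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN records)
passes_over_data = n_steps * batch_size / NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN

print(min_queue_examples, capacity, passes_over_data)
```

Under these assumptions the queue capacity is 44780 and the run covers roughly 268 passes over the data.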

Results

The following configurations reduced the loss the most:

- 30x100x100

- 30x60x100
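Because the script prints one "step,loss" line every 100 steps and a batch size of 2 makes individual values noisy, one way to compare configurations is to average the last few logged values of each run. The following is a minimal sketch under that assumption, not the procedure used to produce the plot above. parse_loss_log and mean_tail_loss are hypothetical helpers, and the sample values are made up.

```python
def parse_loss_log(lines):
    """Parse 'step,loss' lines (the format printed by the script)."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        step_str, loss_str = line.split(",")
        records.append((int(step_str), float(loss_str)))
    return records


def mean_tail_loss(records, n_tail=100):
    """Average the loss over the last n_tail logged entries."""
    tail = records[-n_tail:]
    return sum(loss for _, loss in tail) / len(tail)


# Made-up sample log, for illustration only
sample = ["100,0.250000", "200,0.125000", "300,0.062500", "400,0.031250"]
records = parse_loss_log(sample)
print(mean_tail_loss(records, n_tail=2))
```

In practice the lines would come from the redirected output of each run, for example one log file per hiddens setting.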