Introduction

This article sets up Kubernetes on a Sakura VPS running CoreOS Container Linux.

- CoreOS Container Linux (Stable: 1911.5.0)

- Kubernetes 1.13.1 (a single master with no separate worker nodes; RBAC enabled)

- etcd3 (run in Docker, secured with TLS)

- Container Linux Config (Ignition)

- Canal for the container network

- CoreDNS

- One Sakura VPS instance (1 GB memory plan)

- zram

- swap

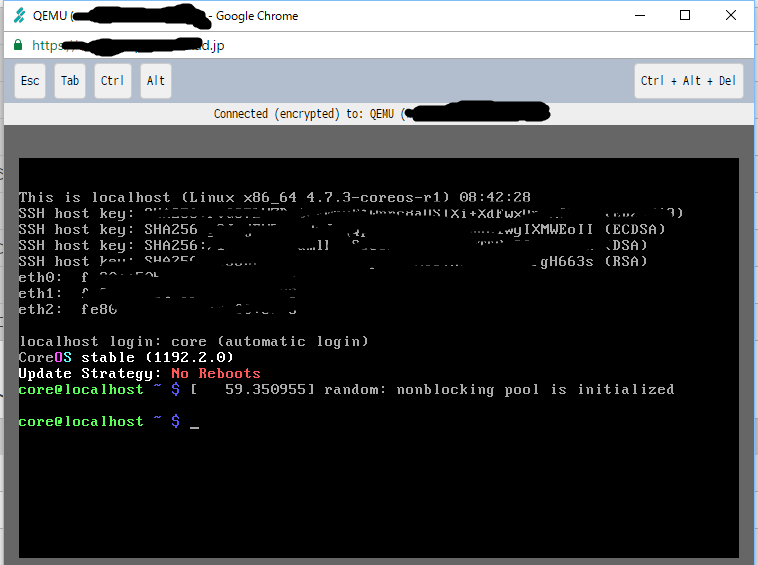

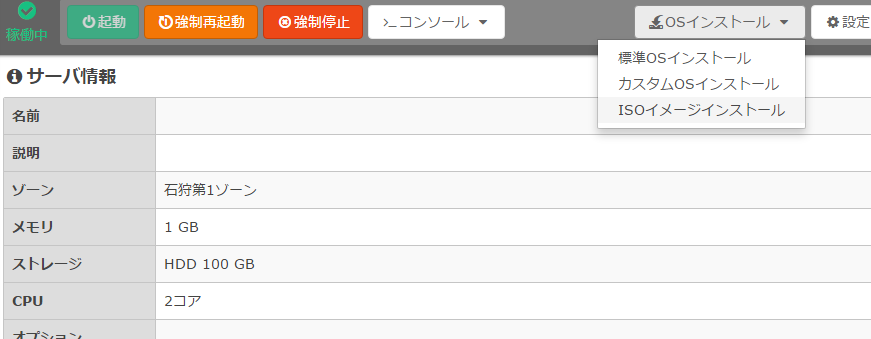

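Upload the CoreOS ISO image to the Sakura VPS

Download the ISO image from the following URL.

https://stable.release.core-os.net/amd64-usr/1632.3.0/coreos_production_iso_image.iso

Issue an SFTP account from the management console and upload the downloaded ISO image over SFTP.

Boot the VPS from the ISO image.

Once it has booted, enter the following in the console, replacing the placeholders first.

- Replace ${MASTER_IP} with the publicly routable IP of the VPS

- Replace ${GATEWAY} with the value for your network

- Replace ${DNS1} with the value for your network

- Replace ${DNS2} with the value for your network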

$ sudo vi /etc/systemd/network/static.network

[Match]

Name=eth0

[Network]

Address=${MASTER_IP}/23

Gateway=${GATEWAY}.1

DNS=${DNS1}

DNS=${DNS2}

$ sudo systemctl restart systemd-networkd

$ sudo passwd core
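
The unit file above can also be generated from shell variables instead of being typed by hand in the console. The following is a minimal sketch: `render_static_network` is a hypothetical helper name, the addresses are documentation-range examples rather than real Sakura VPS values, and `GATEWAY` is assumed to hold the first three octets of the gateway address because the template appends `.1` to it.

```shell
# Sketch: print a systemd-networkd unit equivalent to static.network above.
# render_static_network is a hypothetical helper, not part of CoreOS.
render_static_network() {
  master_ip=$1
  gateway=$2
  dns1=$3
  dns2=$4
  cat <<EOF
[Match]
Name=eth0

[Network]
Address=${master_ip}/23
Gateway=${gateway}.1
DNS=${dns1}
DNS=${dns2}
EOF
}

# Example values only (assumptions taken from documentation address ranges).
render_static_network 203.0.113.10 203.0.113 198.51.100.1 198.51.100.2
```

On the VPS, the output could be piped to `sudo tee /etc/systemd/network/static.network` before restarting systemd-networkd.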

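Edit the following YAML to match your environment.

- Replace the ssh-rsa key with your own public key

- Replace ${MASTER_IP} with the publicly routable IP of the VPS

- Replace ${GATEWAY} with the value for your network

- Replace ${DNS1} with the value for your network

- Replace ${DNS2} with the value for your network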
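
The placeholder substitutions listed above can also be scripted once the config is saved to a file. This is a minimal sketch under stated assumptions: `fill_placeholders` is a hypothetical helper, the values are documentation-range examples, and a four-line stand-in is used in place of the real config file. The SSH public key still has to be replaced by hand.

```shell
# Sketch: substitute the ${...} placeholders in a saved copy of the config.
# fill_placeholders is a hypothetical helper; the values below are examples.
MASTER_IP=203.0.113.10
GATEWAY=203.0.113
DNS1=198.51.100.1
DNS2=198.51.100.2

fill_placeholders() {
  # $1 = path to the template; the filled-in result is written to stdout.
  sed -e 's|[$]{MASTER_IP}|'"$MASTER_IP"'|g' \
      -e 's|[$]{GATEWAY}|'"$GATEWAY"'|g' \
      -e 's|[$]{DNS1}|'"$DNS1"'|g' \
      -e 's|[$]{DNS2}|'"$DNS2"'|g' "$1"
}

# Demonstrate on a small stand-in for the real config file.
template=$(mktemp)
printf '%s\n' 'Address=${MASTER_IP}/23' 'Gateway=${GATEWAY}.1' \
  'DNS=${DNS1}' 'DNS=${DNS2}' > "$template"
filled=$(fill_placeholders "$template")
printf '%s\n' "$filled"
rm -f "$template"

# Any placeholder that was missed would be reported here.
if printf '%s\n' "$filled" | grep -q '[$]{'; then
  echo "unreplaced placeholders remain" >&2
fi
```

Writing the result to a new file rather than editing in place keeps the template reusable if the VPS is reinstalled with a different address.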

# /usr/share/oem/config.ign
# To re-apply Ignition:
# sudo touch /boot/coreos/first_boot
# sudo rm /etc/machine-id
# sudo reboot
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - ssh-rsa AAAAB3NzaC1yc2EAAAADAQAB・・・ZiDsoTMHdHt0nswTkLhl1NAdEHBqt core@localhost
storage:
  files:
    - path: /var/lib/iptables/rules-save
      filesystem: root
      mode: 0644
      contents:
        inline: |
          *filter
          :INPUT DROP [0:0]
          :FORWARD DROP [0:0]
          :OUTPUT ACCEPT [0:0]
          -A INPUT -i lo -j ACCEPT
          -A INPUT -p icmp -j ACCEPT
          -A INPUT -p tcp -m state --state ESTABLISHED,RELATED -j ACCEPT
          -A INPUT -p udp --sport 53 -j ACCEPT
          -A INPUT -p tcp --dport 22 -j ACCEPT
          -A INPUT -p tcp --dport 80 -j ACCEPT
          -A INPUT -p tcp --dport 443 -j ACCEPT
          -A INPUT -p tcp --dport 6443 -j ACCEPT
          COMMIT

    - path: /etc/systemd/timesyncd.conf
      filesystem: root
      mode: 0644
      contents:
        inline: |
          [Time]
          NTP=ntp.nict.jp ntp.jst.mfeed.ad.jp

    - path: /etc/zrm/zrm.sh
      filesystem: root
      mode: 0755
      contents:
        inline: |
          #!/bin/bash
          ### BEGIN INIT INFO
          # Provides: zram
          # Required-Start:
          # Required-Stop:
          # Default-Start: 2 3 4 5
          # Default-Stop: 0 1 6
          # Short-Description: Increased Performance In Linux With zRam (Virtual Swap Compressed in RAM)
          # Description: Adapted from systemd scripts at https://github.com/mystilleef/FedoraZram
          ### END INIT INFO
          start() {
            # get the number of CPUs
            num_cpus=$(grep -c processor /proc/cpuinfo)
            # if something goes wrong, assume we have 1
            [ "$num_cpus" != 0 ] || num_cpus=1
            # set decremented number of CPUs
            decr_num_cpus=$((num_cpus - 1))
            # get the amount of memory in the machine
            mem_total_kb=$(grep MemTotal /proc/meminfo | grep -E --only-matching '[[:digit:]]+')
            # we will only assign 40% (2/5) of system memory to zram
            mem_total_kb=$((mem_total_kb * 2 / 5))
            mem_total=$((mem_total_kb * 1024))
            # load dependency modules
            modprobe zram num_devices=$num_cpus
            # initialize the devices
            for i in $(seq 0 $decr_num_cpus); do
              echo $((mem_total / num_cpus)) > /sys/block/zram$i/disksize
            done
            # Creating swap filesystems
            for i in $(seq 0 $decr_num_cpus); do
              mkswap /dev/zram$i
            done
            # Switch the swaps on
            for i in $(seq 0 $decr_num_cpus); do
              swapon -p 100 /dev/zram$i
            done
          }
          stop() {
            for i in $(grep '^/dev/zram' /proc/swaps | awk '{ print $1 }'); do
              swapoff "$i"
            done
            if grep -q "^zram " /proc/modules; then
              sleep 1
              rmmod zram
            fi
          }
          case "$1" in
            start)
              start
              ;;
            stop)
              stop
              ;;
            restart)
              stop
              sleep 3
              start
              ;;
          esac
          wait

    - path: /etc/kubernetes/manifests/kube-apiserver.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          apiVersion: v1
          kind: Pod
          metadata:
            name: kube-apiserver
            namespace: kube-system
          spec:
            hostNetwork: true
            containers:
            - name: kube-apiserver
              image: gcr.io/google_containers/hyperkube-amd64:v1.13.1
              command:
              - /hyperkube
              - apiserver
              - --bind-address=0.0.0.0
              - --etcd-servers=https://${MASTER_IP}:2379
              - --etcd-cafile=/etc/kubernetes/ssl/ca.pem
              - --etcd-certfile=/etc/kubernetes/ssl/apiserver.pem
              - --etcd-keyfile=/etc/kubernetes/ssl/apiserver-key.pem
              - --allow-privileged=true
              - --apiserver-count=1
              - --endpoint-reconciler-type=lease
              - --service-cluster-ip-range=10.3.0.0/24
              - --secure-port=6443
              - --advertise-address=${MASTER_IP}
              - --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota
              - --storage-backend=etcd3
              - --tls-cert-file=/etc/kubernetes/ssl/apiserver.pem
              - --tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem
              - --client-ca-file=/etc/kubernetes/ssl/ca.pem
              - --service-account-key-file=/etc/kubernetes/ssl/apiserver-key.pem
              - --runtime-config=extensions/v1beta1/networkpolicies=true
              - --service-node-port-range=25-32767
              - --authorization-mode=RBAC
              ports:
              - containerPort: 6443
                hostPort: 6443
                name: https
              - containerPort: 8080
                hostPort: 8080
                name: local
              volumeMounts:
              - mountPath: /etc/kubernetes/ssl
                name: ssl-certs-kubernetes
                readOnly: true
            volumes:
            - hostPath:
                path: /etc/kubernetes/ssl
              name: ssl-certs-kubernetes

    - path: /etc/kubernetes/manifests/kube-proxy.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          apiVersion: v1
          kind: Pod
          metadata:
            name: kube-proxy
            namespace: kube-system
          spec:
            hostNetwork: true
            containers:
            - name: kube-proxy
              image: gcr.io/google_containers/hyperkube-amd64:v1.13.1
              command:
              - /hyperkube
              - proxy
              - --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml
              - --proxy-mode=iptables
              securityContext:
                privileged: true
              volumeMounts:
              - mountPath: /etc/kubernetes/worker-kubeconfig.yaml
                name: "kubeconfig"
                readOnly: true
              - mountPath: /etc/kubernetes/ssl
                name: "etc-kube-ssl"
                readOnly: true
            volumes:
            - name: "kubeconfig"
              hostPath:
                path: "/etc/kubernetes/worker-kubeconfig.yaml"
            - name: "etc-kube-ssl"
              hostPath:
                path: "/etc/kubernetes/ssl"

    - path: /etc/kubernetes/manifests/kube-controller-manager.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          apiVersion: v1
          kind: Pod
          metadata:
            name: kube-controller-manager
            namespace: kube-system
          spec:
            hostNetwork: true
            containers:
            - name: kube-controller-manager
              image: gcr.io/google_containers/hyperkube-amd64:v1.13.1
              command:
              - /hyperkube
              - controller-manager
              - --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml
              - --leader-elect=true
              - --service-account-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem
              - --root-ca-file=/etc/kubernetes/ssl/ca.pem
              - --cluster-cidr=10.244.0.0/16
              - --allocate-node-cidrs=true
              livenessProbe:
                httpGet:
                  host: 127.0.0.1
                  path: /healthz
                  port: 10252
                initialDelaySeconds: 15
                timeoutSeconds: 15
              volumeMounts:
              - mountPath: /etc/kubernetes/worker-kubeconfig.yaml
                name: "kubeconfig"
                readOnly: true
              - mountPath: /etc/kubernetes/ssl
                name: ssl-certs-kubernetes
                readOnly: true
            volumes:
            - name: "kubeconfig"
              hostPath:
                path: "/etc/kubernetes/worker-kubeconfig.yaml"
            - hostPath:
                path: /etc/kubernetes/ssl
              name: ssl-certs-kubernetes

    - path: /etc/kubernetes/manifests/kube-scheduler.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          apiVersion: v1
          kind: Pod
          metadata:
            name: kube-scheduler
            namespace: kube-system
          spec:
            hostNetwork: true
            containers:
            - name: kube-scheduler
              image: gcr.io/google_containers/hyperkube-amd64:v1.13.1
              command:
              - /hyperkube
              - scheduler
              - --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml
              - --leader-elect=true
              livenessProbe:
                httpGet:
                  host: 127.0.0.1
                  path: /healthz
                  port: 10251
                initialDelaySeconds: 15
                timeoutSeconds: 15
              volumeMounts:
              - mountPath: /etc/kubernetes/worker-kubeconfig.yaml
                name: "kubeconfig"
                readOnly: true
              - mountPath: /etc/kubernetes/ssl
                name: "etc-kube-ssl"
                readOnly: true
            volumes:
            - name: "kubeconfig"
              hostPath:
                path: "/etc/kubernetes/worker-kubeconfig.yaml"
            - name: "etc-kube-ssl"
              hostPath:
                path: "/etc/kubernetes/ssl"

    - path: /etc/kubernetes/worker-kubeconfig.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          apiVersion: v1
          kind: Config
          clusters:
          - name: local
            cluster:
              certificate-authority: /etc/kubernetes/ssl/ca.pem
              server: https://${MASTER_IP}:6443
          users:
          - name: kubelet
            user:
              client-certificate: /etc/kubernetes/ssl/worker.pem
              client-key: /etc/kubernetes/ssl/worker-key.pem
          contexts:
          - context:
              cluster: local
              user: kubelet
            name: kubelet-context
          current-context: kubelet-context

    - path: /etc/kubernetes/kubelet-conf.yaml
      filesystem: root
      mode: 0755
      contents:
        inline: |
          kind: KubeletConfiguration
          apiVersion: kubelet.config.k8s.io/v1beta1
          staticPodPath: "/etc/kubernetes/manifests"
          clusterDNS: ["10.3.0.10"]
          clusterDomain: "cluster.local"
          # Restore default authentication and authorization modes from K8s < 1.9
          authentication:
            anonymous:
              enabled: true # Defaults to false as of 1.10
            webhook:
              enabled: false # Defaults to true as of 1.10
          authorization:
            mode: AlwaysAllow # Defaults to webhook as of 1.10
          readOnlyPort: 10255 # Used by heapster. Defaults to 0 (disabled) as of 1.10. Needed for metrics.
          failSwapOn: false

networkd:
  units:
    - name: static.network
      contents: |
        [Match]
        Name=eth0
        [Network]
        Address=${MASTER_IP}/23
        Gateway=${GATEWAY}.1
        DNS=${DNS1}
        DNS=${DNS2}

systemd:
  units:
    - name: iptables-restore.service
      enabled: true
      contents: |
        [Unit]
        Description=Restore iptables firewall rules
        # if both are queued for some reason, don't store before restoring :)
        Before=iptables-store.service
        # sounds reasonable to have firewall up before any of the services go up
        Before=network.target
        Conflicts=shutdown.target
        [Service]
        Type=oneshot
        ExecStart=/sbin/iptables-restore /var/lib/iptables/rules-save
        [Install]
        WantedBy=basic.target
    - name: settimezone.service
      enabled: true
      contents: |
        [Unit]
        Description=Set the time zone
        [Service]
        ExecStart=/usr/bin/timedatectl set-timezone Asia/Tokyo
        RemainAfterExit=yes
        Type=oneshot
        [Install]
        WantedBy=basic.target
    - name: zrm.service
      enabled: true
      contents: |
        [Unit]
        Description=Manage swap spaces on zram, files and partitions.
        After=local-fs.target
        [Service]
        RemainAfterExit=yes
        ExecStart=/etc/zrm/zrm.sh start
        ExecStop=/etc/zrm/zrm.sh stop
        [Install]
        WantedBy=local-fs.target
    - name: swap.service
      enabled: true
      contents: |
        [Unit]
        Description=Turn on swap
        Before=docker.service
        [Service]
        Type=oneshot
        Environment="SWAPFILE=/swapfile"
        Environment="SWAPSIZE=1GiB"
        RemainAfterExit=true
        ExecStartPre=/usr/bin/sh -c '/usr/bin/fallocate -l 1GiB /swapfile && chmod 0600 /swapfile && /usr/sbin/mkswap /swapfile'
        ExecStartPre=/usr/sbin/losetup -f /swapfile
        ExecStart=/usr/bin/sh -c "/sbin/swapon /dev/loop0"
        ExecStop=/usr/bin/sh -c "/sbin/swapoff /dev/loop0"
        ExecStopPost=/usr/bin/sh -c "/usr/sbin/losetup -d /dev/loop0"
        [Install]
        WantedBy=multi-user.target

    # https://github.com/kubernetes/kube-deploy/blob/master/docker-multinode/common.sh
    - name: etcd3.service
      enabled: true
      contents: |
        [Unit]
        Description=etcd3
        Before=kubelet.service
        Requires=docker.service
        After=docker.service
        [Service]
        ExecStartPre=-/usr/bin/docker stop etcd3
        ExecStartPre=-/usr/bin/docker rm etcd3
        ExecStart=/usr/bin/docker run -p 2379:2379 -p 2380:2380 -v /var/lib/etcd:/var/lib/etcd -v /etc/kubernetes/ssl:/etc/kubernetes/ssl --name=etcd3 gcr.io/google_containers/etcd:3.2.18 \
          /usr/local/bin/etcd \
          -data-dir /var/lib/etcd \
          -name infra0 \
          --client-cert-auth \
          --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
          --cert-file=/etc/kubernetes/ssl/apiserver.pem \
          --key-file=/etc/kubernetes/ssl/apiserver-key.pem \
          --peer-client-cert-auth \
          --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
          --peer-cert-file=/etc/kubernetes/ssl/apiserver.pem \
          --peer-key-file=/etc/kubernetes/ssl/apiserver-key.pem \
          -advertise-client-urls https://${MASTER_IP}:2379 \
          -listen-client-urls https://0.0.0.0:2379 \
          -initial-advertise-peer-urls https://${MASTER_IP}:2380 \
          -listen-peer-urls https://0.0.0.0:2380 \
          -initial-cluster-token etcd-cluster-1 \
          -initial-cluster infra0=https://${MASTER_IP}:2380 \
          -initial-cluster-state new
        ExecStop=/usr/bin/docker stop etcd3
        Restart=on-failure
        RestartSec=10
        [Install]
        WantedBy=multi-user.target

    - name: kubelet.service
      enabled: true
      contents: |
        [Unit]
        Description=kubelet
        Requires=etcd3.service
        After=etcd3.service
        [Service]
        ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/manifests
        ExecStartPre=/bin/mkdir -p /var/lib/kubelet/volumeplugins
        ExecStartPre=/bin/mkdir -p /var/lib/rook
        ExecStart=/usr/bin/docker run \
          --net=host \
          --pid=host \
          --privileged \
          -v /:/rootfs:ro \
          -v /sys:/sys:ro \
          -v /dev:/dev \
          -v /var/run:/var/run:rw \
          -v /run:/run:rw \
          -v /var/lib/docker:/var/lib/docker:rw \
          -v /var/lib/kubelet:/var/lib/kubelet:shared \
          -v /var/log/containers:/var/log/containers:rw \
          -v /etc/kubernetes:/etc/kubernetes:rw \
          -v /etc/cni/net.d:/etc/cni/net.d:rw \
          -v /opt/cni/bin:/opt/cni/bin:rw \
          gcr.io/google_containers/hyperkube-amd64:v1.13.1 \
          /hyperkube kubelet \
          --config=/etc/kubernetes/kubelet-conf.yaml \
          --network-plugin=cni \
          --cni-conf-dir=/etc/cni/net.d \
          --cni-bin-dir=/opt/cni/bin \
          --container-runtime=docker \
          --register-node=true \
          --allow-privileged=true \
          --hostname-override=${MASTER_IP} \
          --kubeconfig=/etc/kubernetes/worker-kubeconfig.yaml \
          --containerized \
          --v=4 \
          --volume-plugin-dir=/var/lib/kubelet/volumeplugins
        Restart=always
        RestartSec=10
        [Install]
        WantedBy=multi-user.target
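
To get a feel for how much zram swap the zrm.sh script in the config above ends up creating, its sizing arithmetic can be reproduced as a small pure function. This is only a sketch: `zram_bytes_per_device` is a hypothetical name, and 1048576 kB is a nominal figure for the 1 GB plan, since the MemTotal actually reported by the kernel will be somewhat lower.

```shell
# Sketch: the same sizing arithmetic as start() in zrm.sh, as a pure function.
# zram_bytes_per_device is a hypothetical name used only for this illustration.
zram_bytes_per_device() {
  mem_total_kb=$1
  num_cpus=$2
  zram_kb=$((mem_total_kb * 2 / 5))    # two fifths (40%) of RAM, integer division
  echo $((zram_kb * 1024 / num_cpus))  # bytes written to each zramN/disksize
}

# Nominal 1 GiB of RAM (1048576 kB); the real MemTotal will be somewhat lower.
zram_bytes_per_device 1048576 1   # prints 429496320
zram_bytes_per_device 1048576 2   # prints 214748160
```

With one CPU this comes to roughly 410 MiB of zram, in addition to the 1 GiB swap file set up by swap.service.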

Convert the Container Linux Config to Ignition with the Container Linux Config Transpiler (the example below uses the Windows command prompt).

Container Linux Config Transpiler: https://github.com/coreos/container-linux-config-transpiler/releases

C:\>ct-v0.9.0-x86_64-pc-windows-gnu.exe --in-file C:\temp\container-linux-config.yaml > C:\temp\ignition.json

Upload the Ignition file to the VPS and run coreos-install.

$ scp -r ignition.json core@${MASTER_IP}:/home/core/

$ ssh ${MASTER_IP} -l core

$ curl https://raw.githubusercontent.com/coreos/init/master/bin/coreos-install > coreos-install-current

$ chmod +x coreos-install-current

$ sudo ./coreos-install-current -d /dev/vda -C stable -V 1911.5.0 -i ignition.json

$ sudo systemctl poweroff

Next, start the VPS from the Sakura VPS console.

Prepare the TLS assets for Kubernetes

Follow the procedure below to generate TLS assets that work with RBAC.

(

https://coreos.com/kubernetes/docs/1.0.6/openssl.html

or

https://github.com/coreos/coreos-kubernetes/blob/master/Documentation/openssl.md

)

$ openssl genrsa -out ca-key.pem 2048

$ openssl req -x509 -new -nodes -key ca-key.pem -days 10000 -out ca.pem -subj "/CN=kube-ca"

Create openssl.cnf with the following contents, replacing ${MASTER_IP}.

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

IP.1 = 10.3.0.1

IP.2 = ${MASTER_IP}

$ openssl genrsa -out apiserver-key.pem 2048

$ openssl req -new -key apiserver-key.pem -out apiserver.csr -subj "/CN=kube-apiserver" -config openssl.cnf

$ openssl x509 -req -in apiserver.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out apiserver.pem -days 365 -extensions v3_req -extfile openssl.cnf

Create worker-openssl.cnf with the following contents.

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

IP.1 = $ENV::WORKER_IP

$ openssl genrsa -out kube-worker-0-worker-key.pem 2048

$ WORKER_IP=${MASTER_IP} openssl req -new -key kube-worker-0-worker-key.pem -out kube-worker-0-worker.csr -subj "/CN=kube-worker-0/O=system:masters" -config worker-openssl.cnf

$ WORKER_IP=${MASTER_IP} openssl x509 -req -in kube-worker-0-worker.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out kube-worker-0-worker.pem -days 365 -extensions v3_req -extfile worker-openssl.cnf

$ openssl genrsa -out admin-key.pem 2048

$ openssl req -new -key admin-key.pem -out admin.csr -subj "/CN=kube-admin/O=system:masters"

$ openssl x509 -req -in admin.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out admin.pem -days 365
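
Before copying the files to the VPS, it may be worth confirming that each certificate really chains back to ca.pem. The following is a minimal sketch, not part of the referenced CoreOS procedure: `verify_tls_assets` is a hypothetical helper, and it assumes all the .pem files sit in one directory (such as the `tls-assets` directory used in the next step).

```shell
# Sketch: check that each leaf certificate chains to ca.pem.
# verify_tls_assets is a hypothetical helper; the directory layout is assumed.
verify_tls_assets() {
  dir=$1
  status=0
  for cert in apiserver.pem admin.pem kube-worker-0-worker.pem; do
    if openssl verify -CAfile "$dir/ca.pem" "$dir/$cert" >/dev/null 2>&1; then
      echo "ok: $cert"
    else
      echo "FAILED: $cert"
      status=1
    fi
  done
  return $status
}

# Example call, assuming the files were generated into ./tls-assets:
#   verify_tls_assets tls-assets
```

A failure here usually means a certificate was signed with a different CA key or a file is missing, which is easier to fix now than after the API server refuses connections.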

The following steps assume that these eight key and certificate files have been generated.

- ca.pem

- ca-key.pem

- apiserver.pem

- apiserver-key.pem

- admin.pem

- admin-key.pem

- kube-worker-0-worker.pem

- kube-worker-0-worker-key.pem

Create a directory on the VPS and place the generated keys in it.

$ scp -r tls-assets core@${MASTER_IP}:/home/core/

$ ssh-keygen -R ${MASTER_IP}

$ ssh ${MASTER_IP} -l core

$ sudo mkdir -p /etc/kubernetes/ssl

$ sudo cp tls-assets/*.pem /etc/kubernetes/ssl

- File: /etc/kubernetes/ssl/ca.pem

- File: /etc/kubernetes/ssl/ca-key.pem

- File: /etc/kubernetes/ssl/apiserver.pem

- File: /etc/kubernetes/ssl/apiserver-key.pem

- File: /etc/kubernetes/ssl/kube-worker-0-worker.pem

- File: /etc/kubernetes/ssl/kube-worker-0-worker-key.pem

Set appropriate permissions on the keys

$ sudo chmod 600 /etc/kubernetes/ssl/*-key.pem

$ sudo chown root:root /etc/kubernetes/ssl/*-key.pem

Create the symbolic links

$ cd /etc/kubernetes/ssl/

$ sudo ln -s kube-worker-0-worker.pem worker.pem

$ sudo ln -s kube-worker-0-worker-key.pem worker-key.pem

Reboot the VPS

$ sudo reboot

$ ssh ${MASTER_IP} -l core

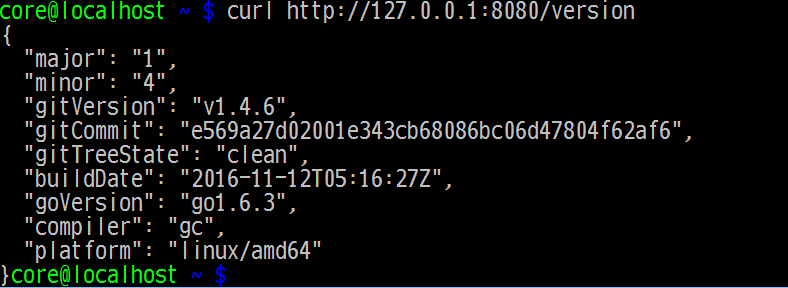

Confirm that etcd3 is running and that the API server responds

$ systemctl status etcd3

$ curl http://127.0.0.1:8080/version
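
Right after a reboot the containers can take a while to come up, so the check above may fail on the first try. A retry loop along the following lines is one way to wait for it; this is a sketch, `wait_for` is a hypothetical helper, and the attempt count and delay in the usage comment are arbitrary example values.

```shell
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_for is a hypothetical helper; attempts and delay are example values.
wait_for() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      echo "succeeded on attempt $n"
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  echo "gave up after $attempts attempts"
  return 1
}

# On the VPS this might be used as:
#   wait_for 30 10 curl -fsS http://127.0.0.1:8080/version
```

If the loop gives up, `systemctl status etcd3` and `journalctl -u kubelet` are reasonable places to look next.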

Log out of the VPS.

$ exit

Set up kubectl

Download kubectl

On Linux, the command looks like this.

$ curl -O https://storage.googleapis.com/kubernetes-release/release/v1.13.1/bin/linux/amd64/kubectl

After downloading, make it executable and move it to a suitable location on your PATH.

$ chmod +x kubectl

$ sudo mv kubectl /usr/local/bin/kubectl

Configure kubectl.

- Replace ${MASTER_HOST} with the master node address or name used in previous steps

- Replace ${CA_CERT} with the absolute path to the ca.pem created in previous steps

- Replace ${ADMIN_KEY} with the absolute path to the admin-key.pem created in previous steps

- Replace ${ADMIN_CERT} with the absolute path to the admin.pem created in previous steps

$ kubectl config set-cluster default-cluster --server=https://${MASTER_HOST}:6443 --certificate-authority=${CA_CERT}

$ kubectl config set-credentials default-admin --certificate-authority=${CA_CERT} --client-key=${ADMIN_KEY} --client-certificate=${ADMIN_CERT}

$ kubectl config set-context default-system --cluster=default-cluster --user=default-admin

$ kubectl config use-context default-system

Verify the kubectl configuration and the connection.

$ kubectl get nodes

NAME STATUS AGE

X.X.X.X Ready 1d
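
When this check is scripted, the STATUS column can be tested instead of read by eye. The following is a minimal sketch that assumes STATUS is the second whitespace-separated column, as in the output above; `all_nodes_ready` is a hypothetical helper, not a kubectl feature.

```shell
# Sketch: succeed only if every node line read from stdin has STATUS "Ready".
# all_nodes_ready is a hypothetical helper, not a kubectl feature.
all_nodes_ready() {
  awk 'NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 }
       END { exit (seen && !bad) ? 0 : 1 }'
}

# Sample text in the same shape as the output above.
printf 'NAME STATUS AGE\nX.X.X.X Ready 1d\n' | all_nodes_ready && echo "all nodes Ready"
```

Against a live cluster this would be `kubectl get nodes | all_nodes_ready`. Note that the comparison is exact, so a status such as `Ready,SchedulingDisabled` counts as not ready.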

Deploy Canal

When reinstalling, first delete the Calico-related directories on each server.

$ sudo rm -r /etc/cni/net.d /opt/cni/bin /var/lib/calico

Deploy Canal (Calico for policy and flannel for networking) by following the procedure described at

https://docs.projectcalico.org/getting-started/kubernetes/flannel/flannel

$ curl https://docs.projectcalico.org/manifests/canal.yaml -O

$ sed -i -e "s?/usr/libexec/kubernetes/kubelet-plugins?/var/lib/kubernetes/kubelet-plugins?g" canal.yaml

$ kubectl apply -f canal.yaml

Deploy CoreDNS

Deploy CoreDNS by following the procedure described at

https://github.com/coredns/deployment/tree/master/kubernetes

$ sudo apt -y install jq

$ curl -O https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

$ curl -O https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh

$ chmod +x deploy.sh

$ ./deploy.sh -i 10.3.0.10 | kubectl apply -f -
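
The address passed with `-i` has to match `clusterDNS` in kubelet-conf.yaml and fall inside the `--service-cluster-ip-range` given to the API server (10.3.0.0/24 in this setup). If either value is changed, a quick containment check such as the following sketch can catch a mismatch; `ip_to_int` and `in_cidr` are hypothetical helpers written for this illustration and handle IPv4 only.

```shell
# Sketch: check that an IPv4 address falls inside a CIDR block.
# ip_to_int and in_cidr are hypothetical helpers written for this illustration.
ip_to_int() {
  # Split a dotted quad into four octets and combine them into one integer.
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")   # network address before the slash
  bits=${2#*/}                 # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

in_cidr 10.3.0.10 10.3.0.0/24 && echo "10.3.0.10 is inside 10.3.0.0/24"
in_cidr 10.3.0.1 10.3.0.0/24 && echo "10.3.0.1 is inside 10.3.0.0/24"
```

The second call checks 10.3.0.1, the address listed as IP.1 in openssl.cnf for the API server certificate.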

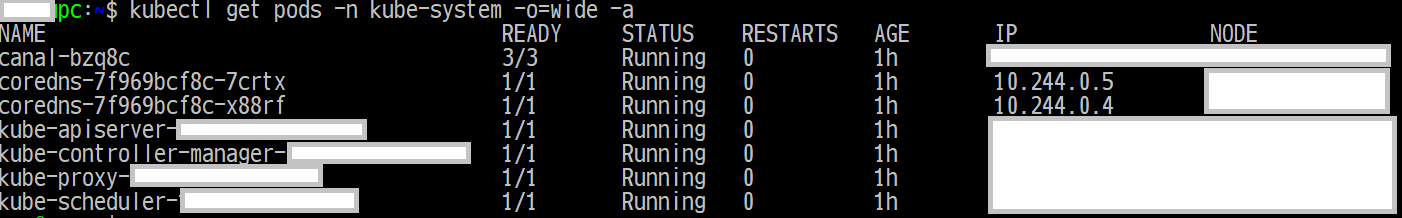

Running the following command should produce output like the screenshot below.

$ kubectl get pods -n kube-system -o=wide -a

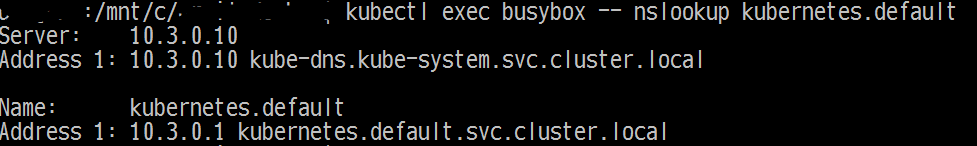

Confirm that a Pod can be deployed

Save the following manifest as busybox.yaml.

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always

$ kubectl create -f busybox.yaml

$ kubectl get pods busybox

$ kubectl exec busybox -- nslookup kubernetes.default