
1. Configuration

- CentOS 5.11 x86_64
- IP
  - drbd1.local: 192.168.100.199
  - drbd2.local: 192.168.100.200
  - Virtual IP: 192.168.100.198
- DRBD
  - /dev/vdb1
- Resources
  - mysqldata : /var/lib/mysql/

2. Adding repositories

epel-release

yum install -y epel-release

clusterlabs.repo

cd /etc/yum.repos.d/

wget http://clusterlabs.org/rpm/epel-5/clusterlabs.repo

Installing packages

yum install {corosync,heartbeat,pacemaker}.x86_64

- x86_64 is specified explicitly so that i386 packages are not pulled in.
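A stray i386 package is easy to miss, so the installed architectures can be checked afterwards. A minimal sketch, assuming rpm's query format '%{NAME}.%{ARCH}\n'; the function name find_non_x86_64 is hypothetical:

```shell
# Hypothetical helper: reads "name.arch" lines on stdin and prints every
# line whose package name is one of the arguments but whose arch is
# neither x86_64 nor noarch.
find_non_x86_64() {
  awk -v names="$*" '
    BEGIN {
      n = split(names, list, " ")
      for (i = 1; i <= n; i++) want[list[i]] = 1
    }
    {
      arch = $0
      sub(/.*\./, "", arch)                              # text after the last dot
      name = substr($0, 1, length($0) - length(arch) - 1)
      if ((name in want) && arch != "x86_64" && arch != "noarch") print
    }'
}
```

Usage: rpm -qa --qf '%{NAME}.%{ARCH}\n' | find_non_x86_64 corosync heartbeat pacemaker. No output means only x86_64 (or noarch) builds of those packages are installed.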

3. corosync configuration

/etc/corosync/corosync.conf

aisexec {
    user: root
    group: root
}

service {
    name: pacemaker
    ver: 0
    use_mgmtd: yes
}

totem {
    version: 2
    secauth: off
    threads: 0
    rrp_mode: active
    clear_node_high_bit: yes
    token: 4000
    consensus: 10000
    rrp_problem_count_timeout: 3000
    interface {
        ringnumber: 0
        bindnetaddr: 192.168.100.0
        mcastaddr: 226.94.1.1
        mcastport: 5405
    }
}

logging {
    fileline: on
    to_syslog: yes
    syslog_facility: local1
    syslog_priority: info
    debug: off
    timestamp: on
}
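bindnetaddr is the network address of the subnet corosync binds to (192.168.100.0 for the /24 used here), not a host address, which is why the same corosync.conf can be copied unchanged to both nodes. A sketch that derives it from a host IP and prefix length; network_address is a hypothetical helper and assumes a shell with 64-bit arithmetic such as bash:

```shell
# Hypothetical helper: print the IPv4 network address for ADDRESS/PREFIX,
# e.g. 192.168.100.199 and 24 -> 192.168.100.0 (usable as bindnetaddr).
network_address() {
  local ip=$1 prefix=$2
  local IFS=.
  set -- $ip                                   # split the dotted quad into $1..$4
  local addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( addr & mask ))
  echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))"
}
```

Usage: network_address 192.168.100.199 24 prints 192.168.100.0, matching the bindnetaddr above.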

4. syslog

/etc/syslog.conf

- *.info;mail.none;authpriv.none;cron.none /var/log/messages

+ *.info;mail.none;authpriv.none;cron.none;local1.none /var/log/messages

+ local1.* /var/log/pacemaker.log

- Cluster logs are then written to /var/log/pacemaker.log.
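The two changes above can be applied idempotently with sed, which helps when repeating the setup on the second node. A sketch assuming GNU sed and the stock CentOS 5 messages rule shown above; patch_syslog_conf is a hypothetical name. syslog must be restarted afterwards (service syslog restart) for the new rules to take effect.

```shell
# Hypothetical helper: add local1.none to the /var/log/messages selector and
# send local1.* to /var/log/pacemaker.log. Safe to run more than once.
patch_syslog_conf() {
  local conf=${1:-/etc/syslog.conf}
  cp -p "$conf" "$conf.bak"                    # keep a backup of the original
  # Only matches while cron.none is still followed by whitespace, so a
  # second run does not append local1.none again.
  sed -i 's/^\(\*\.info;mail\.none;authpriv\.none;cron\.none\)\([[:space:]]\)/\1;local1.none\2/' "$conf"
  # Add the dedicated local1 rule only once.
  grep -q '^local1\.\*' "$conf" ||
    printf 'local1.*\t\t\t\t\t\t/var/log/pacemaker.log\n' >> "$conf"
}
```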

5. corosync

5.1. Starting corosync

Run on both nodes.

/sbin/service corosync start

5.2. Disabling STONITH

Add /usr/sbin and /sbin to PATH

export PATH=$PATH:/usr/sbin:/sbin

Run the following on one node only.

Check

# /usr/sbin/crm_verify -L

crm_verify[2097]: 2015/03/16_16:42:10 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[2097]: 2015/03/16_16:42:10 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[2097]: 2015/03/16_16:42:10 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

Disable STONITH

# crm configure property stonith-enabled=false

INFO: building help index

Check again; crm_verify prints nothing when the configuration is valid.

# crm_verify -L

5.3. Configuring the virtual IP

Run this on one node only.

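# crm configure primitive ClusterIP ocf:heartbeat:IPaddr2 \
    params ip=192.168.100.198 cidr_netmask=24 nic=eth0 iflabel=0 \
    op monitor interval=30s

- nic and iflabel are parameters of the IPaddr2 agent and belong under params, not under op monitor; with iflabel=0 the address is labelled eth0:0.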

crm_verify now warns that the cluster has no quorum:

crm_verify[2171]: 2015/03/16_16:46:44 WARN: cluster_status: We do not have quorum - fencing and resource management disabled
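Resource management stays disabled while the cluster has no quorum (the warning above), so 192.168.100.198 may not be up yet at this point. Once it is, the node holding it can be found from the one-line output of ip. A minimal sketch; vip_line is a hypothetical helper:

```shell
# Hypothetical helper: reads "ip -o -4 addr show" output on stdin, prints
# the line carrying the address given as $1 and succeeds only if one exists.
# The fixed string "inet ADDR/" cannot match a longer address such as .1980.
vip_line() {
  grep -F "inet $1/"
}
```

Usage: ip -o -4 addr show dev eth0 | vip_line 192.168.100.198 || echo "VIP is not on this node"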

5.4. Ignoring loss of quorum

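Run this on one node only. In a two-node cluster the surviving node loses quorum as soon as its peer goes down, so without this setting it would stop its resources instead of taking them over.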

# crm configure property no-quorum-policy=ignore

Stop resources from moving back to their original node automatically when that node rejoins the cluster

# crm configure rsc_defaults resource-stickiness=100
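The three settings from 5.2 to 5.4 can be confirmed from the crm configure show output. A sketch of a hypothetical parser, crm_attr_value; it strips optional double quotes because value formatting is assumed to differ between crm shell versions:

```shell
# Hypothetical helper: read "crm configure show" output on stdin and print
# the value of the attribute named $1 (first occurrence), without quotes.
crm_attr_value() {
  awk -v name="$1" '
    {
      for (i = 1; i <= NF; i++)
        if (index($i, name "=") == 1) {
          value = substr($i, length(name) + 2)
          gsub(/"/, "", value)
          print value
          exit
        }
    }'
}
```

Usage: crm configure show | crm_attr_value stonith-enabled should print false; the same call with no-quorum-policy and resource-stickiness should print ignore and 100.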

6. DRBD

6.1. Installation

yum install drbd83 drbd83-kmod

chkconfig drbd off

- The drbd init script is disabled because Pacemaker will manage DRBD.

6.2. Configuration

/etc/drbd.conf (identical on both nodes)

global {
    usage-count no;
}

common {
    protocol C;
}

resource mysqldata {
    meta-disk internal;
    device /dev/drbd0;
    syncer {
        verify-alg sha1;
        rate 80M;
    }
    on drbd1.local {
        disk /dev/vdb1;
        address 192.168.100.199:7789;
    }
    on drbd2.local {
        disk /dev/vdb1;
        address 192.168.100.200:7789;
    }
}
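DRBD picks the on section whose name matches the node's hostname (uname -n), so drbd1.local and drbd2.local must be the exact hostnames of the two machines. A sketch that checks this before running drbdadm; drbd_conf_has_host is hypothetical and only handles the simple layout used here. drbdadm dump mysqldata additionally lets drbdadm itself parse the file.

```shell
# Hypothetical helper: succeed if drbd.conf has an "on HOST" section for
# the host given as $1 (default: this machine's uname -n).
drbd_conf_has_host() {
  local host=${1:-$(uname -n)} conf=${2:-/etc/drbd.conf}
  awk -v h="$host" '
    $1 == "on" && ($2 == h || $2 == h "{") { found = 1 }
    END { exit !found }' "$conf"
}
```

Usage: drbd_conf_has_host || echo "uname -n does not match any on section"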

6.3. Name resolution via /etc/hosts

/etc/hosts

+ 192.168.100.199 drbd1 drbd1.local

+ 192.168.100.200 drbd2 drbd2.local
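These entries are normally added on both nodes. A sketch of an idempotent way to add them; add_host_entry and the HOSTS_FILE override are hypothetical, and the function deliberately leaves an existing line for the same IP untouched rather than editing it:

```shell
# Hypothetical helper: append "IP NAME..." to the hosts file unless a line
# for that IP already exists. Set HOSTS_FILE to work on a copy instead.
add_host_entry() {
  local ip=$1
  shift
  local file=${HOSTS_FILE:-/etc/hosts}
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    return 0                                   # already present: leave it alone
  fi
  printf '%s %s\n' "$ip" "$*" >> "$file"
}
```

Usage: add_host_entry 192.168.100.199 drbd1 drbd1.local, then the same for 192.168.100.200 drbd2 drbd2.local.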

6.4. create-md

Run the following on both nodes.

# drbdadm create-md mysqldata

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

modprobe drbd

drbdadm up mysqldata

6.5. Checking the DRBD device status

/proc/drbd

# cat /proc/drbd

version: 8.3.15 (api:88/proto:86-97)

GIT-hash: 0ce4d235fc02b5c53c1c52c53433d11a694eab8c build by mockbuild@builder10.centos.org, 2013-03-27 16:01:26

1: cs:WFConnection ro:Secondary/Unknown ds:Inconsistent/DUnknown C r----s

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:4194092
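The fields of interest are cs (connection state), ro (roles, local/peer) and ds (disk states, local/peer); the output above shows a node waiting for its peer (WFConnection) with Inconsistent data. A sketch of a hypothetical parser, drbd_field, that extracts one of these fields for a given device minor number:

```shell
# Hypothetical helper: read /proc/drbd on stdin and print the value of
# FIELD (cs, ro, ds, ...) for device minor MINOR.
drbd_field() {
  awk -v m="$1:" -v k="$2:" '
    $1 == m {
      for (i = 2; i <= NF; i++)
        if (index($i, k) == 1) { print substr($i, length(k) + 1); exit }
    }'
}
```

Usage: drbd_field 1 cs < /proc/drbd prints WFConnection for the output above.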

6.6. Initializing the DRBD device

Initialize (on one node only; that node becomes Primary and its data overwrites the peer's)

drbdadm -- --overwrite-data-of-peer primary mysqldata

mkfs.ext3 /dev/drbd0

Wait for the initial synchronization to finish.
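Waiting can be scripted by polling /proc/drbd until the ds field reads UpToDate/UpToDate. A sketch; wait_for_drbd_sync and the DRBD_PROC override (for testing against a saved copy) are hypothetical, and MINOR must be the number shown at the start of the device's line in /proc/drbd.

```shell
# Hypothetical helper: poll /proc/drbd until device MINOR reports
# ds:UpToDate/UpToDate. Gives up (returns 1) after TRIES checks.
wait_for_drbd_sync() {
  local minor=${1:-0} interval=${2:-10} tries=${3:-360}
  local proc=${DRBD_PROC:-/proc/drbd} ds
  while [ "$tries" -gt 0 ]; do
    ds=$(awk -v m="$minor:" '
      $1 == m {
        for (i = 2; i <= NF; i++)
          if ($i ~ /^ds:/) { print substr($i, 4); exit }
      }' "$proc")
    if [ "$ds" = "UpToDate/UpToDate" ]; then
      return 0
    fi
    echo "minor $minor: ds=${ds:-unknown}, waiting ${interval}s" >&2
    tries=$((tries - 1))
    if [ "$tries" -gt 0 ]; then
      sleep "$interval"
    fi
  done
  return 1
}
```

With the defaults (10 s interval, 360 checks) it waits up to about an hour before giving up.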

mkdir /var/lib/mysql/

mount /dev/drbd0 /var/lib/mysql/

Check

# df -h /var/lib/mysql/

Filesystem Size Used Avail Use% Mounted on

/dev/drbd0 4.0G 73M 3.7G 2% /var/lib/mysql

7. crm (pacemaker)

Prerequisites:

- corosync is running

- the second node is up as well

7.1. Registering DRBD

# crm

crm(live)# cib new drbd

INFO: building help index

INFO: drbd shadow CIB created

crm(drbd)# configure primitive MySQLData ocf:heartbeat:drbd params drbd_resource=mysqldata op monitor interval=60s

WARNING: MySQLData: default timeout 20s for start is smaller than the advised 240

WARNING: MySQLData: default timeout 20s for stop is smaller than the advised 100

WARNING: MySQLData: action monitor not advertised in meta-data, it may not be supported by the RA

WARNING: MySQLData: default timeout 20s for start is smaller than the advised 240

WARNING: MySQLData: default timeout 20s for stop is smaller than the advised 100

WARNING: MySQLData: action monitor not advertised in meta-data, it may not be supported by the RA

crm(drbd)# configure ms MySQLDataClone MySQLData meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

crm(drbd)# cib commit drbd

INFO: commited 'drbd' shadow CIB to the cluster

crm(drbd)# quit
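The warnings above say the default 20s start/stop timeouts are shorter than advised and that ocf:heartbeat:drbd does not advertise a monitor action. A sketch of an alternative definition, assuming the drbd83 package ships the LINBIT agent ocf:linbit:drbd (the agent DRBD's own documentation uses with Pacemaker) and that the crm shell in use supports configure load update. write_drbd_crm is hypothetical; the two monitor operations need different intervals so Pacemaker can tell them apart.

```shell
# Hypothetical helper: write a crm shell fragment for the DRBD master/slave
# resource with the timeouts the warnings advise (start 240s, stop 100s).
write_drbd_crm() {
  local out=${1:-/tmp/drbd.crm}
  cat > "$out" <<'EOF'
primitive MySQLData ocf:linbit:drbd \
    params drbd_resource=mysqldata \
    op start timeout=240s \
    op stop timeout=100s \
    op monitor interval=59s role=Master \
    op monitor interval=60s role=Slave
ms MySQLDataClone MySQLData \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
EOF
}
```

Usage, in place of the commands in 7.1 on a cluster where MySQLData is not yet defined: write_drbd_crm /tmp/drbd.crm && crm configure load update /tmp/drbd.crm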

7.2. Registering /var/lib/mysql/

# crm

crm(live)# cib new fs

INFO: fs shadow CIB created

crm(fs)# configure primitive MySQLFS ocf:heartbeat:Filesystem params device="/dev/drbd0" directory="/var/lib/mysql" fstype="ext3"

WARNING: MySQLFS: default timeout 20s for start is smaller than the advised 60

WARNING: MySQLFS: default timeout 20s for stop is smaller than the advised 60

WARNING: MySQLFS: default timeout 20s for start is smaller than the advised 60

WARNING: MySQLFS: default timeout 20s for stop is smaller than the advised 60

Run on the same node as the DRBD master (colocation)

crm(fs)# configure colocation fs_on_drbd inf: MySQLFS MySQLDataClone:Master

Mount only after DRBD has been promoted (order)

crm(fs)# configure order MySQLFS-after-MySQLData inf: MySQLDataClone:promote MySQLFS:start

crm(fs)# cib commit fs

crm(fs)# quit

7.3. MySQL

yum install mysql-server
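The start/stop below creates MySQL's initial data files in /var/lib/mysql, so it should only be run on the node where /dev/drbd0 is currently mounted there; otherwise the files land on the local disk underneath the mount point. A sketch of a check based on /proc/mounts; is_mounted_on and the PROC_MOUNTS override are hypothetical:

```shell
# Hypothetical helper: succeed if DEVICE is mounted on DIRECTORY according
# to /proc/mounts (or the file named by PROC_MOUNTS).
is_mounted_on() {
  local dev=$1 dir=${2%/}                      # drop a trailing slash
  awk -v d="$dev" -v m="$dir" '$1 == d && $2 == m { found = 1 } END { exit !found }' \
    "${PROC_MOUNTS:-/proc/mounts}"
}
```

Usage: is_mounted_on /dev/drbd0 /var/lib/mysql/ || echo "DRBD volume not mounted here; do not start mysqld on this node"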

Start and stop mysqld once to create the initial data files

service mysqld start

service mysqld stop

# ls /var/lib/mysql

ib_logfile0 ib_logfile1 ibdata1 lost+found mysql test

umount /var/lib/mysql

7.4. Registering MySQL with Pacemaker

# crm

crm(live)# configure

crm(live)configure# primitive MySQL ocf:heartbeat:mysql params binary=/usr/bin/mysqld_safe pid=/var/run/mysqld/mysqld.pid op monitor interval=60s

WARNING: MySQL: default timeout 20s for start is smaller than the advised 120

WARNING: MySQL: default timeout 20s for stop is smaller than the advised 120

WARNING: MySQL: default timeout 20s for monitor is smaller than the advised 30

Run MySQL on the same node as MySQLFS (colocation)

crm(live)configure# colocation MySQL-with-MySQLFS inf: MySQL MySQLFS

Start MySQL after MySQLFS is mounted (order)

crm(live)configure# order MySQL-after-MySQLFS inf: MySQLFS MySQL

Run MySQL on the same node as ClusterIP (colocation)

crm(live)configure# colocation MySQL-with-ClusterIP inf: MySQL ClusterIP

Start MySQL after ClusterIP (order)

crm(live)configure# order MySQL-after-ClusterIP inf: ClusterIP MySQL

crm(live)configure# commit

WARNING: MySQL: default timeout 20s for start is smaller than the advised 120

WARNING: MySQL: default timeout 20s for stop is smaller than the advised 120

WARNING: MySQL: default timeout 20s for monitor is smaller than the advised 30
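As with DRBD, the warnings indicate that the default 20s timeouts are shorter than the agent advises (start 120, stop 120, monitor 30). A sketch that writes the same MySQL primitive with those timeouts set explicitly, assuming the crm shell in use supports configure load update; write_mysql_crm is hypothetical and the values are simply the ones the warnings print:

```shell
# Hypothetical helper: write the MySQL primitive from 7.4 with explicit
# start/stop/monitor timeouts taken from the crm warnings.
write_mysql_crm() {
  local out=${1:-/tmp/mysql.crm}
  cat > "$out" <<'EOF'
primitive MySQL ocf:heartbeat:mysql \
    params binary=/usr/bin/mysqld_safe pid=/var/run/mysqld/mysqld.pid \
    op start timeout=120s \
    op stop timeout=120s \
    op monitor interval=60s timeout=30s
EOF
}
```

Usage: write_mysql_crm /tmp/mysql.crm && crm configure load update /tmp/mysql.crm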

7.5. Creating the resource group

# crm configure group MySQLGroup MySQLFS ClusterIP MySQL

INFO: resource references in colocation:fs_on_drbd updated

INFO: resource references in colocation:MySQL-with-MySQLFS updated

INFO: resource references in order:MySQLFS-after-MySQLData updated

INFO: resource references in order:MySQL-after-MySQLFS updated

INFO: resource references in colocation:MySQL-with-ClusterIP updated

INFO: resource references in order:MySQL-after-ClusterIP updated

INFO: resource references in colocation:MySQL-with-MySQLFS updated

INFO: resource references in colocation:MySQL-with-ClusterIP updated

INFO: resource references in order:MySQL-after-MySQLFS updated

INFO: resource references in order:MySQL-after-ClusterIP updated

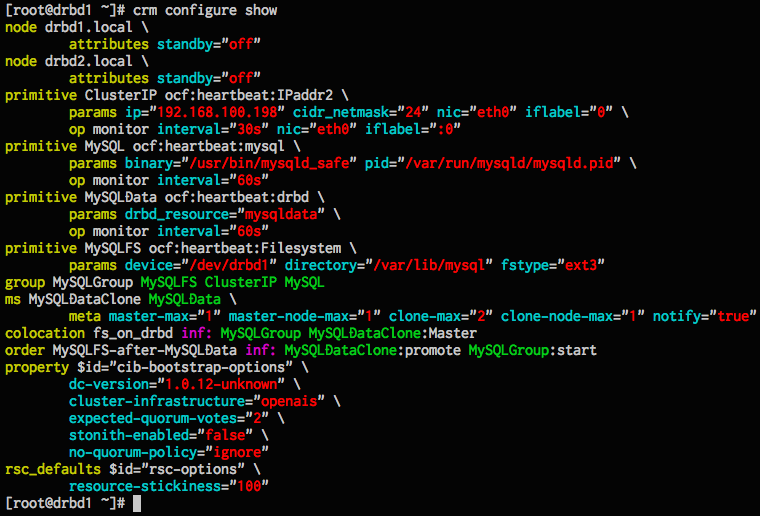

- Output of crm configure show

8. Verification

After the OS boots, run /sbin/service corosync start on both drbd1 and drbd2.

8.1. drbd1(Master), drbd2(Slave)

crm_mon -A

Online: [ drbd1.local drbd2.local ]

 Master/Slave Set: MySQLDataClone
     Masters: [ drbd1.local ]
     Slaves: [ drbd2.local ]
 Resource Group: MySQLGroup
     MySQLFS (ocf::heartbeat:Filesystem): Started drbd1.local
     ClusterIP (ocf::heartbeat:IPaddr2): Started drbd1.local
     MySQL (ocf::heartbeat:mysql): Started drbd1.local

Node Attributes:
* Node drbd1.local:
    + master-MySQLData:0 : 10
* Node drbd2.local:
    + master-MySQLData:1 : 10
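For scripted checks, the same information is available from a one-shot crm_mon -1. A sketch of two hypothetical parsers for the output format shown above: drbd_master prints the DRBD master, resource_node prints the node on which a given resource is started.

```shell
# Hypothetical helper: print the node listed under "Masters: [ ... ]".
drbd_master() {
  awk '$1 == "Masters:" { print $3; exit }'
}

# Hypothetical helper: print the node on which resource $1 is "Started".
resource_node() {
  awk -v r="$1" '$1 == r && $(NF - 1) == "Started" { print $NF; exit }'
}
```

Usage: crm_mon -1 | drbd_master and crm_mon -1 | resource_node MySQL; for the state above both print drbd1.local.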

8.2. Switching to drbd1 (Slave), drbd2 (Master)

Put drbd1 into standby

crm node standby drbd1.local

crm_mon -A

Node drbd1.local: standby
Online: [ drbd2.local ]

 Master/Slave Set: MySQLDataClone
     Masters: [ drbd2.local ]
     Stopped: [ MySQLData:0 ]
 Resource Group: MySQLGroup
     MySQLFS (ocf::heartbeat:Filesystem): Started drbd2.local
     ClusterIP (ocf::heartbeat:IPaddr2): Started drbd2.local
     MySQL (ocf::heartbeat:mysql): Started drbd2.local

Node Attributes:
* Node drbd1.local:
* Node drbd2.local:
    + master-MySQLData:1 : 5

Bring drbd1 back online

crm node online drbd1.local

Online: [ drbd1.local drbd2.local ]

 Master/Slave Set: MySQLDataClone
     Masters: [ drbd2.local ]
     Slaves: [ drbd1.local ]
 Resource Group: MySQLGroup
     MySQLFS (ocf::heartbeat:Filesystem): Started drbd2.local
     ClusterIP (ocf::heartbeat:IPaddr2): Started drbd2.local
     MySQL (ocf::heartbeat:mysql): Started drbd2.local

Node Attributes:
* Node drbd1.local:
    + master-MySQLData:0 : 10
* Node drbd2.local:
    + master-MySQLData:1 : 5

8.3. Switching back to drbd1 (Master), drbd2 (Slave)

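crm node standby drbd2.local

crm node online drbd2.local

- Putting drbd2 (the current Master) into standby moves the resources to drbd1; bringing drbd2 back online makes it the Slave again, and resource-stickiness keeps the resources on drbd1. Repeating standby/online on drbd1 would leave drbd2 as Master.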

9. Failure test

- With drbd2 as Master, reboot drbd2.

- drbd1 takes over as Master.
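The failure test can be watched from the surviving node by polling crm_mon until the DRBD master changes. A sketch to run on drbd1 before rebooting drbd2; wait_for_new_master is hypothetical and parses the Masters: line in the format shown in section 8:

```shell
# Hypothetical helper: poll "crm_mon -1" until the DRBD master is no longer
# OLD_MASTER, then print the new master. Returns 1 after TRIES polls.
wait_for_new_master() {
  local old=$1 interval=${2:-5} tries=${3:-60} master
  while [ "$tries" -gt 0 ]; do
    master=$(crm_mon -1 2>/dev/null | awk '$1 == "Masters:" { print $3; exit }')
    if [ -n "$master" ] && [ "$master" != "$old" ]; then
      echo "$master"
      return 0
    fi
    tries=$((tries - 1))
    if [ "$tries" -gt 0 ]; then
      sleep "$interval"
    fi
  done
  return 1
}
```

Usage: on drbd1 run wait_for_new_master drbd2.local, then reboot drbd2 from another terminal; if the failover works it should print drbd1.local.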