Introduction

This article is the December 14 entry of the OpenStack Advent Calendar 2016.

I'm Suzuki from Canonical. I recently had the chance to build OpenStack Compute nodes on LXD, so here is a brief introduction.

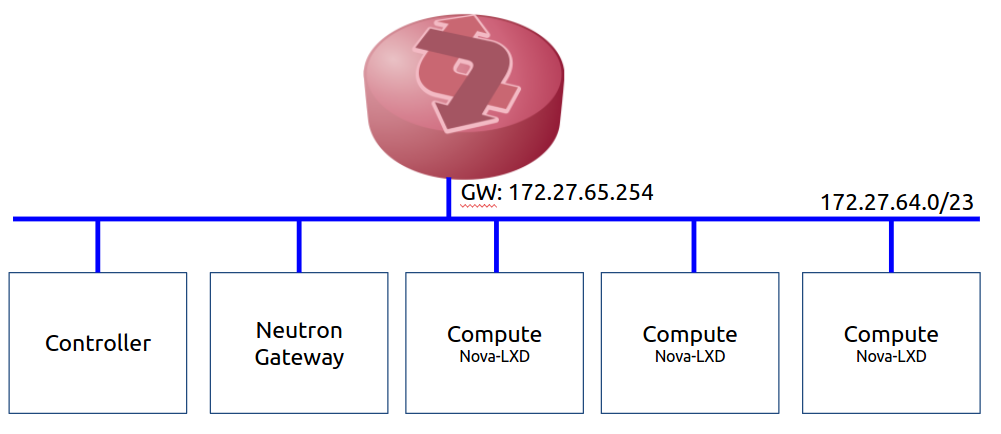

Physical environment

[OpenStack nodes]

Nodes: 1 Controller, 1 Neutron GW, 3 Compute

OS: Ubuntu 16.04 (xenial)

Preparing OpenStack

Deployed with Juju using the OpenStack LXD Bundle Charm.

OpenStack LXD Bundle Charm: https://jujucharms.com/u/openstack-charmers-next/openstack-lxd

ubuntu@OrangeBox64:~$ juju status

Model Controller Cloud/Region Version

default maas maas 2.0.1

App Version Status Scale Charm Store Rev OS Notes

glance 13.0.0 active 1 glance jujucharms 253 ubuntu

keystone 10.0.0 active 1 keystone jujucharms 258 ubuntu

lxd 2.0.5 active 3 lxd jujucharms 4 ubuntu

mysql 5.6.21-25.8 active 1 percona-cluster jujucharms 246 ubuntu

neutron-api 9.0.0 active 1 neutron-api jujucharms 246 ubuntu

neutron-gateway 9.0.0 active 1 neutron-gateway jujucharms 232 ubuntu

neutron-openvswitch 9.0.0 active 3 neutron-openvswitch jujucharms 238 ubuntu

nova-cloud-controller 14.0.1 active 1 nova-cloud-controller jujucharms 292 ubuntu

nova-compute 14.0.1 active 3 nova-compute jujucharms 259 ubuntu

ntp unknown 4 ntp jujucharms 0 ubuntu

openstack-dashboard 10.0.0 active 1 openstack-dashboard jujucharms 243 ubuntu

rabbitmq-server 3.5.7 active 1 rabbitmq-server jujucharms 54 ubuntu

Unit Workload Agent Machine Public address Ports Message

glance/0* active idle 23 172.27.64.5 9292/tcp Unit is ready

keystone/0* active idle 23 172.27.64.5 5000/tcp Unit is ready

mysql/0* active idle 21 172.27.64.3 Unit is ready

neutron-api/0* active idle 23 172.27.64.5 9696/tcp Unit is ready

neutron-gateway/0* active idle 26 172.27.64.7 Unit is ready

ntp/3 unknown idle 172.27.64.7

nova-cloud-controller/0* active idle 23 172.27.64.5 8774/tcp Unit is ready

nova-compute/0 active idle 25 172.27.64.8 Unit is ready

lxd/1 active idle 172.27.64.8 Unit is ready

neutron-openvswitch/1 active idle 172.27.64.8 Unit is ready

ntp/1 unknown idle 172.27.64.8

nova-compute/1* active idle 22 172.27.64.4 Unit is ready

lxd/0* active idle 172.27.64.4 Unit is ready

neutron-openvswitch/0* active idle 172.27.64.4 Unit is ready

ntp/0* unknown idle 172.27.64.4

nova-compute/2 active idle 24 172.27.64.6 Unit is ready

lxd/2 active idle 172.27.64.6 Unit is ready

neutron-openvswitch/2 active idle 172.27.64.6 Unit is ready

ntp/2 unknown idle 172.27.64.6

openstack-dashboard/0* active idle 23 172.27.64.5 80/tcp,443/tcp Unit is ready

rabbitmq-server/0* active idle 23 172.27.64.5 5672/tcp Unit is ready

Machine State DNS Inst id Series AZ

21 started 172.27.64.3 4y3hgt xenial zone0

22 started 172.27.64.4 4y3hfm xenial zone1

22/lxd/0 started 172.27.65.27 juju-79fcc7-22-lxd-0 xenial

22/lxd/1 started 172.27.64.10 juju-79fcc7-22-lxd-1 xenial

22/lxd/2 started 172.27.65.25 juju-79fcc7-22-lxd-2 xenial

23 started 172.27.64.5 4y3hfs xenial zone2

23/lxd/0 started 172.27.64.9 juju-79fcc7-23-lxd-0 xenial

23/lxd/1 started 172.27.65.23 juju-79fcc7-23-lxd-1 xenial

23/lxd/2 started 172.27.65.24 juju-79fcc7-23-lxd-2 xenial

24 started 172.27.64.6 4y3hgr xenial zone0

24/lxd/0 started 172.27.65.22 juju-79fcc7-24-lxd-0 xenial

24/lxd/1 started 172.27.65.28 juju-79fcc7-24-lxd-1 xenial

24/lxd/2 started 172.27.65.29 juju-79fcc7-24-lxd-2 xenial

25 started 172.27.64.8 4y3hft xenial zone2

25/lxd/0 started 172.27.65.21 juju-79fcc7-25-lxd-0 xenial

25/lxd/1 started 172.27.65.26 juju-79fcc7-25-lxd-1 xenial

26 started 172.27.64.7 4y3hfn xenial zone1

Relation Provides Consumes Type

cluster glance glance peer

identity-service glance keystone regular

shared-db glance mysql regular

image-service glance nova-cloud-controller regular

image-service glance nova-compute regular

amqp glance rabbitmq-server regular

cluster keystone keystone peer

shared-db keystone mysql regular

identity-service keystone neutron-api regular

identity-service keystone nova-cloud-controller regular

identity-service keystone openstack-dashboard regular

lxd-migration lxd lxd peer

lxd lxd nova-compute regular

cluster mysql mysql peer

shared-db mysql neutron-api regular

shared-db mysql nova-cloud-controller regular

cluster neutron-api neutron-api peer

neutron-plugin-api neutron-api neutron-gateway regular

neutron-plugin-api neutron-api neutron-openvswitch regular

neutron-api neutron-api nova-cloud-controller regular

amqp neutron-api rabbitmq-server regular

cluster neutron-gateway neutron-gateway peer

quantum-network-service neutron-gateway nova-cloud-controller regular

juju-info neutron-gateway ntp subordinate

amqp neutron-gateway rabbitmq-server regular

neutron-plugin neutron-openvswitch nova-compute regular

amqp neutron-openvswitch rabbitmq-server regular

cluster nova-cloud-controller nova-cloud-controller peer

cloud-compute nova-cloud-controller nova-compute regular

amqp nova-cloud-controller rabbitmq-server regular

lxd nova-compute lxd subordinate

neutron-plugin nova-compute neutron-openvswitch subordinate

compute-peer nova-compute nova-compute peer

juju-info nova-compute ntp subordinate

amqp nova-compute rabbitmq-server regular

ntp-peers ntp ntp peer

cluster openstack-dashboard openstack-dashboard peer

cluster rabbitmq-server rabbitmq-server peer

LXD parameters on the Compute nodes (nova.conf)

[DEFAULT]

verbose=True

debug=True

dhcpbridge_flagfile=/etc/nova/nova.conf

dhcpbridge=/usr/bin/nova-dhcpbridge

logdir=/var/log/nova

state_path=/var/lib/nova

force_dhcp_release=True

use_syslog = False

ec2_private_dns_show_ip=True

api_paste_config=/etc/nova/api-paste.ini

enabled_apis=osapi_compute,metadata

auth_strategy=keystone

my_ip = 172.27.64.8

vnc_enabled = False

novnc_enabled = False

libvirt_vif_driver = nova.virt.libvirt.vif.LibvirtGenericVIFDriver

security_group_api = neutron

firewall_driver = nova.virt.firewall.NoopFirewallDriver

network_api_class = nova.network.neutronv2.api.API

use_neutron = True

volume_api_class = nova.volume.cinder.API

reserved_host_memory = 512

[neutron]

url = http://172.27.64.5:9696

auth_url = http://172.27.64.5:35357

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = services

username = nova

password = ptMFPHWVRBhyGrXqhxhPwwtmKbZwcXXwHkxTVkGbrr9FXSxZmCghYLb7VwHh7KNC

signing_dir =

[keystone_authtoken]

auth_uri = http://172.27.64.5:5000

auth_url = http://172.27.64.5:35357

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = services

username = nova

password = ptMFPHWVRBhyGrXqhxhPwwtmKbZwcXXwHkxTVkGbrr9FXSxZmCghYLb7VwHh7KNC

signing_dir =

[glance]

api_servers = http://172.27.64.5:9292

[libvirt]

rbd_user =

rbd_secret_uuid =

live_migration_uri = qemu+ssh://%s/system

# Disable tunnelled migration so that selective

# live block migration can be supported.

live_migration_tunnelled = False

[oslo_messaging_rabbit]

rabbit_userid = nova

rabbit_virtual_host = openstack

rabbit_password = SK2CLycVLTYVYJmCkN6rhCYPjPrNdYGpNyxSShqY775WPJyJC458HVHrswzwZXYW

rabbit_host = 172.27.64.5

[cinder]

os_region_name = RegionOne

[oslo_concurrency]

lock_path=/var/lock/nova

[workarounds]

disable_libvirt_livesnapshot = False

[serial_console]

enabled = false

proxyclient_address = 172.27.64.8

base_url = ws://172.27.64.5:6083/

[DEFAULT]

compute_driver = lxd.LXDDriver

The compute_driver parameter in [DEFAULT] was changed to lxd.LXDDriver.
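As a minimal sketch, the same change could be applied to a nova.conf with Python's standard configparser. The excerpt below is trimmed to a few keys from the listing above, and set_lxd_driver is a hypothetical helper, not part of nova or the charms. Interpolation is disabled so that values such as live_migration_uri (which contains %s) are kept verbatim; note that configparser drops comments when it writes the file back.

```python
import configparser
import io

# Trimmed excerpt of the nova.conf shown above (illustrative only).
NOVA_CONF = """\
[DEFAULT]
my_ip = 172.27.64.8
firewall_driver = nova.virt.firewall.NoopFirewallDriver

[libvirt]
live_migration_uri = qemu+ssh://%s/system
"""


def set_lxd_driver(conf_text: str) -> str:
    """Return conf_text with [DEFAULT] compute_driver set to lxd.LXDDriver (hypothetical helper)."""
    # interpolation=None keeps "%s" in live_migration_uri from being treated as a placeholder.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # do not lowercase option names
    parser.read_string(conf_text)
    parser["DEFAULT"]["compute_driver"] = "lxd.LXDDriver"
    out = io.StringIO()
    parser.write(out)  # comments in the input are not preserved
    return out.getvalue()


if __name__ == "__main__":
    print(set_lxd_driver(NOVA_CONF))
```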

Package versions and checking that LXD works

root@node07ob64:~# dpkg -l | grep lxd

ii lxd 2.0.5-0ubuntu1~ubuntu16.04.1 amd64 Container hypervisor based on LXC - daemon

ii lxd-client 2.0.5-0ubuntu1~ubuntu16.04.1 amd64 Container hypervisor based on LXC - client

ii nova-compute-lxd 14.0.0-0ubuntu1~cloud0 all Openstack Compute - LXD container hypervisor support

ii python-nova-lxd 14.0.0-0ubuntu1~cloud0 all OpenStack Compute Python libraries - LXD driver

ii python-pylxd 2.1.1-0ubuntu1~cloud0 all Python library for interacting with LXD REST API

root@node07ob64:~# lxc list

+----------------------+---------+----------------------+------+------------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+----------------------+---------+----------------------+------+------------+-----------+

| juju-79fcc7-25-lxd-0 | RUNNING | 172.27.65.21 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

| juju-79fcc7-25-lxd-1 | RUNNING | 172.27.65.26 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

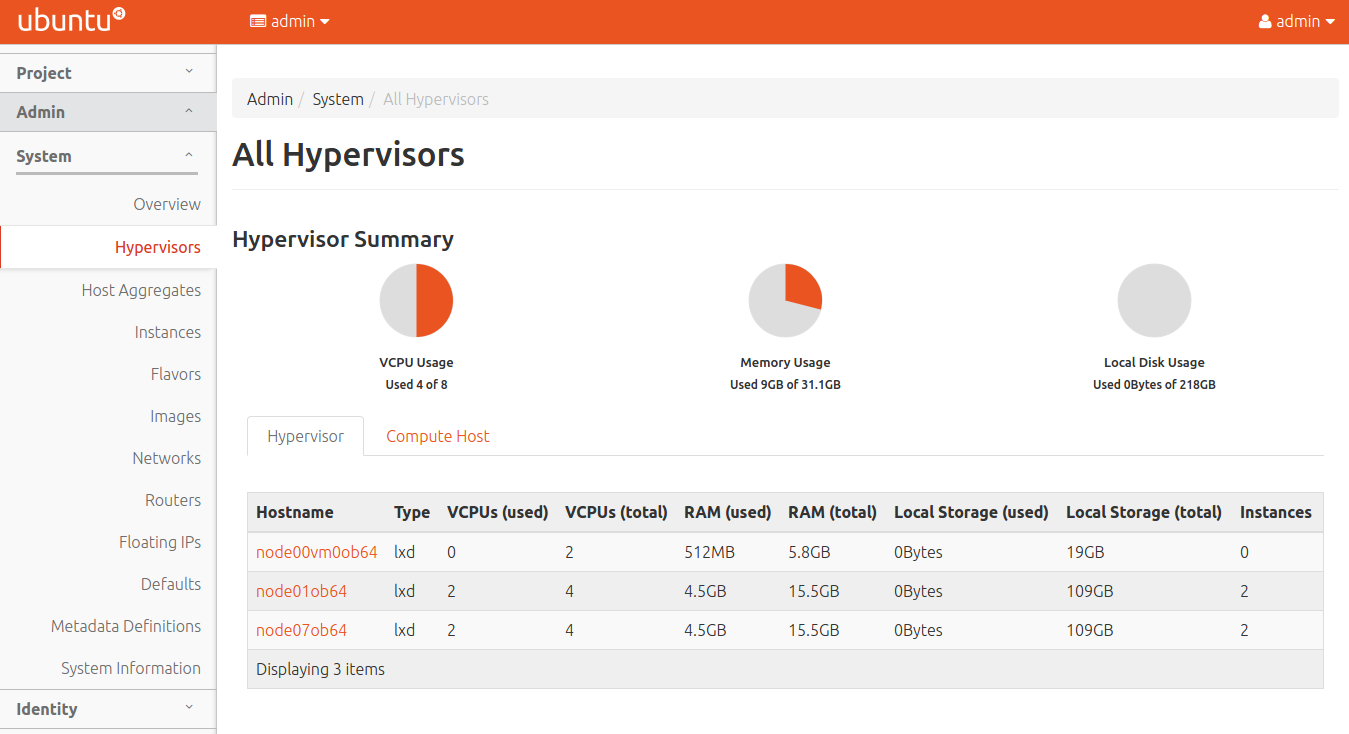

Checking the hypervisors

The Compute nodes are recognized with hypervisor type "lxd".

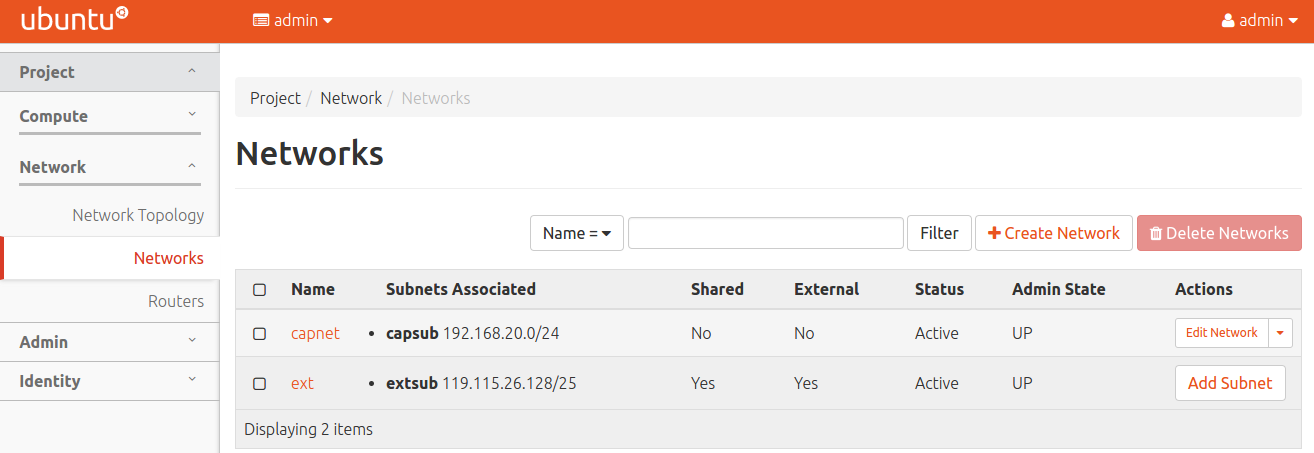

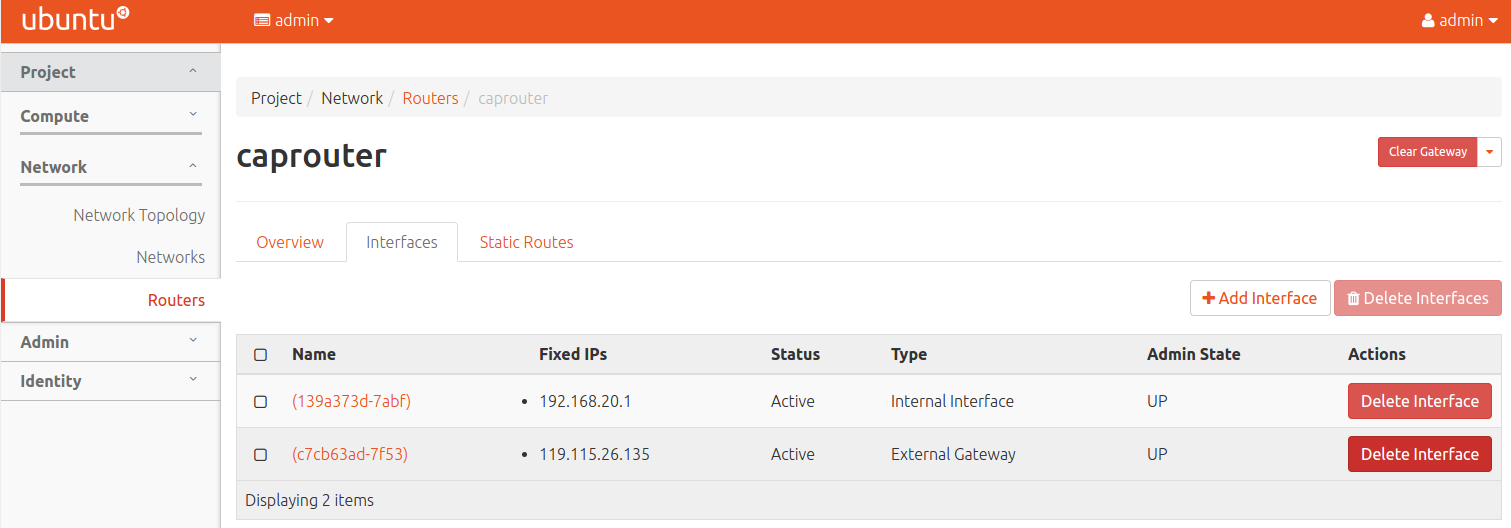

Creating the external network and the tenant network

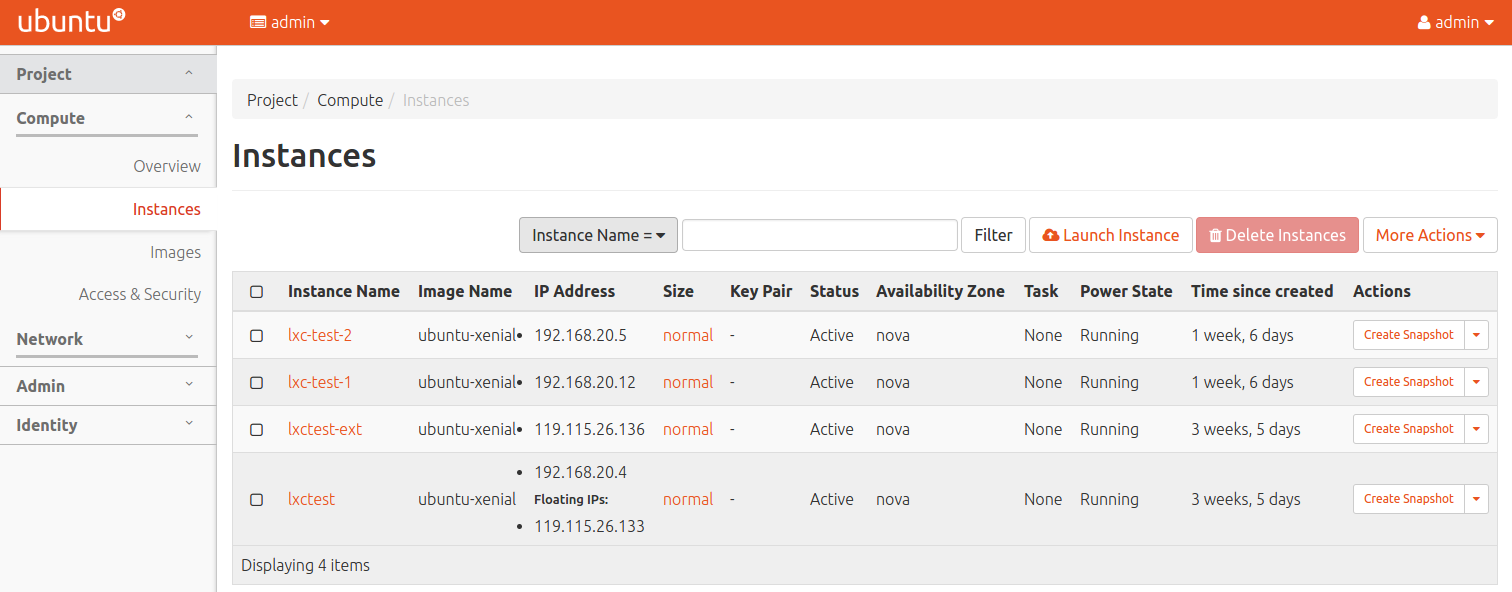

Launching multiple instances

How Nova LXD works (overview)

See /usr/lib/python2.7/dist-packages/nova/virt/lxd/driver.py.

- Uses pylxd to control LXD.

- Fetches the image from Glance.

- Creates the network interfaces for the LXC container.

- Creates the LXC container.

- Starts the LXC container.
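The order of the steps above can be pictured with the following minimal Python sketch. It is not nova-lxd's code: FakeLXDHost, its methods, and spawn are hypothetical stand-ins that only record the sequence listed above, whereas the real driver talks to LXD through pylxd.

```python
from typing import List


class FakeLXDHost:
    """Hypothetical stand-in for an LXD host; it only records each call in order."""

    def __init__(self) -> None:
        self.calls: List[str] = []

    def ensure_image(self, image_id: str) -> str:
        # Stands for: download the image from Glance and import it into LXD.
        self.calls.append(f"image:{image_id}")
        return image_id

    def plug_vif(self, port_id: str) -> None:
        # Stands for: create the host-side interfaces (Linux bridge, veth pair, OVS port).
        self.calls.append(f"vif:{port_id}")

    def create_container(self, name: str, image: str) -> None:
        # Stands for: create the container from the imported image.
        self.calls.append(f"create:{name}")

    def start_container(self, name: str) -> None:
        # Stands for: start the container.
        self.calls.append(f"start:{name}")


def spawn(host: FakeLXDHost, name: str, image_id: str, port_ids: List[str]) -> None:
    """Run the steps in the order listed in this article (hypothetical helper)."""
    image = host.ensure_image(image_id)
    for port_id in port_ids:
        host.plug_vif(port_id)
    host.create_container(name, image)
    host.start_container(name)


if __name__ == "__main__":
    host = FakeLXDHost()
    # "example-image" and "example-port" are placeholder IDs, not real ones.
    spawn(host, "instance-00000014", "example-image", ["example-port"])
    print(host.calls)
```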

# Create the Linux bridge for the instance

sudo nova-rootwrap /etc/nova/rootwrap.conf brctl addbr qbr9e0c6af1-30

sudo nova-rootwrap /etc/nova/rootwrap.conf brctl stp qbr9e0c6af1-30 off

sudo nova-rootwrap /etc/nova/rootwrap.conf tee /sys/class/net/qbr9e0c6af1-30/bridge/multicast_snooping

# Create the veth pair for the instance

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link add qvb9e0c6af1-30 type veth peer name qvo9e0c6af1-30

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link set qvb9e0c6af1-30 up

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link set qvb9e0c6af1-30 promisc on

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link set qvo9e0c6af1-30 up

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link set qvo9e0c6af1-30 promisc on

sudo nova-rootwrap /etc/nova/rootwrap.conf ip link set qbr9e0c6af1-30 up

# Attach the veth to the Linux bridge

sudo nova-rootwrap /etc/nova/rootwrap.conf brctl addif qbr9e0c6af1-30 qvb9e0c6af1-30

# Attach the veth to the OVS br-int bridge

sudo nova-rootwrap /etc/nova/rootwrap.conf ovs-vsctl --timeout=120 -- --if-exists del-port qvo9e0c6af1-30 -- add-port br-int qvo9e0c6af1-30 -- set Interface qvo9e0c6af1-30 external-ids:iface-id=9e0c6af1-30bc-418d-b82f-c025c935d987 external-ids:iface-status=active external-ids:attached-mac=fa:16:3e:e4:a3:bf external-ids:vm-uuid=instance-00000014
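In the log above, every device name is a 3-character prefix (tap, qbr, qvb, qvo) followed by the first 11 characters of the Neutron port ID (iface-id 9e0c6af1-30bc-... becomes qbr9e0c6af1-30), which keeps the names under Linux's 15-character interface-name limit. The minimal Python sketch below rebuilds the same command sequence from a port ID; device_names and plug_commands are hypothetical helpers, and the multicast_snooping tee step is left out because the value written is not shown in the log.

```python
from typing import Dict, List

# Observed in the log: 3-character prefix + first 11 characters of the port ID.
NIC_NAME_LEN = 14
ROOTWRAP = ["sudo", "nova-rootwrap", "/etc/nova/rootwrap.conf"]


def device_names(port_id: str) -> Dict[str, str]:
    """Return the tap/qbr/qvb/qvo names derived from a Neutron port ID."""
    suffix = port_id[: NIC_NAME_LEN - 3]
    return {prefix: prefix + suffix for prefix in ("tap", "qbr", "qvb", "qvo")}


def plug_commands(port_id: str, mac: str, instance_name: str) -> List[List[str]]:
    """Rebuild the plugging commands from the log as argv lists (hypothetical helper)."""
    n = device_names(port_id)
    qbr, qvb, qvo = n["qbr"], n["qvb"], n["qvo"]
    steps = [
        # Linux bridge for the instance
        ["brctl", "addbr", qbr],
        ["brctl", "stp", qbr, "off"],
        # veth pair: qvb goes on the Linux bridge, qvo goes on OVS br-int
        ["ip", "link", "add", qvb, "type", "veth", "peer", "name", qvo],
        ["ip", "link", "set", qvb, "up"],
        ["ip", "link", "set", qvb, "promisc", "on"],
        ["ip", "link", "set", qvo, "up"],
        ["ip", "link", "set", qvo, "promisc", "on"],
        ["ip", "link", "set", qbr, "up"],
        ["brctl", "addif", qbr, qvb],
        # OVS port tagged with the Neutron port ID so the OVS agent can match it
        ["ovs-vsctl", "--timeout=120", "--", "--if-exists", "del-port", qvo,
         "--", "add-port", "br-int", qvo,
         "--", "set", "Interface", qvo,
         f"external-ids:iface-id={port_id}",
         "external-ids:iface-status=active",
         f"external-ids:attached-mac={mac}",
         f"external-ids:vm-uuid={instance_name}"],
    ]
    return [ROOTWRAP + step for step in steps]


if __name__ == "__main__":
    for cmd in plug_commands("9e0c6af1-30bc-418d-b82f-c025c935d987",
                             "fa:16:3e:e4:a3:bf", "instance-00000014"):
        print(" ".join(cmd))
```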

root@node07ob64:~# lxc list

+----------------------+---------+----------------------+------+------------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+----------------------+---------+----------------------+------+------------+-----------+

| instance-00000002 | RUNNING | 192.168.20.4 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

| instance-0000000c | RUNNING | 192.168.20.12 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

| instance-00000012 | RUNNING | 192.168.20.7 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

| juju-79fcc7-25-lxd-0 | RUNNING | 172.27.65.21 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

| juju-79fcc7-25-lxd-1 | RUNNING | 172.27.65.26 (eth0) | | PERSISTENT | 0 |

+----------------------+---------+----------------------+------+------------+-----------+

After launching instances from nova, running lxc list on the compute node shows them in the container list.
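Because nova names its instances instance-XXXXXXXX, the Nova-managed containers can be told apart from the Juju-managed ones (juju-79fcc7-...) in the lxc list output. A minimal Python sketch, assuming the table layout shown above with at most one IPv4 address per container; parse_lxc_list and nova_instances are hypothetical helpers.

```python
from typing import Dict, Optional, Tuple

Row = Tuple[str, Optional[str]]  # (state, first IPv4 address or None)


def parse_lxc_list(text: str) -> Dict[str, Row]:
    """Map container name -> (state, IPv4) from the table printed by `lxc list`."""
    rows: Dict[str, Row] = {}
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip "+----+" separator lines and blank lines
        cells = [c.strip() for c in line.strip("|").split("|")]
        if header is None:
            header = cells  # NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS
            continue
        row = dict(zip(header, cells))
        # "192.168.20.4 (eth0)" -> "192.168.20.4"; assumes one address per cell
        ipv4 = row["IPV4"].split()[0] if row["IPV4"] else None
        rows[row["NAME"]] = (row["STATE"], ipv4)
    return rows


def nova_instances(rows: Dict[str, Row]) -> Dict[str, Row]:
    """Keep only containers created by nova (names starting with "instance-")."""
    return {name: row for name, row in rows.items() if name.startswith("instance-")}


if __name__ == "__main__":
    sample = """\
+----------------------+---------+----------------------+------+------------+-----------+
| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |
+----------------------+---------+----------------------+------+------------+-----------+
| instance-0000000c | RUNNING | 192.168.20.12 (eth0) | | PERSISTENT | 0 |
+----------------------+---------+----------------------+------+------------+-----------+
| juju-79fcc7-25-lxd-0 | RUNNING | 172.27.65.21 (eth0) | | PERSISTENT | 0 |
+----------------------+---------+----------------------+------+------------+-----------+
"""
    print(nova_instances(parse_lxc_list(sample)))
```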

root@node07ob64:/usr/lib/python2.7/dist-packages/nova/api# lxc profile show instance-00000014

name: instance-00000014

config:

boot.autostart: "True"

limits.cpu: "1"

limits.memory: 2048MB

raw.lxc: |

lxc.console.logfile=/var/log/lxd/instance-00000014/console.log

description: ""

devices:

qbr9e0c6af1-30:

host_name: tap9e0c6af1-30

hwaddr: fa:16:3e:e4:a3:bf

nictype: bridged

parent: qbr9e0c6af1-30

type: nic

root:

path: /

size: 0GB

type: disk

Checking the details with the lxc profile command shows that nictype is "bridged".
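With nictype "bridged", LXD creates a veth pair whose host side (host_name, here tap9e0c6af1-30) is attached to the parent bridge qbr9e0c6af1-30, the Linux bridge created by the rootwrap commands earlier. The minimal Python sketch below rebuilds the shape of the profile shown above. build_profile is a hypothetical helper, and the mapping from flavor values (1 vCPU, 2048 MB RAM, 0 GB root disk) to limits.cpu, limits.memory and the root size is an assumption inferred from this output, not taken from nova-lxd's source.

```python
import json
from typing import Any, Dict


def build_profile(instance_name: str, vcpus: int, memory_mb: int, root_gb: int,
                  port_id: str, mac: str) -> Dict[str, Any]:
    """Hypothetical helper reproducing the profile shown by `lxc profile show`."""
    suffix = port_id[:11]  # device names use the first 11 characters of the port ID
    bridge = "qbr" + suffix
    return {
        "name": instance_name,
        "config": {
            "boot.autostart": "True",
            "limits.cpu": str(vcpus),             # assumed: flavor vCPUs
            "limits.memory": f"{memory_mb}MB",    # assumed: flavor RAM in MB
            "raw.lxc": f"lxc.console.logfile=/var/log/lxd/{instance_name}/console.log\n",
        },
        "description": "",
        "devices": {
            bridge: {
                "host_name": "tap" + suffix,  # host end of the veth pair
                "hwaddr": mac,                # MAC of the Neutron port
                "nictype": "bridged",
                "parent": bridge,             # Linux bridge created on the host
                "type": "nic",
            },
            "root": {"path": "/", "size": f"{root_gb}GB", "type": "disk"},
        },
    }


if __name__ == "__main__":
    profile = build_profile("instance-00000014", 1, 2048, 0,
                            "9e0c6af1-30bc-418d-b82f-c025c935d987", "fa:16:3e:e4:a3:bf")
    print(json.dumps(profile, indent=2))
```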

root@node07ob64:~# lxc exec instance-0000000c /bin/bash

root@lxc-test-1:~# ifconfig

eth0 Link encap:Ethernet HWaddr fa:16:3e:54:f9:9d

inet addr:192.168.20.12 Bcast:192.168.20.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fe54:f99d/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1456 Metric:1

RX packets:2711 errors:0 dropped:0 overruns:0 frame:0

TX packets:1425 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:252983 (252.9 KB) TX bytes:129955 (129.9 KB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:4 errors:0 dropped:0 overruns:0 frame:0

TX packets:4 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:280 (280.0 B) TX bytes:280 (280.0 B)

You can of course also access a container with the lxc exec command.

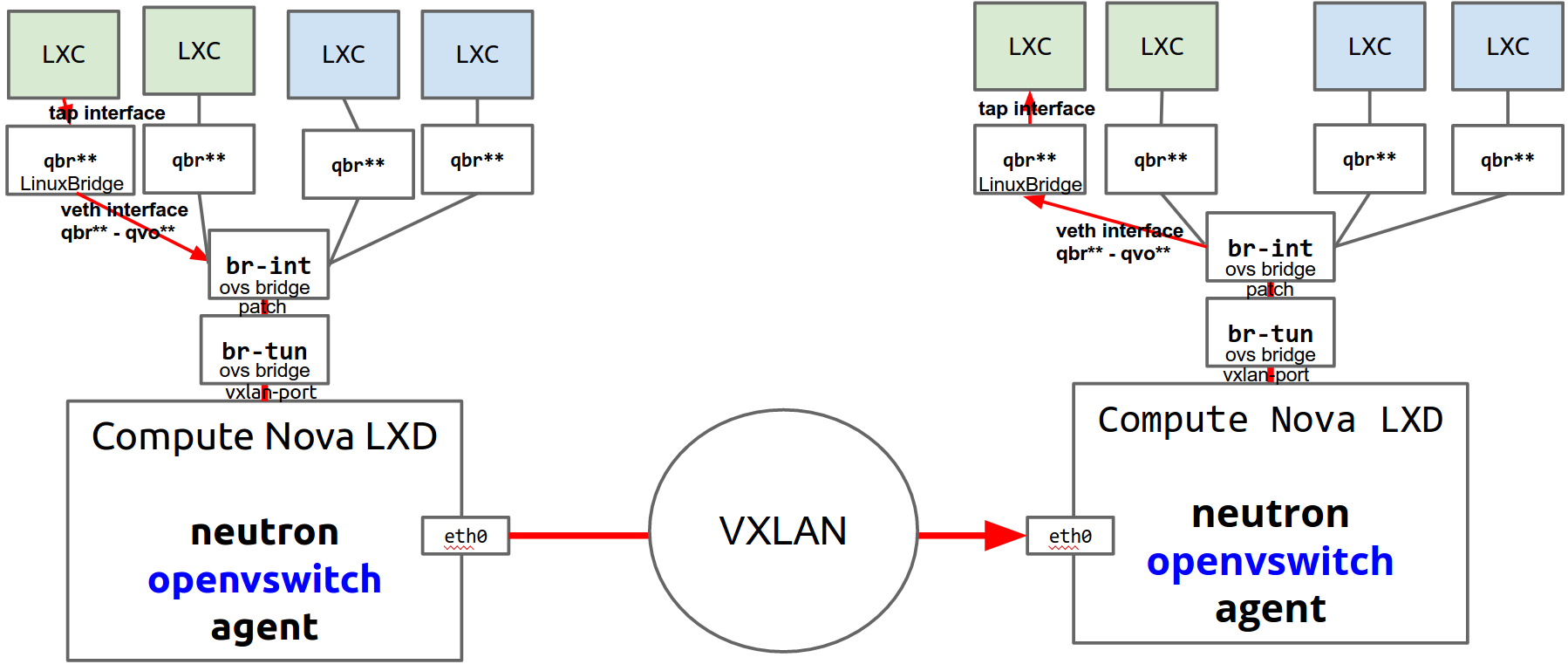

A simplified network diagram between the compute nodes.

ubuntu@OrangeBox64:~$ juju ssh 26

ubuntu@node02ob64:~$ sudo -s

root@node02ob64:~# ip netns

qdhcp-f8ec06d8-c199-45b8-bc72-56ee2c806fed (id: 3)

qdhcp-2ed6a3e2-9d78-4323-b6dd-d433e67d7f9f (id: 2)

qrouter-35fea0f3-ca4d-4bdf-a3b7-c00194bbd566 (id: 1)

qdhcp-ac7bafe8-3c37-48ba-8c24-98be14f4d9f7 (id: 0)

root@node02ob64:~# ip netns exec qrouter-35fea0f3-ca4d-4bdf-a3b7-c00194bbd566 bash

root@node02ob64:~# ping 119.115.26.136

PING 119.115.26.136 (119.115.26.136) 56(84) bytes of data.

64 bytes from 119.115.26.136: icmp_seq=1 ttl=64 time=0.816 ms

64 bytes from 119.115.26.136: icmp_seq=2 ttl=64 time=0.462 ms

64 bytes from 119.115.26.136: icmp_seq=3 ttl=64 time=0.383 ms

^C

--- 119.115.26.136 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.383/0.553/0.816/0.190 ms

root@node02ob64:~# ping 119.115.26.133

PING 119.115.26.133 (119.115.26.133) 56(84) bytes of data.

64 bytes from 119.115.26.133: icmp_seq=1 ttl=64 time=0.827 ms

64 bytes from 119.115.26.133: icmp_seq=2 ttl=64 time=0.369 ms

64 bytes from 119.115.26.133: icmp_seq=3 ttl=64 time=0.497 ms

From the network node, the external network addresses and Floating IPs assigned to the instances are also reachable.

Conclusion

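KVM is probably the most common choice of hypervisor. Running instances as LXC containers with Nova LXD may remove the overhead that KVM carries and shorten the time it takes to create and delete instances. Nova LXD is one of the projects that broaden what OpenStack can do.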