
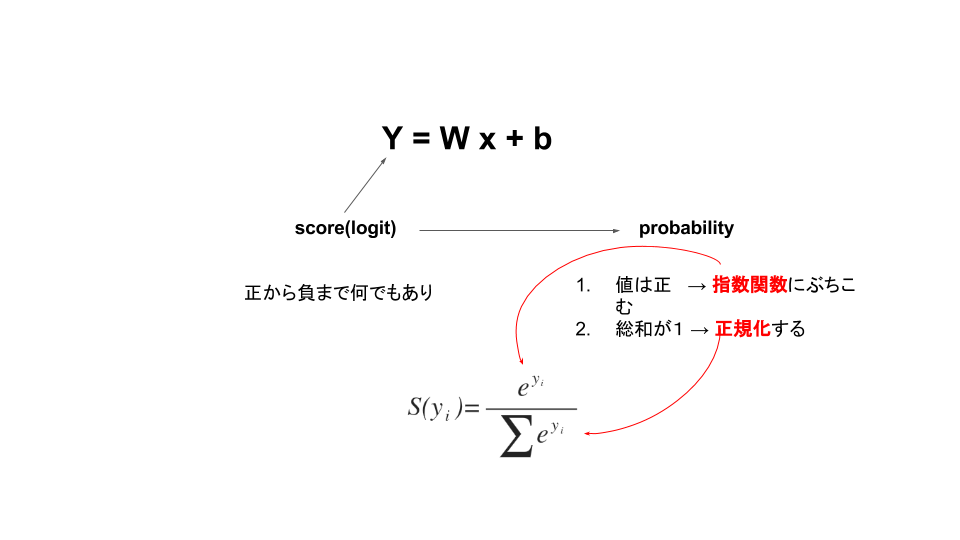

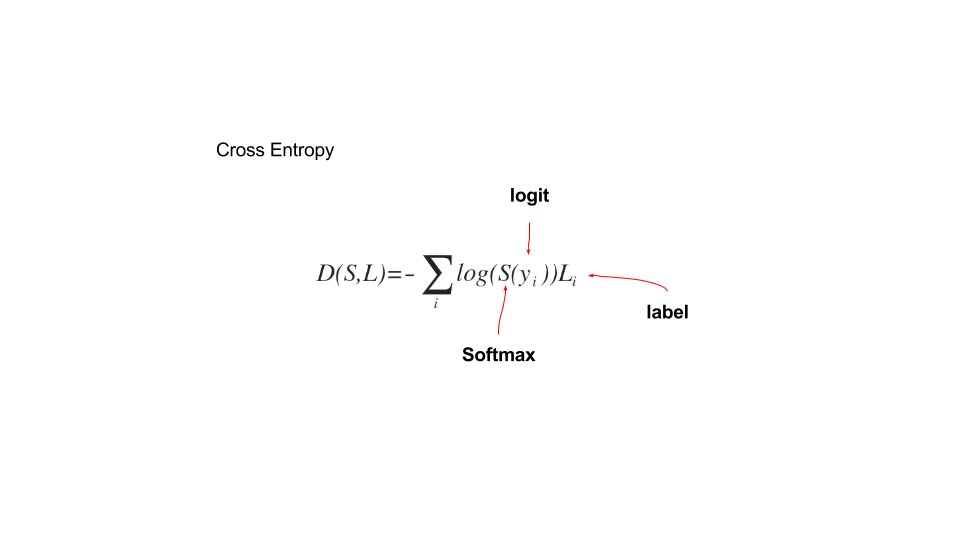

[DL] What Are Softmax and Cross Entropy?

Posted at 2016-02-15
