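Introduction

- I'm coming back to Python after a long break. I'm currently stuck, so for now I'm posting only the code and its output, and I'll update this post as I make progress.

- I previously got an SSD model running by copy-pasting code. The eventual goal is to understand it well enough to make it my own.

- VGG16 is one of the more advanced deep learning models, but there are few thorough walkthroughs of implementing it in TensorFlow. The SSD model also builds on the VGG16 architecture, so I tried it as a stepping stone.

- I first implemented it with TF-Slim, but model creation failed with an error, so I took the opportunity to write it out in plain TensorFlow, verbose as that is.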

Prerequisites

Implementation

References

Program

network.py

#!/usr/local/bin/python
# -*- coding: utf-8 -*-

import numpy as np
import tensorflow as tf


def vgg16(image, keep_prob):
    # Helpers for creating variables and the two layer types used below.
    def weight_variable(shape):
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)

    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)

    def conv2d(conv, weight):
        return tf.nn.conv2d(conv, weight, strides=[1, 1, 1, 1], padding='SAME')

    def max_pool_2x2(conv):
        return tf.nn.max_pool(conv, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Block 1: two 3x3 convolutions with 64 channels, then 2x2 max pooling.
    with tf.name_scope('conv1_1') as scope:
        weight = weight_variable([3, 3, 3, 64])
        bias = bias_variable([64])
        conv = conv2d(image, weight)
        out = tf.nn.bias_add(conv, bias)
        conv1_1 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv1_2') as scope:
        weight = weight_variable([3, 3, 64, 64])
        bias = bias_variable([64])
        conv = conv2d(conv1_1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv1_2 = tf.nn.relu(out, name=scope)

    with tf.name_scope('pool1') as scope:
        pool1 = max_pool_2x2(conv1_2)

    # Block 2: two 3x3 convolutions with 128 channels.
    with tf.name_scope('conv2_1') as scope:
        weight = weight_variable([3, 3, 64, 128])
        bias = bias_variable([128])
        conv = conv2d(pool1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv2_1 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv2_2') as scope:
        weight = weight_variable([3, 3, 128, 128])
        bias = bias_variable([128])
        conv = conv2d(conv2_1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv2_2 = tf.nn.relu(out, name=scope)

    with tf.name_scope('pool2') as scope:
        pool2 = max_pool_2x2(conv2_2)

    # Block 3: three 3x3 convolutions with 256 channels.
    with tf.name_scope('conv3_1') as scope:
        weight = weight_variable([3, 3, 128, 256])
        bias = bias_variable([256])
        conv = conv2d(pool2, weight)
        out = tf.nn.bias_add(conv, bias)
        conv3_1 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv3_2') as scope:
        weight = weight_variable([3, 3, 256, 256])
        bias = bias_variable([256])
        conv = conv2d(conv3_1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv3_2 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv3_3') as scope:
        weight = weight_variable([3, 3, 256, 256])
        bias = bias_variable([256])
        conv = conv2d(conv3_2, weight)
        out = tf.nn.bias_add(conv, bias)
        conv3_3 = tf.nn.relu(out, name=scope)

    with tf.name_scope('pool3') as scope:
        pool3 = max_pool_2x2(conv3_3)

    # Block 4: three 3x3 convolutions with 512 channels.
    with tf.name_scope('conv4_1') as scope:
        weight = weight_variable([3, 3, 256, 512])
        bias = bias_variable([512])
        conv = conv2d(pool3, weight)
        out = tf.nn.bias_add(conv, bias)
        conv4_1 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv4_2') as scope:
        weight = weight_variable([3, 3, 512, 512])
        bias = bias_variable([512])
        conv = conv2d(conv4_1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv4_2 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv4_3') as scope:
        weight = weight_variable([3, 3, 512, 512])
        bias = bias_variable([512])
        conv = conv2d(conv4_2, weight)
        out = tf.nn.bias_add(conv, bias)
        conv4_3 = tf.nn.relu(out, name=scope)

    with tf.name_scope('pool4') as scope:
        pool4 = max_pool_2x2(conv4_3)

    # Block 5: three 3x3 convolutions with 512 channels.
    with tf.name_scope('conv5_1') as scope:
        weight = weight_variable([3, 3, 512, 512])
        bias = bias_variable([512])
        conv = conv2d(pool4, weight)
        out = tf.nn.bias_add(conv, bias)
        conv5_1 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv5_2') as scope:
        weight = weight_variable([3, 3, 512, 512])
        bias = bias_variable([512])
        conv = conv2d(conv5_1, weight)
        out = tf.nn.bias_add(conv, bias)
        conv5_2 = tf.nn.relu(out, name=scope)

    with tf.name_scope('conv5_3') as scope:
        weight = weight_variable([3, 3, 512, 512])
        bias = bias_variable([512])
        conv = conv2d(conv5_2, weight)
        out = tf.nn.bias_add(conv, bias)
        conv5_3 = tf.nn.relu(out, name=scope)

    with tf.name_scope('pool5') as scope:
        pool5 = max_pool_2x2(conv5_3)

    # Fully connected layers: flatten pool5, then 4096 -> 4096 -> 3 classes.
    with tf.name_scope('fc6') as scope:
        shape = int(np.prod(pool5.get_shape()[1:]))
        weight = weight_variable([shape, 4096])
        bias = bias_variable([4096])
        pool5_flat = tf.reshape(pool5, [-1, shape])
        fc6 = tf.nn.relu(tf.nn.bias_add(tf.matmul(pool5_flat, weight), bias))
        # fc6_drop = tf.nn.dropout(fc6, keep_prob)

    with tf.name_scope('fc7') as scope:
        weight = weight_variable([4096, 4096])
        bias = bias_variable([4096])
        fc7 = tf.nn.relu(tf.nn.bias_add(tf.matmul(fc6, weight), bias))
        # fc7_drop = tf.nn.dropout(fc7, keep_prob)

    with tf.name_scope('fc8') as scope:
        weight = weight_variable([4096, 3])
        bias = bias_variable([3])
        fc8 = tf.nn.bias_add(tf.matmul(fc7, weight), bias)

    with tf.name_scope('softmax') as scope:
        probs = tf.nn.softmax(fc8)

    return probs
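The function returns softmax probabilities rather than the raw `fc8` logits. The training script is not shown in this post, so the following is only a sketch under an assumption: if the loss is computed as the negative log of those probabilities, very large logits can make a probability underflow to exactly 0, and the log then fails. The pure-Python example below uses hypothetical logits and hypothetical helper names to compare that route with computing the loss directly from logits via a log-sum-exp.

```python
import math


def softmax(logits):
    """Softmax with the usual max-subtraction for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits):
    """Log-softmax computed with log-sum-exp, never forming tiny probabilities."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def cross_entropy_from_probs(probs, label):
    """-log(p_label); raises ValueError when p_label has underflowed to 0.0."""
    return -math.log(probs[label])


def cross_entropy_from_logits(logits, label):
    """Same loss, but computed from logits so it stays finite."""
    return -log_softmax(logits)[label]


# Hypothetical, deliberately extreme logits for a 3-class output.
logits = [1000.0, 0.0, -1000.0]
probs = softmax(logits)
print("probs:", probs)
print("loss from logits:", cross_entropy_from_logits(logits, 1))

try:
    print("loss from probs:", cross_entropy_from_probs(probs, 1))
except ValueError as err:
    print("loss from probs failed:", err)
```

In TensorFlow 1.x, `tf.nn.softmax_cross_entropy_with_logits(labels=..., logits=...)` takes the logits directly, so one option worth trying would be to return `fc8` from `vgg16` for the loss and apply `tf.nn.softmax` only for prediction. Whether this is what stalls training here is not confirmed.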

Results

Training makes no progress at all!

2017-08-01 17:39:33.518478: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-08-01 17:39:33.518513: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-08-01 17:39:33.518523: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2017-08-01 17:39:33.518532: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

step 0, training accuracy 0.588235

step 1, training accuracy 0.588235

step 2, training accuracy 0.588235

step 3, training accuracy 0.588235

step 4, training accuracy 0.588235

step 5, training accuracy 0.588235

step 6, training accuracy 0.588235

step 7, training accuracy 0.588235

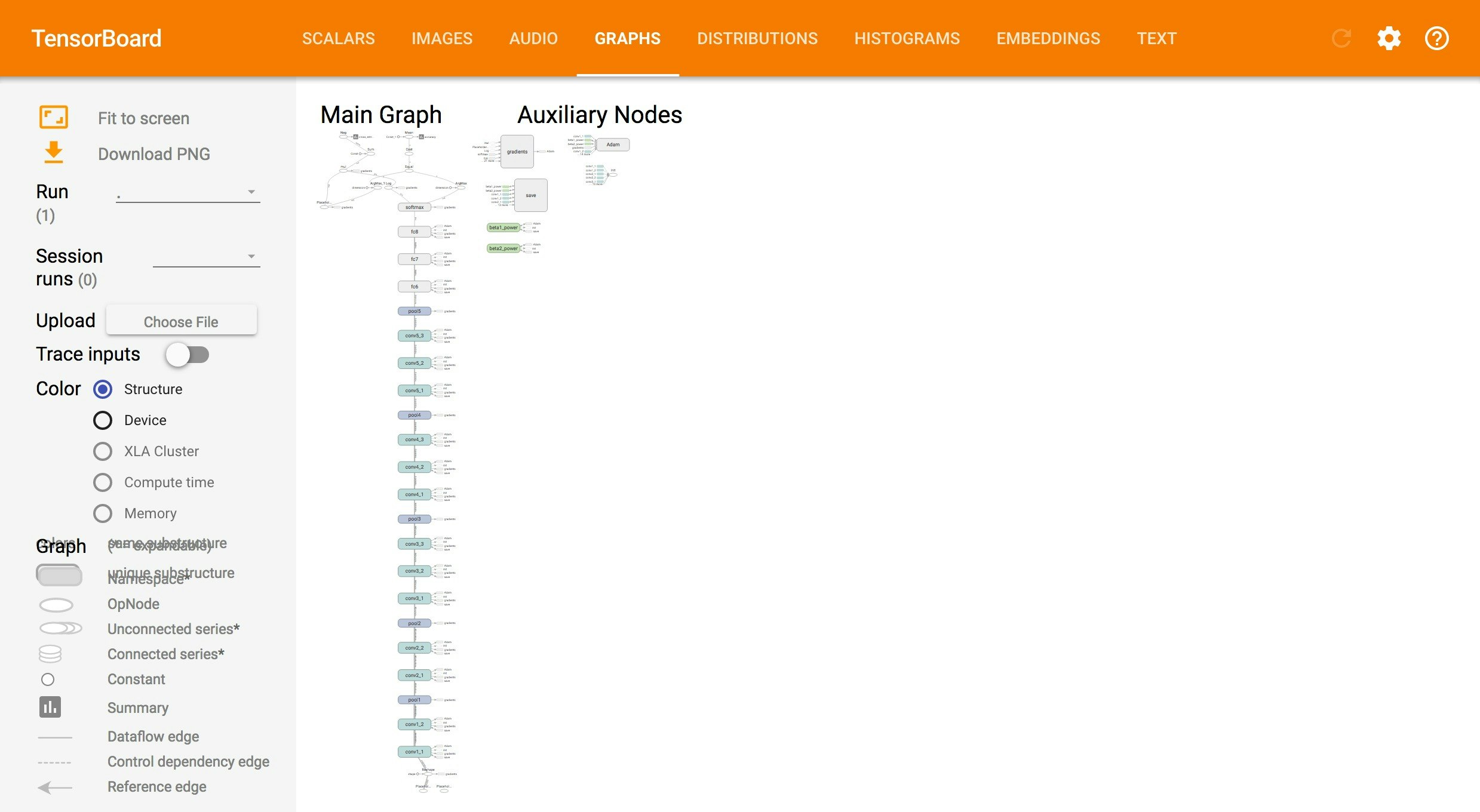
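One possible reading of the constant value in the log: 0.588235 matches 10/17, which is what a model would score if it predicted the same class for every sample and 10 of 17 evaluated samples belonged to that class. The batch size and class balance are not given in this post, so the numbers below are hypothetical and only illustrate the arithmetic.

```python
from fractions import Fraction

# Accuracy value reported at every step in the log above.
reported = 0.588235

# Closest simple fraction with a small denominator.
ratio = Fraction(reported).limit_denominator(100)
print("closest simple fraction:", ratio)

# Hypothetical batch: 17 samples, 10 of them in class 0 (assumed, not from the post).
labels = [0] * 10 + [1] * 4 + [2] * 3
predictions = [0] * len(labels)  # a model that always predicts class 0

correct = sum(1 for p, y in zip(predictions, labels) if p == y)
accuracy = correct / len(labels)
print("accuracy of constant prediction:", round(accuracy, 6))
```

If this reading is right, the network output does not depend on the input at all, which points at the forward pass or the loss rather than at the data.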

Visualization

The architecture looks right to me, though...

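What I tried

- The default VGG input size is 224x224 and the minimum is apparently 48x48, so I tried both 48 and 224. → No change.

- Removed various layers. → No change.

- Changed the training data. → No change.

- Kept the same coding style but cut the network down to the layers of an ordinary CNN. → Training ran normally.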

(゚д゚)
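One hypothesis that would fit these observations, not verified in this post, is the weight initialization. With zero-mean weights of variance `var` and a ReLU, a rough estimate is that each layer multiplies the second moment of its activations by `fan_in * var / 2`. With `stddev=0.1` that factor is far above 1 for the wide layers, so it compounds across a deep stack but stays modest in a shallow CNN. The sketch below does only this arithmetic for the layer sizes in network.py. It ignores the 0.1 biases and the effect of max pooling, and the helper names are hypothetical.

```python
import math

# Output channels of the 13 conv layers in network.py, in order.
CONV_OUT = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]


def pooled_size(size, num_pools=5):
    """Spatial size after `num_pools` 2x2, stride-2 poolings with SAME padding."""
    for _ in range(num_pools):
        size = math.ceil(size / 2)
    return size


def layer_fan_ins(image_size, in_channels=3):
    """Fan-in of each 3x3 conv layer, followed by fc6 and fc7."""
    fan_ins = []
    channels = in_channels
    for out_channels in CONV_OUT:
        fan_ins.append(3 * 3 * channels)
        channels = out_channels
    side = pooled_size(image_size)
    fan_ins.append(side * side * channels)  # fc6 sees the flattened pool5
    fan_ins.append(4096)                    # fc7
    return fan_ins


def second_moment_gain(fan_ins, stddev=None):
    """Product of per-layer factors fan_in * var / 2.

    stddev=None uses He initialization, var = 2 / fan_in, for comparison.
    """
    gain = 1.0
    for fan_in in fan_ins:
        var = 2.0 / fan_in if stddev is None else stddev ** 2
        gain *= fan_in * var / 2.0
    return gain


for image_size in (48, 224):
    fan_ins = layer_fan_ins(image_size)
    fixed = second_moment_gain(fan_ins, stddev=0.1)
    he = second_moment_gain(fan_ins)
    print("input %dx%d: fc6 fan-in %d" % (image_size, image_size, fan_ins[13]))
    print("  estimated RMS growth with stddev=0.1: %.3g" % math.sqrt(fixed))
    print("  estimated RMS growth with He init:    %.3g" % math.sqrt(he))
```

Under these assumptions the activations reaching `fc8` would be many orders of magnitude larger than the input, which would saturate the softmax. Scaling the standard deviation per layer, for example `math.sqrt(2.0 / fan_in)`, would be one thing to try.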