Introduction

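Back when I worked through "OSX + Vagrant + CoreOSでKubernetesを試してみた" (Trying Kubernetes with OSX + Vagrant + CoreOS) on Qiita, I don't know whether this guide already existed or I simply missed it. Either way, GoogleCloudPlatform/kubernetes now has a document called Getting started with a Vagrant cluster on your host.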

So I followed the steps in that guide. All the services started successfully, but when I actually opened the app in a browser, I got errors.

I'm recording this here as a personal note.

Steps up to starting Vagrant

Getting the Kubernetes source

git clone https://github.com/GoogleCloudPlatform/kubernetes.git

Building kubecfg and the other binaries

cluster/kubecfg.sh in the kubernetes repository contains the following check, so the binary must already be in place at kubernetes/output/go/bin/kubecfg.

CLOUDCFG=$(dirname $0)/../output/go/bin/kubecfg

if [ ! -x $CLOUDCFG ]; then

echo "Could not find kubecfg binary. Run hack/build-go.sh to build it."

exit 1

fi

Getting started with a Vagrant cluster on your host does not explain this step, but running the following commands, taken from Running a container (simple version) in Getting started on Google Compute Engine, builds the binaries into kubernetes/output/go/bin/. Set up Go beforehand by following Getting Started - The Go Programming Language.

cd kubernetes

hack/build-go.sh

Checking the Kubernetes version (commit hash)

For the record, here is the commit I used.

% git log -1

commit 2282f9ce3a85366914893a060468a5d29faebb8d

Merge: 10a577a 1101c00

Author: Daniel Smith <dbsmith@google.com>

Date: Sat Aug 2 09:30:37 2014

Merge pull request #737 from brendandburns/atomic

Make updates atomic from the client side.

Starting Vagrant

With the default settings of the Vagrantfile in the kubernetes directory, one kubernetes-master VM and three kubernetes-minion VMs are started.

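The guide says that if you are not going to try out replicas, you can save machine resources by reducing the minions to one with

export KUBERNETES_NUM_MINIONS=3

but this is a typo. The correct setting is

export KUBERNETES_NUM_MINIONS=1

This time, however, I left it unset and started Vagrant with three minions.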

% vagrant up

It took roughly 10 minutes, but everything came up successfully. For reference, my machine is a 2012 MacBook Pro with a 2.6 GHz quad-core Intel Core i7 and 16 GB of RAM.

Running vagrant status shows that one master and three minions (minion-1 to minion-3) are running.

% vagrant status

Current machine states:

master running (virtualbox)

minion-1 running (virtualbox)

minion-2 running (virtualbox)

minion-3 running (virtualbox)

…(omitted)…

According to Activity Monitor on OS X, each VM was using about 560 MB of RAM.

Checking the services on the VMs started by Vagrant

SSH into the master and check the status and logs of its services.

% vagrant ssh master

[vagrant@kubernetes-master ~] $ sudo systemctl status apiserver

[vagrant@kubernetes-master ~] $ sudo journalctl -r -u apiserver

[vagrant@kubernetes-master ~] $ sudo systemctl status controller-manager

[vagrant@kubernetes-master ~] $ sudo journalctl -r -u controller-manager

[vagrant@kubernetes-master ~] $ sudo systemctl status etcd

[vagrant@kubernetes-master ~] $ sudo systemctl status nginx

SSH into minion-1 and check the status and logs of its services.

% vagrant ssh minion-1

[vagrant@kubernetes-minion-1] $ sudo systemctl status docker

[vagrant@kubernetes-minion-1] $ sudo journalctl -r -u docker

[vagrant@kubernetes-minion-1] $ sudo systemctl status kubelet

[vagrant@kubernetes-minion-1] $ sudo journalctl -r -u kubelet

Check minion-2 and minion-3 the same way.
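Checking ten units by hand gets tedious. A Python sketch that runs `systemctl is-active` (which prints a one-word state such as `active` or `failed`) for each unit through `vagrant ssh VM -c CMD`, run from the kubernetes directory on the host. The unit list is copied from the commands above; `UNITS`, `check_commands` and `run_checks` are hypothetical names, and the logs still need to be read with journalctl as shown.

```python
import subprocess
from typing import Dict, List

# Hypothetical table (not part of Kubernetes): the units checked by hand above, per VM.
UNITS: Dict[str, List[str]] = {
    "master": ["apiserver", "controller-manager", "etcd", "nginx"],
    "minion-1": ["docker", "kubelet"],
    "minion-2": ["docker", "kubelet"],
    "minion-3": ["docker", "kubelet"],
}


def check_commands(units: Dict[str, List[str]]) -> List[List[str]]:
    """Build one `vagrant ssh VM -c 'sudo systemctl is-active UNIT'` per unit."""
    return [
        ["vagrant", "ssh", vm, "-c", f"sudo systemctl is-active {unit}"]
        for vm, names in units.items()
        for unit in names
    ]


def run_checks(units: Dict[str, List[str]]) -> Dict[str, str]:
    """Run every command and map 'VM/unit' to the reported state (or stderr)."""
    results = {}
    for cmd in check_commands(units):
        proc = subprocess.run(cmd, capture_output=True, text=True)
        unit = cmd[4].split()[-1]
        results[f"{cmd[2]}/{unit}"] = proc.stdout.strip() or proc.stderr.strip()
    return results
```

`run_checks(UNITS)` opens a separate SSH session per unit, which is slow but keeps each result independent.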

Checking the IP addresses

I also looked up the IP addresses with ip a.

- master: 10.245.1.2

- minion-1: 10.245.2.2

- minion-2: 10.245.2.3

- minion-3: 10.245.2.4

These values are set in the Vagrantfile as follows.

# The number of minions to provision

num_minion = (ENV['KUBERNETES_NUM_MINIONS'] || 3).to_i

# ip configuration

master_ip = "10.245.1.2"

minion_ip_base = "10.245.2."

minion_ips = num_minion.times.collect { |n| minion_ip_base + "#{n+2}" }

minion_ips_str = minion_ips.join(",")
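The Vagrantfile derives the minion IPs from KUBERNETES_NUM_MINIONS, which also shows why the value must be 1 (not 3) for a single minion. A Python translation of that Ruby logic as a sketch; `ruby_to_i` only approximates Ruby's `String#to_i` (it ignores underscores in numbers), and both function names are hypothetical.

```python
import os
import re
from typing import List, Mapping, Optional


# Hypothetical helper: approximates Ruby's String#to_i (leading integer, else 0).
def ruby_to_i(value: str) -> int:
    match = re.match(r"\s*[+-]?\d+", value)
    return int(match.group()) if match else 0


# Hypothetical helper: Python version of the Vagrantfile's minion IP logic.
def minion_ips(env: Optional[Mapping[str, str]] = None) -> List[str]:
    env = os.environ if env is None else env
    # num_minion = (ENV['KUBERNETES_NUM_MINIONS'] || 3).to_i
    # In Ruby an empty string is truthy, so "" becomes "".to_i == 0, not 3.
    raw = env.get("KUBERNETES_NUM_MINIONS")
    num_minion = ruby_to_i(raw) if raw is not None else 3
    minion_ip_base = "10.245.2."
    # minion_ips = num_minion.times.collect { |n| minion_ip_base + "#{n+2}" }
    return [minion_ip_base + str(n + 2) for n in range(num_minion)]
```

With the variable unset this yields 10.245.2.2 to 10.245.2.4, matching the addresses found with ip a; with `KUBERNETES_NUM_MINIONS=1` only 10.245.2.2 remains.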

Setting the KUBERNETES_PROVIDER environment variable to vagrant

The end of the Setup section says to edit cluster/kube-env.sh so that it reads KUBERNETES_PROVIDER="vagrant".

cd kubernetes

modify cluster/kube-env.sh:

KUBERNETES_PROVIDER="vagrant"

Looking inside the file, it contains the following.

…(omitted)…

# Set the default provider of Kubernetes cluster to know where to load provider-specific scripts

# You can override the default provider by exporting the KUBERNETES_PROVIDER

# variable in your bashrc

#

# The valid values: 'gce', 'azure' and 'vagrant'

KUBERNETES_PROVIDER=${KUBERNETES_PROVIDER:-gce}

So instead of editing cluster/kube-env.sh, it is enough to run the following command.

% export KUBERNETES_PROVIDER=vagrant
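Exporting the variable works because of bash's `${VAR:-default}` expansion, which substitutes the default when the variable is unset or set to the empty string. A Python sketch of the same rule; `kubernetes_provider` is a hypothetical name, and like kube-env.sh it does not validate the value.

```python
import os
from typing import Mapping, Optional


# Hypothetical helper: KUBERNETES_PROVIDER=${KUBERNETES_PROVIDER:-gce} in Python.
def kubernetes_provider(env: Optional[Mapping[str, str]] = None) -> str:
    env = os.environ if env is None else env
    value = env.get("KUBERNETES_PROVIDER", "")
    # ':-' falls back to the default for both "unset" and "set but empty".
    return value if value else "gce"
```

Note that the plain `${VAR-default}` form (without the colon) would keep an empty value instead of falling back.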

First, trying the nginx sample

Try the nginx sample by following the Running a container instructions.

Checking the state before starting nginx

List the pods, services, and replicationControllers. Nothing has been created yet, so all three lists are empty.

% cluster/kubecfg.sh list /pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

% cluster/kubecfg.sh list /services

Name Labels Selector Port

---------- ---------- ---------- ----------

% cluster/kubecfg.sh list /replicationControllers

Name Image(s) Selector Replicas

---------- ---------- ---------- ----------

Starting nginx

Start nginx with 3 replicas.

% cluster/kubecfg.sh -p 8080:80 run dockerfile/nginx 3 myNginx

I0802 19:24:21.075940 71787 request.go:249] Waiting for completion of /operations/1

id: myNginx

desiredState:

replicas: 3

replicaSelector:

name: myNginx

podTemplate:

desiredState:

manifest:

version: v1beta2

id: ""

volumes: []

containers:

- name: mynginx

image: dockerfile/nginx

ports:

- hostPort: 8080

containerPort: 80

protocol: TCP

restartpolicy: {}

labels:

name: myNginx

labels:

name: myNginx

Checking the state of minion-1

% vagrant ssh minion-1

[vagrant@kubernetes-minion-1 ~]$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

google/cadvisor latest 7dac1ef04f73 7 days ago 11.84 MB

kubernetes/pause latest 6c4579af347b 2 weeks ago 239.8 kB

<none> <none> 42404685406e 10 weeks ago 584.4 MB

[vagrant@kubernetes-minion-1 ~]$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4546649ddf33 kubernetes/pause:latest /pause 2 minutes ago Up 2 minutes 0.0.0.0:8080->80/tcp k8s--net--2a1c7b99_-_1a2f_-_11e4_-_80f8_-_0800279696e1.etcd--57362f1c

2cc04e49854d google/cadvisor:latest /usr/bin/cadvisor 20 minutes ago Up 20 minutes k8s--cadvisor--cadvisor_-_agent.file--5517c8e2

b4fa106e1cae kubernetes/pause:latest /pause 21 minutes ago Up 21 minutes 0.0.0.0:4194->8080/tcp k8s--net--cadvisor_-_agent.file--0d84da50

[vagrant@kubernetes-minion-1 ~]$ exit

Checking the state from the host (OS X) with cluster/kubecfg.sh

% cluster/kubecfg.sh list /pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

2a1c7b99-1a2f-11e4-80f8-0800279696e1 dockerfile/nginx 10.245.2.2/ name=myNginx,replicationController=myNginx

2a1bc312-1a2f-11e4-80f8-0800279696e1 dockerfile/nginx 10.245.2.3/ name=myNginx,replicationController=myNginx

2a1c9d49-1a2f-11e4-80f8-0800279696e1 dockerfile/nginx 10.245.2.4/ name=myNginx,replicationController=myNginx
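To see at a glance how pods are spread over the minions, the `list /pods` table can be parsed. A Python sketch, assuming the whitespace-separated layout shown here where every row has exactly four non-empty fields (Name, Image(s), Host, Labels) and no field contains spaces; `parse_pod_rows` and `pods_per_host` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List


# Hypothetical helper: parses the text printed by `cluster/kubecfg.sh list /pods`.
def parse_pod_rows(text: str) -> List[Dict]:
    pods = []
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines, the header row and the '----------' separator row.
        if len(fields) != 4 or fields[0] in ("Name", "----------"):
            continue
        name, image, host, labels = fields
        pods.append({
            "name": name,
            "image": image,
            "host": host.rstrip("/"),  # "10.245.2.2/" -> "10.245.2.2", "/" -> ""
            "labels": dict(kv.split("=", 1) for kv in labels.split(",")),
        })
    return pods


# Hypothetical helper: number of pods per minion.
def pods_per_host(pods: List[Dict]) -> Counter:
    return Counter(pod["host"] for pod in pods)
```

For the output above, each of 10.245.2.2, 10.245.2.3 and 10.245.2.4 hosts exactly one myNginx pod.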

% cluster/kubecfg.sh list /services

Name Labels Selector Port

---------- ---------- ---------- ----------

% cluster/kubecfg.sh list /replicationControllers

Name Image(s) Selector Replicas

---------- ---------- ---------- ----------

myNginx dockerfile/nginx name=myNginx 3

Stopping the VMs

% cluster/kube-down.sh

Bringing down cluster using provider: vagrant

==> minion-3: Forcing shutdown of VM...

==> minion-3: Destroying VM and associated drives...

==> minion-3: Running cleanup tasks for 'shell' provisioner...

==> minion-2: Forcing shutdown of VM...

==> minion-2: Destroying VM and associated drives...

==> minion-2: Running cleanup tasks for 'shell' provisioner...

==> minion-1: Forcing shutdown of VM...

==> minion-1: Destroying VM and associated drives...

==> minion-1: Running cleanup tasks for 'shell' provisioner...

==> master: Forcing shutdown of VM...

==> master: Destroying VM and associated drives...

==> master: Running cleanup tasks for 'shell' provisioner...

Done

Trying the GuestBook example

Start the VMs with

% vagrant up

and then follow the steps in the GuestBook example README.

Step 1. Start the redis master

Step One: Turn up the redis master.

% cat examples/guestbook/redis-master.json

{

"id": "redis-master-2",

"kind": "Pod",

"apiVersion": "v1beta1",

"desiredState": {

"manifest": {

"version": "v1beta1",

"id": "redis-master-2",

"containers": [{

"name": "master",

"image": "dockerfile/redis",

"ports": [{

"containerPort": 6379,

"hostPort": 6379

}]

}]

}

},

"labels": {

"name": "redis-master"

}

}

% cluster/kubecfg.sh -c examples/guestbook/redis-master.json create pods

I0802 19:58:50.330867 72493 request.go:249] Waiting for completion of /operations/1

I0802 19:59:10.338596 72493 request.go:249] Waiting for completion of /operations/1

…(omitted)…

I0802 20:03:30.366518 72493 request.go:249] Waiting for completion of /operations/1

Name Image(s) Host Labels

---------- ---------- ---------- ----------

redis-master-2 dockerfile/redis / name=redis-master

Listing the pods

% cluster/kubecfg.sh list pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

redis-master-2 dockerfile/redis 10.245.2.2/ name=redis-master

Step 2. Start the master service

Step Two: Turn up the master service.

% cat examples/guestbook/redis-master-service.json

{

"id": "redismaster",

"kind": "Service",

"apiVersion": "v1beta1",

"port": 10000,

"selector": {

"name": "redis-master"

}

}

% cluster/kubecfg.sh -c examples/guestbook/redis-master-service.json create services

Name Labels Selector Port

---------- ---------- ---------- ----------

redismaster name=redis-master 10000

Listing the services

% cluster/kubecfg.sh list services

Name Labels Selector Port

---------- ---------- ---------- ----------

redismaster name=redis-master 10000
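The service finds its pods through the `selector` in redis-master-service.json: a pod belongs to the service when it carries every key/value pair in the selector, and extra labels on the pod do not matter. A Python sketch of this equality-based matching; `parse_labels` and `selector_matches` are hypothetical helpers, not Kubernetes code.

```python
from typing import Dict


# Hypothetical helper: "name=redisslave,replicationController=x" -> dict.
def parse_labels(spec: str) -> Dict[str, str]:
    return dict(pair.split("=", 1) for pair in spec.split(",") if pair)


# Hypothetical helper: every selector key/value must appear in the pod's labels;
# labels the selector does not mention are ignored.
def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    return all(labels.get(key) == value for key, value in selector.items())
```

So the redismaster service (selector `name=redis-master`) picks up redis-master-2 but not the slave pods labeled `name=redisslave,replicationController=redisSlaveController`.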

Step 3. Start the slave pods

Step Three: Turn up the replicated slave pods.

% cat examples/guestbook/redis-slave-controller.json

{

"id": "redisSlaveController",

"kind": "ReplicationController",

"apiVersion": "v1beta1",

"desiredState": {

"replicas": 2,

"replicaSelector": {"name": "redisslave"},

"podTemplate": {

"desiredState": {

"manifest": {

"version": "v1beta1",

"id": "redisSlaveController",

"containers": [{

"name": "slave",

"image": "brendanburns/redis-slave",

"ports": [{"containerPort": 6379, "hostPort": 6380}]

}]

}

},

"labels": {"name": "redisslave"}

}},

"labels": {"name": "redisslave"}

}

% cluster/kubecfg.sh -c examples/guestbook/redis-slave-controller.json create replicationControllers

I0802 20:36:54.752954 72778 request.go:249] Waiting for completion of /operations/3

Name Image(s) Selector Replicas

---------- ---------- ---------- ----------

redisSlaveController brendanburns/redis-slave name=redisslave 2

Listing the replicationControllers

% cluster/kubecfg.sh list replicationControllers

Name Image(s) Selector Replicas

---------- ---------- ---------- ----------

redisSlaveController brendanburns/redis-slave name=redisslave 2

Listing the pods

% cluster/kubecfg.sh list pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

redis-master-2 dockerfile/redis 10.245.2.2/ name=redis-master

53143cc1-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.2/ name=redisslave,replicationController=redisSlaveController

531338b3-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.3/ name=redisslave,replicationController=redisSlaveController
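Two slave pods now exist because redisSlaveController has `replicas: 2`. Conceptually, a replication controller compares the desired count with the pods currently matching its replicaSelector and creates or deletes pods to close the gap. The following is a conceptual Python sketch of one such step, not Kubernetes' actual controller code; `reconcile` is a hypothetical name, and which surplus pods get deleted is an arbitrary choice here.

```python
from typing import List, Tuple


# Hypothetical, conceptual sketch of one replication-controller step.
def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """Return (pods to create, names of pods to delete) for the matching pods."""
    diff = desired - len(running)
    if diff > 0:
        return diff, []
    # diff <= 0: delete the surplus (none when the count already matches).
    return 0, running[:-diff]
```

Right after `create replicationControllers`, no slave pods existed, so a step like this would ask for two new pods.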

Step 4. Start the redis slave service

Step Four: Create the redis slave service.

% cat examples/guestbook/redis-slave-service.json

{

"id": "redisslave",

"kind": "Service",

"apiVersion": "v1beta1",

"port": 10001,

"labels": {

"name": "redisslave"

},

"selector": {

"name": "redisslave"

}

}

% cluster/kubecfg.sh -c examples/guestbook/redis-slave-service.json create services

Name Labels Selector Port

---------- ---------- ---------- ----------

redisslave name=redisslave name=redisslave 10001

Listing the services

% cluster/kubecfg.sh list services

Name Labels Selector Port

---------- ---------- ---------- ----------

redismaster name=redis-master 10000

redisslave name=redisslave name=redisslave 10001

Step 5. Create the frontend pods

Step Five: Create the frontend pod.

% cat examples/guestbook/frontend-controller.json

{

"id": "frontendController",

"kind": "ReplicationController",

"apiVersion": "v1beta1",

"desiredState": {

"replicas": 3,

"replicaSelector": {"name": "frontend"},

"podTemplate": {

"desiredState": {

"manifest": {

"version": "v1beta1",

"id": "frontendController",

"containers": [{

"name": "php-redis",

"image": "brendanburns/php-redis",

"ports": [{"containerPort": 80, "hostPort": 8000}]

}]

}

},

"labels": {"name": "frontend"}

}},

"labels": {"name": "frontend"}

}

% cluster/kubecfg.sh -c examples/guestbook/frontend-controller.json create replicationControllers

I0802 20:41:33.421541 72831 request.go:249] Waiting for completion of /operations/7

Name Image(s) Selector Replicas

---------- ---------- ---------- ----------

frontendController brendanburns/php-redis name=frontend 3

Listing the pods

% cluster/kubecfg.sh list pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

redis-master-2 dockerfile/redis 10.245.2.2/ name=redis-master

53143cc1-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.2/ name=redisslave,replicationController=redisSlaveController

f4106c62-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.2/ name=frontend,replicationController=frontendController

531338b3-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.3/ name=redisslave,replicationController=redisSlaveController

f410433e-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.3/ name=frontend,replicationController=frontendController

f4103e89-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.4/ name=frontend,replicationController=frontendController
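Each container in these examples publishes a fixed hostPort on its minion (6379 for the master, 6380 for the slaves, 8000 for the frontend), and a host port can only be bound once per machine. A Python sketch that checks the placement above for clashing (minion, hostPort) pairs; the port table is copied from the example JSON files, pod IDs are shortened, and `HOST_PORTS` and `host_port_conflicts` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical table: hostPort per image, taken from the guestbook JSON files.
HOST_PORTS: Dict[str, List[int]] = {
    "dockerfile/redis": [6379],
    "brendanburns/redis-slave": [6380],
    "brendanburns/php-redis": [8000],
}


# Hypothetical helper: placements are (pod name, image, host) tuples.
def host_port_conflicts(
    placements: Iterable[Tuple[str, str, str]]
) -> Dict[Tuple[str, int], List[str]]:
    """Return (host, hostPort) pairs claimed by more than one pod."""
    claims: Dict[Tuple[str, int], List[str]] = defaultdict(list)
    for name, image, host in placements:
        for port in HOST_PORTS.get(image, []):
            claims[(host, port)].append(name)
    return {key: names for key, names in claims.items() if len(names) > 1}
```

For the six pods listed above the result is empty, so the placement itself has no port clashes.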

Step 6. Start the frontend service

The GuestBook example README has no Step 6, but a frontend-service.json file has been added to the example, so I started it as well.

% cat examples/guestbook/frontend-service.json

{

"id": "frontend",

"kind": "Service",

"apiVersion": "v1beta1",

"port": 9998,

"selector": {

"name": "frontend"

}

}

% cluster/kubecfg.sh -c examples/guestbook/frontend-service.json create services

Name Labels Selector Port

---------- ---------- ---------- ----------

frontend name=frontend 9998

Listing the services

% cluster/kubecfg.sh list services

Name Labels Selector Port

---------- ---------- ---------- ----------

redismaster name=redis-master 10000

redisslave name=redisslave name=redisslave 10001

frontend name=frontend 9998

Accessing the PHP frontend from OS X: it does not quite work

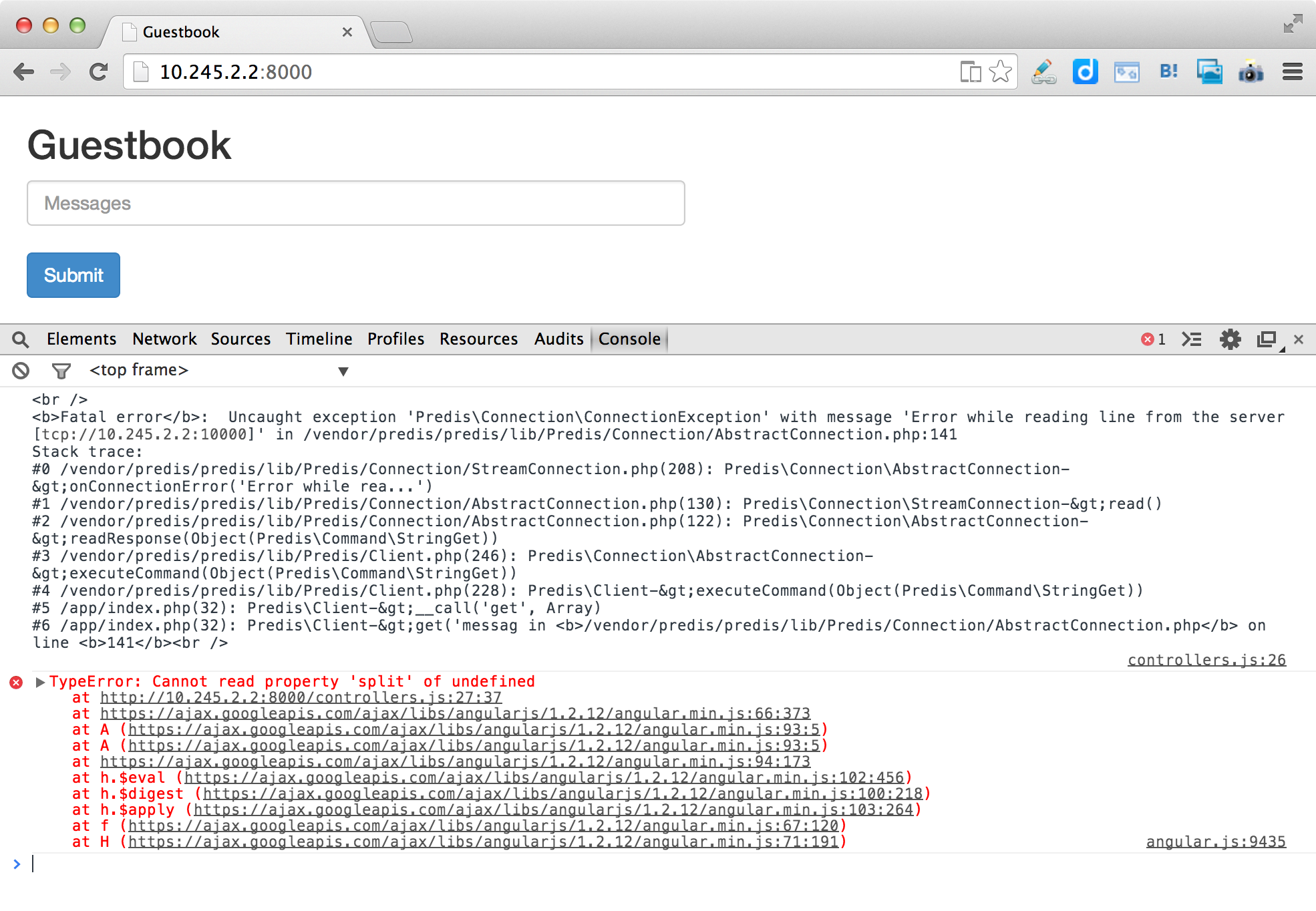

The GuestBook page displayed at http://10.245.2.2:8000/, but errors appeared in the JavaScript console.

<br />

<b>Fatal error</b>: Uncaught exception 'Predis\Connection\ConnectionException' with message 'Error while reading line from the server [tcp://10.245.2.2:10000]' in /vendor/predis/predis/lib/Predis/Connection/AbstractConnection.php:141

Stack trace:

#0 /vendor/predis/predis/lib/Predis/Connection/StreamConnection.php(208): Predis\Connection\AbstractConnection->onConnectionError('Error while rea...')

#1 /vendor/predis/predis/lib/Predis/Connection/AbstractConnection.php(130): Predis\Connection\StreamConnection->read()

#2 /vendor/predis/predis/lib/Predis/Connection/AbstractConnection.php(122): Predis\Connection\AbstractConnection->readResponse(Object(Predis\Command\StringGet))

#3 /vendor/predis/predis/lib/Predis/Client.php(246): Predis\Connection\AbstractConnection->executeCommand(Object(Predis\Command\StringGet))

#4 /vendor/predis/predis/lib/Predis/Client.php(228): Predis\Client->executeCommand(Object(Predis\Command\StringGet))

#5 /app/index.php(32): Predis\Client->__call('get', Array)

#6 /app/index.php(32): Predis\Client->get('messag in <b>/vendor/predis/predis/lib/Predis/Connection/AbstractConnection.php</b> on line <b>141</b><br />

controllers.js:26

TypeError: Cannot read property 'split' of undefined

at http://10.245.2.2:8000/controllers.js:27:37

at https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:66:373

at A (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:93:5)

at A (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:93:5)

at https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:94:173

at h.$eval (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:102:456)

at h.$digest (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:100:218)

at h.$apply (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:103:264)

at f (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:67:120)

at H (https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js:71:191) angular.js:9435

In addition, http://10.245.2.3:8000/ and http://10.245.2.4:8000/ did not display at all.
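Rather than switching between the browser, curl and redis-cli, the TCP-level reachability of every relevant port can be checked from one place. A Python sketch using only the standard socket module; `probe` and `probe_all` are hypothetical names, and the result only tells you whether a TCP connection could be opened, not whether the application behind it works.

```python
import socket
from typing import Iterable, List, Tuple


# Hypothetical helper: classify one TCP connection attempt.
def probe(host: str, port: int, timeout: float = 2.0) -> str:
    """Return 'open', 'refused', 'timeout' or 'error: ...'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"
    except socket.timeout:
        return "timeout"
    except OSError as exc:
        return f"error: {exc}"


# Hypothetical helper: probe a list of (host, port) targets in order.
def probe_all(targets: Iterable[Tuple[str, int]]) -> List[Tuple[str, int, str]]:
    return [(host, port, probe(host, port)) for host, port in targets]
```

For this setup, plausible targets are port 8000 on 10.245.2.2, 10.245.2.3 and 10.245.2.4, plus 6379, 6380 and the redismaster service port 10000 on 10.245.2.2.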

docker ps on the three minions

[vagrant@kubernetes-minion-1 ~]$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7ae1e6f07e89 brendanburns/php-redis:latest /bin/sh -c /run.sh 9 minutes ago Up 9 minutes k8s--php_-_redis--f4106c62_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--ddd9f50b

aabcab927686 kubernetes/pause:latest /pause 13 minutes ago Up 13 minutes 0.0.0.0:8000->80/tcp k8s--net--f4106c62_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--02e78631

da13451a67ff brendanburns/redis-slave:latest /bin/sh -c /run.sh 18 minutes ago Up 18 minutes k8s--slave--53143cc1_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--7bf78e7b

f2d7ce7d54c1 kubernetes/pause:latest /pause 18 minutes ago Up 18 minutes 0.0.0.0:6380->6379/tcp k8s--net--53143cc1_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--d85252cd

ff41389437a2 dockerfile/redis:latest redis-server /etc/re 52 minutes ago Up 52 minutes k8s--master--redis_-_master_-_2.etcd--141a207b

fcf5a8460f8f kubernetes/pause:latest /pause 54 minutes ago Up 54 minutes 0.0.0.0:6379->6379/tcp k8s--net--redis_-_master_-_2.etcd--bf3ef427

aee5bdeaa7da google/cadvisor:latest /usr/bin/cadvisor 58 minutes ago Up 58 minutes k8s--cadvisor--cadvisor_-_agent.file--cc0850c4

601b449bbde1 kubernetes/pause:latest /pause 58 minutes ago Up 58 minutes 0.0.0.0:4194->8080/tcp k8s--net--cadvisor_-_agent.file--93d705e0

[vagrant@kubernetes-minion-2 ~]$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a543b204b127 brendanburns/php-redis:latest /bin/sh -c /run.sh 9 minutes ago Up 9 minutes k8s--php_-_redis--f410433e_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--cc1369ed

579fb24725e2 kubernetes/pause:latest /pause 14 minutes ago Up 14 minutes 0.0.0.0:8000->80/tcp k8s--net--f410433e_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--77daec18

ffa589c1b016 brendanburns/redis-slave:latest /bin/sh -c /run.sh 16 minutes ago Up 16 minutes k8s--slave--531338b3_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--7247b34c

5fec0b5be657 kubernetes/pause:latest /pause 18 minutes ago Up 18 minutes 0.0.0.0:6380->6379/tcp k8s--net--531338b3_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--76f00279

d3148aa2d345 google/cadvisor:latest /usr/bin/cadvisor 56 minutes ago Up 56 minutes k8s--cadvisor--cadvisor_-_agent.file--95c488c8

eb89aeb12d01 kubernetes/pause:latest /pause 56 minutes ago Up 56 minutes 0.0.0.0:4194->8080/tcp k8s--net--cadvisor_-_agent.file--fe601c9e

[vagrant@kubernetes-minion-3 ~]$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

22bb175fd840 brendanburns/php-redis:latest /bin/sh -c /run.sh 9 minutes ago Up 9 minutes k8s--php_-_redis--f4103e89_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--26a38d58

e70479ff8eb5 kubernetes/pause:latest /pause 13 minutes ago Up 13 minutes 0.0.0.0:8000->80/tcp k8s--net--f4103e89_-_1a39_-_11e4_-_982a_-_0800279696e1.etcd--b6122286

117518d14a84 google/cadvisor:latest /usr/bin/cadvisor 52 minutes ago Up 52 minutes k8s--cadvisor--cadvisor_-_agent.file--b8173c07

e8361ff51542 kubernetes/pause:latest /pause 53 minutes ago Up 53 minutes 0.0.0.0:4194->8080/tcp k8s--net--cadvisor_-_agent.file--69a46847

iptables on the three minions

[vagrant@kubernetes-minion-1 ~]$ sudo iptables-save

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:58:31 2014

*nat

:PREROUTING ACCEPT [1075:64808]

:INPUT ACCEPT [1075:64808]

:OUTPUT ACCEPT [1390:86173]

:POSTROUTING ACCEPT [1400:86809]

:DOCKER - [0:0]

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -d 172.17.0.0/16 -j MASQUERADE

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 4194 -j DNAT --to-destination 172.17.0.2:8080

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 6379 -j DNAT --to-destination 172.17.0.3:6379

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 6380 -j DNAT --to-destination 172.17.0.4:6379

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 8000 -j DNAT --to-destination 172.17.0.5:80

COMMIT

# Completed on Sat Aug 2 11:58:31 2014

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:58:31 2014

*filter

:INPUT ACCEPT [38516:140055570]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [21581:1524308]

-A FORWARD -d 172.17.0.5/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 80 -j ACCEPT

-A FORWARD -d 172.17.0.4/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 6379 -j ACCEPT

-A FORWARD -d 172.17.0.3/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 6379 -j ACCEPT

-A FORWARD -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 8080 -j ACCEPT

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

COMMIT

# Completed on Sat Aug 2 11:58:31 2014

[vagrant@kubernetes-minion-2 ~]$ sudo iptables-save

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:59:09 2014

*nat

:PREROUTING ACCEPT [176:10952]

:INPUT ACCEPT [176:10952]

:OUTPUT ACCEPT [477:31145]

:POSTROUTING ACCEPT [482:31461]

:DOCKER - [0:0]

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -d 172.17.0.0/16 -j MASQUERADE

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 4194 -j DNAT --to-destination 172.17.0.2:8080

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 6380 -j DNAT --to-destination 172.17.0.3:6379

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 8000 -j DNAT --to-destination 172.17.0.4:80

COMMIT

# Completed on Sat Aug 2 11:59:09 2014

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:59:09 2014

*filter

:INPUT ACCEPT [37582:139996288]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [19068:1248976]

-A FORWARD -d 172.17.0.4/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 80 -j ACCEPT

-A FORWARD -d 172.17.0.3/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 6379 -j ACCEPT

-A FORWARD -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 8080 -j ACCEPT

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

COMMIT

# Completed on Sat Aug 2 11:59:09 2014

[vagrant@kubernetes-minion-3 ~]$ sudo iptables-save

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:59:34 2014

*nat

:PREROUTING ACCEPT [74:4796]

:INPUT ACCEPT [74:4796]

:OUTPUT ACCEPT [385:25721]

:POSTROUTING ACCEPT [386:25785]

:DOCKER - [0:0]

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -d 172.17.0.0/16 -j MASQUERADE

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 4194 -j DNAT --to-destination 172.17.0.2:8080

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 8000 -j DNAT --to-destination 172.17.0.3:80

COMMIT

# Completed on Sat Aug 2 11:59:34 2014

# Generated by iptables-save v1.4.19.1 on Sat Aug 2 11:59:34 2014

*filter

:INPUT ACCEPT [28327:139571804]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [15901:964005]

-A FORWARD -d 172.17.0.3/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 80 -j ACCEPT

-A FORWARD -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 8080 -j ACCEPT

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

COMMIT

# Completed on Sat Aug 2 11:59:34 2014

IP address information on the three minions

[vagrant@kubernetes-minion-1 ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: p2p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:96:96:e1 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 scope global dynamic p2p1

valid_lft 81951sec preferred_lft 81951sec

inet6 fe80::a00:27ff:fe96:96e1/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:f7:7b:90 brd ff:ff:ff:ff:ff:ff

inet 10.245.2.2/24 brd 10.245.2.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fef7:7b90/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::5484:7aff:fefe:9799/64 scope link

valid_lft forever preferred_lft forever

6: veth2246: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether f6:be:20:f4:c6:20 brd ff:ff:ff:ff:ff:ff

inet6 fe80::f4be:20ff:fef4:c620/64 scope link

valid_lft forever preferred_lft forever

8: veth78e8: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether fe:53:a9:29:e1:bf brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc53:a9ff:fe29:e1bf/64 scope link

valid_lft forever preferred_lft forever

10: vethc929: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether 36:20:45:da:b0:32 brd ff:ff:ff:ff:ff:ff

inet6 fe80::3420:45ff:feda:b032/64 scope link

valid_lft forever preferred_lft forever

12: veth0517: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether 2e:69:6d:98:6a:06 brd ff:ff:ff:ff:ff:ff

inet6 fe80::2c69:6dff:fe98:6a06/64 scope link

valid_lft forever preferred_lft forever

[vagrant@kubernetes-minion-2 ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: p2p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:96:96:e1 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 scope global dynamic p2p1

valid_lft 81987sec preferred_lft 81987sec

inet6 fe80::a00:27ff:fe96:96e1/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:39:62:e5 brd ff:ff:ff:ff:ff:ff

inet 10.245.2.3/24 brd 10.245.2.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe39:62e5/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::5484:7aff:fefe:9799/64 scope link

valid_lft forever preferred_lft forever

6: vethabb9: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether ca:f8:d8:a3:38:b6 brd ff:ff:ff:ff:ff:ff

inet6 fe80::c8f8:d8ff:fea3:38b6/64 scope link

valid_lft forever preferred_lft forever

8: veth3332: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether 5a:6e:c8:1f:b1:3b brd ff:ff:ff:ff:ff:ff

inet6 fe80::586e:c8ff:fe1f:b13b/64 scope link

valid_lft forever preferred_lft forever

10: veth22bd: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether 12:1f:6b:86:f3:fc brd ff:ff:ff:ff:ff:ff

inet6 fe80::101f:6bff:fe86:f3fc/64 scope link

valid_lft forever preferred_lft forever

[vagrant@kubernetes-minion-3 ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: p2p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:96:96:e1 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 scope global dynamic p2p1

valid_lft 82106sec preferred_lft 82106sec

inet6 fe80::a00:27ff:fe96:96e1/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:ea:0b:72 brd ff:ff:ff:ff:ff:ff

inet 10.245.2.4/24 brd 10.245.2.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:feea:b72/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::5484:7aff:fefe:9799/64 scope link

valid_lft forever preferred_lft forever

6: veth202a: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether 1e:22:87:aa:9e:a1 brd ff:ff:ff:ff:ff:ff

inet6 fe80::1c22:87ff:feaa:9ea1/64 scope link

valid_lft forever preferred_lft forever

8: veth1256: <BROADCAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master docker0 state UP group default qlen 1000

link/ether ce:f8:d6:c2:9e:6b brd ff:ff:ff:ff:ff:ff

inet6 fe80::ccf8:d6ff:fec2:9e6b/64 scope link

valid_lft forever preferred_lft forever
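Comparing the three outputs side by side, docker0 has the same address 172.17.42.1/16 and MAC 56:84:7a:fe:97:99 on every minion, and p2p1 has the same address 10.0.2.15/24 and MAC 08:00:27:96:96:e1 everywhere; whether this matters here is not established. A Python sketch that extracts MAC and IPv4 addresses per interface from `ip a` output so such comparisons can be scripted; `parse_ip_addr` is a hypothetical name, and IPv6 lines are ignored.

```python
import re
from typing import Dict, List, Optional


# Hypothetical helper: interface name -> {"mac": [...], "inet": [...]}.
def parse_ip_addr(text: str) -> Dict[str, Dict[str, List[str]]]:
    interfaces: Dict[str, Dict[str, List[str]]] = {}
    current: Optional[Dict[str, List[str]]] = None
    for raw in text.splitlines():
        line = raw.strip()
        # Interface header, e.g. "4: docker0: <BROADCAST,...> mtu 1500 ..."
        header = re.match(r"^\d+: ([^:@]+)[:@]", line)
        if header:
            current = interfaces.setdefault(header.group(1), {"mac": [], "inet": []})
            continue
        if current is None:
            continue
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "link/ether":
            current["mac"].append(fields[1])
        elif len(fields) >= 2 and fields[0] == "inet":  # "inet6" is skipped
            current["inet"].append(fields[1])
    return interfaces
```

Running it on each minion's output and comparing `result["docker0"]` or `result["enp0s8"]` across minions shows which values are shared and which are per-host.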

Connecting to redis from the host

According to the pod list, the redis master and one redis slave are running on 10.245.2.2 (minion-1), and another redis slave is running on 10.245.2.3 (minion-2).

% cluster/kubecfg.sh list pods

Name Image(s) Host Labels

---------- ---------- ---------- ----------

redis-master-2 dockerfile/redis 10.245.2.2/ name=redis-master

53143cc1-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.2/ name=redisslave,replicationController=redisSlaveController

f4106c62-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.2/ name=frontend,replicationController=frontendController

531338b3-1a39-11e4-982a-0800279696e1 brendanburns/redis-slave 10.245.2.3/ name=redisslave,replicationController=redisSlaveController

f410433e-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.3/ name=frontend,replicationController=frontendController

f4103e89-1a39-11e4-982a-0800279696e1 brendanburns/php-redis 10.245.2.4/ name=frontend,replicationController=frontendController

examples/guestbook/redis-master.json maps container port 6379 to host port 6379, while examples/guestbook/redis-slave-controller.json maps container port 6379 to host port 6380.

I could not connect to the redis master.

% redis-cli -h 10.245.2.2

Could not connect to Redis at 10.245.2.2:6379: Connection refused

not connected>

I could connect to the redis slave on minion-1, but a write attempt failed because it is a read-only slave.

% redis-cli -h 10.245.2.2 -p 6380

10.245.2.2:6380> keys *

(empty list or set)

10.245.2.2:6380> exit

% redis-cli -h 10.245.2.2 -p 6380

10.245.2.2:6380> set foo bar

(error) READONLY You can't write against a read only slave.

10.245.2.2:6380> exit

The slave on minion-2 behaved the same way.

% redis-cli -h 10.245.2.3 -p 6380

10.245.2.3:6380> keys *

(empty list or set)

(8.30s)

10.245.2.3:6380> exit

% redis-cli -h 10.245.2.3 -p 6380

10.245.2.3:6380> set foo bar

(error) READONLY You can't write against a read only slave.

(8.49s)

10.245.2.3:6380>
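If redis-cli is not installed on a machine (for example inside a minion), the same checks can be made with a raw socket, since redis speaks the simple RESP protocol: a command is sent as an array of bulk strings, and replies start with a type byte such as `+` (status) or `-` (error, as in the READONLY reply above). A minimal Python sketch; `encode_command` and `send_command` are hypothetical names, and `send_command` returns only the first reply line rather than fully parsing RESP.

```python
import socket


# Hypothetical helper: encode a command as a RESP array of bulk strings.
def encode_command(*args: str) -> bytes:
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


# Hypothetical helper: send one command, return the first line of the reply,
# e.g. "+PONG" or "-READONLY You can't write against a read only slave."
def send_command(host: str, port: int, *args: str, timeout: float = 5.0) -> str:
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(encode_command(*args))
        reply = b""
        while not reply.endswith(b"\r\n"):
            chunk = conn.recv(4096)
            if not chunk:
                break  # server closed the connection early
            reply += chunk
    return reply.split(b"\r\n", 1)[0].decode(errors="replace")
```

For example, `send_command("10.245.2.2", 6380, "PING")` should come back as `+PONG` if the slave is reachable, and `send_command("10.245.2.2", 6380, "SET", "foo", "bar")` should reproduce the READONLY error.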

Checking the VirtualBox state

List of the Kubernetes VMs

% VBoxManage list vms | grep kubernetes_

"kubernetes_master_1406976259765_56468" {a7eb2284-f2ff-43e6-b6de-b25c65562abd}

"kubernetes_minion-1_1406976619017_56753" {7140e1b8-c75d-488f-a666-98111ace0f95}

"kubernetes_minion-2_1406976757338_71574" {9949e607-cd99-4da6-8375-c26979e95815}

"kubernetes_minion-3_1406976897193_54508" {4086c1d0-f1e3-4843-a59f-94a2f060112f}

% VBoxManage showvminfo kubernetes_master_1406976259765_56468 | grep '^NIC'

NIC 1: MAC: 0800279696E1, Attachment: NAT, Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 1 Settings: MTU: 0, Socket (send: 64, receive: 64), TCP Window (send:64, receive: 64)

NIC 1 Rule(0): name = ssh, protocol = tcp, host ip = 127.0.0.1, host port = 2222, guest ip = , guest port = 22

NIC 2: MAC: 0800271F7E3D, Attachment: Host-only Interface 'vboxnet3', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 3: disabled

NIC 4: disabled

NIC 5: disabled

NIC 6: disabled

NIC 7: disabled

NIC 8: disabled

% VBoxManage showvminfo kubernetes_minion-1_1406976619017_56753 | grep '^NIC'

NIC 1: MAC: 0800279696E1, Attachment: NAT, Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 1 Settings: MTU: 0, Socket (send: 64, receive: 64), TCP Window (send:64, receive: 64)

NIC 1 Rule(0): name = ssh, protocol = tcp, host ip = 127.0.0.1, host port = 2200, guest ip = , guest port = 22

NIC 2: MAC: 080027F77B90, Attachment: Host-only Interface 'vboxnet4', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 3: disabled

NIC 4: disabled

NIC 5: disabled

NIC 6: disabled

NIC 7: disabled

NIC 8: disabled

% VBoxManage showvminfo kubernetes_minion-3_1406976897193_54508 | grep '^NIC'

NIC 1: MAC: 0800279696E1, Attachment: NAT, Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 1 Settings: MTU: 0, Socket (send: 64, receive: 64), TCP Window (send:64, receive: 64)

NIC 1 Rule(0): name = ssh, protocol = tcp, host ip = 127.0.0.1, host port = 2202, guest ip = , guest port = 22

NIC 2: MAC: 080027EA0B72, Attachment: Host-only Interface 'vboxnet4', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 3: disabled

NIC 4: disabled

NIC 5: disabled

NIC 6: disabled

NIC 7: disabled

NIC 8: disabled
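In the output above, NIC 1 (NAT) reports the same MAC 0800279696E1 on master, minion-1 and minion-3, and NIC 2 of the master is attached to vboxnet3 while the minions use vboxnet4; whether either point is related to the failure is not established here. A Python sketch that parses the `NIC n: MAC: ..., Attachment: ...` lines of `VBoxManage showvminfo` and reports MACs shared between VMs, assuming the line format shown above; `parse_nics` and `shared_macs` are hypothetical names.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

# Matches e.g. "NIC 2: MAC: 0800271F7E3D, Attachment: Host-only Interface 'vboxnet3', ..."
NIC_LINE = re.compile(
    r"^NIC (?P<nic>\d+): MAC: (?P<mac>[0-9A-F]{12}), Attachment: (?P<attach>[^,]+),"
)


# Hypothetical helper: NIC number -> (MAC, attachment); disabled NICs are skipped.
def parse_nics(showvminfo: str) -> Dict[int, Tuple[str, str]]:
    nics = {}
    for line in showvminfo.splitlines():
        match = NIC_LINE.match(line.strip())
        if match:
            nics[int(match["nic"])] = (match["mac"], match["attach"])
    return nics


# Hypothetical helper: vms maps VM name -> showvminfo text.
def shared_macs(vms: Dict[str, str]) -> Dict[str, List[str]]:
    """Return MAC addresses that appear on more than one VM."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for vm, text in vms.items():
        for mac, _attach in parse_nics(text).values():
            owners[mac].append(vm)
    return {mac: names for mac, names in owners.items() if len(names) > 1}
```

Feeding it the `VBoxManage showvminfo <vm>` output of all four VMs (including minion-2, which is not shown above) gives the complete picture.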

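HTTP access test from minion-1

In the curl output below, 接続を拒否されました is the Japanese locale's message for "Connection refused".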

[vagrant@kubernetes-minion-1 ~]$ curl http://10.245.2.2:8000

<html ng-app="redis">

<head>

<title>Guestbook</title>

<link rel="stylesheet" href="//netdna.bootstrapcdn.com/bootstrap/3.1.1/css/bootstrap.min.css">

<script src="https://ajax.googleapis.com/ajax/libs/angularjs/1.2.12/angular.min.js"></script>

<script src="/controllers.js"></script>

<script src="ui-bootstrap-tpls-0.10.0.min.js"></script>

</head>

<body ng-controller="RedisCtrl">

<div style="width: 50%; margin-left: 20px">

<h2>Guestbook</h2>

<form>

<fieldset>

<input ng-model="msg" placeholder="Messages" class="form-control" type="text" name="input"><br>

<button type="button" class="btn btn-primary" ng-click="controller.onRedis()">Submit</button>

</fieldset>

</form>

<div>

<div ng-repeat="msg in messages">

{{msg}}

</div>

</div>

</div>

</body>

</html>

[vagrant@kubernetes-minion-1 ~]$ curl http://10.245.2.3:8000

curl: (7) Failed connect to 10.245.2.3:8000; 接続を拒否されました

[vagrant@kubernetes-minion-1 ~]$ curl http://10.245.2.4:8000

curl: (7) Failed connect to 10.245.2.4:8000; 接続を拒否されました

Conclusion

I don't know! Judging from iptables and docker ps, if http://10.245.2.2:8000 can be displayed, it seems like http://10.245.2.3:8000 and http://10.245.2.4:8000 should be displayable too.

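It seems it was still too early for an amateur like me to take this on. If anyone gets it working, please write an article about it. I'm looking forward to it!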