Purpose

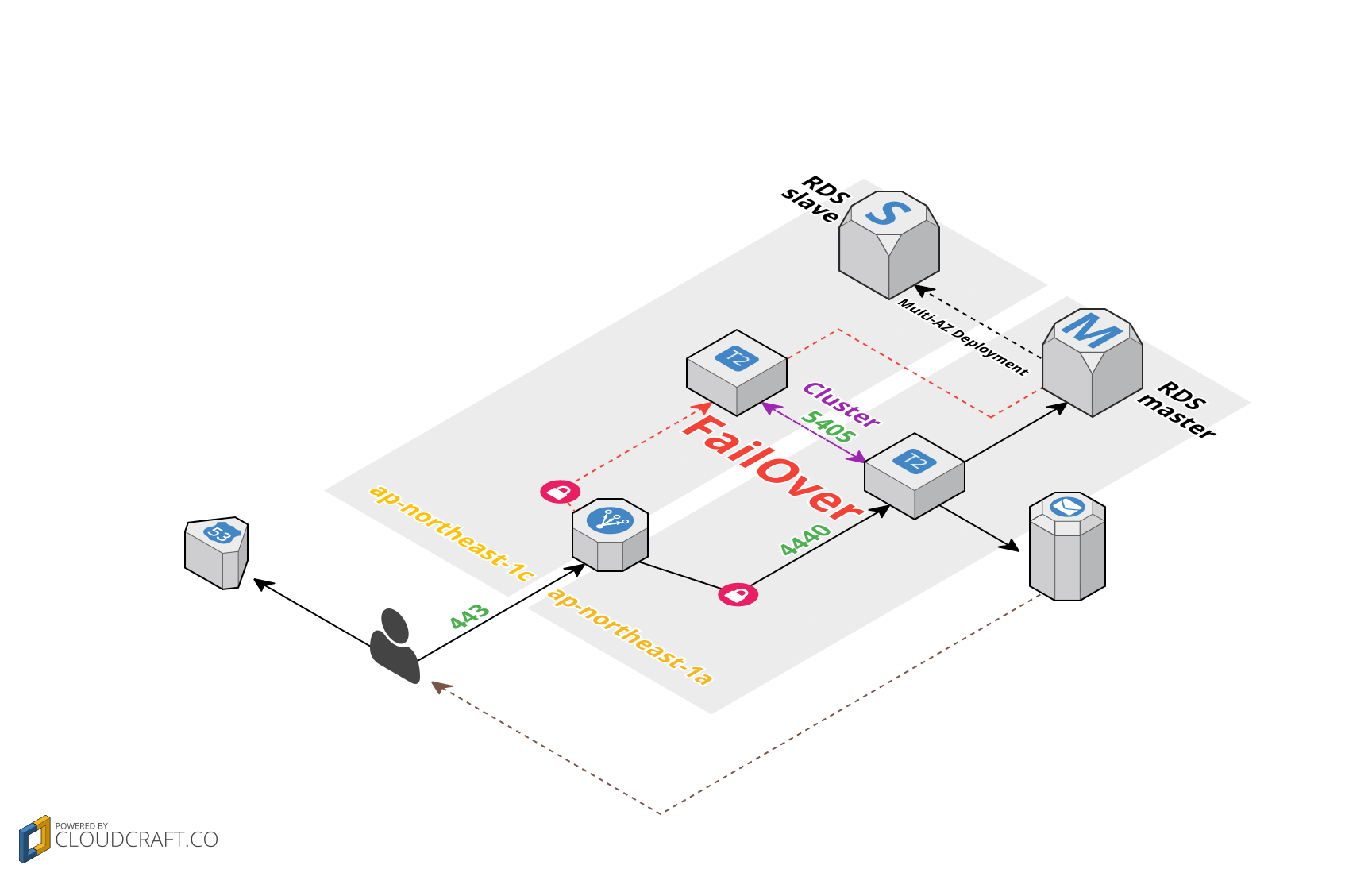

- Redundant configuration across AZs (Active-Standby)

- Rely on the ELB health check instead of operating with a floating IP

- A recently updated feature makes this operation possible: Register or De-Register EC2 Instances for Your Load Balancer

- Scaling Rundeck

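Diagram

Environment

- An EC2 instance is added to the configuration from "Rundeck - Setup (without H/A) -"

- Active/Standby configuration (the Standby server is running, but the process that triggers F/O is stopped on it)

- The F/O trigger is rundeckd stopping

- Corosync handles cluster control and Pacemaker handles resource control

- Rundeck stores Projects, and the Resources that describe the nodes belonging to them, on the server rather than in the DB, so the Primary and Secondary must be kept in sync (DRBD, rsync, etc.)

- The design of ELB, SES, RDS, Route53, DRBD, and the VPC is not covered here

Setup

Installation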

$ sudo yum install http://iij.dl.osdn.jp/linux-ha/63919/pacemaker-repo-1.1.13-1.1.el6.x86_64.rpm

$ sudo yum -c pacemaker.repo install pacemaker corosync pcs

Cluster control configuration

- Create the authkey on the Primary

$ cd /etc/corosync

$ sudo corosync-keygen -l

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/urandom.

Writing corosync key to /etc/corosync/authkey.

# Create a key pair with no passphrase, used between the Primary and Secondary

$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/ec2-user/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/ec2-user/.ssh/id_rsa.

Your public key has been saved in /home/ec2-user/.ssh/id_rsa.pub.

The key fingerprint is:

d1:47:2f:98:fe:17:ab:02:f1:9f:14:cb:2c:73:b1:e5 ec2-user@ip-10-0-3-6

The key's randomart image is:

+--[ RSA 2048]----+

| . |

| . + . |

| . + o . |

| .o .o.. |

| So.o B. |

| . +.B Eo |

| . *..o |

| . oo |

| .. |

+-----------------+

$ sudo chown ec2-user authkey

- Create the authkey on the Secondary

$ cd /etc/corosync

$ sudo touch authkey

$ sudo chown ec2-user authkey && sudo chmod 600 authkey

# Add the public key created on the Primary to ~/.ssh/authorized_keys

- Copy the authkey from the Primary to the Secondary

$ scp -i /home/ec2-user/.ssh/id_rsa /etc/corosync/authkey ec2-user@10.0.3.6:/etc/corosync/authkey

authkey 100% 128 0.1KB/s 00:00

- conf settings

- Primary

corosync.conf

$ cd /etc/corosync

$ sudo cp corosync.conf.example.udpu corosync.conf

# Please read the corosync.conf.5 manual page

totem {

version: 2

crypto_cipher: none

crypto_hash: none

interface {

ringnumber: 0

bindnetaddr: 10.0.1.23

mcastport: 5405

ttl: 1

}

transport: udpu

}

logging {

fileline: off

to_logfile: yes

to_syslog: yes

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: QUORUM

debug: off

}

}

nodelist {

node {

ring0_addr: 10.0.1.23

nodeid: 1

}

node {

ring0_addr: 10.0.3.6

nodeid: 2

}

}

quorum {

# Enable and configure quorum subsystem (default: off)

# see also corosync.conf.5 and votequorum.5

provider: corosync_votequorum

expected_votes: 2

}

- conf settings

- Secondary

# Please read the corosync.conf.5 manual page

totem {

version: 2

crypto_cipher: none

crypto_hash: none

interface {

ringnumber: 0

bindnetaddr: 10.0.3.6

mcastport: 5405

ttl: 1

}

transport: udpu

}

logging {

fileline: off

to_logfile: yes

to_syslog: yes

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: QUORUM

debug: off

}

}

nodelist {

node {

ring0_addr: 10.0.1.23

nodeid: 1

}

node {

ring0_addr: 10.0.3.6

nodeid: 2

}

}

quorum {

# Enable and configure quorum subsystem (default: off)

# see also corosync.conf.5 and votequorum.5

provider: corosync_votequorum

expected_votes: 2

}
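The two corosync.conf files above differ only in `bindnetaddr`. A minimal sketch of generating both from one template follows; `render_corosync_conf` is a hypothetical helper name (not a corosync tool), and the values are copied from the files shown above.

```shell
#!/bin/sh
# Sketch only: render_corosync_conf is a hypothetical helper, not a corosync tool.
# Usage: render_corosync_conf <this node's address> <node address>...
render_corosync_conf() {
    bind_addr=$1    # the only value that differs between the two nodes
    shift           # remaining arguments are the cluster members, in nodeid order

    cat <<EOF
totem {
    version: 2
    crypto_cipher: none
    crypto_hash: none
    interface {
        ringnumber: 0
        bindnetaddr: ${bind_addr}
        mcastport: 5405
        ttl: 1
    }
    transport: udpu
}

logging {
    fileline: off
    to_logfile: yes
    to_syslog: yes
    logfile: /var/log/cluster/corosync.log
    debug: off
    timestamp: on
    logger_subsys {
        subsys: QUORUM
        debug: off
    }
}

nodelist {
EOF

    # One node block per member; nodeid counts up from 1.
    nodeid=1
    for addr in "$@"; do
        cat <<EOF
    node {
        ring0_addr: ${addr}
        nodeid: ${nodeid}
    }
EOF
        nodeid=$((nodeid + 1))
    done

    # expected_votes is set to the number of members passed in.
    cat <<EOF
}

quorum {
    provider: corosync_votequorum
    expected_votes: $#
}
EOF
}
```

For example, `render_corosync_conf 10.0.1.23 10.0.1.23 10.0.3.6` would produce the Primary's file and `render_corosync_conf 10.0.3.6 10.0.1.23 10.0.3.6` the Secondary's. Writing the output to a scratch file and reviewing it before copying it to /etc/corosync/corosync.conf is probably safer than redirecting there directly.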

- Allow 5405 (UDP) in the SG Inbound rules

- Verify startup

- Start the services on the Primary and Secondary

$ sudo service corosync start && sudo service pacemaker start

$ sudo chkconfig corosync on&&sudo chkconfig pacemaker on&&sudo chkconfig --list | egrep -i 'corosync|pacemaker'

corosync 0:off 1:off 2:on 3:on 4:on 5:on 6:off

pacemaker 0:off 1:off 2:on 3:on 4:on 5:on 6:off

$ sudo crm_mon -1

# The Current DC (designated controller) is the server whose services were started first.

# Here the Primary is the DC.

Last updated: Sat Dec 26 02:59:16 2015

Last change: Sat Dec 26 02:28:53 2015 by hacluster via crmd on ip-10-0-1-23

Stack: corosync

Current DC: ip-10-0-1-23 - partition with quorum

Version: 1.1.13-6052cd1

2 Nodes configured

0 Resources configured

Online: [ ip-10-0-1-23 ip-10-0-3-6 ]
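When this check needs to be scripted, the DC can be pulled out of the `crm_mon -1` text. The sketch below assumes the output format shown above (Pacemaker 1.1.13); `current_dc` is a hypothetical helper name.

```shell
# current_dc is a hypothetical helper name. It reads `crm_mon -1` text on stdin
# and prints the node named on the "Current DC:" line.
# Assumes the line looks like: "Current DC: <node> - partition with quorum".
current_dc() {
    awk '$1 == "Current" && $2 == "DC:" { print $3; exit }'
}
```

Usage would be `sudo crm_mon -1 | current_dc`. Other Pacemaker versions may format this line differently, so the field positions should be checked against the actual output first.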

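Resource control configuration

- The F/O trigger is rundeckd stopping

- Configuring this on the Primary alone is enough.

# "no-quorum-policy: ignore" makes the cluster ignore quorum when a split brain occurs (normally only the nodes that can reach a majority keep operating as the HA cluster),

# so a node keeps running as if it had obtained quorum; this is required for a two-node configuration

# STONITH (forcibly powering off an uncontrollable server) is explicitly disabled, because in a two-node configuration bad timing can cause both nodes to shut each other down

# Fail over after a single resource failure

# The resource monitoring interval is 30 seconds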

$ sudo pcs property set no-quorum-policy=ignore

$ sudo pcs property set stonith-enabled=false

$ sudo pcs resource defaults resource-stickiness="INFINITY" migration-threshold="1"

$ sudo pcs resource create resource1 lsb:rundeckd op monitor interval="30"

$ sudo pcs resource group add rundeckd resource1

$ sudo pcs property show

Cluster Properties:

cluster-infrastructure: corosync

dc-version: 1.1.13-6052cd1

have-watchdog: false

no-quorum-policy: ignore

stonith-enabled: false

$ sudo pcs resource show

resource1 (lsb:rundeckd): Started ip-10-0-1-23

- State when clustering is working normally

- The Primary is the DC, in the partition with quorum

$ sudo crm_mon -1

Last updated: Sat Dec 26 07:38:10 2015

Last change: Sat Dec 26 05:19:08 2015 by root via cibadmin on ip-10-0-1-23

Stack: corosync

Current DC: ip-10-0-1-23 - partition with quorum

Version: 1.1.13-6052cd1

2 Nodes configured

1 Resources configured

Online: [ ip-10-0-1-23 ip-10-0-3-6 ]

resource1 (lsb:rundeckd): Started ip-10-0-1-23

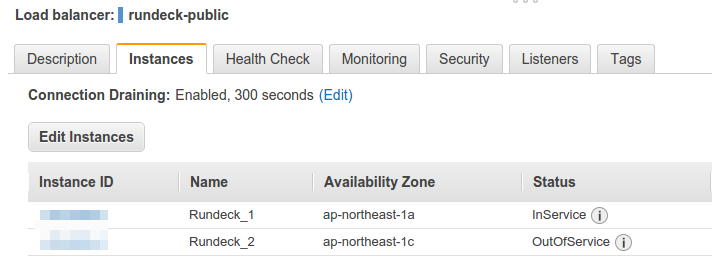

Operation check

[ec2-user@ip-10-0-1-23 ~]$ sudo service rundeckd stop

Stopping rundeckd: [ OK ]

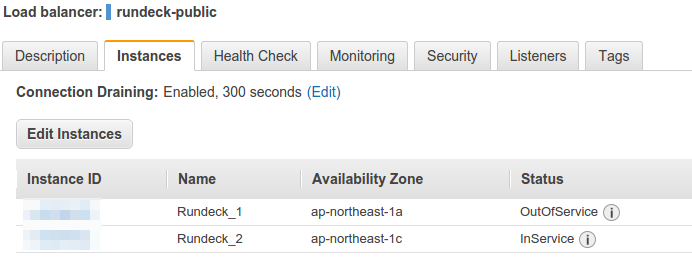

- F/O

- Confirm that the process going down on the Primary was detected and that the resource started on the Secondary

$ sudo crm_mon

Last updated: Sun Dec 27 05:01:47 2015

Last change: Sat Dec 26 05:19:08 2015 by root via cibadmin on ip-10-0-1-23

Stack: corosync

Current DC: ip-10-0-1-23 - partition with quorum

Version: 1.1.13-6052cd1

2 Nodes configured

1 Resources configured

Online: [ ip-10-0-1-23 ip-10-0-3-6 ]

resource1 (lsb:rundeckd): Started ip-10-0-3-6

Failed actions:

resource1_monitor_30000 on ip-10-0-1-23 'not running' (7): call=13, status=complete, exit-reason='none', last-rc-change='Sun Dec 27 05:01:21 2015', queued=0ms, exec=0ms
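To confirm the failover from a script rather than by eye, the node running the resource can be extracted from the `crm_mon -1` text. This is a sketch that assumes the output format shown above; `resource_node` is a hypothetical helper name.

```shell
# resource_node is a hypothetical helper name. It reads `crm_mon -1` text on
# stdin and prints the node on which the named resource is reported as Started.
# Lines under "Failed actions:" do not match, because their first field is
# e.g. "resource1_monitor_30000" rather than the bare resource name.
resource_node() {
    awk -v res="$1" '$1 == res && $3 == "Started" { print $4; exit }'
}
```

Usage would be `sudo crm_mon -1 | resource_node resource1`, which should print `ip-10-0-3-6` for the output above. It prints nothing if the resource is not in the Started state.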

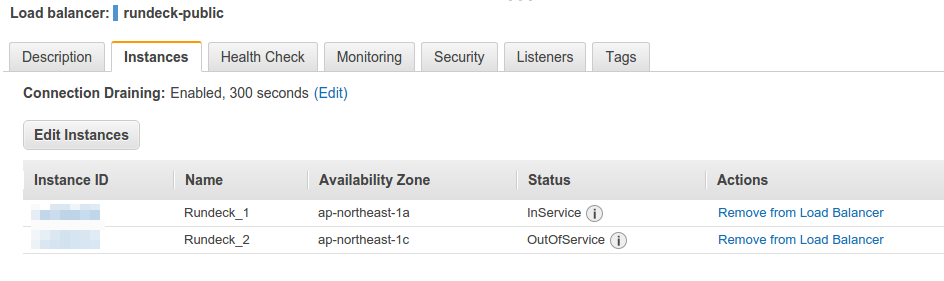

ELB

- F/B

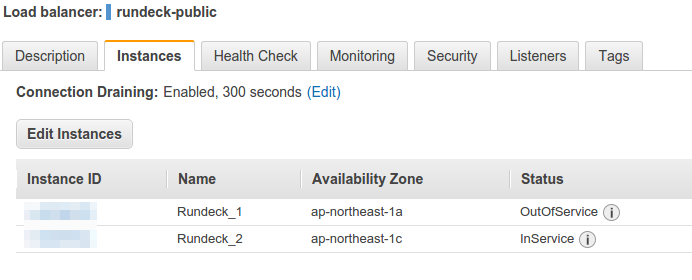

- Restart the cluster services on the Primary so that the Secondary becomes the DC

$ sudo service corosync restart&&sudo service pacemaker restart

$ sudo crm_mon -1

Last updated: Sun Dec 27 16:43:30 2015

Last change: Sat Dec 26 05:19:08 2015 by root via cibadmin on ip-10-0-1-23

Stack: corosync

Current DC: ip-10-0-3-6 - partition with quorum

Version: 1.1.13-6052cd1

2 Nodes configured

1 Resources configured

Online: [ ip-10-0-1-23 ip-10-0-3-6 ]

resource1 (lsb:rundeckd): Started ip-10-0-3-6

- Fire the trigger on the Secondary

- Restart the cluster services so that the Primary becomes the DC again

$ sudo service rundeckd stop

Stopping rundeckd: [ OK ]

[ec2-user@ip-10-0-3-6 ~]$ sudo service corosync restart&&sudo service pacemaker restart

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:. [ OK ]

Starting Corosync Cluster Engine (corosync): [ OK ]

Pacemaker Cluster Manager is already stopped [ OK ]

Starting Pacemaker Cluster Manager

$ sudo crm_mon -1

Last updated: Sun Dec 27 16:53:45 2015

Last change: Sat Dec 26 05:19:08 2015 by root via cibadmin on ip-10-0-1-23

Stack: corosync

Current DC: ip-10-0-1-23 - partition with quorum

Version: 1.1.13-6052cd1

2 Nodes configured

1 Resources configured

Online: [ ip-10-0-1-23 ip-10-0-3-6 ]

resource1 (lsb:rundeckd): Started ip-10-0-1-23