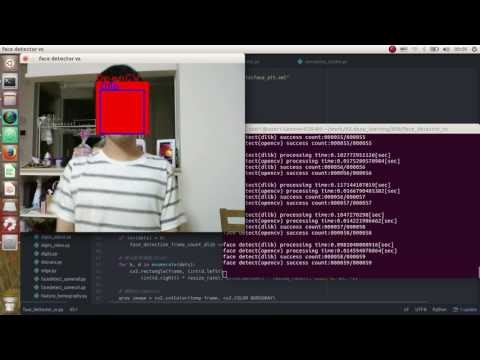

I compared face detection in dlib and OpenCV.

Videos like this pop up now and then, and I wanted to try it myself, so this is a quick experiment.

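In this quick test, dlib's frontal face detector seemed more robust to changes in face orientation and the like, while OpenCV's Haar cascade seemed faster (perhaps thanks to AdaBoost?).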
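The AdaBoost guess is only a guess, but one commonly cited reason Haar cascades are fast is the cascade structure itself: each stage is a boosted classifier (a weighted vote of weak classifiers), and most non-face windows are rejected by the first, cheapest stages, so later stages rarely run. The toy sketch below illustrates only that early-rejection idea in pure Python; the stages, features, and thresholds are hypothetical stand-ins, not OpenCV's trained `haarcascade_frontalface_alt.xml`.

```python
from typing import Callable, List, Sequence, Tuple

# A weak classifier maps a window (here just a list of numbers) to 0 or 1.
WeakClassifier = Callable[[Sequence[float]], int]
# A stage is a list of (weak_classifier, weight) pairs plus a stage threshold.
Stage = Tuple[List[Tuple[WeakClassifier, float]], float]


def evaluate_cascade(window: Sequence[float], stages: List[Stage]) -> Tuple[bool, int]:
    """Return (is_face, number_of_stages_evaluated) for one window."""
    for i, (weak_learners, threshold) in enumerate(stages, start=1):
        # Boosted stage score: weighted vote of the weak classifiers
        score = sum(weight * h(window) for h, weight in weak_learners)
        if score < threshold:
            return False, i  # rejected early: the remaining stages are skipped
    return True, len(stages)


# Hypothetical toy stages: a cheap first stage, then a slightly larger one
stages: List[Stage] = [
    ([(lambda w: int(w[0] > 0.5), 1.0)], 1.0),
    ([(lambda w: int(w[1] > 0.5), 0.6),
      (lambda w: int(w[2] > 0.5), 0.4)], 0.5),
]

print(evaluate_cascade([0.1, 0.9, 0.9], stages))  # (False, 1): rejected at stage 1
print(evaluate_cascade([0.9, 0.9, 0.1], stages))  # (True, 2): passes both stages
```

In a real detector this runs over many sliding windows per frame, so rejecting most of them after one or two stages is where the time is saved.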

If I end up using this at work, I plan to investigate in more detail.
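For a more careful speed comparison, single per-frame timings like the ones printed by the script below are noisy. A minimal sketch of a steadier measurement, assuming you collect frames into a list first: it uses `time.perf_counter()` (intended for measuring short intervals), skips warm-up calls, and reports median and mean time plus the fraction of frames with at least one detection. The names `benchmark` and `detect_fn` are hypothetical.

```python
import statistics
import time
from typing import Any, Callable, Dict, Iterable


def benchmark(detect_fn: Callable[[Any], Any], frames: Iterable[Any],
              warmup: int = 1) -> Dict[str, float]:
    """Time detect_fn on each frame; detect_fn must return a sized collection."""
    times = []
    hits = 0
    for i, frame in enumerate(frames):
        start = time.perf_counter()
        detections = detect_fn(frame)
        elapsed = time.perf_counter() - start
        if i < warmup:
            continue  # ignore warm-up calls (first-call overhead)
        times.append(elapsed)
        if len(detections) > 0:
            hits += 1
    if not times:
        raise ValueError("need more frames than warmup")
    return {
        "frames": len(times),
        "median_sec": statistics.median(times),
        "mean_sec": statistics.mean(times),
        "detection_rate": hits / len(times),
    }


# Usage with a dummy detector that "finds" a face in even-numbered frames
result = benchmark(lambda f: [f] if f % 2 == 0 else [], [0, 1, 2, 3, 4], warmup=1)
print(result["frames"], result["detection_rate"])  # 4 0.5
```

With the script's objects, `detect_fn` could be `lambda img: detector(img, 1)` for dlib or a lambda wrapping `cascade.detectMultiScale(...)` for OpenCV, run over the same frames so both detectors see identical input.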

dlib is easier to install on Linux, so I'm running this on Ubuntu.

It should also run on Windows with the same source code as long as dlib is installed... probably?
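Whether the same source runs on another OS mostly comes down to whether `cv2` and `dlib` are importable there. A small pre-flight check, sketched with only the standard library (`check_modules` is a hypothetical helper), reports this without actually importing the modules:

```python
import importlib.util
from typing import Dict, Iterable


def check_modules(names: Iterable[str] = ("cv2", "dlib")) -> Dict[str, bool]:
    """Report which top-level modules can be found, without importing them."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print("{}: {}".format(name, "found" if ok else "NOT found"))
```

Finding the module does not guarantee it loads (a compiled extension can still fail at import time), so this is only a first check.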

Here is the video.

Red boxes are OpenCV detections, and blue boxes are dlib detections.

https://www.youtube.com/watch?v=SQTXLfwlPjQ

Here is the source code.

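To run it, you need to place OpenCV's pretrained face cascade (included in OpenCV's data/haarcascades directory) under the same directory as the .py file, at this path:

→ ./data/haarcascades/haarcascade_frontalface_alt.xml

The script opens this path relative to the current working directory, so run it from the directory that contains the .py file.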
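Because a relative path like `./data/...` depends on where the script is launched from, a more robust option is to resolve the cascade relative to the script itself and optionally fall back to other directories. A minimal sketch, assuming the directory layout above; `find_cascade` is a hypothetical helper:

```python
import os
from typing import Iterable, Optional

CASCADE_NAME = "haarcascade_frontalface_alt.xml"


def find_cascade(script_dir: str, extra_dirs: Iterable[str] = (),
                 name: str = CASCADE_NAME) -> Optional[str]:
    """Return the first existing cascade file path, or None if none is found.

    Looks in <script_dir>/data/haarcascades first (the layout used here),
    then in any extra directories supplied by the caller.
    """
    candidates = [os.path.join(script_dir, "data", "haarcascades", name)]
    candidates += [os.path.join(d, name) for d in extra_dirs]
    for path in candidates:
        if os.path.isfile(path):
            return path
    return None
```

In the script this could be called as `find_cascade(os.path.dirname(os.path.abspath(__file__)))`. The pip `opencv-python` packages also expose a bundled cascade directory as `cv2.data.haarcascades`, which could be passed in `extra_dirs`; that attribute may be missing in other OpenCV builds, so treat it as an assumption to check.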

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
'''
face_landmark_detector.py

Usage:
    face_landmark_detector.py [<video source>] [<resize rate>]
'''
from __future__ import print_function

import sys
import time

import cv2
import dlib

# Video source: a numeric argument is a camera index, anything else a file path
try:
    fn = sys.argv[1]
    if fn.isdigit():
        fn = int(fn)
except IndexError:
    fn = 0

# Integer shrink factor applied before detection (1 = no resizing)
try:
    resize_rate = int(sys.argv[2])
except (IndexError, ValueError):
    resize_rate = 1

# Dlib
detector = dlib.get_frontal_face_detector()

# OpenCV (path is relative to the current working directory)
cascade_fn = "./data/haarcascades/haarcascade_frontalface_alt.xml"
cascade = cv2.CascadeClassifier(cascade_fn)
if cascade.empty():
    sys.exit("Failed to load cascade: " + cascade_fn)

video_input = cv2.VideoCapture(fn)

total_frame_count = 0
face_detection_frame_count_dlib = 0
face_detection_frame_count_opencv = 0

while video_input.isOpened():
    ret, frame = video_input.read()
    if not ret:  # end of the video or a read failure
        break
    total_frame_count += 1

    # Shrink the frame to reduce processing load (when a resize rate is given)
    height, width = frame.shape[:2]
    temp_frame = cv2.resize(frame, (int(width / resize_rate), int(height / resize_rate)))

    # Face detection (dlib); the second argument upsamples the image once
    start = time.time()
    dets = detector(temp_frame, 1)
    elapsed_time_dlib = time.time() - start
    if len(dets) > 0:
        face_detection_frame_count_dlib += 1

    # Draw detections (dlib): filled blue boxes, scaled back to the original frame
    for d in dets:
        cv2.rectangle(frame,
                      (int(d.left() * resize_rate), int(d.top() * resize_rate)),
                      (int(d.right() * resize_rate), int(d.bottom() * resize_rate)),
                      (255, 0, 0), -1)

    # Face detection (OpenCV)
    gray_image = cv2.cvtColor(temp_frame, cv2.COLOR_BGR2GRAY)
    gray_image = cv2.equalizeHist(gray_image)
    start = time.time()
    rects = cascade.detectMultiScale(gray_image, scaleFactor=1.3, minNeighbors=4,
                                     minSize=(30, 30), flags=cv2.CASCADE_SCALE_IMAGE)
    if len(rects) == 0:
        rects = []
    else:
        rects[:, 2:] += rects[:, :2]  # (x, y, w, h) -> (x1, y1, x2, y2)
    elapsed_time_opencv = time.time() - start
    if len(rects) > 0:
        face_detection_frame_count_opencv += 1

    # Draw detections (OpenCV): red label and filled red boxes
    for x1, y1, x2, y2 in rects:
        cv2.putText(frame, "OpenCV", (int(x1 * resize_rate), int(y1 * resize_rate)),
                    cv2.FONT_HERSHEY_PLAIN, 2.0, (0, 0, 255), thickness=2)
        cv2.rectangle(frame, (int(x1 * resize_rate), int(y1 * resize_rate)),
                      (int(x2 * resize_rate), int(y2 * resize_rate)), (0, 0, 255), -1)

    # Draw detections (dlib): blue label and outline on top of the OpenCV boxes
    for d in dets:
        cv2.putText(frame, "Dlib", (int(d.left() * resize_rate), int(d.top() * resize_rate)),
                    cv2.FONT_HERSHEY_PLAIN, 2.0, (255, 0, 0), thickness=2)
        cv2.rectangle(frame,
                      (int(d.left() * resize_rate), int(d.top() * resize_rate)),
                      (int(d.right() * resize_rate), int(d.bottom() * resize_rate)),
                      (255, 0, 0), 2)

    print("face detect(dlib) processing time:{0}[sec]".format(elapsed_time_dlib))
    print("face detect(opencv) processing time:{0}[sec]".format(elapsed_time_opencv))
    print("face detect(dlib) success count:%06d/%06d"
          % (face_detection_frame_count_dlib, total_frame_count))
    print("face detect(opencv) success count:%06d/%06d"
          % (face_detection_frame_count_opencv, total_frame_count))
    print()

    cv2.imshow('face detector vs', frame)
    c = cv2.waitKey(50) & 0xFF
    if c == 27:  # ESC
        break

video_input.release()
cv2.destroyAllWindows()
```
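Two coordinate steps in the script are easy to get wrong. OpenCV's `detectMultiScale` returns boxes as (x, y, w, h), which the code turns into corner form with `rects[:,2:] += rects[:,:2]`; and both detectors run on the shrunken frame, so every coordinate is multiplied by `resize_rate` before drawing on the original frame. A pure-Python sketch of both steps (helper names are hypothetical):

```python
from typing import Iterable, List, Tuple

Box = Tuple[int, int, int, int]


def xywh_to_corners(rects: Iterable[Box]) -> List[Box]:
    """(x, y, w, h) -> (x1, y1, x2, y2); same as rects[:, 2:] += rects[:, :2]."""
    return [(x, y, x + w, y + h) for x, y, w, h in rects]


def scale_to_original(box: Box, resize_rate: float) -> Box:
    """Map a corner-form box on the shrunken frame back to original-frame pixels."""
    x1, y1, x2, y2 = box
    return (int(x1 * resize_rate), int(y1 * resize_rate),
            int(x2 * resize_rate), int(y2 * resize_rate))


# A detection found on a frame shrunk by resize_rate = 2
corners = xywh_to_corners([(10, 20, 30, 40)])
print(corners)                           # [(10, 20, 40, 60)]
print(scale_to_original(corners[0], 2))  # (20, 40, 80, 120)
```

A dlib rectangle `d` is already in corner form, so `(d.left(), d.top(), d.right(), d.bottom())` only needs the second step.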

That's all.